Rendering Animated Meshes

| Now you want to render your animated character. The rendering method itself appears deceptively simple, so add the code from Listing 13.8 to your class now.

Listing 13.8 Rendering Your Animated Character

    protected override void OnPaint(System.Windows.Forms.PaintEventArgs e)
    {
        ProcessNextFrame();

        device.Clear(ClearFlags.Target | ClearFlags.ZBuffer,
            Color.CornflowerBlue, 1.0f, 0);

        device.BeginScene();

        // Draw our root frame
        DrawFrame((FrameDerived)rootFrame.FrameHierarchy);

        device.EndScene();
        device.Present();

        // Request another paint so the animation keeps running
        this.Invalidate();
    }

As you can see, the method is quite simple. Process the next frame, clear the device, draw the root frame, and you're done. A lot of things need to happen in these steps, though. First, look at the things that need to be done to process the next frame:

    private void ProcessNextFrame()
    {
        // Get the current elapsed time
        elapsedTime = DXUtil.Timer(DirectXTimer.GetElapsedTime);

        // Set the world matrix
        Matrix worldMatrix = Matrix.Translation(objectCenter);
        device.Transform.World = worldMatrix;

        // Advance the animation, if this mesh has one
        if (rootFrame.AnimationController != null)
            rootFrame.AnimationController.AdvanceTime(elapsedTime, null);

        UpdateFrameMatrices((FrameDerived)rootFrame.FrameHierarchy, worldMatrix);
    }

First, the current elapsed time is stored. Next, the world matrix for the root frame is created. Simply translate to the object's center, and update the device. Assuming this mesh has animation, you should advance the time, using the stored elapsed time. Finally, each of the combined transformation matrices needs to be updated. Look at the following method:

    private void UpdateFrameMatrices(FrameDerived frame, Matrix parentMatrix)
    {
        frame.CombinedTransformationMatrix = frame.TransformationMatrix *
            parentMatrix;

        // Siblings share this frame's parent
        if (frame.FrameSibling != null)
        {
            UpdateFrameMatrices((FrameDerived)frame.FrameSibling, parentMatrix);
        }

        // Children build on this frame's combined matrix
        if (frame.FrameFirstChild != null)
        {
            UpdateFrameMatrices((FrameDerived)frame.FrameFirstChild,
                frame.CombinedTransformationMatrix);
        }
    }

In this method, the current frame's combined transformation matrix is calculated by multiplying the frame's own transformation matrix by the matrix passed in for its parent. Each of the siblings uses the same parent matrix that the current frame does. Each of the children should use the current frame's combined transformation matrix to combine with its own. This forms a "chain" of matrices where the final child has its own transformation matrix, combined with each of its parents.

The next frame has been processed, so now you can actually draw it. The DrawFrame method is quite simple, and should look at least somewhat familiar:

    private void DrawFrame(FrameDerived frame)
    {
        // Draw every mesh container attached to this frame
        MeshContainerDerived mesh = (MeshContainerDerived)frame.MeshContainer;
        while (mesh != null)
        {
            DrawMeshContainer(mesh, frame);
            mesh = (MeshContainerDerived)mesh.NextContainer;
        }

        if (frame.FrameSibling != null)
        {
            DrawFrame((FrameDerived)frame.FrameSibling);
        }

        if (frame.FrameFirstChild != null)
        {
            DrawFrame((FrameDerived)frame.FrameFirstChild);
        }
    }

You simply walk the tree as before, only this time you attempt to draw every mesh container the frame has a reference to. The DrawMeshContainer method is where the bulk of the work will take place. Use Listing 13.9 to add this method to your application.

Listing 13.9 Rendering a Mesh Container

    private void DrawMeshContainer(MeshContainerDerived mesh, FrameDerived frame)
    {
        // Is there skin information?
        if (mesh.SkinInformation != null)
        {
            int attribIdPrev = -1;

            // Draw
            for (int iattrib = 0; iattrib < mesh.NumberAttributes; iattrib++)
            {
                // Find the index of the last bone slot in use
                int numBlend = 0;
                BoneCombination[] bones = mesh.GetBones();
                for (int i = 0; i < mesh.NumberInfluences; i++)
                {
                    if (bones[iattrib].BoneId[i] != -1)
                    {
                        numBlend = i;
                    }
                }

                if (device.DeviceCaps.MaxVertexBlendMatrices >= numBlend + 1)
                {
                    // first calculate the world matrices for the current set of
                    // blend weights and get the accurate count of the number of
                    // blends
                    Matrix[] offsetMatrices = mesh.GetOffsetMatrices();
                    FrameDerived[] frameMatrices = mesh.GetFrames();
                    for (int i = 0; i < mesh.NumberInfluences; i++)
                    {
                        int matrixIndex = bones[iattrib].BoneId[i];
                        if (matrixIndex != -1)
                        {
                            Matrix tempMatrix = offsetMatrices[matrixIndex] *
                                frameMatrices[matrixIndex].CombinedTransformationMatrix;

                            device.Transform.SetWorldMatrixByIndex(i, tempMatrix);
                        }
                    }

                    device.RenderState.VertexBlend = (VertexBlend)numBlend;

                    // lookup the material used for this subset of faces
                    if ((attribIdPrev != bones[iattrib].AttribId) ||
                        (attribIdPrev == -1))
                    {
                        device.Material = mesh.GetMaterials()[
                            bones[iattrib].AttribId].Material3D;

                        device.SetTexture(0, mesh.GetTextures()[
                            bones[iattrib].AttribId]);

                        attribIdPrev = bones[iattrib].AttribId;
                    }

                    mesh.MeshData.Mesh.DrawSubset(iattrib);
                }
            }
        }
        else // standard mesh, just draw it after setting material properties
        {
            device.Transform.World = frame.CombinedTransformationMatrix;

            ExtendedMaterial[] mtrl = mesh.GetMaterials();
            for (int iMaterial = 0; iMaterial < mtrl.Length; iMaterial++)
            {
                device.Material = mtrl[iMaterial].Material3D;
                device.SetTexture(0, mesh.GetTextures()[iMaterial]);
                mesh.MeshData.Mesh.DrawSubset(iMaterial);
            }
        }
    }

This method looks at least somewhat intimidating. Once it's broken down, though, you'll see it really isn't that complicated. First, the skin information member is checked. If this mesh container has no skeletal information, the mesh will be rendered exactly as meshes have been rendered in the past. If there is skeletal information, however, the rendering path is much different.
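The "chain" of matrices that UpdateFrameMatrices builds can be easier to follow with concrete numbers. The following is a minimal sketch and not part of the chapter's sample. It assumes System.Numerics.Matrix4x4 as a stand-in for the Managed DirectX Matrix type (both use the row-vector convention, so the child-times-parent ordering is the same), and the names SketchFrame, FrameSketch, and BuildArm are hypothetical, introduced only for illustration.

```csharp
using System.Numerics;

// Hypothetical stand-in for the chapter's FrameDerived class.
public sealed class SketchFrame
{
    public Matrix4x4 Local = Matrix4x4.Identity;    // role of TransformationMatrix
    public Matrix4x4 Combined = Matrix4x4.Identity; // role of CombinedTransformationMatrix
    public SketchFrame Sibling;
    public SketchFrame FirstChild;
}

public static class FrameSketch
{
    // Same traversal as UpdateFrameMatrices: siblings reuse the parent
    // matrix, children receive this frame's combined matrix.
    public static void Update(SketchFrame frame, Matrix4x4 parent)
    {
        frame.Combined = frame.Local * parent;

        if (frame.Sibling != null)
            Update(frame.Sibling, parent);

        if (frame.FirstChild != null)
            Update(frame.FirstChild, frame.Combined);
    }

    // Builds a tiny made-up hierarchy:
    //   root (+1 on x)
    //     forearm (+2 on y) -> hand (+3 on y)
    //     leg     (-1 on y)    (sibling of forearm)
    public static SketchFrame BuildArm()
    {
        var hand = new SketchFrame { Local = Matrix4x4.CreateTranslation(0, 3, 0) };
        var leg = new SketchFrame { Local = Matrix4x4.CreateTranslation(0, -1, 0) };
        var forearm = new SketchFrame
        {
            Local = Matrix4x4.CreateTranslation(0, 2, 0),
            FirstChild = hand,
            Sibling = leg
        };
        return new SketchFrame
        {
            Local = Matrix4x4.CreateTranslation(1, 0, 0),
            FirstChild = forearm
        };
    }
}
```

After calling FrameSketch.Update(root, Matrix4x4.Identity) on the hierarchy from BuildArm, the hand's combined translation is (1, 5, 0), because it accumulates its own offset plus those of the forearm and the root. The leg ends up at (1, -1, 0): as a sibling of the forearm it picks up only the root's matrix, not the forearm's.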

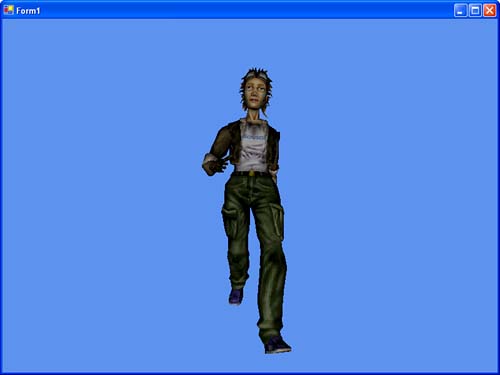

For every attribute entry (set of materials, textures, and so on) in this mesh, a number of operations will need to be performed. First, you must scan through the bone combination table and determine the number of blend weights the mesh will use. The file being used in the example on the included CD uses a maximum of four blend weights, which is what the device creation tests against; however, this code still ensures that the device has the capabilities to blend this many matrices, in case the mesh file has been changed.

Once you've determined that your device can render your mesh with these blend weights, you will need to set the world transforms. For each item you find in the BoneId member of your bone combination table, you combine the offset matrix with the frame's combined transformation matrix and set the currently indexed world matrix transform to the resulting matrix. This allows Direct3D to render each blended vertex with the appropriate world transforms.

Once that's been completed, you set the vertex blend render state to the number of blends this mesh expects. Finally, you set the material and texture of this subset and draw it.

With that, you are ready to run the application. You should expect to see a model walking toward you. See Figure 13.1.

Figure 13.1. An animated mesh.
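The blend-count scan and the per-bone world matrices described above can be tried in isolation, without a device. The following is a minimal sketch under stated assumptions rather than the sample's actual code: it uses System.Numerics.Matrix4x4 in place of the Managed DirectX Matrix type, plain arrays in place of the bone combination table, and the hypothetical names BlendSketch, LastUsedInfluence, CanBlend, and BuildWorldMatrices. Leaving unused slots at identity is also an assumption of the sketch; Listing 13.9 simply skips them.

```csharp
using System.Numerics;

public static class BlendSketch
{
    // Mirrors the scan in Listing 13.9: returns the index of the last
    // bone slot in use (-1 marks an unused slot), or 0 if none is used.
    public static int LastUsedInfluence(int[] boneIds)
    {
        int numBlend = 0;
        for (int i = 0; i < boneIds.Length; i++)
        {
            if (boneIds[i] != -1)
                numBlend = i;
        }
        return numBlend;
    }

    // The device needs one matrix per slot up to and including the last
    // used one, which is why the listing compares against numBlend + 1.
    public static bool CanBlend(int[] boneIds, int maxVertexBlendMatrices)
    {
        return maxVertexBlendMatrices >= LastUsedInfluence(boneIds) + 1;
    }

    // For each used slot, combine the bone's offset matrix with the
    // combined transformation matrix of the frame that drives that bone.
    public static Matrix4x4[] BuildWorldMatrices(
        int[] boneIds, Matrix4x4[] offsets, Matrix4x4[] combined)
    {
        var world = new Matrix4x4[boneIds.Length];
        for (int i = 0; i < boneIds.Length; i++)
        {
            int bone = boneIds[i];
            // Assumption of this sketch: unused slots stay at identity.
            world[i] = (bone != -1)
                ? offsets[bone] * combined[bone]
                : Matrix4x4.Identity;
        }
        return world;
    }
}
```

For a made-up table entry with bone ids { 2, 0, -1, -1 }, LastUsedInfluence returns 1, so the subset needs two world matrices, and CanBlend is true for a device that reports two or more blend matrices. The value 1 is also what the listing casts to VertexBlend, since the render state counts weights, which is one less than the number of matrices being blended.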

|
