Lesson 1: Overview of SQL Server Data Services

You can import and export data from SQL Server with several tools and Transact-SQL statements. Additionally, with the programming models and application programming interfaces (APIs) that are available with SQL Server, such as OLE DB, Microsoft ActiveX Data Objects (ADO), and the Data Transformation Services (DTS) object model, you can write your own programs to import and export data. This lesson provides an overview of the data transfer process and describes the various tools you can use to transfer data in SQL Server 7.

After this lesson, you will be able to:

- Describe the rationale for, and the process of, transferring and transforming data

- Describe the data access interfaces provided by Microsoft SQL Server version 7

- Describe the tools for transferring data in Microsoft SQL Server version 7

Estimated lesson time: 45 minutes

Transferring and Transforming Data

As you have learned in the previous chapters, online transaction processing (OLTP) databases are very different from data warehouse databases. For this reason, it is necessary to change much of the data to fit the structure of the data warehouse database when you transfer the data from the operational systems. This process is called transforming data. To successfully implement data warehousing, you must understand how to manage data and transfer it between applications and environments. Transforming data can be as simple as mapping transformation data types or as complex as programming logic to handle data transformations. Data warehousing and other environments require some degree of data transfer and transformation for one or more of the following reasons:

- To move data to another server or location

- To make a copy of data

- To archive data

- To migrate data

The process of transferring data from one environment to another typically involves

- Identifying the data source

- Specifying the data destination

- Manipulating or transforming the data between the source and destination (optional)

Simple importing and exporting of data is the most basic form of data transfer. Even this simple process can transform data if, for example, you specify a different data type for a column or save a file in another product version or format.

In data warehousing scenarios, you frequently need to transfer data between heterogeneous environments. For example, you might transfer sales information from an Oracle database to a SQL Server database or transfer data from an OLTP system to a data warehouse.

During data transformation, missing values can be added and column values summarized, decoded, decomposed, converted, and translated to a common measure or format. The captured data typically is integrated, made consistent, validated, and restructured before it is stored at the destination.

Transforming data typically involves changing the format of data, restructuring and mapping data, making data consistent, and validating data.

Change the Format of Data

When transforming data, you can change its format. Suppose, for example, that a value of 1 or 0 is stored in the Active_Customer column in your database, but the data that you want to transfer into your database represents the value as the text "true" or "false." You can convert the "true" and "false" values to 1 and 0 values when you transfer the data into your database. Numeric and date formats are frequently changed.

Restructure and Map Data

Restructuring and mapping data often involves combining data from multiple data sources, tables, and columns into a single data set at the destination. For example, you can preprocess the data (this is known as data aggregation or summarization) and store the preprocessed data at your destination. Another example is taking data from an invoice table and an invoice line-item table and combining the data to create a single record in a sales fact table in the data warehouse.

A simple example from the Northwind and Northwind_Mart databases would be taking the values from the FirstName and LastName columns of the source Employee table, and concatenating them before storing the result in a single EmployeeName column in the Employee_Dim dimension table.

Make Data Consistent

When you import data from another source, you should make sure that the new data is consistent with the existing data. This is sometimes called data scrubbing. Data can be inconsistent in several ways:

- The data is accurate, but the representation is not consistent with how you want to store it at the destination. For example, suppose that a credit rating is represented by the values 1, 2, and 3. Making the data consistent may require translating these values to the character string values of Good, Average, and Poor.

- The data representation is correct, but it is inconsistently represented. For example, a company name may be stored in several ways, such as ABC Corp., ABC, or ABC Corporation. In this instance, you can make the data consistent by requiring that the destination always store the company name as ABC Corporation.

You generally can make your data consistent by translating codes or values to readable strings or by converting mixed values to single values.

Validate Data

When you validate data, you verify the accuracy and correctness of the data that you import. For example, you can require that data meet a specific condition before it can be included with your destination data. Alternatively, you can verify that a customer ID already exists at the destination before you transfer additional information for the customer into the destination data.

If you discover any invalid data, try to determine where the fault originated and correct the processes that are contributing to the error. Save invalid data to a log for later examination to determine why it is incorrect.

Loading the Data Warehouse

The preceding section discussed general principles of data transfer and transformation. For data warehousing, you must consider four main factors when moving data into or out of a data warehouse. These factors are data validation, data migration, data scrubbing, and data transformation.

Data Validation

Having valid data in the data warehouse is critical to providing a useful analysis tool. Invalid data should not be transferred into the data warehouse.

Validate Data Before Bringing It into the Data Warehouse

Do not load data into the warehouse until it has been validated. For example, if one country records measurements in meters and centimeters while another uses feet and inches, one country s data must be converted to an agreed-upon standard before bringing it into the data warehouse.

Data Validation Should Occur at the Source

If you correct data in the warehouse only, the base data is still incorrect. Therefore, you should make corrections at the source so that both the source and warehouse data are correct.

DTS Can Perform Data Validation During the Move Process

DTS can use Microsoft ActiveX scripts to check for invalid data, but such a check should flag invalid data for correction at the source rather than just fixing the data in the data warehouse.

Data Migration

Once you are certain that your source data is valid, you can move it. Use the following guidelines when moving data:

- Move the data to an intermediate database first. This is where you will scrub and transform the data before moving it into the data warehouse.

- After you scrub and transform the data, copy it into the data warehouse. If you are not going to scrub and transform the data, you can migrate it directly into the data warehouse.

- Synchronize interrelated sources before migration to ensure the integrity of the data. If you migrate your data while the data sources are not synchronized, you may invalidate the data in the data warehouse.

- Migrate during low system utilization periods. The migration process can be expensive in both processor and disk resources.

Data Scrubbing

The scrubbing process refers to the consistency of your data.

Data Must Be Consistent in the Warehouse

You have already gone through the validation process and know that your data is valid. Now you need to ensure that the data is consistent. For example, in a State field in a database, both NY and New York are valid values, but they are not consistent. Scrubbing the data ensures that it is consistent.

DTS Can Scrub the Data During Migration

The Import and Export wizards can modify data as you move it. DTS can also invoke ActiveX scripts that modify the data in the data source or in the intermediate database. When data is scrubbed in an intermediate database, both the source and the warehouse can continue to run unaffected by the scrubbing process. Data scrubbing can be both processor and disk intensive. Therefore, such scrubbing should occur in a separate database.

Data Transformation

After the data is scrubbed, you can move it into the warehouse. At this time, some data transformation may occur. Data transformation can involve a variety of data changes:

- Data may need to be modified as it is moved.

- Data may need to be broken into multiple fields.

- Multiple fields may need to be combined into one field in the warehouse.

- Values may need to be calculated from the base data.

- Data types in one data source must be mapped to appropriate data types in a target.

Example

Some companies use logic-based product numbers. The product number 12Z-1498731 may represent a part built in December (12) of 1998 (Z) on assembly line fourteen (14), with 98731 the unique product number. This technique enables a product specialist to identify the production date of any product and the assembly line from which it came. However, users of a data warehousing system might not know how to read a product number to decipher manufacturing information. Therefore, it may be necessary to break the data into separate fields as the data is moved into the warehouse. In this case, you would have separate fields for the month, year, assembly line, and unique product number. So, although the product number 12Z-1498731 is valid and does not need to be scrubbed, it still needs to be transformed into another format.

One of the great benefits of the data warehouse is the ability to have aggregated values available quickly. You may use the transformation process to create aggregate information from existing data so the warehouse can return queries more quickly.

OLE DB, ODBC, and OLE DB for OLAP

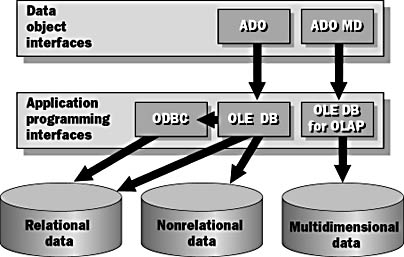

At the core of the Data Warehousing Framework is OLE DB, an object-based, system-level programming interface that helps you access information across a variety of data sources. OLE DB is the Microsoft interface for managing data across an organization. Open Database Connectivity (ODBC) provides access to data in relational databases, whereas OLE DB allows access to both relational and nonrelational data. Figure 6.1 shows these data access interfaces and object models.

Figure 6.1 Data access interfaces and object model

Benefits of OLE DB

OLE DB is an open standard that provides easy connectivity and interoperability between heterogeneous data sources. OLE DB s ability to access a variety of relational and nonrelational sources is particularly useful for data warehousing. Some examples of the data sources that you can access with OLE DB include

- Mainframe indexed sequential access method (ISAM)/virtual storage access method (VSAM) and hierarchical databases

- E-mail stores such as Microsoft Exchange

- Relational databases with native OLE DB providers

- Any source accessible through ODBC

Components of OLE DB

There are two types of OLE DB components: consumers and providers. Providers are further classified as data providers and service providers.

Data Consumers

A consumer is any application that uses (consumes) OLE DB interfaces. The interfaces are used to gain access to the data that a provider makes available. For example, a Microsoft Visual C++ application that uses OLE DB interfaces to connect to a database server is an OLE DB consumer.

Data Providers

An OLE DB provider allows consumers to access data in a uniform way through a known set of documented interfaces that are implemented by the provider. The data is owned by the provider and exposed in a tabular format. An OLE DB provider is similar to an ODBC driver that provides a uniform mechanism for accessing relational data. However, OLE DB providers not only provide a mechanism for relational data but also for nonrelational types of data. OLE DB providers implement Component Object Model (COM) interfaces that allow more flexibility than do ODBC drivers, which use a C-language API specification.

The following table describes some of the available OLE DB providers.

| Data Source or Data Destination | Description |

|---|---|

| Native OLE DB | Accesses applications such as SQL Server, Microsoft Excel, and Microsoft Access as well as workgroup and enterprise databases |

| ODBC | Accesses Oracle, Access, and DB2 by using the OLE DB provider for ODBC |

| ASCII text files | Access ASCII fixed-field-length text files and ASCII delimited text files by using the SQL Server DTS Flat File OLE DB provider |

Service Providers

A service provider does not own data but encapsulates a service that consumes and produces data. Query processors and transaction managers are examples of service providers.

Service providers do not duplicate work that can be performed at the source. For every data source that has an OLE DB provider interface, data processing is performed at the data source and then results are provided for further processing by the service provider. This allows for efficient heterogeneous joins and other services.

OLE DB for OLAP

In pursuing open, extensible systems, Microsoft worked with multiple vendors to develop a standard mechanism for access to multidimensional data. OLE DB for OLAP is the result of their efforts. OLE DB for OLAP is an extension of OLE DB that includes objects and interfaces specific to multidimensional data. OLE DB for OLAP makes it possible for vendors to create products that can work with any multidimensional data provider in the same way that ODBC made it possible for vendors to provide tools that work with any relational data source.

ADO and ADO MD

ADO is an easy-to-use wrapper for the functionality of OLE DB. It exposes objects using a familiar COM automation interface, making it easier for developers to create applications that access a broad range of data sources. The ADO object model is an OLE DB consumer that hides the complexity of the OLE DB provider interfaces for application programmers.

ADO MD is a counterpart to ADO for access to multidimensional data. It is an application-level programming interface to OLE DB for OLAP. OLE DB for OLAP and ADO MD will be discussed in more detail in Chapter 12, "MDX Statements and ADO MD Objects."

Tools for Transferring Data in SQL Server

SQL Server provides several tools and Transact-SQL statements for transferring data, which typically is in the form of tables or files. The method you choose for importing or exporting data depends on a variety of user requirements, including

- The format of the source and destination data

- The location of the source and destination data

- Whether the import or export is a one-time occurrence or an ongoing task

- Whether a command-prompt utility, Transact-SQL statement, or graphical interface is preferred (for ease of use)

- The type of import or export operation

The following table describes the tools that SQL Server provides for transferring data.

| Tool | Description | Use |

|---|---|---|

| DTS Import wizard and DTS Export wizard | Allow users to interactively create DTS packages that can be used to import, export, and transform data. | Transferring data between heterogeneous data sources or transferring all of the objects in a SQL Server 7 database to another SQL Server 7 database. |

| DTS Designer | Allows an experienced database administrator to import, export, and transform data and define complex data workflows. | Transferring homogeneous and heterogeneous data from multiple sources and for setting up complex workflows. |

| Dtsrun utility | A command-prompt utility that allows you to execute existing DTS packages from a command prompt. | Executing a DTS package as part of a batch or scheduled job. |

| Bulk copy program (bcp utility) | A command-prompt utility that imports and exports native SQL Server data files or ASCII text files. | Importing data into a SQL Server table from a file or exporting data from a SQL Server table to a file. |

| Transact-SQL statement | SELECT INTO and INSERT SELECT. | Selecting data to add to a table from an existing SQL Server table; SELECT INTO creates a new table, and INSERT SELECT requires an existing table. |

| BULK INSERT. | Copying a data file into a database table in a user-specified format the fastest method of loading large amounts of data into a table. | |

| BACKUP and RESTORE. | Copying a complete SQL Server database (all data and objects) to another SQL Server. | |

| sp_attach_db | Attaches a database to a server. | Moving or copying a complete SQL Server database (all data and objects) to another SQL Server by copying the database files. |

| Replication | Maintains duplicate table schema, data, or stored procedure definitions from a source database to a destination database, usually on separate servers. | Maintaining copies of data intermittently on multiple databases (does not guarantee that the data will be consistent at the same point in time) an ongoing process. |

Other Tools for Transferring Data

Using the tools provided by SQL Server affords the significant advantage of having much of the code already completed. There are other methods for transferring data. In certain cases for example, when SQL Server is replacing legacy SQL DBMS technology or when there is significant familiarity with these other methods these alternatives may still be employed. These methods usually involve using an independent software vendor tool or a custom program to transfer data to a text file. You can then use the SQL Server tools to load the data from the text file.

Independent Software Vendors Tools

Prior to the days of open standards like ODBC and OLE DB, these tools were often employed to move data from one environment to another.

Custom Written

In certain cases for example, when a legacy system does not have support for modern standard interfaces such as ODBC custom programming is the only mechanism for getting data from those legacy systems to the data warehouse.

Lesson Summary

This lesson provided an overview of the data transfer and transformation process, the data access architecture used by SQL Server, and the various tools you can use to load your data warehouse in SQL Server 7. You can import and export data from SQL Server with several tools and Transact-SQL statements. Additionally, with the programming models and application programming interfaces (APIs) that are available with SQL Server, such as OLE DB, ADO, and the Data Transformation Services (DTS) object model, you can write your own programs to import and export data.

The method you choose for transferring data depends on a variety of user requirements, including the format of the data, the location of the data, how often the transfer will be occurring, the type of import or export, and finally, ease of use.

EAN: 2147483647

Pages: 114