Scaling Project Server Deployments

Microsoft supports several scalability options when deploying Project Server. You can use both clustering and load balancing, and you can distribute the various components of a Project Server implementation across servers to achieve performance gains.

Failover clustering is supported for SQL Server. It adds availability, not scalability. In this arrangement, one server responds to user requests while the other monitors the primary server for failure and takes over the load seamlessly if the need arises. The second server is otherwise idle, so it’s common practice to use a less powerful box for failover where reduced performance is acceptable after a primary server failure. Clustering is available only in the Windows 2000 Advanced Server and Datacenter Server editions, not in the standard edition of Windows 2000 Server. Clustering can also be applied to the application servers running other Project Server components, but it isn’t compatible with SharePoint Team Services (STS). In other words, if Project Server and STS are installed on the same application server, you can’t cluster that server.
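The active/passive behavior described above can be pictured with a small sketch. This is only an illustration of the idea, not how Windows clustering is implemented; the `Node` and `FailoverPair` classes and the server names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Node:
    name: str  # hypothetical server name
    healthy: bool = True


class FailoverPair:
    """Active/passive pair: only one node serves requests at a time."""

    def __init__(self, primary: Node, standby: Node):
        self.primary = primary
        self.standby = standby

    def active_node(self) -> Node:
        # The standby serves requests only when the primary has failed.
        if self.primary.healthy:
            return self.primary
        if self.standby.healthy:
            return self.standby
        raise RuntimeError("no healthy node in the cluster")

    def handle(self, request: str) -> str:
        return f"{self.active_node().name} handled {request}"


pair = FailoverPair(Node("sql-primary"), Node("sql-standby"))
first = pair.handle("query-1")
pair.primary.healthy = False  # simulate a primary server failure
second = pair.handle("query-2")
```

Note that the standby never shares the load while the primary is healthy, which is why this arrangement adds availability but not capacity.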

Microsoft provides Network Load Balancing (NLB) as a Windows service that you can use to balance traffic across IIS Web servers. Using Microsoft’s NLB, up to 32 servers can constitute a server farm. Hardware solutions from Cisco, F5, and others are also available, and these may not have the same limitation. The 32-server limit is unlikely to be a problem unless all your boxes are Pentium II 450 MHz machines; with current technologies, your system isn’t likely to approach it. Because NLB also detects failures in the farm, I believe it’s a better availability solution for Web servers than clustering. All the servers in the farm share the load equally, which addresses scalability as well as availability. Of course, you can also load balance clusters!
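To make the contrast with failover clustering concrete, here is a minimal sketch of a farm that shares load across all live hosts and routes around a failed one. It assumes simple round-robin distribution as a stand-in; NLB uses its own distribution algorithm, and the `distribute` function and host names are hypothetical.

```python
from collections import Counter
from itertools import cycle

MAX_NLB_HOSTS = 32  # farm size limit cited in the text


def distribute(requests, hosts, failed=frozenset()):
    """Spread requests round-robin over the hosts that are still up."""
    if len(hosts) > MAX_NLB_HOSTS:
        raise ValueError("farm exceeds the 32-host limit")
    live = [h for h in hosts if h not in failed]
    if not live:
        raise RuntimeError("no live hosts in the farm")
    rotation = cycle(live)
    return Counter(next(rotation) for _ in range(requests))


farm = ["web1", "web2", "web3"]  # hypothetical host names
all_up = distribute(9, farm)
one_down = distribute(9, farm, failed={"web2"})
```

With all three hosts up, each one serves a third of the requests. When `web2` is marked as failed, the remaining two hosts absorb its share, so the farm loses capacity but stays available.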

You achieve scalability in Project Server largely by distributing various application components across multiple servers. The components of Project Server that you can distribute across various servers are as follows:

- Project Web Access: The IIS ASP application
- Project Server database: The database containing project data
- SharePoint Team Services: Provides the document and issues management services accessed through Project Web Access
- SQL Analysis Services: Provides OLAP services for Analyzer views and the modeler
- Microsoft Session Manager Service: Replaces ASP sessions for Project Server and tracks user sessions
- Views Notification service: Handles the updating of information between the Project tables in the database and the Web tables that drive Project Web Access views

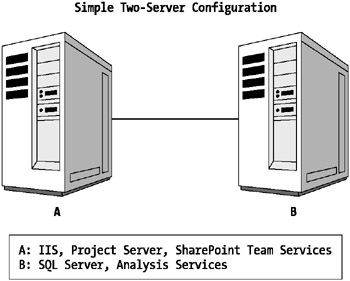

Scaling Project Server implementations by distributing these components is fairly straightforward. The first step toward a scaled implementation is to separate the Project Server application server from the database server. Figure 4-1 shows a simple two-server implementation.

Figure 4-1. Two-server implementation

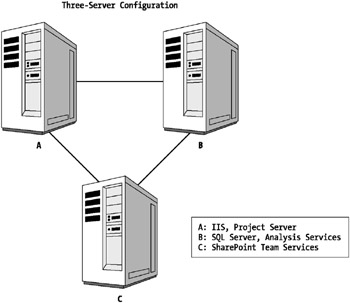

The next logical step is to give STS its own box. This is particularly helpful if your teams are making heavy use of the issues and document libraries features. Figure 4-2 shows a three-server implementation.

Figure 4-2. Three-server implementation

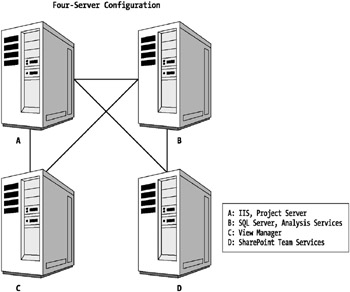

Adding a fourth server allows you to offload the Views Notification service, which is a notorious resource consumer. This service gets activated every time projects are saved to the server and during each publish operation. Additionally, it controls the build of the OLAP cube in Analysis Services. As you can see, it’s a prime candidate for its own server. A four-box implementation is shown in Figure 4-3.

Figure 4-3. Four-server implementation

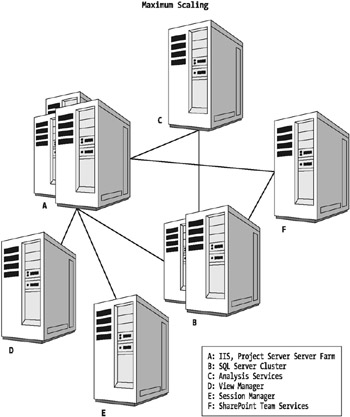
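The progression from one server to four can be summarized as a series of component moves. The sketch below models only the three moves the text describes; the server names are hypothetical, and components the text doesn’t mention at a given step are simply assumed to stay where they were, which may not match the figures exactly.

```python
COMPONENTS = [
    "Project Web Access",
    "Project Server database",
    "SharePoint Team Services",
    "SQL Analysis Services",
    "Session Manager Service",
    "Views Notification service",
]


def move(layout, component, target):
    """Return a new layout with `component` relocated to server `target`."""
    new = {server: set(parts) - {component} for server, parts in layout.items()}
    new.setdefault(target, set()).add(component)
    return new


# Hypothetical server names; start with everything on one box.
single = {"app": set(COMPONENTS)}
two = move(single, "Project Server database", "db")        # step 1
three = move(two, "SharePoint Team Services", "sts")       # step 2
four = move(three, "Views Notification service", "views")  # step 3

for server, parts in four.items():
    print(server, sorted(parts))
```

Each call returns a new layout rather than modifying the previous one, so the two-, three-, and four-server configurations remain available for comparison.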

Scaling out the application to the max will yield a configuration something like the one represented in Figure 4-4. Except for clustering every box in the diagram, there’s not much more you can do to physically scale the application environment.

Figure 4-4. Multiserver implementation

Before you go overboard and order a dozen servers, or faint from the mere thought of it, there are other measures you can take to scale and maximize your implementation’s performance. Two significant obstacles to scaling Project Server implementations are STS and the Project Server database itself. The data tables in the Project Server database can’t be distributed across multiple servers, so at some point your SQL Server capacity imposes a hard limit on scalability. Here the answer truly is hardware horsepower and connectivity. The number of processors, disk drive speed, RAM, and connectivity between the database server and the application server running Project Web Access are all focal points. Adding network interface cards (NICs) to your servers to widen the pipe is an inexpensive and easy-to-implement performance tactic.

Only one STS site may be connected to a single instance of Project Server with automatic subweb creation enabled. You can’t farm this service, but you can add servers if you’re willing to manage subweb creation manually. Microsoft indicates a maximum of 750 subwebs per server instance. Because each project gets its own subweb, this translates to a limit of 750 active projects per Project Server instance. The way around this is to create multiple instances of Project Server on the same hardware, with each additional site getting its own STS server.
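The arithmetic behind this limit is simple enough to sketch. Assuming one subweb per active project and the 750-subweb figure quoted above, the number of STS servers needed is the project count divided by 750, rounded up. The function name is hypothetical.

```python
MAX_SUBWEBS_PER_SERVER = 750  # figure the text attributes to Microsoft


def sts_servers_needed(active_projects: int) -> int:
    """One subweb per active project, so divide by the limit and round up."""
    if active_projects < 0:
        raise ValueError("active_projects must not be negative")
    # Ceiling division using integer arithmetic only.
    return -(-active_projects // MAX_SUBWEBS_PER_SERVER)


for count in (500, 750, 751, 2000):
    print(count, "projects ->", sts_servers_needed(count), "STS server(s)")
```

Note how 751 projects already require a second server: the limit is a hard step, not a gradual slowdown.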

This strategy is a no-brainer when you’re trying to serve multiple departments across an enterprise. It’s often better to serve multiple departments with multiple instances of Project Server rather than one all-encompassing instance. This decision, however, must take resource pool usage into consideration. When departments share many resources in a substantial way, using separate instances is probably not the approach to take. In organizations where departments operate mostly independently with few cross-departmental assignments, using separate instances is likely the best solution. Stratifying Project Server instances must follow resource pool usage.

Where substantial cross-departmental resource usage occurs, multiple instances become impractical because resource availability isn’t shared between instances. To manage resource availability across two instances, the shared resources must have their assignments mirrored, or in some way represented, in both. It’s not difficult to create this representation, either by blocking a resource’s calendar while the resource is on loan to the other department or, when loans are infrequent, by creating a plan to hold the cross-departmental assignments. However, if cross-departmental resource usage is a constant and regular practice, this quickly becomes undesirable double work.
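One rough way to gauge how much cross-departmental usage you have is to measure the share of assignments in which a resource works on another department’s project. The sketch below assumes you can export assignments as pairs of (resource’s home department, project’s owning department); that data shape, the function name, and the sample values are all hypothetical. The text gives no threshold, so the sketch only measures and leaves the judgment to you.

```python
def cross_department_share(assignments):
    """Fraction of assignments where the resource's home department
    differs from the department that owns the project."""
    if not assignments:
        return 0.0
    crossing = sum(1 for home, owner in assignments if home != owner)
    return crossing / len(assignments)


# Hypothetical sample: (resource home department, project owning department)
sample = [
    ("IT", "IT"),
    ("IT", "Finance"),
    ("Finance", "Finance"),
    ("IT", "IT"),
]
share = cross_department_share(sample)
print(f"{share:.0%} of assignments cross departments")
```

A low share suggests departments operate mostly independently, which favors separate instances; a high share points toward a single shared instance.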

If it’s practical for you to implement two or more separate instances, you also overcome the performance issues that crop up when the resource pool approaches 1,000 resources. The larger it gets, the longer it takes to open and save. This is the inevitable consequence of manipulating large datasets.
