Chapter 7. Risk-Based Security Testing[1]

[1] Parts of this chapter appeared in original form in IEEE Security & Privacy magazine co-authored with Bruce Potter [Potter and McGraw 2004].

A good threat is worth a thousand tests.
Boris Beizer

Security testing has recently moved beyond the realm of network port scanning to include probing software behavior as a critical aspect of system behavior (see the box "From Outside-In to Inside-Out" on page 189). Unfortunately, testing software security is a commonly misunderstood task. Security testing done properly goes much deeper than simple black box probing on the presentation layer (the sort performed by so-called application security tools, which I rant about in Chapter 1) and even beyond the functional testing of security apparatus. To gauge software security adequately, testers must take a risk-based approach, grounded in both the system's architectural reality and the attacker's mindset. By identifying risks in the system and creating tests driven by those risks, a software security tester can properly focus on areas of code where an attack is likely to succeed. This approach provides a higher level of software security assurance than is possible with classical black box testing.

Security testing has much in common with (the new approach to) penetration testing as covered in Chapter 6. The main difference between security testing and penetration testing is the level at which testing is applied and the timing of the testing itself. Penetration testing is by definition an activity that happens once software is complete and installed in its operational environment. Also, by its nature, penetration testing is focused outside-in and is somewhat cursory.
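To make "tests driven by risks" concrete, here is a minimal Python sketch that ranks risks so the highest-ranked ones get tests first. The `Risk` class, the 1 to 5 likelihood and impact scales, and the likelihood-times-impact score are illustrative assumptions, not a scheme prescribed in this chapter; any ranking produced by architectural risk analysis (Chapter 5) could take its place.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Risk:
    """One risk identified during architectural risk analysis (illustrative fields)."""
    component: str
    description: str
    likelihood: int  # assumed scale: 1 (rare) to 5 (almost certain)
    impact: int      # assumed scale: 1 (minor) to 5 (severe)

    @property
    def score(self) -> int:
        # Simple likelihood-times-impact score; a stand-in for whatever
        # ranking the risk analysis actually produces.
        return self.likelihood * self.impact


def prioritize(risks, budget):
    """Return the `budget` highest-scoring risks, highest first.

    Each returned risk should drive at least one security test.
    """
    return sorted(risks, key=lambda r: r.score, reverse=True)[:budget]


if __name__ == "__main__":
    risks = [
        Risk("login", "credential stuffing bypasses account lockout", 4, 5),
        Risk("report", "cached report shown to the wrong user", 2, 4),
        Risk("admin", "debug endpoint reachable from the LAN", 3, 5),
        Risk("errors", "verbose error page reveals framework version", 4, 1),
    ]
    for r in prioritize(risks, budget=3):
        print(f"{r.score:>2}  {r.component}: {r.description}")
```

With a budget of three tests, the login (20), admin (15), and report (8) risks are covered first, and the low-impact error-page item falls below the cut.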
By contrast, security testing can be applied before the software is complete, at the unit level, in a testing environment with stubs and pre-integration.[2] This distinction is similar to the slippery distinction between unit testing and system testing.

Security testing should start at the feature or component/unit level, prior to system integration. Risk analysis carried out during the design phase (see Chapter 5) should identify and rank risks and discuss intercomponent assumptions. At the component level, risks to the component's assets must be mitigated within the bounds of contextual assumptions. Tests should be structured to attempt both unauthorized misuse of and access to target assets, as well as violations of the assumptions the system writ large may be making relative to its components. If tests like these are not devised and executed, a security fault may well surface in the complete system.

Security unit testing carries the benefit of breaking system security down into a number of discrete parts. Theoretically, if each component is implemented safely and fulfills intercomponent design criteria, the greater system should be in reasonable shape (though this problem is much harder than it may seem at first blush [Anderson 2001]).[3] By identifying and leveraging security goals during unit testing, testers can significantly improve the security posture of the entire system.

[3] Ross Anderson refers to the idea of component-based distributed software and the composition problem as "programming the devil's computer."
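A security unit test of the kind described above might look like the following Python sketch. `RecordStore` is a hypothetical component whose asset is a set of per-user records, and its contextual assumptions (callers pass an authenticated user id; keys are short alphanumeric strings) are invented for illustration. The tests try to read and overwrite another user's record, then feed the component inputs that violate its assumptions, expecting it to refuse rather than trust its callers.

```python
class AccessDenied(Exception):
    """Raised when a caller is not entitled to a record."""


class RecordStore:
    """Hypothetical component guarding an asset: records owned by users.

    Assumptions recorded during risk analysis (illustrative): callers pass an
    authenticated user id, and keys are short alphanumeric strings. The
    component re-checks both instead of trusting upstream components.
    """

    MAX_KEY_LEN = 32

    def __init__(self):
        self._owners = {}  # key -> owning user
        self._values = {}  # key -> stored value

    def put(self, user, key, value):
        self._check(user, key)
        owner = self._owners.get(key)
        if owner is not None and owner != user:
            raise AccessDenied(key)
        self._owners[key] = user
        self._values[key] = value

    def get(self, user, key):
        self._check(user, key)
        # Missing and foreign records fail the same way, so callers cannot
        # probe which keys exist.
        if self._owners.get(key) != user:
            raise AccessDenied(key)
        return self._values[key]

    def _check(self, user, key):
        if not isinstance(user, str) or not user:
            raise AccessDenied("no authenticated user")
        if not (isinstance(key, str) and key.isalnum()
                and len(key) <= self.MAX_KEY_LEN):
            raise ValueError("malformed key")


def expect(exc_type, fn, *args):
    """Fail unless fn(*args) raises exc_type."""
    try:
        fn(*args)
    except exc_type:
        return
    raise AssertionError(f"{fn.__name__}{args!r} did not raise {exc_type.__name__}")


def test_unauthorized_access():
    store = RecordStore()
    store.put("alice", "diary", "secret")
    for attacker in ("bob", "", None):
        expect(AccessDenied, store.get, attacker, "diary")
        expect(AccessDenied, store.put, attacker, "diary", "overwritten")
    assert store.get("alice", "diary") == "secret"


def test_assumption_violations():
    store = RecordStore()
    for key in ("../alice/diary", "x" * 10_000, "", 42):
        expect(ValueError, store.get, "alice", key)


if __name__ == "__main__":
    test_unauthorized_access()
    test_assumption_violations()
    print("security unit tests passed")
```

The second test is the one ordinary functional testing tends to skip: it deliberately breaks the assumptions the rest of the system makes about this component's inputs.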

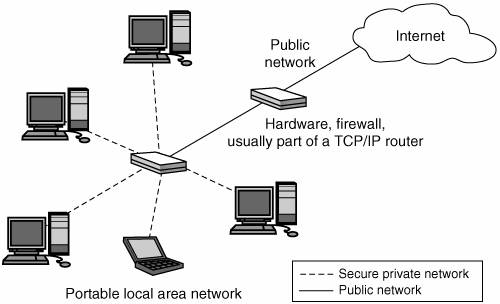

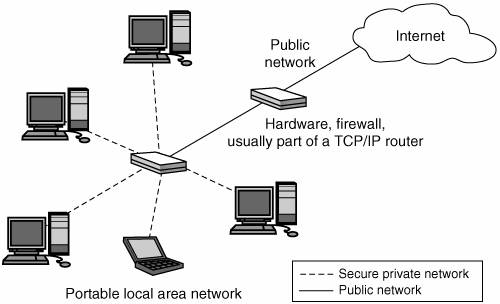

Security testing should continue at the system level and should be directed at properties of the integrated software system. This is precisely where penetration testing meets security testing, in fact. Assuming that unit testing has successfully achieved its goals, system-level testing should shift the focus toward identifying intercomponent failures and assessing security risk inherent at the design level. If, for example, a component assumes that only another trusted component has access to its assets, a test should be structured to attempt direct access to that component from elsewhere. A test that succeeds in this way undermines the assumptions of the system and would likely result in a direct, observable security compromise. Data flow diagrams, models, and intercomponent documentation created during the risk analysis stage can be a great help in identifying where component seams exist. Finally, abuse cases developed earlier in the lifecycle (see Chapter 8) should be used to enhance a test plan with adversarial tests based on plausible abuse scenarios. Security testing involves as much black hat thinking as white hat thinking.

From Outside-In to Inside-Out

Traditional approaches to computer and network security testing focus on network infrastructure, firewalls, and port scanning. This is especially true of network penetration testing (see Chapter 6) and its distant cousin, software penetration testing (sometimes called application penetration testing by vendors). Early approaches to application-level penetration testing were lacking because they attempted to test all possible programs with a fixed number of (lame) canned attacks. Better penetration testing approaches take architectural risks, code scanning results, and security requirements into account, but still focus on an outside-in perspective.
The notion behind old-school security testing is to protect vulnerable systems (and software) from attack by identifying and defending a perimeter. In this paradigm, testing focuses on an outside-in approach. One classic example is the use of port scanning with tools such as Nessus <http://www.nessus.org/> or nmap <http://www.insecure.org/nmap/> to probe network ports and see which services are listening. Figure 7-1 shows a classic outside-in paradigm focusing on firewall placement. In this figure, the LAN is separated from the Internet (or public network) by a firewall. The natural perimeter is the firewall itself, which is supposed to provide a choke point for network traffic and a position from which very basic packet-level enforcement is possible. Firewalls do things like "drop all packets to port 81" or "only allow traffic from specific IP addresses on specific ports through."

Figure 7-1. The outside-in approach. A firewall protects a LAN by blocking various network traffic on its way in; outside-in security testing (especially penetration testing) involves probing the LAN with a port scanner to see which ports are "open" and which services are listening on those ports. A major security risk associated with this approach is that the services traditionally still available through the firewall are implemented with insecure software.
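The outside-in probe described above boils down to trying to connect to each port and noting which ones answer. The following minimal Python sketch is a plain TCP connect scan built only on the standard `socket` module; `connect_scan` is a hypothetical helper name, and the sketch is far cruder than Nessus or nmap (no SYN scanning, no service fingerprinting).

```python
import socket


def connect_scan(host, ports, timeout=0.5):
    """Return the ports on `host` that accept a TCP connection, in the order given.

    A refused connection (closed port), a timeout (often a filtering firewall),
    or an unreachable host all count as "not open".
    """
    open_ports = []
    for port in ports:
        with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as s:
            s.settimeout(timeout)
            try:
                s.connect((host, port))
            except OSError:
                continue
            open_ports.append(port)
    return open_ports


if __name__ == "__main__":
    # Probe a few well-known ports on the local machine.
    print(connect_scan("127.0.0.1", [22, 25, 80, 443, 8080]))
```

A timeout is ambiguous: it may mean a firewall silently dropped the probe, which is exactly the perimeter behavior Figure 7-1 depicts. Note also what the scan cannot tell you: whether the software listening on an open port is secure.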

The problem is that this perimeter is only apparent at the network/packet level. At the level of software applications (especially geographically distributed applications), the perimeter has all but disappeared. That's because firewalls have been configured (or misconfigured, depending on your perspective) to allow advanced applications to tunnel right through them. A good example of this phenomenon is the SOAP protocol, which is designed (on purpose) to shuttle traffic through port 80 for many different applications. In some sense, SOAP is an anti-security device invented by software people so that they could avoid having to ask hard-nosed security people to open a firewall port for them. Once a tunnel like this is operational, the very idea of a firewall seems quaint.[*] In the brave new world of Service Oriented Architecture (SOA) for applications, we should not be surprised that the firewall is quickly becoming irrelevant. By contrast, I advocate an inside-out approach to security, whereby software inside the LAN (and exposed on LAN boundaries) is itself subjected to rigorous risk management and security testing. This is just plain critical for modern distributed applications.
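The tunneling problem shows up clearly in a toy model of a port-based packet filter. The Python sketch below is illustrative only: the `Packet` and `Rule` types, the rule set, and the addresses (taken from the reserved documentation ranges) are all assumptions. It applies first-match rules of the kind quoted earlier, such as "drop all packets to port 81", and shows that a SOAP request aimed at port 80 passes exactly as an ordinary web request does, because the filter never looks at the payload.

```python
from dataclasses import dataclass
from ipaddress import ip_address, ip_network
from typing import Optional


@dataclass(frozen=True)
class Packet:
    src: str        # source IP address
    dst_port: int   # destination TCP port
    payload: bytes  # application data; a port-based filter never reads this


@dataclass(frozen=True)
class Rule:
    action: str                     # "allow" or "drop"
    dst_port: Optional[int] = None  # None matches any port
    src_net: Optional[str] = None   # CIDR block; None matches any source

    def matches(self, pkt: Packet) -> bool:
        if self.dst_port is not None and pkt.dst_port != self.dst_port:
            return False
        if self.src_net is not None and ip_address(pkt.src) not in ip_network(self.src_net):
            return False
        return True


def filter_packet(rules, pkt, default="drop"):
    """Return the action of the first matching rule, else `default`."""
    for rule in rules:
        if rule.matches(pkt):
            return rule.action
    return default


RULES = [
    Rule("drop", dst_port=81),                           # "drop all packets to port 81"
    Rule("allow", dst_port=22, src_net="192.0.2.0/24"),  # SSH only from one network
    Rule("allow", dst_port=80),                          # the web port, open to all
]

WEB = Packet("203.0.113.7", 80, b"GET /index.html HTTP/1.1\r\nHost: www.example.com\r\n\r\n")
SOAP = Packet("203.0.113.7", 80,
              b"POST /AccountService HTTP/1.1\r\nHost: www.example.com\r\n"
              b"SOAPAction: \"transferFunds\"\r\n\r\n"
              b"<soap:Envelope>...<transferFunds>...</transferFunds>...</soap:Envelope>")
SSH_FROM_OUTSIDE = Packet("203.0.113.7", 22, b"SSH-2.0-client\r\n")

if __name__ == "__main__":
    for name, pkt in [("web", WEB), ("soap", SOAP), ("ssh", SSH_FROM_OUTSIDE)]:
        print(name, filter_packet(RULES, pkt))
```

Both `WEB` and `SOAP` come back `allow`, while SSH from outside the permitted network is dropped. Once port 80 is open, any application that speaks HTTP can ride through it, which is why the inside-out approach tests the software behind the port instead of the port itself.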

[*] If you're having trouble getting through the firewall, just aim your messages through port 80, use a little SOAP, and you're back in business.

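The system-level test described earlier in this chapter (attempting direct access to a component that assumes only a trusted peer can reach it) can be written as an abuse-case test. In the following Python sketch, `Frontend`, `NaiveLedger`, and `CheckedLedger` are hypothetical, and the HMAC token the frontend attaches to each request is just one assumed mitigation built on the standard `hmac` module, not a recommended design. The abuse test reports a compromise against the naive ledger and none against the checked one, while the legitimate path through the frontend still works.

```python
import hashlib
import hmac
import secrets


def sign(key, src, dst, amount):
    """MAC over one transfer request (illustrative message format)."""
    msg = f"{src}|{dst}|{amount}".encode()
    return hmac.new(key, msg, hashlib.sha256).hexdigest()


class NaiveLedger:
    """Assumes only the trusted Frontend ever calls it, so it checks nothing."""

    def __init__(self):
        self.balances = {"alice": 100, "mallory": 0}

    def transfer(self, src, dst, amount, token=None):
        # `token` is accepted for interface compatibility but ignored.
        self.balances[src] -= amount
        self.balances[dst] += amount


class CheckedLedger(NaiveLedger):
    """Verifies that the Frontend authorized this exact request."""

    def __init__(self, key):
        super().__init__()
        self._key = key

    def transfer(self, src, dst, amount, token=None):
        expected = sign(self._key, src, dst, amount)
        if token is None or not hmac.compare_digest(expected, token):
            raise PermissionError("request not authorized by frontend")
        super().transfer(src, dst, amount)


class Frontend:
    """The trusted component: authenticates users (elided) and signs requests."""

    def __init__(self, ledger, key):
        self.ledger = ledger
        self._key = key

    def transfer(self, user, dst, amount):
        # Authentication of `user` is assumed to have happened already.
        self.ledger.transfer(user, dst, amount, sign(self._key, user, dst, amount))


def abuse_direct_ledger_call(ledger):
    """Abuse case: Mallory reaches the ledger directly, bypassing the Frontend.

    Returns True if the attack moved money, i.e. the test found a flaw.
    """
    before = ledger.balances["alice"]
    try:
        ledger.transfer("alice", "mallory", 50)
    except PermissionError:
        return False
    return ledger.balances["alice"] < before


if __name__ == "__main__":
    key = secrets.token_bytes(32)
    print("naive ledger compromised:", abuse_direct_ledger_call(NaiveLedger()))
    checked = CheckedLedger(key)
    print("checked ledger compromised:", abuse_direct_ledger_call(checked))
    Frontend(checked, key).transfer("alice", "mallory", 10)
    print("balances after legitimate transfer:", checked.balances)
```

The sketch has no replay protection: a captured token can be resubmitted verbatim, so a real design would also need something like a nonce or request counter. That gap is itself a good candidate for the next abuse-case test.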
