Section 4.4. System V Shared Memory

Shared memory affords an extremely efficient means of sharing data among multiple processes on a Solaris system, since the data need not actually be moved from one process's address space to another. As the name implies, shared memory is exactly that: the sharing of the same physical memory (RAM) pages by multiple processes, such that each process has mappings to the same physical pages and can access the memory through pointer dereferencing in code. The use of shared memory in an application requires just a few interfaces bundled into the standard C language library, as listed in Table 4.3. Consult the manual pages for more detailed information. In the following sections, we examine what these interfaces do from a kernel implementation standpoint.

The shared memory kernel module is not loaded automatically by Solaris at boot time; none of the System V IPC facilities are. The kernel will dynamically load a required module when a call that requires the module is made. Thus, if the shmsys and ipc modules are not loaded, then the first time an application makes a shared memory system call (for example, shmget(2)), the kernel loads the module and executes the system call. The module remains loaded until it is explicitly unloaded by the modunload(1M) command or until the system reboots. The kernel maintains certain resources for the implementation of shared memory. Specifically, a shared memory identifier, shmid, is initialized and maintained by the operating system whenever a shmget(2) system call is executed successfully. The shmid identifies a shared segment that has two components: the actual shared RAM pages and a data structure that maintains information about the shared segment, the shmid_ds data structure, detailed in Table 4.4.
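
The relationship between the identifier and its shmid_ds structure can be seen from user code. The following is a minimal sketch, not kernel code: it creates a private segment, asks the kernel for a copy of the segment's shmid_ds through shmctl(2) with IPC_STAT, and removes the segment again. The function name stat_new_segment() is hypothetical and chosen only for this illustration.

```c
#include <assert.h>
#include <stddef.h>
#include <sys/ipc.h>
#include <sys/shm.h>
#include <sys/types.h>

/*
 * Create a private segment of `size` bytes, copy its shmid_ds into *out,
 * then remove the segment. Returns 0 on success, -1 on failure.
 * (Hypothetical helper, for illustration only.)
 */
int stat_new_segment(size_t size, struct shmid_ds *out)
{
    assert(out != NULL);

    /* A successful shmget() is what causes a shmid to be set up. */
    int shmid = shmget(IPC_PRIVATE, size, IPC_CREAT | 0600);
    if (shmid == -1)
        return -1;

    /* IPC_STAT copies the kernel's shmid_ds for this segment to the caller. */
    int rc = shmctl(shmid, IPC_STAT, out);

    /* Segments persist until explicitly removed, so clean up here. */
    shmctl(shmid, IPC_RMID, NULL);
    return rc;
}
```

After a successful call, fields such as out->shm_segsz (segment size), out->shm_nattch (number of current attaches), and out->shm_perm.mode (permission bits) hold the values the kernel recorded for the segment.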

Since Solaris 10, only two tunable parameters are associated with shared memory. They are described in Table 4.5.

They are defined quite simply.

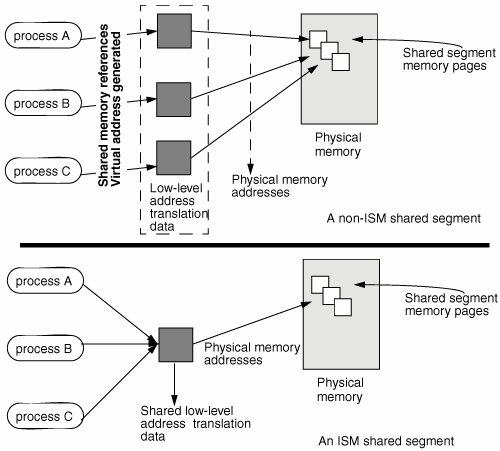

4.4.1. Shared Memory Kernel Implementation

In this section we look at the flow of kernel code that executes when the shared memory system calls are made. Applications first call shmget(2) to get a shared memory identifier. The kernel uses a key value passed in the call to locate (or create) a shared segment.

    [application code]
    shmget(key, size, flags [PRIVATE or CREATE])

    [kernel]
    shmget()
        ipcget() /* get an identifier - shmid_ds */
        if (new shared segment)
            check size against min and max tunables
            get anon_map and anon array structures
            initialize anon structures
            initialize shmid_ds structure
        else /* existing segment */
            check size against existing segment size
        return shmid (or error) back to application

    ipcget()
        if (key == IPC_PRIVATE)
            loop through shmid_ds structures, looking for a free one
            if (found)
                goto init
            else
                return ENOSPC /* tough cookies */
        else /* key is NOT IPC_PRIVATE */
            loop through shmid_ds structures, for each one
                if (structure is allocated)
                    if (the key matches)
                        if (CREATE and EXCLUSIVE flags set as passed args)
                            return EEXIST error /* segment with matching key exists */
                        if (permissions do NOT allow access)
                            return EACCES error
                        set status
                        set base address of shmid_ds
                        return 0 /* that's a good thing */
                set base address of shmid_ds /* if we reach this, we have an unallocated shmid_ds structure */
            if (do not CREATE)
                return ENOENT error /* we're through them all, and didn't match keys */
            if (CREATE and no space)
                return ENOSPC error
        do init
        return 0 /* goodness */

shmget(), when entered, calls ipcget() to fetch the shmid_ds data structure. Remember, all possible shmid_ds structures are allocated up-front when /kernel/sys/shmsys loads, so we need to either find an existing one that matches the key value or initialize an unallocated one if the flags indicate we should and a structure is available. The final init phase of ipcget() sets the mode bits, creator UID, and creator GID.

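
From the application side, the key lookup shows up as the error codes returned by shmget(2). The following minimal sketch exercises three of the outcomes using only the standard interfaces; it does not reproduce the kernel code path. The function name key_lookup_demo() and the idea of returning a step number are assumptions made for this illustration, and the caller is assumed to pass a key that no other segment on the system is using.

```c
#include <assert.h>
#include <errno.h>
#include <stddef.h>
#include <sys/ipc.h>
#include <sys/shm.h>

/*
 * Returns 0 if shmget() behaves as described for `key`, otherwise the
 * number of the step that did not match. (Hypothetical helper.)
 */
int key_lookup_demo(key_t key)
{
    int rc = 0;

    /* IPC_PRIVATE always creates a new segment, so it has no key lookup. */
    assert(key != IPC_PRIVATE);

    /* Step 1: key not present and IPC_CREAT not set: expect ENOENT. */
    if (shmget(key, 4096, 0600) != -1 || errno != ENOENT)
        return 1;

    /* Step 2: key not present, IPC_CREAT set: a new segment is initialized. */
    int shmid = shmget(key, 4096, IPC_CREAT | 0600);
    if (shmid == -1)
        return 2;

    /* Step 3: key present, IPC_CREAT | IPC_EXCL set: expect EEXIST. */
    if (shmget(key, 4096, IPC_CREAT | IPC_EXCL | 0600) != -1 || errno != EEXIST)
        rc = 3;
    /* Step 4: key present, no IPC_EXCL: the existing identifier is returned. */
    else if (shmget(key, 4096, 0600) != shmid)
        rc = 4;

    /* Remove the segment created in step 2. */
    shmctl(shmid, IPC_RMID, NULL);
    return rc;
}
```

A caller would pass any otherwise unused key, for example one produced by ftok(3C), and treat a nonzero return as the step at which the observed behavior differed.
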
When ipcget() returns to shmget(), the anon page mappings are initialized for a new segment or a simple size check is done. (The size argument is the argument passed in the shmget(2) call, not the size of the existing segment.)

Once a shmget(2) call returns success to an application, the code has a valid shared memory identifier. The program must call shmat(2) to create the mappings (attach) to the shared segment.

    [application]
    shmat(shmid, address, flags)

    [kernel]
    shmat()
        ipc_access() /* check permissions */
        /* ipc_access() will return EACCES if permission tests fail */
        /* and cause shmat() to bail out */
        if (kernel has ISM disabled)
            clear SHM_SHARE_MMU flag
        if (ISM and SHM_SHARE_MMU flag)
            calculate number pages
            find a range of pages in the address space and set address
            if (user-supplied address)
                check alignment and range
            map segment to address
            create shared mapping tables
        if (NOT ISM)
            if (no user-supplied address)
                find a range of pages in the address space and set address
            else
                check alignment and range
        return pointer to shared segment, or error

Much of the work done in shmat() requires calls into the lower-level address space support code and related memory management routines. The details on a process's address space mappings are covered in Chapter 9. Remember, attaching to a shared memory segment is essentially just another extension to a process's address space mappings.

At this point, applications have a pointer to the shared segment that they use in their code to read or write data. The shmdt(2) interface allows a process to unmap the shared pages from its address space (detach itself). Unmapping does not cause the system to remove the shared segment, even if all attached processes have detached themselves. A shared segment must be explicitly removed by the shmctl(2) call with the IPC_RMID flag set or from the command line with the ipcrm(1) command. Obviously, permissions must allow for the removal of the shared segment.

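
The attach, use, detach, and remove sequence described above can be sketched in a few lines. This is a minimal illustration rather than a recommended pattern: to stay within one process, it attaches the same segment twice, so that a store through one mapping is visible through the other, which is the same effect two separate processes would see. The function name attach_roundtrip() is hypothetical, and no ISM flag is passed to shmat().

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>
#include <sys/ipc.h>
#include <sys/shm.h>

/*
 * Attach one segment at two addresses, write through the first mapping,
 * and read the data back through the second. Returns 0 on success,
 * -1 on failure. (Hypothetical helper.)
 */
int attach_roundtrip(size_t size)
{
    int rc = -1;

    assert(size >= sizeof "hello");

    int shmid = shmget(IPC_PRIVATE, size, IPC_CREAT | 0600);
    if (shmid == -1)
        return -1;

    /* A NULL address lets the kernel find a range in the address space. */
    char *a = shmat(shmid, NULL, 0);
    char *b = shmat(shmid, NULL, 0);

    if (a != (char *)-1 && b != (char *)-1) {
        strcpy(a, "hello");                 /* store through mapping a */
        /* Different virtual addresses, same physical pages. */
        rc = (a != b && strcmp(b, "hello") == 0) ? 0 : -1;
    }

    /* Detach both mappings; the segment itself still exists afterward. */
    if (a != (char *)-1)
        shmdt(a);
    if (b != (char *)-1)
        shmdt(b);

    /* Only an explicit IPC_RMID removes the segment. */
    if (shmctl(shmid, IPC_RMID, NULL) == -1)
        rc = -1;
    return rc;
}
```

Calling attach_roundtrip(4096) returns 0 when both attaches succeed and the string written through the first pointer is read back through the second.
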
We should point out that the kernel makes no attempt at coordinating concurrent access to shared segments. The software developer must coordinate access to shared memory to prevent multiple processes attached to the same shared pages from writing to the same locations at the same time. Coordination can be done in several ways, the most common of which is the use of another IPC facility, semaphores, or of mutex locks.

The shmctl(2) interface can also be used to get information about the shared segment (it returns a populated shmid_ds structure), to set permissions, and to lock the segment in memory (processes attempting to lock shared pages must have sufficient process privileges; see Chapter 5).

You can use the ipcs(1) command to look at active IPC facilities in the system. When shared segments are created, the system maintains permission flags similar to the permission bits used by the file system. They determine who can read and write the shared segment, as specified by the user ID (UID) and group ID (GID) of the process attempting the operation. You can see extended information on the shared segment by using the -a flag with the ipcs(1) command. The information is fairly intuitive and is documented in the ipcs(1) manual page. We also listed in Table 4.4 the members of the shmid_ds structures that are displayed by ipcs(1) output and the corresponding column name. The permissions (mode) and key data for the shared structure are maintained in the ipc_perm data structure, which is embedded in (a member of) the shmid_ds structure and described in Table 4.2.

4.4.2. Intimate Shared Memory (ISM)

Many applications, and especially databases, use shared memory to cache frequently used data (the buffer cache) and to facilitate interprocess communication. Solaris provides an optimized shared memory capability known as Intimate Shared Memory (ISM), and all major databases take advantage of it.

ISM offers a number of benefits:

- The shared memory is automatically locked by the kernel when the segment is created. This not only ensures that the memory cannot be paged out but also allows the kernel to use a fast locking mechanism when doing I/O into or out of the shared memory segment, thereby saving significant CPU time.

- Kernel virtual-to-physical memory address translation structures are shared between processes that attach to the shared memory, saving kernel memory and CPU time.

- Large pages, supported by the UltraSPARC Memory Management Unit (MMU), are automatically allocated for ISM segments (as of Solaris 2.6). The Solaris page size is currently 8 Kbytes; large MMU pages can be up to 4 Mbytes in size, so large pages can reduce the number of memory pointers by a factor of 512. This reduction in complexity translates into noticeable performance improvements, especially on systems with large amounts of memory.

- Since the memory is locked, it is not necessary to provide swap space to back it, thereby saving disk space.

Intimate shared memory (ISM) is an optimization introduced first in Solaris 2.2. It allows for the sharing of the low-level kernel data and structures involved in the virtual-to-physical address translation for shared memory pages, as opposed to just sharing the actual physical memory pages. Typically, non-ISM systems maintain per-process mapping information for the shared memory pages. With many processes attaching to shared memory, this scheme creates a lot of redundant mapping information to the same physical pages that the kernel must maintain. Figure 4.2 illustrates the difference between ISM and non-ISM shared segments.

Figure 4.2. Comparison of ISM and Non-ISM Shared Segments

The actual mapping structures differ across processors. That is, the low-level address translation structures and functions are part of the hardware-specific kernel code, known as the Hardware Address Translation (HAT) layer.
The HAT layer is coded for a specific processor's Memory Management Unit (MMU), which is an integral part of the processor design and is responsible for translating virtual addresses generated by running processes to the physical addresses used by the system hardware to fetch the memory data.

In addition to the translation data sharing, ISM provides another useful feature: When ISM is used, the shared pages are locked down in memory and will never be paged out. This feature was added for the RDBMS vendors. As we said earlier, shared memory is used extensively by commercial RDBMS systems to cache data (among other things, such as stored procedures). Non-ISM implementations treat shared memory just like any other chunk of anonymous memory: it gets backing store allocated from the swap device, and the pages themselves are fair game to be paged out if memory contention becomes an issue. The effects of paging out shared memory pages that are part of a database cache would be disastrous from a performance standpoint; RAM shortages are never good for performance. Since a vast majority of customers that purchase Sun servers use them for database applications and since database applications make extensive use of shared memory, addressing this issue with ISM was an easy decision.

Solaris implements memory page locking by setting some bits in the memory page's page structure. Every page of memory has a corresponding page structure that contains information about the memory page. Page sizes vary across different hardware platforms. UltraSPARC-based systems implement an 8-Kbyte memory page size, which means that 8 Kbytes is the smallest unit of memory that can be allocated and mapped to a process's address space. The page structure contains several fields, among which are two fields called p_cowcnt and p_lckcnt, that is, page copy-on-write count and page lock count.
Copy-on-write tells the system that this page can be shared as long as it's being read, but once a write to the page is executed, the system should make a copy of the page and map it to the process that is doing the write. Lock count maintains a count of how many times page locking was done for this page. Since many processes can share mappings to the same physical page, the page can be locked from several sources. The system maintains a count to ensure that processes that complete and exit do not result in the unlocking of a page that has mappings from other processes.

The system's pageout code, which runs if free memory gets low, checks the status of the page's p_cowcnt and p_lckcnt fields. If either of these fields is nonzero, the page is considered locked in memory and thus not marked as a candidate for freeing. Shared memory pages using the ISM facility do not use the copy-on-write lock (that would make for a nonshared page after a write). Pages locked through ISM use the p_lckcnt page structure field.

ISM locks pages in memory so that they'll never be paged out; swap is not allocated for ISM pages. The net effect is that allocation of shared segments by ISM requires sufficient available unlocked RAM for the allocation to succeed.

Using ISM requires setting a flag in the shmat(2) system call. Specifically, the SHM_SHARE_MMU flag must be set in the shmflg argument passed in the shmat(2) call to instruct the system to set up the shared segment as intimate shared memory. Otherwise, the system will create the shared segment as a non-ISM shared segment.

In Solaris 9 onward, we can use the pmap utility to check whether allocations are using ISM shared memory. In the example below, we start a program called maps, which creates several mappings, including creating and attaching two 8-Mbyte shared segments, using key values of 2 and 3.
Looking at the address space listing, we can see that at the address of the shared segment, 0A000000, we have a shared memory segment created with ISM.

    sol9$ pmap -x 15492
    15492:  ./maps
     Address  Kbytes     RSS    Anon  Locked Mode   Mapped File
    00010000       8       8       -       - r-x--  maps
    00020000       8       8       8       - rwx--  maps
    00022000   20344   16248   16248       - rwx--    [ heap ]
    03000000    1024    1024       -       - rw-s-  dev:0,2 ino:4628487
    04000000    1024    1024     512       - rw---  dev:0,2 ino:4628487
    05000000    1024    1024     512       - rw--R  dev:0,2 ino:4628487
    06000000    1024    1024    1024       - rw---    [ anon ]
    07000000     512     512     512       - rw--R    [ anon ]
    08000000    8192    8192       -    8192 rwxs-    [ dism shmid=0x5]
    09000000    8192    4096       -       - rwxs-    [ dism shmid=0x4]
    0A000000    8192    8192       -    8192 rwxsR    [ ism shmid=0x2 ]
    0B000000    8192    8192       -    8192 rwxsR    [ ism shmid=0x3 ]
    FF280000     680     672       -       - r-x--  libc.so.1
    FF33A000      32      32      32       - rwx--  libc.so.1
    FF390000       8       8       -       - r-x--  libc_psr.so.1
    FF3A0000       8       8       -       - r-x--  libdl.so.1
    FF3B0000       8       8       8       - rwx--    [ anon ]
    FF3C0000     152     152       -       - r-x--  ld.so.1
    FF3F6000       8       8       8       - rwx--  ld.so.1
    FFBFA000      24      24      24       - rwx--    [ stack ]
    -------- ------- ------- ------- -------
    total Kb   50464   42264   18888   16384

See Section 12.2.5 for more detail on the SPARC implementation of ISM.

4.4.3. Dynamic ISM Shared Memory

Dynamic Intimate Shared Memory (DISM) was introduced in the 1/01 release of Solaris 8 (Update 3) to supply applications with ISM shared memory that is dynamically resizable. The first major application to support DISM was Oracle9i. Oracle9i uses DISM for its newly introduced dynamic System Global Area (SGA) capability.

With regular ISM, it is not possible to change the size of an ISM segment once it has been created. To change the size of database buffer caches, databases must be shut down and restarted (or designed to use a variable number of shared memory segments, a more complicated alternative). This limitation has a negative impact on system availability.
For example, if memory is to be removed from a system because of a dynamic reconfiguration event, one or more database instances may first have to be shut down.

DISM was designed to overcome this limitation. A large DISM segment can be created when the database boots, and sections of it can be selectively locked or unlocked as memory requirements change. Instead of the kernel automatically locking DISM memory, though, locking and unlocking is done by the application (for example, Oracle), providing the flexibility to make adjustments dynamically.

DISM is like ISM except that it isn't automatically locked. The application, not the kernel, does the locking, by using mlock(). Kernel virtual-to-physical memory address translation structures are shared among processes that attach to the DISM segment, saving kernel memory and CPU time.

4.4.3.1. DISM Performance

Tests have shown that, as of this release, DISM and ISM performance are equivalent. This is an important development, since it means that the availability benefits of DISM can be realized without compromising performance in any way.

4.4.3.2. DISM Implementation

As with ISM, shmget(2) creates the segment. The shmget() size specified is the total size for the segment, that is, the maximum size. The size of the segment can be larger than physical memory. If the segment size is larger than physical memory, then enough disk swap should be available to cover the maximum possible DISM size.

The DISM segment is attached to a process through the shmat(2) interface. A new shmat(2) flag, SHM_DYNAMIC, tells shmat(2) to create Dynamic ISM. Physical memory is allocated on demand, as zero-fill-on-demand (ZFOD) pages, when pages within the DISM virtual area are accessed or locked.
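
The application-side locking that DISM relies on can be sketched roughly as follows. This is a minimal sketch under stated assumptions: it attaches an ordinary System V segment with shmat() flags of 0 rather than a DISM segment, so it shows only the pattern of locking and unlocking a sub-range with mlock() and munlock(), not DISM itself. The helper names page_span() and lock_subrange_demo() are hypothetical, and locking may fail if the process lacks the privilege or resource limit needed to lock memory.

```c
#include <assert.h>
#include <stddef.h>
#include <sys/ipc.h>
#include <sys/mman.h>
#include <sys/shm.h>
#include <unistd.h>

/*
 * Round the byte range [off, off + len) outward to page boundaries.
 * pagesz must be a power of two. (Hypothetical helper.)
 */
void page_span(size_t off, size_t len, size_t pagesz,
               size_t *start, size_t *span)
{
    assert(pagesz != 0 && (pagesz & (pagesz - 1)) == 0);

    size_t end = (off + len + pagesz - 1) & ~(pagesz - 1);
    *start = off & ~(pagesz - 1);
    *span = end - *start;
}

/*
 * Create a segment, lock one sub-range of it, unlock it again, and
 * remove the segment. Returns 0 on success, -1 on failure.
 * (Hypothetical helper; uses a plain attach, not a DISM attach.)
 */
int lock_subrange_demo(size_t seg_size, size_t off, size_t len)
{
    size_t start, span;
    int rc = -1;

    int shmid = shmget(IPC_PRIVATE, seg_size, IPC_CREAT | 0600);
    if (shmid == -1)
        return -1;

    char *base = shmat(shmid, NULL, 0);
    if (base != (char *)-1) {
        page_span(off, len, (size_t)sysconf(_SC_PAGESIZE), &start, &span);

        /* The application, not the kernel, decides what to lock. */
        if (start + span <= seg_size && mlock(base + start, span) == 0)
            rc = munlock(base + start, span);   /* release when no longer needed */

        shmdt(base);
    }

    shmctl(shmid, IPC_RMID, NULL);
    return rc;
}
```

The rounding step matters because locking operates on whole pages: a request to lock 8192 bytes starting at offset 100 with 8-Kbyte pages covers two pages, from offset 0 through 16383.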
