Section 1.9. Resource Management

The hardware resources of a system are typically divided into four major categories: processors, memory, disk I/O, and network I/O. Resource management refers to the facilities and infrastructure available in the operating system to manage these hardware resources. The primary purpose of any operating system kernel is to manage available resources for applications and to allow multiple users, applications, and processes to share those resources effectively. Solaris OS is no exception, and running a variety of workloads out of the box, without additional resource management utilities to explicitly allocate processors, memory, and so on, works very well much of the time. That said, there are some compelling reasons to implement and use resource allocation controls in today's IT environments.

Mixing multiple workloads within a single instance of Solaris OS can impose some risk when applications are not well behaved or when usage patterns are unpredictable. In the default shared environment, an application with a memory leak, or a software bug that results in CPU-bound threads, can consume an inordinate amount of resources at the expense of other applications, resulting in suboptimal performance. Setting effective resource management controls helps ensure that performance requirements and service levels are sustained.

1.9.1. Processor Controls and Domains

Resource management controls in Solaris OS have evolved over time, beginning with basic processor-binding capabilities (Solaris 2.4) and extending through Solaris Containers (Solaris 10). Processor controls are bundled utilities that bind processes and threads to specific processors on the system, partition a processor, and manage interrupts.

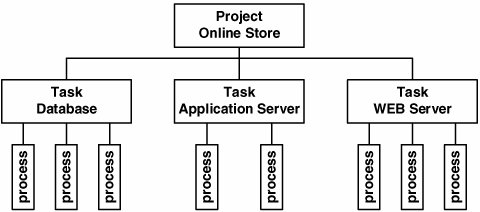

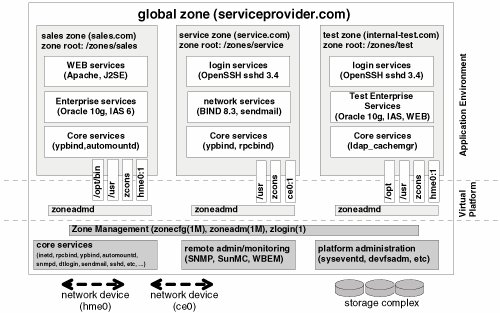

Processor sets and bindings can, of course, be used in conjunction with dynamic system domains: one or more processor sets can be configured from the processors within a specific domain, allowing multiple levels of resource allocation and control. Processors can also be taken offline entirely, meaning that the kernel scheduler will no longer use the processor for scheduling threads or handling interrupts. The psradm(1M) command manages a processor's operational state.

1.9.2. Solaris Resource Management

The naming convention for resource management changed after Solaris 8. For Solaris 8, an unbundled product called Solaris Resource Manager, or SRM, is available. In Solaris 9 and 10, resource management is integrated into the operating system. Generically speaking, resource management refers to the specific software components and utilities used to manage hardware resources.

Each release builds on the functions and features of the previous release. Whereas Solaris 8 requires the installation of SRM for a share-based thread scheduler (SHR), Solaris 9 integrates a share-based scheduler (FSS), along with a new framework for managing resource allocations and limits. Solaris 9 also adds resource pools, which are further enhanced in Solaris 10 with the addition of dynamic resource pools. Finally, Solaris 10 adds virtualized execution environments, or Zones. The following sections describe these features.

1.9.2.1. Resource Management Framework

The Fair Share Scheduling class (FSS) is integrated into Solaris 9 (that is, it is not an unbundled add-on), along with a new framework for configuration and management. In addition to FSS, Solaris 9 introduces two new abstractions for defining resource allocations and limits: projects and tasks. Where the SHR scheduler in Solaris 8 used lnodes and UIDs for allocation and control, Solaris 9 and 10 manage CPU shares through the projects database and administrative commands.
The projects framework provides a stateful namespace for binding users, processes, and applications to resource allocations and limits. The framework is hierarchically structured (see Figure 1.6): a project may have one or more tasks associated with it, and a task may have one or more processes associated with it.

Figure 1.6. Projects and Tasks

The projects database and administrative interface allow groups of processes to be defined as a workload and configured in the FSS scheduling class. Share allocation is done through attributes in the project definition file. In addition to allowing the allocation of CPU shares, the projects framework provides for setting resource limits at the project, task, and process level. For example, System V IPC resources for shared memory, semaphores, and message queues are defined at the project level. The maximum number of LWPs can be set at either the project or the task level, and traditional UNIX resource limits are defined in the projects database on a per-process basis.

The projects framework enables another feature new to Solaris 9 resource management: resource pools. Resource pools are a persistent configuration mechanism for processor sets. Recall that processor sets managed with psrset(1M) have only in-memory state, meaning that a reboot requires a reconfiguration of the processor sets and bindings. Resource pools address this by using the projects database to store processor set configurations and bindings. Thus, a set of CPUs can be configured as a resource pool, with a specific project bound to the pool. All the tasks, processes, and LWPs associated with the project will then be scheduled on the CPUs in that pool.

For physical memory control, the resource capping mechanism described previously was added in the Solaris 9 12/03 release. It uses the projects database to establish physical memory consumption limits at the project level.
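The share allocation described above can be illustrated with a small model. The following Python sketch is not part of Solaris; it assumes the colon-separated entry layout documented in project(4) (projname:projid:comment:user-list:group-list:attributes) and a `project.cpu-shares` attribute of the form `(privileged,N,none)`. The project names, IDs, users, and the pool name `web_pool` are hypothetical, and the share-to-fraction arithmetic is a simplification: it shows only the relative entitlement of projects that are all actively competing for CPU, not how the FSS scheduler computes priorities.

```python
import re
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Project:
    name: str
    projid: int
    comment: str
    users: List[str]
    groups: List[str]
    attributes: Dict[str, str]


def parse_project_line(line: str) -> Project:
    """Parse one entry laid out as
    projname:projid:comment:user-list:group-list:attributes."""
    name, projid, comment, users, groups, attrs = line.strip().split(":", 5)
    attributes: Dict[str, str] = {}
    # Attributes are separated by semicolons; each is key=value (value optional).
    for item in attrs.split(";"):
        if item:
            key, _, value = item.partition("=")
            attributes[key] = value
    return Project(
        name=name,
        projid=int(projid),
        comment=comment,
        users=[u for u in users.split(",") if u],
        groups=[g for g in groups.split(",") if g],
        attributes=attributes,
    )


def cpu_shares(project: Project, default: int = 1) -> int:
    """Extract N from a value such as (privileged,N,none)."""
    value = project.attributes.get("project.cpu-shares")
    if value is None:
        # Assumption: a project with no explicit setting counts as one share.
        return default
    match = re.match(r"\(\s*\w+\s*,\s*(\d+)\s*,", value)
    if match is None:
        raise ValueError(f"unrecognized cpu-shares value: {value!r}")
    return int(match.group(1))


def share_fractions(projects: List[Project]) -> Dict[str, float]:
    """Relative CPU entitlement when every listed project is busy."""
    total = sum(cpu_shares(p) for p in projects)
    return {p.name: cpu_shares(p) / total for p in projects}


# Hypothetical entries, for illustration only.
SAMPLE = [
    "database:100:Hypothetical DB workload:oracle::"
    "project.cpu-shares=(privileged,30,none)",
    "webserver:101:Hypothetical web tier:webservd::"
    "project.cpu-shares=(privileged,10,none);project.pool=web_pool",
]

projects = [parse_project_line(entry) for entry in SAMPLE]
print(share_fractions(projects))  # {'database': 0.75, 'webserver': 0.25}
```

Shares are relative rather than absolute: with 30 and 10 shares, the two hypothetical projects are entitled to three quarters and one quarter of the CPU only while both are competing for it. The second entry also shows how a project could be associated with a resource pool through an attribute in the same database.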
See the System Administration Guide: Resource Management and Network Services for specific information on configuring and managing resources with projects in Solaris 9.

1.9.2.2. Enhancements to Resource Management in Solaris 10

Adding to the features introduced in Solaris 9, Solaris 10 contributes two significant features to Solaris resource management: Dynamic Resource Pools (DRPs) and Zones. Recall that resource pools in Solaris 9 provide persistent processor sets, with the option of binding a scheduling class as an attribute for threads that execute in the pool. Dynamic resource pools include a facility for dynamically adjusting the resources (number of CPUs) assigned to a pool in response to system load conditions. In Solaris 10, dynamic resource pools adjust automatically according to utilization data and the performance goals established in the configuration. A new Solaris daemon, poold, monitors system load and decides whether resource allocation adjustments are required.

Solaris 10 Zones provide multiple, virtualized, isolated execution environments for running multiple workloads or applications within a single kernel instance. When a zone is created, all the processes executing within the zone are isolated from processes running in other zones on the system. Think of zones as software partitions; zone software in the kernel sets the boundaries and isolation of each zone. By default, a global zone, which has visibility into all zones (see Figure 1.7), is the control point for systemwide zone configuration and management.

Figure 1.7. Zones in Solaris

Each nonglobal zone configured in a Solaris 10 system has at least one virtual network interface with its own network identity (address, hostname, domain). The network interface for each zone is channeled through one of the physical network interfaces on the system. The network traffic for a nonglobal zone is not visible to the other nonglobal zones on the system.
Additionally, each nonglobal zone has its own root password and has visibility into only a subset of the system's file system hierarchy, as defined when the zone is configured.

At the center of the zones design was consolidation: the ability to run multiple applications, including several instances of the same type of service (Web server, database server, etc.), in a contained and secure environment with a simple management framework. Also, installing, configuring, and running applications in a zone must be no different from doing so on a stand-alone system. In other words, the zone must appear to the administrator as just another server running Solaris OS. No changes are required at the application level in order to install and run the software within a zone.

In Solaris 10, zones and resource pools have been integrated, such that a resource pool can be bound to a specific zone. The combination of zones and resource pools is a powerful foundation for consolidating multiple applications and workloads within a single Solaris 10 instance; the environment is secure, manageable, flexible, and configurable to meet performance requirements and service levels for each application.

See the System Administration Guide: Solaris Containers: Resource Management and Solaris Zones for information on configuring and using zones, resource limits, processor sets, and resource pools.

1.9.3. Internet Protocol Quality of Service

Added in Solaris 9 9/02, Internet Protocol Quality of Service (IPQoS) enables administrators to manage resources for network services. Using IPQoS controls, administrators can allocate and regulate available network bandwidth for different classes of services and users through the use of filters configured in accordance with the following:

With IPQoS, administrators can prioritize, control, and gather statistics for the different service levels configured with the filter keys listed above. For information on configuring and monitoring IPQoS, see the System Administration Guide: IP Services for Solaris 10 and the IPQoS Administration Guide for Solaris 9.

1.9.4. Resource Management and Observability

Many of the bundled tools and utilities that ship with Solaris OS have been updated to improve the observability of a system running with configured processor sets, resource pools, and zones. For example, CPU usage can be monitored with the prstat(1M) command on a per-processor-set, per-project, or per-zone basis with the appropriate command-line flags. Commonly used commands, such as ps(1), ipcs(1), pgrep(1), proc(1), sar(1), and others, now include the option to specify a zone ID on the command line to gather information about a specific zone. The mpstat(1M) command, when executed in a zone bound to a resource pool, displays information only about the processors in the configured pool. Memory consumption and rcapd daemon activity can be monitored with rcapstat(1). Resource pool statistics on size and load can be viewed with poolstat(1).

The bundled Solaris accounting subsystem has been updated to provide resource usage reporting on projects, tasks, and zones. Extended accounting, added in Solaris 9, includes a new accounting database and command set, along with a set of Perl interface modules for writing scripts that access the extended accounting files.

Many other commands have been made aware of processor sets, resource pools, or zones. The key point here is the tight integration of these features into Solaris OS.
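The idea behind per-project and per-zone reporting can be sketched as a simple roll-up of per-process figures by their project or zone label. The Python fragment below is an illustration only: the record type `ProcSample`, the function `rollup`, and every sample value are hypothetical, and the code is neither the output format nor the implementation of prstat(1M) or any other Solaris utility.

```python
from collections import defaultdict
from typing import Dict, Iterable, NamedTuple


class ProcSample(NamedTuple):
    """One hypothetical per-process measurement."""
    pid: int
    zone: str
    project: str
    cpu_pct: float


def rollup(samples: Iterable[ProcSample], key: str) -> Dict[str, float]:
    """Sum CPU percentages grouped by the named field ('zone' or 'project')."""
    totals: Dict[str, float] = defaultdict(float)
    for sample in samples:
        totals[getattr(sample, key)] += sample.cpu_pct
    return dict(totals)


# Invented sample data, for illustration only.
SAMPLES = [
    ProcSample(1201, "global", "system", 2.5),
    ProcSample(3310, "webzone", "webserver", 12.5),
    ProcSample(3311, "webzone", "webserver", 10.0),
    ProcSample(4520, "dbzone", "database", 40.0),
]

for key in ("zone", "project"):
    print(key, rollup(SAMPLES, key))
```

Grouping by a label carried on each process is what makes the same measurements reportable at several levels of the resource management hierarchy without collecting them more than once.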
