Section 14.6. The Vnode

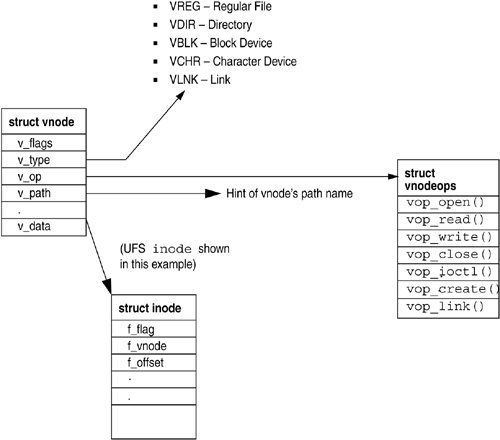

A vnode is a file-system-independent representation of a file in the Solaris kernel. A vnode is said to be object-like because it encapsulates a file's state and the methods that can be used to perform operations on that file. A vnode represents a file within a file system; it hides the implementation of the file system in which the file resides and exposes file-system-independent data and methods for that file to the rest of the kernel. A vnode object contains three important items (see Figure 14.7).

Figure 14.7. The vnode Object

14.6.1. Object Interface

The kernel uses wrapper functions to call vnode functions. In that way, it can perform vnode operations (for example, read(), write(), open(), close()) without knowing what the underlying file system containing the vnode is. For example, to read from a file without knowing that it resides on a UFS file system, the kernel would simply call the file-system-independent function for read(), VOP_READ(), which would call the vop_read() method of the vnode, which in turn calls the UFS function, ufs_read(). A sample of a vnode wrapper function from sys/vnode.h is shown below.

    #define VOP_READ(vp, uiop, iof, cr, ct) \
            fop_read(vp, uiop, iof, cr, ct)

    int
    fop_read(
            vnode_t *vp,
            uio_t *uiop,
            int ioflag,
            cred_t *cr,
            struct caller_context *ct)
    {
            return (*(vp)->v_op->vop_read)(vp, uiop, ioflag, cr, ct);
    }

See usr/src/uts/common/sys/vnode.h

The vnode structure in Solaris OS can be found in sys/vnode.h and is shown below. It defines the basic interface elements and provides other information contained in the vnode.

    typedef struct vnode {
            kmutex_t        v_lock;            /* protects vnode fields */
            uint_t          v_flag;            /* vnode flags (see below) */
            uint_t          v_count;           /* reference count */
            void            *v_data;           /* private data for fs */
            struct vfs      *v_vfsp;           /* ptr to containing VFS */
            struct stdata   *v_stream;         /* associated stream */
            enum vtype      v_type;            /* vnode type */
            dev_t           v_rdev;            /* device (VCHR, VBLK) */

            /* PRIVATE FIELDS BELOW - DO NOT USE */

            struct vfs      *v_vfsmountedhere; /* ptr to vfs mounted here */
            struct vnodeops *v_op;             /* vnode operations */
            struct page     *v_pages;          /* vnode pages list */
            pgcnt_t         v_npages;          /* # pages on this vnode */
            pgcnt_t         v_msnpages;        /* # pages charged to v_mset */
            struct page     *v_scanfront;      /* scanner front hand */
            struct page     *v_scanback;       /* scanner back hand */
            struct filock   *v_filocks;        /* ptr to filock list */
            struct shrlocklist *v_shrlocks;    /* ptr to shrlock list */
            krwlock_t       v_nbllock;         /* sync for NBMAND locks */
            kcondvar_t      v_cv;              /* synchronize locking */
            void            *v_locality;       /* hook for locality info */
            struct fem_head *v_femhead;        /* fs monitoring */
            char            *v_path;           /* cached path */
            uint_t          v_rdcnt;           /* open for read count (VREG only) */
            uint_t          v_wrcnt;           /* open for write count (VREG only) */
            u_longlong_t    v_mmap_read;       /* mmap read count */
            u_longlong_t    v_mmap_write;      /* mmap write count */
            void            *v_mpssdata;       /* info for large page mappings */
            hrtime_t        v_scantime;        /* last time this vnode was scanned */
            ushort_t        v_mset;            /* memory set ID */
            uint_t          v_msflags;         /* memory set flags */
            struct vnode    *v_msnext;         /* list of vnodes on an mset */
            struct vnode    *v_msprev;         /* list of vnodes on an mset */
            krwlock_t       v_mslock;          /* protects v_mset */
    } vnode_t;

See usr/src/uts/common/sys/vnode.h

14.6.2. vnode Types

Solaris OS has specific vnode types for files. The v_type field in the vnode structure indicates the type of vnode, as described in Table 14.2.
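
The wrapper-to-method dispatch described in Section 14.6.1 can be pictured with a small user-space sketch. It is only an illustration under stated assumptions: every `toy_` and `memfs_` name is hypothetical, the structures are cut down to a type, an operations vector, and private data, and nothing here is the kernel's actual definition.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* Toy stand-ins (assumptions); these are NOT the kernel definitions. */
typedef enum toy_vtype { TOY_VNON, TOY_VREG, TOY_VDIR } toy_vtype_t;

struct toy_vnode;

/* Per-file-system method table, playing the role of vnodeops. */
typedef struct toy_vnodeops {
    int (*vop_read)(struct toy_vnode *vp, char *buf, size_t len);
} toy_vnodeops_t;

/* File-system-independent object: type, ops vector, private data. */
typedef struct toy_vnode {
    toy_vtype_t          v_type;
    const toy_vnodeops_t *v_op;
    void                 *v_data;
} toy_vnode_t;

/* Wrapper in the style of fop_read(): callers never name the file system. */
int
toy_fop_read(toy_vnode_t *vp, char *buf, size_t len)
{
    assert(vp != NULL && vp->v_op != NULL && vp->v_op->vop_read != NULL);
    return ((*vp->v_op->vop_read)(vp, buf, len));
}

#define TOY_VOP_READ(vp, buf, len) toy_fop_read(vp, buf, len)

/* Hypothetical "memfs" read method: copies bytes out of the private data. */
static int
memfs_read(toy_vnode_t *vp, char *buf, size_t len)
{
    const char *src = vp->v_data;
    size_t n = strlen(src);

    if (n > len)
        n = len;
    memcpy(buf, src, n);
    return ((int)n);
}

static const toy_vnodeops_t memfs_ops = { memfs_read };

/* Builds a regular-file vnode whose private data is a C string. */
toy_vnode_t
memfs_make_vnode(char *contents)
{
    toy_vnode_t vn = { TOY_VREG, &memfs_ops, contents };
    return (vn);
}
```

A caller would build a vnode with `memfs_make_vnode()` and then read through `TOY_VOP_READ()`; the caller sees only the wrapper, and the choice of `memfs_read()` is made through the `v_op` pointer stored in the object.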

14.6.3. vnode Method Registration

The vnode interface provides the set of file system object methods, some of which we saw in Figure 14.1. The file systems implement these methods to perform all file-system-specific file operations. Table 14.3 shows the vnode interface methods in Solaris OS.
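
The registration scheme covered in this section, in which a file system hands over a table of name/value pairs that is turned into an operations vector, can be sketched in user space as follows. This is a toy under stated assumptions, not the vn_make_ops() implementation: the `toy_` and `demo_` names, the three-slot vector, the plain string operation names, and the choice to default unlisted operations to a failing stub are all hypothetical.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

typedef int (*toy_func_p)(void);

/* Name/value pair, modeled loosely on fs_operation_def_t. */
typedef struct toy_operation_def {
    const char *name;   /* name of operation (NULL at end) */
    toy_func_p func;    /* function implementing operation */
} toy_operation_def_t;

/* The finished operations vector (only three slots in this toy). */
typedef struct toy_ops {
    toy_func_p op_open;
    toy_func_p op_close;
    toy_func_p op_read;
} toy_ops_t;

/* Default for any operation the template does not mention (assumption). */
int
toy_nosys(void)
{
    return (-1);
}

/*
 * Fills *ops from a NULL-terminated template. Returns 0 on success, or
 * -1 if the template names an operation this toy does not know; in that
 * case *ops is left partially filled.
 */
int
toy_make_ops(const toy_operation_def_t *templ, toy_ops_t *ops)
{
    assert(templ != NULL && ops != NULL);

    /* Start every slot at the default, then override by name. */
    ops->op_open = toy_nosys;
    ops->op_close = toy_nosys;
    ops->op_read = toy_nosys;

    for (; templ->name != NULL; templ++) {
        if (strcmp(templ->name, "open") == 0)
            ops->op_open = templ->func;
        else if (strcmp(templ->name, "close") == 0)
            ops->op_close = templ->func;
        else if (strcmp(templ->name, "read") == 0)
            ops->op_read = templ->func;
        else
            return (-1);    /* unknown operation name */
    }
    return (0);
}

/* Hypothetical file system methods used to exercise the builder. */
int demo_open(void) { return (1); }
int demo_read(void) { return (2); }
```

Keying the table by name rather than by position means a file system lists only the operations it implements, in any order, and the builder decides what the remaining slots contain.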

File systems register their vnode and vfs operations by providing an operation definition table that specifies operations using name/value pairs. The definition is typically provided by a predefined template of type fs_operation_def_t, which is parsed by vn_make_ops(), as shown below. The definition is often set up in the file system initialization function.

    /*
     * File systems use arrays of fs_operation_def structures to form
     * name/value pairs of operations. These arrays get passed to:
     *
     *      - vn_make_ops() to create vnodeops
     *      - vfs_makefsops()/vfs_setfsops() to create vfsops.
     */
    typedef struct fs_operation_def {
            char *name;                 /* name of operation (NULL at end) */
            fs_generic_func_p func;     /* function implementing operation */
    } fs_operation_def_t;

    int vn_make_ops(
            const char *name,                   /* Name of file system */
            const fs_operation_def_t *templ,    /* Operation specification */
            vnodeops_t **actual);               /* Return the vnodeops */

Creates and builds the private vnodeops table.

    void vn_freevnodeops(vnodeops_t *vnops);

Frees a vnodeops structure created by vn_make_ops().

    void vn_setops(vnode_t *vp, vnodeops_t *vnodeops);

Sets the operations vector for this vnode.

    vnodeops_t *vn_getops(vnode_t *vp);

Retrieves the operations vector for this vnode.

    int vn_matchops(vnode_t *vp, vnodeops_t *vnodeops);

Determines if the supplied operations vector matches the vnode's operations vector. Note that this is a "shallow" match. The pointer to the operations vector is compared, not each individual operation. Returns non-zero (1) if the vnodeops matches that of the vnode. Returns zero (0) if not.

    int vn_matchopval(vnode_t *vp, char *vopname, fs_generic_func_p funcp);

Determines if the supplied function exists for a particular operation in the vnode's operations vector.

See usr/src/uts/common/sys/vfs.h

The following example shows how the tmpfs file system sets up its vnode operations.

    struct vnodeops *tmp_vnodeops;

    const fs_operation_def_t tmp_vnodeops_template[] = {
            VOPNAME_OPEN, tmp_open,
            VOPNAME_CLOSE, tmp_close,
            VOPNAME_READ, tmp_read,
            VOPNAME_WRITE, tmp_write,
            VOPNAME_IOCTL, tmp_ioctl,
            VOPNAME_GETATTR, tmp_getattr,
            VOPNAME_SETATTR, tmp_setattr,
            VOPNAME_ACCESS, tmp_access,
            ...

See usr/src/uts/common/fs/tmpfs/tmp_vnops.c

    static int
    tmpfsinit(int fstype, char *name)
    {
            ...
            error = vn_make_ops(name, tmp_vnodeops_template, &tmp_vnodeops);
            if (error != 0) {
                    (void) vfs_freevfsops_by_type(fstype);
                    cmn_err(CE_WARN, "tmpfsinit: bad vnode ops template");
                    return (error);
            }
            ...
    }

See usr/src/uts/common/fs/tmpfs/tmp_vfsops.c

14.6.4. vnode Methods

The following section describes the method names that can be passed into vn_make_ops(), followed by the function prototypes for each method.

    extern int fop_access(vnode_t *vp, int mode, int flags, cred_t *cr);

Checks to see if the user (represented by the cred structure) has permission to do an operation. Mode is made up of some combination (bitwise OR) of VREAD, VWRITE, and VEXEC. These bits are shifted to describe owner, group, and "other" access.

    extern int fop_addmap(vnode_t *vp, offset_t off, struct as *as,
            caddr_t addr, size_t len, uchar_t prot, uchar_t maxprot,
            uint_t flags, cred_t *cr);

Increments the map count.

    extern int fop_close(vnode_t *vp, int flag, int count, offset_t off,
            cred_t *cr);

Closes the file given by the supplied vnode. When this is the last close, some file systems use vop_close() to initiate a writeback of outstanding dirty pages by checking the reference count in the vnode.

    extern int fop_cmp(vnode_t *vp1, vnode_t *vp2);

Compares two vnodes. In almost all cases, this defaults to fs_cmp(), which simply does a: return (vp1 == vp2); NOTE: NFS/NFS3 and Cachefs have their own CMP routines, but they do exactly what fs_cmp() does. Procfs appears to be the only exception. It looks like it follows a chain.

    extern int fop_create(vnode_t *dvp, char *name, vattr_t *vap,
            vcexcl_t excl, int mode, vnode_t **vp, cred_t *cr, int flag);

Creates a file with the supplied path name.

    extern int fop_delmap(vnode_t *vp, offset_t off, struct as *as,
            caddr_t addr, size_t len, uint_t prot, uint_t maxprot,
            uint_t flags, cred_t *cr);

Decrements the map count.

    extern void fop_dispose(vnode_t *vp, struct page *pp, int flag, int dn,
            cred_t *cr);

Frees the given page from the vnode.

    extern int fop_dump(vnode_t *vp, caddr_t addr, int lbdn, int dblks);

Dumps data when the kernel is in a frozen state.

    extern int fop_dumpctl(vnode_t *vp, int action, int *blkp);

Prepares the file system before and after a dump.

    extern int fop_fid(vnode_t *vp, struct fid *fidp);

Puts a unique (by node, file system, and host) vnode/xxx_node identifier into fidp. Used for NFS file handles.

    extern int fop_frlock(vnode_t *vp, int cmd, struct flock64 *bfp,
            int flag, offset_t off, struct flk_callback *flk_cbp,
            cred_t *cr);

Does file and record locking for the supplied vnode. Most file systems either map this to fs_frlock() or do some special-case checking and call fs_frlock() directly. As you might expect, fs_frlock() does all the dirty work.

    extern int fop_fsync(vnode_t *vp, int syncflag, cred_t *cr);

Flushes out any dirty pages for the supplied vnode.

    extern int fop_getattr(vnode_t *vp, vattr_t *vap, int flags, cred_t *cr);

Gets the attributes for the supplied vnode.

    extern int fop_getpage(vnode_t *vp, offset_t off, size_t len,
            uint_t *protp, struct page **plarr, size_t plsz,
            struct seg *seg, caddr_t addr, enum seg_rw rw, cred_t *cr);

Gets pages in the range offset and length for the vnode from the backing store of the file system. Does the real work of reading a vnode. This method is often called as a result of read(), which causes a page fault in seg_map, which calls vop_getpage().

    extern int fop_getsecattr(vnode_t *vp, vsecattr_t *vsap, int flag,
            cred_t *cr);

Gets security access control list attributes.

    extern void fop_inactive(vnode_t *vp, cred_t *cr);

Frees resources and releases the supplied vnode. The file system can choose to destroy the vnode or put it onto an inactive list, which is managed by the file system implementation.

    extern int fop_ioctl(vnode_t *vp, int cmd, intptr_t arg, int flag,
            cred_t *cr, int *rvalp);

Performs an I/O control on the supplied vnode.

    extern int fop_link(vnode_t *targetvp, vnode_t *sourcevp,
            char *targetname, cred_t *cr);

Creates a hard link to the supplied vnode.

    extern int fop_lookup(vnode_t *dvp, char *name, vnode_t **vpp,
            int flags, vnode_t *rdir, cred_t *cr);

Looks up the given name in the directory vnode dvp and returns the resulting vnode in vpp. The vop_lookup() method does file-name translation for the open and stat system calls.

    extern int fop_map(vnode_t *vp, offset_t off, struct as *as,
            caddr_t *addrp, size_t len, uchar_t prot, uchar_t maxprot,
            uint_t flags, cred_t *cr);

Maps a range of pages into an address space by doing the appropriate checks and calling as_map().

    extern int fop_mkdir(vnode_t *dvp, char *name, vattr_t *vap,
            vnode_t **vpp, cred_t *cr);

Makes a directory with the given name in the directory vnode (dvp) and returns the new vnode in vpp.

    extern int fop_vnevent(vnode_t *vp, vnevent_t vnevent);

Interface for reporting file events. File systems need not implement this method.

    extern int fop_open(vnode_t **vpp, int mode, cred_t *cr);

Opens a file referenced by the supplied vnode. The open() system call has already done a vop_lookup() on the path name, which returned a vnode pointer, and then calls vop_open(). This function typically does very little, since most of the real work was performed by vop_lookup(). Also called by file systems to open devices as well as by anything else that needs to open a file or device.

    extern int fop_pageio(vnode_t *vp, struct page *pp, u_offset_t io_off,
            size_t io_len, int flag, cred_t *cr);

Paged I/O support for file system swap files.

    extern int fop_pathconf(vnode_t *vp, int cmd, ulong_t *valp, cred_t *cr);

Establishes file system parameters with the pathconf system call.

    extern int fop_poll(vnode_t *vp, short events, int anyyet,
            short *reventsp, struct pollhead **phpp);

File system support for the poll() system call.

    extern int fop_putpage(vnode_t *vp, offset_t off, size_t len, int,
            cred_t *cr);

Writes pages in the range offset and length for the vnode to the backing store of the file system. Does the real work of writing a vnode.

    extern int fop_read(vnode_t *vp, uio_t *uiop, int ioflag, cred_t *cr,
            caller_context_t *ct);

Reads the range supplied for the given vnode. vop_read() typically maps the requested range of a file into kernel memory and then uses vop_getpage() to do the real work.

    extern int fop_readdir(vnode_t *vp, uio_t *uiop, cred_t *cr, int *eofp);

Reads the contents of a directory.

    extern int fop_readlink(vnode_t *vp, uio_t *uiop, cred_t *cr);

Follows the symlink in the supplied vnode.

    extern int fop_realvp(vnode_t *vp, vnode_t **vpp);

Gets the real vnode from the supplied vnode.

    extern int fop_remove(vnode_t *dvp, char *name, cred_t *cr);

Removes the file for the supplied vnode.

    extern int fop_rename(vnode_t *sourcedvp, char *sourcename,
            vnode_t *targetdvp, char *targetname, cred_t *cr);

Renames the file named by sourcename in the directory given by sourcedvp to the new name (targetname) in the directory given by targetdvp.

    extern int fop_rmdir(vnode_t *dvp, char *name, vnode_t *vp, cred_t *cr);

Removes the name in the directory given by dvp.

    extern int fop_rwlock(vnode_t *vp, int write_lock, caller_context_t *ct);

Holds the reader/writer lock for the supplied vnode. This method is called for each vnode, with write_lock set to 0 inside a read() system call and set to 1 inside a write() system call. POSIX semantics require only one writer inside write() at a time. Some file system implementations have options to ignore the writer lock inside vop_rwlock().

    extern void fop_rwunlock(vnode_t *vp, int write_lock,
            caller_context_t *ct);

Releases the reader/writer lock for the supplied vnode.

    extern int fop_seek(vnode_t *vp, offset_t oldoff, offset_t *newoffp);

Checks the FS-dependent bounds of a potential seek. NOTE: VOP_SEEK() doesn't do the seeking. Offsets are usually saved in the file_t structure and are passed down to VOP_READ/VOP_WRITE in the uio structure.

    extern int fop_setattr(vnode_t *vp, vattr_t *vap, int flags, cred_t *cr,
            caller_context_t *ct);

Sets the file attributes for the supplied vnode.

    extern int fop_setfl(vnode_t *vp, int oldflags, int newflags, cred_t *cr);

Sets the file-system-dependent flags (typically for a socket) for the supplied vnode.

    extern int fop_setsecattr(vnode_t *vp, vsecattr_t *vsap, int flag,
            cred_t *cr);

Sets security access control list attributes.

    extern int fop_shrlock(vnode_t *vp, int cmd, struct shrlock *shr,
            int flag, cred_t *cr);

ONC shared lock support.

    extern int fop_space(vnode_t *vp, int cmd, struct flock64 *bfp,
            int flag, offset_t off, cred_t *cr, caller_context_t *ct);

Frees space for the supplied vnode.

    extern int fop_symlink(vnode_t *vp, char *linkname, vattr_t *vap,
            char *target, cred_t *cred);

Creates a symbolic link between the two path names.

    extern int fop_write(vnode_t *vp, uio_t *uiop, int ioflag, cred_t *cr,
            caller_context_t *ct);

Writes the range supplied for the given vnode. The write system call typically maps the requested range of a file into kernel memory and then uses vop_putpage() to do the real work.

See usr/src/uts/common/sys/vnode.h

14.6.5. Support Functions for Vnodes

Following is a list of the public functions available for obtaining information from within the private part of the vnode.

    int vn_is_readonly(vnode_t *);

Is the vnode write protected?

    int vn_is_opened(vnode_t *, v_mode_t);

Is the file open?

    int vn_is_mapped(vnode_t *, v_mode_t);

Is the file mapped?

    int vn_can_change_zones(vnode_t *vp);

Checks whether the vnode can change zones; used to check whether a process can change zones. Mainly used for NFS.

    int vn_has_flocks(vnode_t *);

Do file/record locks exist for this vnode?

    int vn_has_mandatory_locks(vnode_t *, int);

Does the vnode have mandatory locks in force for this mode?

    int vn_has_cached_data(vnode_t *);

Does the vnode have cached data associated with it?

    struct vfs *vn_mountedvfs(vnode_t *);

Returns the vfs mounted on this vnode, if any.

    int vn_ismntpt(vnode_t *);

Returns true (non-zero) if this vnode is mounted on, zero otherwise.

See usr/src/uts/common/sys/vnode.h

14.6.6. The Life Cycle of a Vnode

A vnode is an in-memory reference to a file. It is a transient structure that lives in memory when the kernel references a file within a file system. A vnode is allocated by vn_alloc() when a first reference to an existing file is made or when a file is created. In a file system implementation, the two common places for this allocation are the VOP_LOOKUP() method and the VOP_CREATE() method. When a file descriptor is opened to a file, the reference count for that vnode is incremented. The vnode is always in memory when the reference count is greater than zero. The reference count may drop back to zero after the last file descriptor has been closed, at which point the file system framework calls the file system's VOP_INACTIVE() method.

Figure 14.8. The Life Cycle of a vnode Object

Once a vnode's reference count becomes zero, it is a candidate for freeing. Most file systems won't free the vnode immediately, since recreating it will likely require a disk I/O for a directory read or an over-the-wire operation. For example, UFS keeps inactive inodes on an "inactive list" (see Section 15.3.1). Only when certain conditions are met (for example, a resource shortage) is the vnode actually freed. Of course, when a file is deleted, its corresponding in-memory vnode is freed.
This is also performed by the VOP_INACTIVE() method for the file system. Typically, the VOP_INACTIVE() method checks to see if the link count for the vnode is zero and then frees it.

14.6.7. vnode Creation and Destruction

The allocation of a vnode must be done by a call to the appropriate support function. The functions for allocating, destroying, and reinitializing vnodes are shown below.

    vnode_t *vn_alloc(int kmflag);

Allocates a vnode and initializes all of its structures.

    void vn_free(vnode_t *vp);

Frees the allocated vnode.

    void vn_reinit(vnode_t *vp);

(Re)initializes a vnode.

See usr/src/uts/common/sys/vnode.h

14.6.8. The vnode Reference Count

A vnode is created by the file system at the time a file is first opened or created and stays active until the file system decides the vnode is no longer needed. The vnode framework provides an infrastructure that keeps track of the number of references to a vnode. The kernel maintains the reference count by means of the VN_HOLD() and VN_RELE() macros, which increment and decrement the v_count field of the vnode. The vnode stays valid while its reference count is greater than zero, so a subsystem can rely on a vnode's contents staying valid by calling VN_HOLD() before it references a vnode's contents. It is important to distinguish a vnode reference from a lock; a lock ensures exclusive access to the data, and the reference count ensures persistence of the object.

When a vnode's reference count drops to zero, VN_RELE() invokes the VOP_INACTIVE() method for that file system. Every subsystem that references a vnode is required to call VN_HOLD() at the start of the reference and to call VN_RELE() at the end of each reference. Some file systems deconstruct a vnode when its reference count falls to zero; others hold on to the vnode for a while so that if it is required again, it is available in its constructed state.
UFS, for example, holds on to the vnode for a while after the last release so that the virtual memory system can keep the inode and cache for a file, whereas PCFS frees the vnode and all of the cache associated with the vnode at the time VOP_INACTIVE() is called.

14.6.9. Interfaces for Paging vnode Cache

Solaris OS unifies file and memory management by using a vnode to represent the backing store for virtual memory (see Chapter 8). A page of memory represents a particular vnode and offset. The file system uses the memory relationship to implement caching for vnodes within a file system. To cache a vnode, the file system has the memory system create a page of physical memory that represents the vnode and offset.

The virtual memory system provides a set of functions for cache management and I/O for vnodes. These functions allow the file systems to cluster pages for I/O and handle the setup and checking required for synchronizing dirty pages with their backing store. The functions, described below, set up pages so that they can be passed to device driver block I/O handlers.
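
The idea that a cached page is identified by a vnode and an offset can be illustrated with a toy user-space lookup table. This is a sketch under stated assumptions only: the `toy_` names, the fixed eight-entry array, and the 4096-byte page size are hypothetical, and the kernel's real page lookup and creation interfaces are not reproduced here.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define TOY_PAGESIZE 4096u   /* assumed page size for the sketch */
#define TOY_NPAGES   8       /* capacity of the toy cache */

typedef struct toy_page {
    const void *p_vnode;     /* identity, part 1: the owning vnode */
    uint64_t   p_offset;     /* identity, part 2: page-aligned offset */
    int        p_dirty;      /* would need writing to backing store */
    int        p_used;       /* slot is occupied */
} toy_page_t;

static toy_page_t toy_cache[TOY_NPAGES];

/* Rounds a byte offset down to the start of its page. */
static uint64_t
toy_page_align(uint64_t off)
{
    return (off - (off % TOY_PAGESIZE));
}

/* Returns the cached page covering (vp, off), or NULL on a miss. */
toy_page_t *
toy_page_lookup(const void *vp, uint64_t off)
{
    uint64_t aligned = toy_page_align(off);
    size_t i;

    for (i = 0; i < TOY_NPAGES; i++) {
        if (toy_cache[i].p_used && toy_cache[i].p_vnode == vp &&
            toy_cache[i].p_offset == aligned)
            return (&toy_cache[i]);
    }
    return (NULL);
}

/*
 * Returns the page covering (vp, off), creating it in a free slot if it
 * is not cached yet. Returns NULL when the toy cache is full.
 */
toy_page_t *
toy_page_create(const void *vp, uint64_t off)
{
    toy_page_t *pp;
    size_t i;

    assert(vp != NULL);
    if ((pp = toy_page_lookup(vp, off)) != NULL)
        return (pp);
    for (i = 0; i < TOY_NPAGES; i++) {
        if (!toy_cache[i].p_used) {
            toy_cache[i].p_vnode = vp;
            toy_cache[i].p_offset = toy_page_align(off);
            toy_cache[i].p_dirty = 0;
            toy_cache[i].p_used = 1;
            return (&toy_cache[i]);
        }
    }
    return (NULL);
}
```

Two offsets that fall within the same page of the same vnode resolve to the same entry, while the same offset on a different vnode misses; that pairing is what lets one memory cache serve every file system.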

14.6.10. Block I/O on vnode Pages

The block I/O subsystem supports I/O initiation to and from vnode pages. It schedules I/O from the device drivers directly to and from a page without buffering the data in the buffer cache. These functions are typically used in the implementation of vop_getpage() and vop_putpage() to do the physical I/O on behalf of the file system. Three functions, shown below, initiate I/O between a physical page and a device.

    struct buf *pageio_setup(struct page *, size_t, struct vnode *, int);

Sets up a block buffer for I/O on a page of memory so that it bypasses the block buffer cache by setting the B_PAGEIO flag and putting the page list on the b_pages field.

    extern int bdev_strategy(struct buf *);

Initiates an I/O on a page, using the block I/O device.

    void pageio_done(struct buf *);

Waits for the block device I/O to complete.

See usr/src/uts/common/sys/bio.h

14.6.11. vnode Information Obtainable with mdb

You can use mdb to traverse the vnode cache, inspect a vnode object, view the path name, and examine linkages between vnodes.

With the centralized vn_alloc(), a central vnode cache holds all the vnode structures. It is a regular kmem cache and can be traversed with mdb and the generic kmem cache walker.

    sol10# mdb -k
    > ::walk vn_cache
    ffffffff80f24040
    ffffffff80f24140
    ffffffff80f24240
    ffffffff8340d940
    ...

Similarly, you can inspect a vnode object.

    sol10# mdb -k
    > ::walk vn_cache
    ffffffff80f24040
    ffffffff80f24140
    ffffffff80f24240
    ffffffff8340d940
    ...
    > ffffffff8340d940::print vnode_t
    {
        v_lock = {
            _opaque = [ 0 ]
        }
        v_flag = 0x10000
        v_count = 0x2
        v_data = 0xffffffff8340e3d8
        v_vfsp = 0xffffffff816a8f00
        v_stream = 0
        v_type = 1 (VREG)
        v_rdev = 0xffffffffffffffff
        v_vfsmountedhere = 0
        v_op = 0xffffffff805fe300
        v_pages = 0
        v_npages = 0
        v_msnpages = 0
        v_scanfront = 0
        v_scanback = 0
        v_filocks = 0
        v_shrlocks = 0
        v_nbllock = {
            _opaque = [ 0 ]
        }
        v_cv = {
            _opaque = 0
        }
        v_locality = 0
        v_femhead = 0
        v_path = 0xffffffff8332d440 "/zones/gallery/root/var/svc/log/work-inetd:default.log"
        v_rdcnt = 0
        v_wrcnt = 0x1
        v_mmap_read = 0
        v_mmap_write = 0
        v_mpssdata = 0
        v_scantime = 0
        v_mset = 0
        v_msflags = 0
        v_msnext = 0
        v_msprev = 0
        v_mslock = {
            _opaque = [ 0 ]
        }
    }

With other mdb dcmds, you can view the vnode's path name (a guess, cached during vop_lookup()), the linkage between vnodes, which processes have them open, and vice versa.

    > ffffffff8340d940::vnode2path
    /zones/gallery/root/var/svc/log//network-inetd:default.log

    > ffffffff8340d940::whereopen
    file ffffffff832d4bd8
    ffffffff83138930

    > ffffffff83138930::ps
    S    PID   PPID   PGID    SID    UID      FLAGS             ADDR NAME
    R    845      1    845    845      0 0x42000400 ffffffff83138930 inetd

    > ffffffff83138930::pfiles
    FD   TYPE            VNODE INFO
       0  CHR ffffffff857c8580 /zones/gallery/root/dev/null
       1  REG ffffffff8340d940 /zones/gallery/root/var/svc/log//network-inetd:default.log
       2  REG ffffffff8340d940 /zones/gallery/root/var/svc/log//network-inetd:default.log
       3 FIFO ffffffff83764940
       4 DOOR ffffffff836d1680 [door to 'nscd' (proc=ffffffff835ecd10)]
       5 DOOR ffffffff83776800 [door to 'svc.configd' (proc=ffffffff8313f928)]
       6 DOOR ffffffff83776900 [door to 'svc.configd' (proc=ffffffff8313f928)]
       7 FIFO ffffffff83764540
       8  CHR ffffffff83776500 /zones/gallery/root/dev/sysevent
       9  CHR ffffffff83776300 /zones/gallery/root/dev/sysevent
      10 DOOR ffffffff83776700 [door to 'inetd' (proc=ffffffff83138930)]
      11  REG ffffffff833fcac0 /zones/gallery/root/system/contract/process/template
      12 SOCK ffffffff83215040 socket: AF_UNIX /var/run/.inetd.uds
      13  CHR ffffffff837f1e40 /zones/gallery/root/dev/ticotsord
      14  CHR ffffffff837b6b00 /zones/gallery/root/dev/ticotsord
      15 SOCK ffffffff85d106c0 socket: AF_INET6 :: 48155
      16 SOCK ffffffff85cdb000 socket: AF_INET6 :: 20224
      17 SOCK ffffffff83543440 socket: AF_INET6 :: 5376
      18 SOCK ffffffff8339de80 socket: AF_INET6 :: 258
      19  CHR ffffffff85d27440 /zones/gallery/root/dev/ticlts
      20  CHR ffffffff83606100 /zones/gallery/root/dev/udp
      21  CHR ffffffff8349ba00 /zones/gallery/root/dev/ticlts
      22  CHR ffffffff8332f680 /zones/gallery/root/dev/udp
      23  CHR ffffffff83606600 /zones/gallery/root/dev/ticots
      24  CHR ffffffff834b2d40 /zones/gallery/root/dev/ticotsord
      25  CHR ffffffff8336db40 /zones/gallery/root/dev/tcp
      26  CHR ffffffff83626540 /zones/gallery/root/dev/ticlts
      27  CHR ffffffff834f1440 /zones/gallery/root/dev/udp
      28  CHR ffffffff832d5940 /zones/gallery/root/dev/ticotsord
      29  CHR ffffffff834e4b80 /zones/gallery/root/dev/ticotsord
      30 SOCK ffffffff83789580 socket: AF_INET 0.0.0.0 514
      31 SOCK ffffffff835a6e80 socket: AF_INET6 :: 514
      32 SOCK ffffffff834e4d80 socket: AF_INET6 :: 5888
      33  CHR ffffffff85d10ec0 /zones/gallery/root/dev/ticotsord
      34  CHR ffffffff83839900 /zones/gallery/root/dev/tcp
      35 SOCK ffffffff838429c0 socket: AF_INET 0.0.0.0 11904

14.6.12. DTrace Probes in the vnode Layer

DTrace provides probes for file system activity through the vminfo provider and, optionally, through deeper tracing with the fbt provider. All the cpu_vminfo statistics are updated from pageio_setup() (see Section 14.6.10).

The vminfo provider probes correspond to the fields in the "vm" named kstat: a probe provided by vminfo fires immediately before the corresponding vm value is incremented. Table 14.4 lists the probes available from the VM provider; these are further described in Section 6.11 in Solaris™ Performance and Tools. A probe takes the following arguments.

For example, the following paging activity that is visible with vmstat indicates page-in from the file system (fpi).

    sol8# vmstat -p 3
         memory           page          executable      anonymous      filesystem
       swap    free   re  mf  fr  de  sr  epi  epo  epf   api  apo  apf  fpi  fpo  fpf
     1512488  837792 160  20  12   0   0    0    0    0  8102    0    0   12   12   12
     1715812  985116   7  82   0   0   0    0    0    0  7501    0    0   45    0    0
     1715784  983984   0   2   0   0   0    0    0    0  1231    0    0   53    0    0
     1715780  987644   0   0   0   0   0    0    0    0  2451    0    0   33    0    0

    sol10$ dtrace -n fspgin'{@[execname] = count()}'
    dtrace: description 'fspgin' matched 1 probe
      svc.startd      1
      sshd            2
      ssh             3
      dtrace          6
      vmstat          8
      filebench      13

See Section 6.11 in Solaris™ Performance and Tools for examples of how to use dtrace for memory analysis.

Below is an example of tracing a generic vnode layer with DTrace.

    dtrace:::BEGIN
    {
            printf("%-15s %-10s %51s %2s %8s %8s\n",
                "Event", "Device", "Path", "RW", "Size", "Offset");
            self->trace = 0;
            self->path = "";
    }

    fbt::fop_*:entry
    /self->trace == 0/
    {
            /* Get vp: fop_open has a pointer to vp */
            self->vpp = (vnode_t **)arg0;
            self->vp = (vnode_t *)arg0;
            self->vp = probefunc == "fop_open" ? (vnode_t *)*self->vpp : self->vp;

            /* And the containing vfs */
            self->vfsp = self->vp ? self->vp->v_vfsp : 0;

            /* And the paths for the vp and containing vfs */
            self->vfsvp = self->vfsp ?
                (struct vnode *)((vfs_t *)self->vfsp)->vfs_vnodecovered : 0;
            self->vfspath = self->vfsvp ? stringof(self->vfsvp->v_path) : "unknown";

            /* Check if we should trace the root fs */
            ($1 == "/all" ||
             ($1 == "/" && self->vfsp && \
             (self->vfsp == `rootvfs))) ? self->trace = 1 : self->trace;

            /* Check if we should trace the fs */
            ($1 == "/all" || (self->vfspath == $1)) ? self->trace = 1 : self->trace;
    }

    /*
     * Trace the entry point to each fop
     *
     */
    fbt::fop_*:entry
    /self->trace/
    {
            self->path = (self->vp != NULL && self->vp->v_path) ?
                stringof(self->vp->v_path) : "unknown";
            self->len = 0;
            self->off = 0;

            /* Some fops have the len in arg2 */
            (probefunc == "fop_getpage" || \
             probefunc == "fop_putpage" || \
             probefunc == "fop_none") ? self->len = arg2 : 1;

            /* Some fops have the len in arg3 */
            (probefunc == "fop_pageio" || \
             probefunc == "fop_none") ? self->len = arg3 : 1;

            /* Some fops have the len in arg4 */
            (probefunc == "fop_addmap" || \
             probefunc == "fop_map" || \
             probefunc == "fop_delmap") ? self->len = arg4 : 1;

            /* Some fops have the offset in arg1 */
            (probefunc == "fop_addmap" || \
             probefunc == "fop_map" || \
             probefunc == "fop_getpage" || \
             probefunc == "fop_putpage" || \
             probefunc == "fop_seek" || \
             probefunc == "fop_delmap") ? self->off = arg1 : 1;

            /* Some fops have the offset in arg3 */
            (probefunc == "fop_close" || \
             probefunc == "fop_pageio") ? self->off = arg3 : 1;

            /* Some fops have the offset in arg4 */
            probefunc == "fop_frlock" ? self->off = arg4 : 1;

            /* Some fops have the pathname in arg1 */
            self->path = (probefunc == "fop_create" || \
             probefunc == "fop_mkdir" || \
             probefunc == "fop_rmdir" || \
             probefunc == "fop_remove" || \
             probefunc == "fop_lookup") ?
                strjoin(self->path, strjoin("/", stringof(arg1))) : self->path;

            printf("%-15s %-10s %51s %2s %8d %8d\n",
                probefunc, "-", self->path, "-", self->len, self->off);
            self->type = probefunc;
    }

    fbt::fop_*:return
    /self->trace == 1/
    {
            self->trace = 0;
    }

    /* Capture any I/O within this fop */
    io:::start
    /self->trace/
    {
            printf("%-15s %-10s %51s %2s %8d %8u\n",
                self->type, args[1]->dev_statname, self->path,
                args[0]->b_flags & B_READ ? "R" : "W",
                args[0]->b_bcount, args[2]->fi_offset);
    }

    sol10# ./voptrace.d /tmp
    Event           Device     Path                                     RW     Size   Offset
    fop_putpage     -          /tmp/bin/i386/fastsu                      -     4096     4096
    fop_inactive    -          /tmp/bin/i386/fastsu                      -        0        0
    fop_putpage     -          /tmp/WEB-INF/lib/classes12.jar            -     4096   204800
    fop_inactive    -          /tmp//WEB-INF/lib/classes12.jar           -        0        0
    fop_putpage     -          /tmp/s10_x86_sparc_pkg.tar.Z              -     4096  7655424
    fop_inactive    -          /tmp/s10_x86_sparc_pkg.tar.Z              -        0        0
    fop_putpage     -          /tmp/xanadu/WEB-INF/lib/classes12.jar     -     4096   782336
    fop_inactive    -          /tmp/xanadu/WEB-INF/lib/classes12.jar     -        0        0
    fop_putpage     -          /tmp/bin/amd64/filebench                  -     4096    36864
