11.7 Hybrid Mechanisms

As we have seen in this chapter, both switching and routing have benefits, and some mechanisms have been developed that combine the two in various ways. Interconnection mechanisms that combine characteristics of switching and routing are termed here hybrid mechanisms.

In this section, we will discuss the following hybrid mechanisms: LANE, MPOA, nonbroadcast multiple access (NBMA) hybrid mechanisms, service switching, and IP switching. As we will see, these interconnection mechanisms combine characteristics of switching and routing, as well as other characteristics discussed earlier (multicast capability, connection orientation, offered abilities and services, performance upgrades, and flow considerations).

11.7.1 NHRP

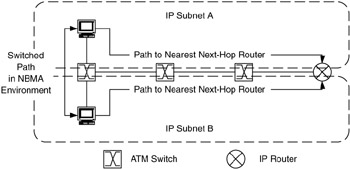

The Next Hop Resolution Protocol (NHRP) is one method to take advantage of a shorter, faster data-link-layer path in NBMA networks. For example, if multiple IP subnetworks share the same NBMA infrastructure, NHRP can provide a shorter path directly through the NBMA network instead of the standard IP path from source to destination, as in Figure 11.12.

Figure 11.12: NHRP flow optimization in an NBMA environment.

NHRP provides a path through the NBMA network toward the destination. If the destination is directly attached to the NBMA network, the path leads to the destination itself. When the destination is beyond the NBMA network, the path leads to the exit router from the NBMA network that is closest to the destination.
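The resolution rule just described can be illustrated with a minimal Python sketch. This is not the NHRP protocol itself: the address tables, the NBMA address strings, and the function name `resolve_next_hop` are hypothetical, and longest-prefix match is used only as a stand-in for "closest to the destination".

```python
from ipaddress import ip_address, ip_network

# Hypothetical stations attached directly to the NBMA network:
# IP address -> NBMA (data-link) address.
NBMA_STATIONS = {
    "10.1.0.5": "nbma-addr-A",
    "10.2.0.9": "nbma-addr-B",
}

# Hypothetical exit routers: prefix reachable beyond the NBMA network
# -> NBMA address of the exit router serving that prefix.
EGRESS_ROUTERS = {
    "192.168.0.0/16": "nbma-addr-R1",
    "192.168.40.0/24": "nbma-addr-R2",
}


def resolve_next_hop(dest_ip):
    """Return the NBMA address a shortcut path should terminate on, or None."""
    # Case 1: the destination sits on the NBMA network, so the path
    # goes straight to the destination.
    if dest_ip in NBMA_STATIONS:
        return NBMA_STATIONS[dest_ip]

    # Case 2: the destination is beyond the NBMA network, so the path
    # goes to an exit router. The most specific matching prefix is an
    # assumption standing in for "closest to the destination".
    addr = ip_address(dest_ip)
    matches = [
        (ip_network(prefix), nbma)
        for prefix, nbma in EGRESS_ROUTERS.items()
        if addr in ip_network(prefix)
    ]
    if not matches:
        return None  # no shortcut known; fall back to the routed IP path
    _, nbma = max(matches, key=lambda m: m[0].prefixlen)
    return nbma
```

In this sketch, a lookup that returns `None` simply means the traffic follows the standard hop-by-hop IP path.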

NHRP is one method for diverging from the standard IP routing model to optimize paths in the network. A strong argument for the success of a method such as NHRP is the open nature of the ongoing work on it by the Internet Engineering Task Force, along with its acceptance as part of the MPOA mechanism.

11.7.2 MPOA

MPOA applies NHRP to LANE to integrate LANE and multiprotocol environments and to allow optimized switching paths across networks or subnetworks. MPOA is an attempt to build scalability into ATM systems by integrating switching and routing functions into a small number of NHRP/MPOA/LANE-capable routers and by reducing the routing functions in the large number of edge devices.

MPOA builds on LANE to reduce some of the trade-offs discussed earlier with LANE. First, by integrating LANE with NHRP, paths between networks or subnetworks can be optimized over the ATM infrastructure. We now also have an integrated mechanism for accessing other network-layer protocols. The complexity trade-off with LANE is still there, however, and is increased with the integration of NHRP with MPOA. There are numerous control, configuration, and information flows in an MPOA environment, between MPOA clients (MPCs), MPOA servers (MPSs), and LECSs. In each of these devices reside network-layer routing/forwarding engines, MPOA client-server functions, LANE client functions, and possibly NHRP server functions.

Until link-layer and network-layer functions are truly integrated, perhaps through a common distributed forwarding table (combining what we think of today as switching and routing tables), it will be quite confusing to determine where and how information flows are configured, established, and maintained by the network. MPOA may be a step toward this integration, as may PNNI or NHRP.

11.7.3 Service Switching

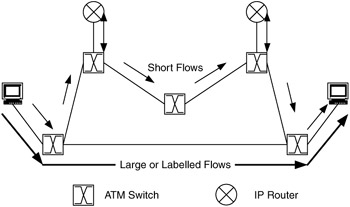

Service switching is switching based on flow or end-to-end information, dependent on the type of service required. Service switching is used to generically describe mechanisms for optimizing paths in a hybrid switching/routing environment, based on determining when to switch or route traffic (Figure 11.13).

Figure 11.13: Using end-to-end or flow information to make forwarding decisions.

Multiple vendors are deploying their own standards for hybrid mechanisms, and since networking vendors and vendor-specific interconnection mechanisms are purposefully avoided in this book, the generic term is used instead.

For example, one criterion for determining when to route or switch is the amount of traffic transferred during an application session, or the size and duration of the flow. The concept behind this is to amortize the costs of setting up and tearing down switched connections over large amounts of traffic. It does not make sense to go through the overhead of setting up a connection (e.g., via ATM) through the network to send one or a few packets, frames, or cells of information, which is likely for some traffic types (ping, Network File System, Domain Name System, and UDP in general). On the other hand, for traffic types that typically transfer relatively large amounts of information (FTP and TCP in general), this overhead is a small percentage of the transferred information.
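The amortization argument can be made concrete with a small Python sketch. It assumes, purely for illustration, that connection setup and teardown cost can be expressed as an equivalent number of bytes; the function name and the sample figures are hypothetical.

```python
def setup_overhead_fraction(setup_cost_bytes, payload_bytes):
    """Fraction of everything sent that is connection setup/teardown overhead.

    setup_cost_bytes is an assumed byte-equivalent of the signaling cost.
    """
    return setup_cost_bytes / (setup_cost_bytes + payload_bytes)


# Hypothetical figures: 1,000 bytes of signaling per switched connection.
short_flow = setup_overhead_fraction(1_000, 100)         # a ping-sized exchange
long_flow = setup_overhead_fraction(1_000, 10_000_000)   # a large file transfer

print(f"short flow overhead: {short_flow:.1%}")
print(f"long flow overhead:  {long_flow:.4%}")
```

With these assumed numbers, overhead dominates the short flow and is negligible for the long one, which is the reasoning behind switching only the larger flows.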

We can use some general rules to determine when to switch or route. Some examples include either counting the number of cells, frames, or packets that have the same destination address and session type or using the port number of the traffic flow. We may choose to route all UDP traffic and switch TCP traffic or switch traffic with particular port numbers. Although this mechanism provides some optimization between switching and routing, it by itself does not address end-to-end services. It appears that this mechanism is particularly useful when switching a large number of connections of different types of information, which is likely to occur over backbones or at traffic aggregation points in the network.
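A minimal Python sketch of such rules follows. How the rules are combined here is an assumption: designated ports are always switched, UDP is always routed, and other traffic is switched once a packet-count threshold is reached for the same destination and session type. The class name, threshold, and port set are hypothetical.

```python
from collections import Counter

SWITCH_THRESHOLD = 10       # hypothetical packet count before switching a flow
SWITCHED_PORTS = {20, 21}   # hypothetical choice: always switch FTP traffic


class FlowClassifier:
    """Decides, packet by packet, whether a flow is routed or switched."""

    def __init__(self, threshold=SWITCH_THRESHOLD, switched_ports=SWITCHED_PORTS):
        self.threshold = threshold
        self.switched_ports = set(switched_ports)
        self.counts = Counter()  # (destination, protocol, port) -> packets seen

    def decide(self, dest, protocol, port):
        """Return "switch" or "route" for one observed packet."""
        key = (dest, protocol, port)
        self.counts[key] += 1

        # Rule 1: traffic on designated port numbers is always switched.
        if port in self.switched_ports:
            return "switch"
        # Rule 2: UDP traffic is always routed.
        if protocol == "udp":
            return "route"
        # Rule 3: other traffic is switched once enough packets share the
        # same destination and session type.
        if self.counts[key] >= self.threshold:
            return "switch"
        return "route"


classifier = FlowClassifier(threshold=3)
decisions = [classifier.decide("10.0.0.7", "tcp", 80) for _ in range(4)]
print(decisions)  # ['route', 'route', 'switch', 'switch']
```

Counting per flow lets short exchanges stay on the routed path while longer flows earn a switched connection.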

Another mechanism is to take a more generic approach to distinguish traffic types. By allowing devices such as routers to create labels to identify a particular characteristic of traffic (protocol, transport mechanism, port ID, even user- or application-specific information), these labels can be exchanged between devices to allow them to handle labeled traffic in special ways, such as providing priority queues, cut-through paths, or other service-related support for the traffic. This type of mechanism has the advantage of being configurable for a particular environment, especially if it can support user- or application-specific requirements.
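The label idea can be sketched in Python as follows. This is an illustration under stated assumptions rather than any specific label-distribution protocol: the `TrafficClass` fields, the `LabelTable` class, and the handling names are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TrafficClass:
    """A traffic characteristic that a device chooses to label."""
    protocol: str
    port: int


class LabelTable:
    """Per-device table binding labels to special handling."""

    def __init__(self):
        self._next_label = 1
        self._labels = {}    # TrafficClass -> label
        self._handling = {}  # label -> handling (e.g., "priority-queue")

    def bind(self, traffic_class, handling):
        """Create (or reuse) a label for a traffic class and set its handling."""
        label = self._labels.get(traffic_class)
        if label is None:
            label = self._next_label
            self._next_label += 1
            self._labels[traffic_class] = label
        self._handling[label] = handling
        return label

    def export(self):
        """Bindings to advertise to a neighboring device."""
        return dict(self._handling)

    def learn(self, bindings):
        """Install bindings received from a neighboring device."""
        self._handling.update(bindings)

    def handle(self, label):
        """Return the handling for labeled traffic, or the default."""
        return self._handling.get(label, "default-routing")


upstream = LabelTable()
ftp_label = upstream.bind(TrafficClass("tcp", 21), "cut-through")

downstream = LabelTable()
downstream.learn(upstream.export())  # the label exchange between devices
print(downstream.handle(ftp_label))  # cut-through
```

Because the downstream device acts only on the label, the classification criteria can be as environment-specific as the upstream device requires.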

Now let's take a look at the interconnection mechanisms presented in this chapter, along with evaluation criteria. Figure 11.14 presents a summary of this information. When an interconnection mechanism is evaluated against a criterion, it may be optimized for that criterion (+), it may have a negative impact on that criterion (-), or it may not be applicable to the criterion (N/A). Each interconnection mechanism is evaluated against design goals and criteria developed in this chapter. You can use this information as a start toward developing your own evaluation criteria. There will likely be customer- and environment-specific criteria that can be added to what is presented here, or you may develop a set of criteria that is entirely different, based on your experience, perspectives, customers, and environments.

| | Shared-Medium | Switching | Routing | LANE | MPOA | 2225 | NHRP | Service Switching |
|---|---|---|---|---|---|---|---|---|
| Scalability | - | 0 | + | 0 | + | - | + | 0 to + |
| Optimizing Flow Models | - | + | 0 | 0 | + | 0 | + | + |
| External Connectivity | - | - | + | - | 0 | + | 0 | 0 |
| Support for Services | 0 | 0 to + | 0 | 0 | + | - | + | + |
| Cost | + | + | - | + | + | 0 | + | + |
| Performance | - | + | + | 0 | 0 | 0 | + | + |
| Ease of Use | + | + | 0 | - | - | 0 | - | - |
| Adaptability | - | 0 | + | + | + | 0 | + | + |

Figure 11.14: Summary of evaluation criteria for interconnection mechanisms.
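One way to start developing your own evaluation from Figure 11.14 is to weight the criteria and total the ratings, as in the Python sketch below. The numeric mapping (+ as 1, 0 as 0, - as -1, "0 to +" as 0.5) and the weights are assumptions for illustration only; they are not part of the figure.

```python
MECHANISMS = ["Shared-Medium", "Switching", "Routing", "LANE",
              "MPOA", "2225", "NHRP", "Service Switching"]

# Ratings transcribed from Figure 11.14, one list per criterion,
# in the same order as MECHANISMS.
RATINGS = {
    "Scalability":            ["-", "0", "+", "0", "+", "-", "+", "0 to +"],
    "Optimizing Flow Models": ["-", "+", "0", "0", "+", "0", "+", "+"],
    "External Connectivity":  ["-", "-", "+", "-", "0", "+", "0", "0"],
    "Support for Services":   ["0", "0 to +", "0", "0", "+", "-", "+", "+"],
    "Cost":                   ["+", "+", "-", "+", "+", "0", "+", "+"],
    "Performance":            ["-", "+", "+", "0", "0", "0", "+", "+"],
    "Ease of Use":            ["+", "+", "0", "-", "-", "0", "-", "-"],
    "Adaptability":           ["-", "0", "+", "+", "+", "0", "+", "+"],
}

# Assumed numeric values for the symbols; adjust to suit your environment.
SCORE = {"+": 1.0, "0": 0.0, "-": -1.0, "0 to +": 0.5}


def rank(weights):
    """Return (mechanism, weighted total) pairs, highest total first."""
    totals = {m: 0.0 for m in MECHANISMS}
    for criterion, weight in weights.items():
        for mechanism, symbol in zip(MECHANISMS, RATINGS[criterion]):
            totals[mechanism] += weight * SCORE[symbol]
    return sorted(totals.items(), key=lambda kv: kv[1], reverse=True)


# Hypothetical weighting: every criterion counts equally.
equal_weights = {criterion: 1.0 for criterion in RATINGS}
for mechanism, total in rank(equal_weights):
    print(f"{mechanism:18s} {total:+.1f}")
```

Changing the weights, or adding customer- and environment-specific criteria as new rows, changes the ranking, so the totals are a starting point for discussion rather than a verdict.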
