Conceptual Framework and Research Model


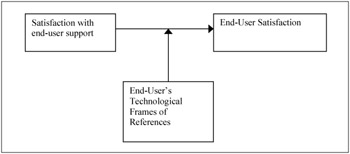

The objective of this research is to examine satisfied and dissatisfied end users in an organization to determine if they hold different technological frames of reference towards end user computing (EUC). Can their different frames of reference be used to explain their different satisfaction levels? What is the relationship between satisfaction with end user support and satisfaction with the overall end user computing environment? The research model is presented in Figure 1.

Figure 1: Research Model

Measuring EUC Satisfaction

Several different tools have been developed to assess end user computing satisfaction. Two validated instruments commonly used to measure satisfaction with end user computing are the Doll & Torkzadeh (1988) instrument and the Ives et al. (1983) instrument. These instruments can be used in one of two ways: as a straightforward measurement of the level of satisfaction within an organization, or as a tool to identify factors or determinants that can affect satisfaction. This study uses a variation of the Ives et al. (1983) instrument developed by Mirani & King (1994) that was specifically adapted for the EUC context (see Appendix A).

A review of the EUC satisfaction literature (summarized in Table 1) identified several factors that have been shown to significantly influence EUC satisfaction.

| Factor | Studies |
| --- | --- |
| End-User participation in design | Doll & Torkzadeh (1988), Montazemi (1988), Mirani & King (1994), Amoako-Gyampah & White (1993), McKeen et al. (1994), Lawrence & Low (1993), Guimaraes et al. (1992), Hartwick & Barki (1994), Baroudi et al. (1986), Yoon & Guimaraes (1995), McKeen & Guimaraes (1997), Park et al. (1993-1994), Saleem (1996), Choe (1998) |
| End-User computing self efficacy (i.e., the belief that one is able to master a particular behavior) | Henry & Stone (1994), Montazemi (1988) |
| Technical support | Buyukkurt & Vass (1993), Lederer & Spencer (1988), Rainer & Carr (1992), Bowman et al. (1993), Brancheau & Wetherbe (1988), Trauth & Cole (1992), Mirani & King (1994), Shaw et al. (2002) |
| Documentation | Torkzadeh & Doll (1993) |
| Management Support | Henry & Stone (1994), Guimaraes et al. (1992), Lawrence & Low (1993), Igbaria et al. (1995), Yoon & Guimaraes (1995) |
| Ease of System Use | Henry & Stone (1994), Davis (1989), Igbaria et al. (1995), Davis et al. (1989) |
| Previous computing experience | Henry & Stone (1994), Lehman & Mukthy (1989), Lawrence & Low (1993), Palvia (1996), Montazemi et al. (1996), Ryker & Nath (1995), Yoon & Guimaraes (1995), Thompson et al. (1994), Venkatesh (1999), Chan & Storey (1996), Igbaria et al. (1989), Blili et al. (1998) |
| End-user computing attitudes | Henry & Stone (1994), Lee et al. (1995), Hartwick & Barki (1994), Davis et al. (1989), Thompson et al. (1994), Satzinger & Olfman (1995), Aladwani (2002), Shaw et al. (2002) |
| Outcome Expectancy | Henry & Stone (1994) |
| Existence of Hot Line | |
| Existence of Information Center | Bergeron & Berube (1988) |
| Number of systems analysts | Montazemi (1988) |
| Level of requirements analysis | |
| Proportion of online applications | |
| Degree of decentralization | |
| Standards and guidelines | Mirani & King (1994) |
| Data provision support | |
| Purchasing relating support | |
| Variety of software supported | |
| Post development support | |
| Training on backup and security | |
| New software upgrades | Remenyi & Money (1994) |
| New hardware upgrades | |
| Low percentage of H/S downtime | |
| System response time | |
| Cost effectiveness of IS | |
| Ability of system to improve productivity | |
| Timeliness | Buyukkurt & Vass (1993) |
| EUC application characteristics | |
| User Expectations | Szajna & Scamell (1993), Lawrence & Low (1993), Davis (1989), Yoon & Guimaraes (1995), Guimaraes et al. (1996) |
| User Skills | Lehman et al. (1986), Lawrence & Low (1993), Palvia (1996), Lee et al. (1995), Yoon & Guimaraes (1995), Thompson et al. (1994), Guimaraes et al. (1996), Saarinen (1996), Igbaria et al. (1995) |

The results from end user satisfaction studies are quite variable: some studies support the influence of a given factor, while others find little or no support for the same factor. In a meta-analysis of 45 end user satisfaction studies, Mahmood et al. (2000) separate factors that affect satisfaction into three general categories: perceived benefits and convenience, user background and involvement, and organizational attitude and support. As listed in Table 1, end user support was shown to have significantly affected EUC satisfaction in eight studies. Because we are interested in examining the existing partnership between the IS department and end users in an organization, and given the significance of end user support highlighted by previous studies, we concentrate on the effect of end user support on EUC satisfaction or dissatisfaction.

Measuring the Effectiveness of EUC Support

As indicated in the section above, prior research has shown that end user support contributes to end user satisfaction (Buyukkurt & Vass, 1993; Lederer & Spencer, 1988; Rainer & Carr, 1992; Bowman et al., 1993; Brancheau & Wetherbe, 1988; Trauth & Cole, 1992; Mirani & King, 1994; Shaw et al., 2002). One measurement of support is service quality, which measures how well the service level delivered matches customer expectations (Lewis & Booms, 1983). Service quality is more difficult to measure than product quality, as it is a function of the recipient's perception of quality. For example, one end user may expect installation of a new software package to take an hour and be very happy that it takes 45 minutes; another may expect it to take 15 minutes and be unhappy at having to wait 30 minutes for the activity to be completed. Objectively, the second installation was completed faster, but the first end user was the more satisfied.

Service quality can be measured by comparing user expectations (or needs) with the perceived performance (or capabilities) of the department or unit providing the service. The difference between these two measurements is called the service quality gap (Parasuraman et al., 1985). Parasuraman's work resulted in a 45-item instrument, SERVQUAL, for assessing customer expectations and perceptions of the quality of service in retailing and service organizations. Service quality has been the most researched area of services marketing (Fisk et al., 1993).
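The gap calculation described above can be illustrated with a minimal sketch. It assumes each item is rated twice on the same numeric scale, once for expectation and once for perceived performance, and that the gap is taken as perception minus expectation, so that a negative value indicates a shortfall. The function name `gap_scores`, the four items, and the ratings are hypothetical and are not drawn from SERVQUAL or from the instrument used in this study.

```python
from statistics import mean


def gap_scores(expectations, perceptions):
    """Return the per-item gap: perception minus expectation.

    A negative gap means perceived service fell short of what was expected.
    """
    if len(expectations) != len(perceptions):
        raise ValueError("expectations and perceptions must have the same length")
    return [p - e for e, p in zip(expectations, perceptions)]


# Hypothetical ratings from one respondent on four illustrative items
# (assumed 7-point scale; these values are invented for the example).
expectations = [6, 7, 5, 6]
perceptions = [5, 7, 6, 3]

gaps = gap_scores(expectations, perceptions)
overall_gap = mean(gaps)  # unweighted average across items (an assumption)

print(gaps)         # [-1, 0, 1, -3]
print(overall_gap)  # -0.75
```

In this illustration the fourth item shows the largest shortfall, which is the kind of diagnostic reading a gap analysis is meant to support. Averaging the item gaps without weights is only one possible way to summarize them.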

Service quality measurements have been used in IS research as a measure of IS success. Recognizing that a major component of the product an IS department delivers has a service dimension, IS researchers have recently begun to look for ways to assess the quality of that service (Shaw et al., 2002). The gap analysis method was first used by Kim (1990) to measure the quality of service of an IS department. Pitt et al. (1995) used a 22-item version of the SERVQUAL instrument developed by Parasuraman et al. (1985) to test the instrument's usefulness in the MIS environment. They assessed several aspects of the instrument's validity, including content validity, reliability, convergent validity, nomological validity, and discriminant validity, and concluded that SERVQUAL could be used with confidence in the MIS environment. They also reported that the results of a service quality assessment were very useful not only for assessing current levels of service quality, but also as a diagnostic tool for determining actions to raise service quality (Pitt et al., 1995).

The instrument itself has been the subject of considerable debate (Brown et al., 1993; Parasuraman et al., 1993; Fisk et al., 1993; Van Dyke et al., 1997; Pitt et al., 1997). The debate centers on calculating differences between two possibly different constructs: expectations and perceptions of performance. To counteract the concerns surrounding the validity of the instrument in an IS context, Pitt et al. (1997) demonstrated that the service quality perception-expectation subtraction is rigorously grounded. See Kohlmeyer & Blanton (2000) for a complete discussion of the SERVQUAL debate. Researchers generally agree that the instrument is a good predictor of overall service quality and is applicable in the IS context (Fisk et al., 1993; Kettinger & Lee, 1997; Pitt et al., 1997). Remenyi & Money (1994) developed a service-quality instrument specifically for the EUC environment to establish the effectiveness of the computer service and to identify key problem areas with EUC. This instrument is used in the current study (see Appendix B).

Assessing End User's Frame of Reference

While measuring levels of end user satisfaction in an organization is relatively straightforward and has been heavily documented, measuring or assessing views and perspectives of individuals is not so straightforward. This field of research originated in the social sciences domain. However, over the past few years, these concepts have been applied to several other areas of research, and more recently to those areas related to the management and use of computers.

The cognitive sciences suggest that the world as it is experienced does not consist of events that are meaningful in and of themselves. Cognitions, interpretations, or ways of understanding events are all guided by what happened in the past (Schutz, 1970). When faced with an unknown situation or object (artifact), we automatically create our own interpretation of what that artifact is. Which particular past experiences are called up, and how those experiences are imposed onto a structure is what determines our individual cognitive structures (Gioia, 1986). Individual cognitive structures, or schemas, allow individuals to draw on knowledge and past experiences to help them make sense of information. They influence perception and memory, and can be both facilitating and constraining. Schemas can change over time; existing schemas can also inhibit the learning of new schemas (Markus & Zajonc, 1985).

A minimal amount of research has been conducted on the social cognitive perspectives that individuals hold towards technology. Bostrom & Heinen (1977) first introduced the concept of frame of reference when they suggested that some of the social problems encountered during the implementation of information systems were due to the frames of reference held by the systems designers. Later work by Dagwell & Weber (1983), Kumar & Bjorn-Anderson (1989) and Boland (1978, 1979) expanded on the earlier study by examining the influence of the designer's values and conceptual framework on the resultant systems. This earlier work became the basis for a group of studies investigating the social aspects of information technology that considered the perceptions and values of both the designers and users (Hirschheim & Klein, 1989; Kling & Iacono, 1984; Markus, 1984). While these studies proposed the idea that individuals have assumptions and expectations regarding technology, Orlikowski & Gash (1994) expanded on this concept to emphasize the social nature of technological frames, their content, and the implications of these frames on the development, implementation, and use of that technology.

Technological frame of reference was introduced by Orlikowski & Gash (1994) in a study that proposed a systematic approach for examining the underlying assumptions, expectations, and knowledge that people have about technology. They argue that an understanding of an individual's interpretation of a technology is critical to understanding their interaction with it. Of particular significance in Orlikowski & Gash's work is the discussion of the contextual dimension of frames. Members of a social group as a whole will come to have an understanding of particular technological artifacts, including not only knowledge about the particular technology, but also a local understanding of specific uses in a given setting. Earlier work by Noble (1986) and Pinch & Bijker (1987) had shown that technological frames could strongly influence the choices made regarding the design and use of technology, including adoption rates (Jurison, 2000).

In our paper, we are interested in assessing the technological frame of reference that users hold towards end user computing. In particular, we are interested in determining if satisfied and dissatisfied end users hold different views of the technology, and ultimately if these different views influence their satisfaction with that technology.
