What Happens When You Shoot Raw

| The image sensor in your digital camera is covered with a grid of tiny cells, one for each pixel that the sensor generates. Each cell, called a photosite, is covered with a small piece of light-sensitive metal. When light strikes the photosite, the metal releases a number of electrons that is directly proportional to the amount of light that struck the site. By measuring the voltage at each site, the sensor determines how much light struck that particular pixel. In this way, the sensor builds up a grid of grayscale pixels.

Your camera, of course, outputs a full-color image. So where does the color information come from? Each pixel on your camera's sensor has a colored filter over it, usually a red, green, or blue filter (some cameras use different colors, but the theory is the same no matter what colors are used; Figure 6.1). So each pixel on the sensor is able to register one primary color. To turn this mosaic of primary-color pixels into a full-color image, a process called demosaicing is employed.

Figure 6.1. The image sensor in your camera is covered with a grid of photosites, each of which is covered with a separate red, green, or blue filter.

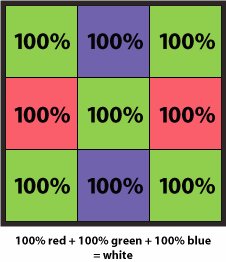
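To make the filter grid concrete, here is a minimal Python sketch of how a full-color scene turns into a grid of single values. It assumes an RGGB filter layout, which is only one possible arrangement, and the names `filter_color` and `mosaic` are invented for this illustration rather than taken from any camera or library.

```python
def filter_color(row, col):
    """Return the filter ('R', 'G', or 'B') over the photosite at (row, col).

    Assumption: an RGGB layout. Real sensors may use other layouts or colors.
    """
    if row % 2 == 0:
        return "R" if col % 2 == 0 else "G"
    return "G" if col % 2 == 0 else "B"


def mosaic(image):
    """Keep only the filtered channel at each photosite.

    `image` is a list of rows of (r, g, b) tuples. The result is a grid of
    single numbers, like the grayscale grid the sensor records.
    """
    channel = {"R": 0, "G": 1, "B": 2}
    return [
        [pixel[channel[filter_color(r, c)]] for c, pixel in enumerate(row)]
        for r, row in enumerate(image)
    ]


# A uniform 4x4 scene in which every point has the color (10, 20, 30).
scene = [[(10, 20, 30)] * 4 for _ in range(4)]
sensor_grid = mosaic(scene)
print(sensor_grid[0])  # [10, 20, 10, 20]
print(sensor_grid[1])  # [20, 30, 20, 30]
```

Each photosite keeps one number, so two-thirds of the color information at every position has to be estimated later.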

Demosaicing is an interpolation process that calculates the color of any one pixel by examining all of the surrounding pixels. For example, say you're trying to determine the color of a pixel that has a green filter over it and a current value of 255 (the maximum value a pixel can have). You examine the surrounding pixels and discover that the red pixels to the left and right also have values of 255, as do the blue pixels above and below and the green pixels at the corners. From this analysis, it's pretty safe to say that the pixel in question is white, because an equal mix of full red, green, and blue is white (Figure 6.2).

Figure 6.2. Though each photosite is covered with a filter of a single color, your image sensor can interpolate the true color of each pixel by examining all surrounding pixels.
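The worked example above can be sketched in a few lines of Python. This is plain neighbor averaging, chosen for clarity. It is not the algorithm any particular camera or raw converter uses. The RGGB layout and the function names `filter_color` and `demosaic_pixel` are assumptions made for this illustration.

```python
def filter_color(row, col):
    """Filter over the photosite at (row, col), assuming an RGGB layout."""
    if row % 2 == 0:
        return "R" if col % 2 == 0 else "G"
    return "G" if col % 2 == 0 else "B"


def demosaic_pixel(raw, row, col):
    """Estimate (r, g, b) for one photosite from its 3x3 neighborhood.

    The photosite's own reading is kept for its filter color; the other
    two colors are the average of the neighbors that carry that filter.
    """
    own = filter_color(row, col)
    sums = {"R": 0, "G": 0, "B": 0}
    counts = {"R": 0, "G": 0, "B": 0}
    for r in range(row - 1, row + 2):
        for c in range(col - 1, col + 2):
            inside = 0 <= r < len(raw) and 0 <= c < len(raw[0])
            if inside and (r, c) != (row, col):
                color = filter_color(r, c)
                sums[color] += raw[r][c]
                counts[color] += 1
    estimate = {k: round(sums[k] / counts[k]) if counts[k] else 0 for k in "RGB"}
    estimate[own] = raw[row][col]  # trust the direct measurement
    return (estimate["R"], estimate["G"], estimate["B"])


# Every photosite reads 255, as in the example: a green-filtered pixel
# with red neighbors left and right and blue neighbors above and below.
raw = [[255] * 5 for _ in range(5)]
print(filter_color(2, 1))         # G
print(demosaic_pixel(raw, 2, 1))  # (255, 255, 255)
```

Full red, green, and blue together give white, which matches the conclusion reached by inspection.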

Of course, there is a possibility that your pixel is supposed to be pure green, meaning that your subject matter included a single tiny spot of green amid a field of white. However, the pixels on an image sensor are very, very small, so it's unlikely that you would ever have a single pixel of one isolated color. Just to be safe, though, a special low-pass filter is placed between your camera's lens and its sensor. This filter blurs your image ever so slightly, so that any individual color pixels will smear into their neighbors, preventing incorrect interpolation of a single pixel or single row of pixels.

The example that we've been discussing is very simple. Obviously, trying to interpolate every subtle shade of color is a complex process, and demosaicing accuracy is one of the factors that can make one digital camera yield better images than another. Thus, demosaicing algorithms are carefully guarded trade secrets.

When you shoot in JPEG mode, one of the first things your camera does with its captured image data is demosaic it to generate a full-color image. When you shoot in raw format, however, no demosaicing is performed by the camera. Instead, the raw data that your image sensor captures is written directly to your camera's storage card. Demosaicing is then performed in your raw conversion software, such as Aperture.

While most decent cameras these days do a very good job of demosaicing, it is a complex, computationally intensive task. Cameras don't have a lot of time to spend on this type of computation, because they need to be ready to shoot another image as quickly as possible. Because of this, some in-camera demosaicing algorithms take a few shortcuts that can sometimes affect image quality. Since your Mac doesn't have to rush through things, it can run slightly more sophisticated demosaicing algorithms. This is one of the reasons that raw images can yield better quality than JPEG images.
What's more, raw images can be processed and stored as 16-bit images, allowing you to preserve all of the color data that your camera originally captured. (Of course, they can also be converted to JPEG files if you ultimately need those.)

Note: Some cameras can output TIFF files. Because these TIFF files are typically written uncompressed, they are free of the compression artifacts you can find in JPEG files. However, TIFF files are already demosaiced, so they contain three full channels of data. A raw file has only a single channel of luminance information. Consequently, raw files are usually much smaller than TIFF files. To save space, some cameras also apply lossless compression to their raw files before writing them to the card.
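The size difference between raw and TIFF files comes down to simple arithmetic, sketched below in Python. The numbers are assumptions for illustration only: a hypothetical 10-megapixel sensor, 12 bits per raw sample, and 8 bits per channel in the TIFF. The estimate ignores headers, metadata, and any compression.

```python
def uncompressed_bytes(pixels, channels, bits_per_sample):
    """Rough size of uncompressed pixel data, ignoring headers and metadata."""
    return pixels * channels * bits_per_sample // 8


pixels = 10_000_000  # hypothetical 10-megapixel sensor

# Raw: one value per photosite (12 bits per sample is an assumption).
raw_size = uncompressed_bytes(pixels, channels=1, bits_per_sample=12)

# TIFF: three full channels after demosaicing (8 bits per channel assumed).
tiff_size = uncompressed_bytes(pixels, channels=3, bits_per_sample=8)

print(raw_size)   # 15000000
print(tiff_size)  # 30000000
```

Under these assumptions the TIFF is twice the size of the raw file, and a 16-bit-per-channel TIFF would be four times the size.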

|