Introduction


Background and Context

One of the key characteristics of our information economy is the requirement for lifelong learning. Industrial and occupational changes, global competition, and the explosion of information technologies have highlighted the need for skills, knowledge, and training. Focused on attracting and retaining staff, companies have placed an emphasis on training to bolster soft and hard skills to meet new corporate challenges. In many cases, career training has been placed in the hands of employees, with the understanding that employees must be able to keep ahead of technological change and perform innovative problem solving. One way of meeting the demand for these new skills (especially in information technology) is through online distance learning, which also offers the potential for continuous learning. Moreover, distance learning addresses the rising costs of tuition, the shortage of qualified training staff, the high cost of campus maintenance, and the need to reach larger learner populations. Key trends for corporate distance learning, germane to privacy and trust, include the following (Hodgins, 2000):

- Learners may access courseware using many different computing devices, from different locations, via different networks (i.e., the distance-learning system is distributed).
- Distance-learning technology will overtake classroom training in meeting the need for “know what” and “know how” training.
- Distance learning will offer more user personalization, with courseware changing dynamically based on learner preferences or needs. In other words, future distance-learning applications will be intelligent and adaptive.
- Corporate training is becoming knowledge management, reflecting a general trend in the digital economy. With knowledge management, employee competencies are assets that increase in value through training. This trend has driven the production of training that is task-specific rather than generic. Changes in corporate strategic direction are often reflected in changes to distance-learning requirements, prompted by the need to train staff for the new direction.
- Distance learning is moving toward open standards.

Most distance-learning innovations have focused on course development and delivery, with little or no consideration given to privacy and security as required elements. However, the trends above make clear that there will be a growing need for high levels of confidentiality, privacy, and trust in distance-learning applications, and that security technologies must be put in place to meet these needs. Consumers are increasingly aware of their privacy rights, and various jurisdictions have recently introduced new privacy legislation. Confidentiality is also vital for information about the distance-learning activities undertaken by corporate staff. While corporations may advertise their approaches to skills and knowledge development in order to attract staff, they do not want competitors to learn the details of the training provided, since this could compromise their strategic directions.

Privacy, Trust, and Security in Distance Learning

We explain here what we mean by “privacy,” “trust,” and “security” in the context of distance learning. A learner’s “privacy” represents the conditions under which he or she is willing to share personal and other valued information with others. Thus, information is private where conditions exist for its sharing, and privacy is violated where the underlying conditions for sharing are violated. A learner’s “trust” is his or her level of confidence in the ability of the distance-learning system to comply with the conditions the learner has stated for sharing information (privacy preferences), to function as expected for distance learning, and to act in the learner’s best interest when the learner is vulnerable. “Security” refers to the electronic means (e.g., encrypted traffic) used by a distance-learning system to comply with the learner’s privacy preferences and to function correctly without being compromised by an attack.

The focus of this chapter is on a distance learner’s privacy and trust: the requirements for privacy, the issues faced in providing for privacy, the standards and technologies available (security) for ensuring that privacy preferences are followed, and the technologies available (human factors design techniques) that promote trust. This means, for example, that we are not concerned with issues such as learner authentication, or even data integrity, except where they concern the integrity of private data.

Agent-Supported Distributed Learning (ADL)

By agent-supported distributed learning, we mean a distance-learning system that has the following characteristics:

- Distance learning is carried out via a network (including a wireless network).
- The components of the distance-learning system are distributed across a network.
- Software agents act on behalf of the learner or the provider of the distance-learning system to provide or enhance functionality, e.g., retrieval of learning material. These agents may be mobile or stationary, and they may be autonomous or dependent on some other party for their actions.

Only a few authors have written on the use of agents for distance learning.

Santos and Rodriguez (2002) discussed an agent architecture that provides knowledge-based facilities for distance education. Their approach takes advantage of recent standardization activities to integrate information from different sources (in standardized formats) in order to improve the learning process, both by detecting learner problems and by recommending new content better suited to the learner’s skills and abilities. They accomplish this with a suite of agents, such as a “learning content agent,” a “catalog agent,” a “competency agent,” a “certification agent,” a “profile agent,” and a “learner agent.”

Rosi et al. (2002) looked at the application of the Semantic Web together with personal agents in distance education. They saw the following possibilities of such a combination: (a) enable sharing of knowledge bases regardless of how the information is presented, (b) allow access to services of other information systems that are offered through the Semantic Web, and (c) allow reuse of already stored data without the need to learn the relations and terminology of the knowledge base creator.

Koyama et al. (2001) proposed the use of a multifunctional agent for distance learning that would collect the learner’s learning material requirements, perform management, do information analysis, determine the learner’s understanding of a particular domain, handle the teaching material, and communicate with the learners. The distance-learning system would be built on the WWW, and this agent would reside in a Web server. Koyama et al. also proposed a fairly elaborate “judgment algorithm” that monitors the learner’s progress and learning time and does learner testing in conjunction with learner requirements, learner personal history, and the existence of “re-learning items” in order to decide appropriate learning materials for the learner.

Finally, Cristea and Okamoto (2000) described an agent-managed adaptive distance-learning system for teaching English that adapts over time to a learner’s needs and preferences in order to improve future learning performance. They use two agents, a global agent (GA) and a personal agent (PA), to manage two student models, a global student model (GS) and an individual student model (IS), respectively. The GS contains global student information, such as common mistakes, favorite pages, favorite lessons, search patterns, and so on. The IS contains personal student information, such as the last page accessed, grades for all tests taken, mistakes and their frequency, the order of access of texts inside each lesson, and so on. The PA manages the user information and extracts from it useful material for user guidance. The PA also requests information from the GA and collaborates with other PAs to obtain more specific information (e.g., what material other learners have used in a similar situation) than is available from the GA. In short, the PA acts as a personal assistant, guiding the learner on what material to study. The GA averages information from several users to fill in the global student model. Its role is to give the PAs condensed information that might show trends and patterns. The GA cannot contact the learner directly unless the PA requests it.
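To make the division of labor between the two student models concrete, the following Python sketch shows one way a global agent might condense several individual student models into a global one. It is not Cristea and Okamoto’s implementation: the dictionary fields (`mistakes`, `grades`), the `top_n` parameter, and the function name `build_global_model` are hypothetical, chosen only to mirror the IS contents listed above.

```python
from collections import Counter
from statistics import mean


def build_global_model(individual_models, top_n=3):
    """Condense individual student models (IS) into a global model (GS).

    Each IS is assumed (hypothetically) to be a dict with:
      - "mistakes": {mistake description: frequency}
      - "grades": list of numeric test grades
    """
    mistake_counts = Counter()
    all_grades = []
    for model in individual_models:
        # Counter.update with a mapping adds the frequencies together.
        mistake_counts.update(model["mistakes"])
        all_grades.extend(model["grades"])
    return {
        # The most frequent mistakes across all learners.
        "common_mistakes": mistake_counts.most_common(top_n),
        # The average grade over every test taken by every learner.
        "mean_grade": mean(all_grades) if all_grades else None,
    }


learner_a = {"mistakes": {"article omission": 3, "verb tense": 1}, "grades": [70, 80]}
learner_b = {"mistakes": {"article omission": 2, "preposition": 4}, "grades": [90]}
gs = build_global_model([learner_a, learner_b], top_n=2)
print(gs)
```

Only aggregate counts leave the individual models in this sketch, which is in keeping with the GA’s role of passing condensed trends rather than per-learner details to the PAs.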

It is noteworthy that privacy and trust issues abound in the systems described in the papers above, yet none of these authors even mentioned such issues, let alone considered them. We refer the interested reader to El-Khatib et al. (2003), who discussed privacy, security, and trust issues in e-learning from the perspectives of standards, requirements, and technology.

As the papers above show, apart from El-Khatib et al. (2003), each author proposes a different agent architecture for agent-supported distance learning. We show here how a standard set of agents for distance learning can be derived from IEEE P1484.1/D9, the Learning Technology Systems Architecture (LTSA) (IEEE LTSC, 2001).

LTSA-Based Architectural Model for ADL

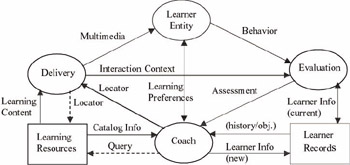

The LTSA prescribes processes, storage areas, and information flows for elearning. Figure 1 shows the relationships between these elements. The solid arrows represent data flows; the dashed arrows represent control flows. The overall operation is as follows: Learning Preferences, including the learning styles, strategies, methods, etc., are initially passed from the learner entity to the Coach process; the Coach reviews the set of incoming information, such as performance history, future objectives, and searches Learning Resources, via Query, for appropriate learning content; the Coach extracts Locators for the content from the Catalog Info and passes them to Delivery, which uses them to retrieve the content for delivery to the learner as multimedia; multimedia represents learning content, to which the learner exhibits a certain behavior; this behavior is evaluated and results in an Assessment or Learner Information such as performance; Learner Information is stored in Learner

Figure 1: LTSA system components

Records; and Interaction Context provides the context used to interpret the learner’s behavior.
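The cycle described above can be sketched in Python as one pass through the LTSA processes. This is a minimal illustration under stated assumptions, not part of the LTSA specification: the class and function names, the topic-keyed catalog, and the 0.5 pass mark are hypothetical, and the Interaction Context is omitted for brevity.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class LearningResources:
    """Hypothetical stand-in for the Learning Resources store."""
    catalog: dict  # Catalog Info: topic -> locator
    content: dict  # locator -> learning content

    def query(self, topic: str) -> Optional[str]:
        # Query flow: look up the locator for content on a topic.
        return self.catalog.get(topic)


@dataclass
class LearnerRecords:
    """Hypothetical stand-in for the Learner Records store."""
    history: list = field(default_factory=list)


def coach(preferences: dict, records: LearnerRecords, resources: LearningResources) -> list:
    """Coach process: choose locators for preferred topics not yet passed."""
    passed = {info["topic"] for info in records.history if info["passed"]}
    locators = []
    for topic in preferences["topics"]:
        if topic in passed:
            continue  # performance history shows this topic is done
        locator = resources.query(topic)
        if locator is not None:
            locators.append(locator)
    return locators


def delivery(locators: list, resources: LearningResources) -> list:
    """Delivery process: use locators to retrieve content for presentation."""
    return [resources.content[loc] for loc in locators]


def evaluation(behavior: dict, pass_mark: float = 0.5) -> dict:
    """Evaluation process: turn observed behavior into Learner Information."""
    return {"topic": behavior["topic"], "passed": behavior["score"] >= pass_mark}


resources = LearningResources(
    catalog={"requirements": "loc-req", "design": "loc-des"},
    content={"loc-req": "Requirements basics", "loc-des": "Design basics"},
)
records = LearnerRecords()
preferences = {"topics": ["requirements", "design"]}

first = delivery(coach(preferences, records, resources), resources)
# The learner completes the requirements module; the result goes to Learner Records.
records.history.append(evaluation({"topic": "requirements", "score": 0.8}))
second = delivery(coach(preferences, records, resources), resources)
```

On the second pass the Coach skips the topic that Learner Records now mark as passed, so only the design material is delivered.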

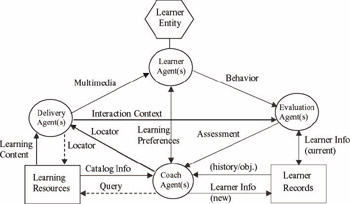

An agent architecture for distance learning can be derived from this model simply by mapping each LTSA process to one or more agents that are then responsible for implementing the process, mapping the information flows (both data and control) to messaging flows, and letting the storage areas stay the same. This mapping is defined by the first two columns of Table 1. The third column of Table 1 shows the agent owners, the parties on whose behalf the agents act.

| LTSA Components | LTSA-Based Agent Architecture | Agent Owners |
|---|---|---|
| Learner entity | Learner agent(s) | Learner entity |
| Delivery process | Delivery agent(s) | Learner entity |
| Evaluation process | Evaluation agent(s) | Distance-learning system |
| Coach process | Coach agent(s) | Distance-learning system |
| Information flow (both data and control) | Message flow | |
| Storage areas | Storage areas | |
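The mapping in Table 1 can be captured as data, with each LTSA information flow recast as a message between the corresponding agents. The Python sketch below is a hypothetical illustration: the `Message` fields and the `flow_to_message` helper are assumptions, not part of the LTSA, and storage areas are left out because the mapping keeps them unchanged.

```python
from dataclasses import dataclass

# Table 1 expressed as data: LTSA process -> (agent type, agent owner).
LTSA_TO_AGENT = {
    "learner entity": ("learner agent", "learner entity"),
    "delivery process": ("delivery agent", "learner entity"),
    "evaluation process": ("evaluation agent", "distance-learning system"),
    "coach process": ("coach agent", "distance-learning system"),
}


@dataclass(frozen=True)
class Message:
    """An LTSA information flow recast as an agent message (hypothetical format)."""
    sender: str    # agent type that sends the message
    receiver: str  # agent type that receives it
    flow: str      # name of the LTSA flow being carried
    kind: str      # "data" (solid arrow) or "control" (dashed arrow)
    payload: dict


def flow_to_message(source: str, target: str, flow: str, kind: str, payload: dict) -> Message:
    """Map a flow between two LTSA processes to a message between their agents."""
    if kind not in ("data", "control"):
        raise ValueError(f"unknown flow kind: {kind}")
    sender, _sender_owner = LTSA_TO_AGENT[source]
    receiver, _receiver_owner = LTSA_TO_AGENT[target]
    return Message(sender, receiver, flow, kind, payload)


msg = flow_to_message(
    "learner entity", "coach process", "learning preferences", "data",
    {"learning style": "visual"},
)
```

Keeping the owner next to each agent type makes it straightforward to check, for any message, on whose behalf the sending and receiving agents act.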

It may be necessary or convenient to have more than one agent implement a process, for improved modularity, performance, or system organization. For example, a single delivery agent may become overloaded if it has to serve too many learners. Instead, several delivery agents could be employed, each serving a certain number of learners (determined through analysis or experimentation); these agents could also be distributed to reduce communication bottlenecks. As another example, improved modularity may be obtained by using several delivery agents, each specialized to retrieve specific material. If the learning material concerns software engineering, such a division of labor could be one agent for retrieving material on requirements specification, another for design specification, and a third for testing. Figure 2 illustrates the LTSA-based agent architecture.

Figure 2: LTSA-based agent architecture for distance learning
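Both ways of splitting the delivery process across agents can be sketched briefly in Python. The capacity value, agent names, and function names below are hypothetical; in practice the per-agent capacity would come from the analysis or experimentation mentioned above.

```python
def assign_learners(learners: list, capacity: int) -> list:
    """Split learners into groups of at most `capacity`, one group per delivery agent."""
    if capacity < 1:
        raise ValueError("capacity must be at least 1")
    return [learners[i:i + capacity] for i in range(0, len(learners), capacity)]


# Hypothetical specialist delivery agents for software-engineering material.
SPECIALISTS = {
    "requirements specification": "delivery-agent-requirements",
    "design specification": "delivery-agent-design",
    "testing": "delivery-agent-testing",
}


def route(topic: str) -> str:
    """Send a retrieval request to the delivery agent specialized for the topic."""
    return SPECIALISTS[topic]


groups = assign_learners(["ann", "bo", "cy", "di", "ed"], capacity=2)
agent_for_tests = route("testing")
```

Load-based splitting and topic-based specialization are independent choices; a system could, for instance, run several instances of each specialist agent, each serving its own group of learners.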

Scenarios

To further illustrate the concept of agent-supported distance learning, we list here some typical scenarios, or use cases, of an agent-supported distance-learning system. The first three scenarios are from Santos and Rodriguez (2002), but use the agent types derived from the LTSA:

- A coach agent may ask an evaluation agent for the previous week’s learner monitoring information. The evaluation agent searches for and retrieves this information and gives it to the coach agent, which analyzes it to determine whether the learner needs help.
- A coach agent may ask a learner agent for the learner’s preference information in order to send material availability notifications to delivery agents for forwarding to learner agents.
- Coach agents can request information on learner preferences from learner agents, and on learner skills and attained certifications from other coach agents. Using this information, the coach agents can then recommend to the learner new skills in which the learner might be interested, in order to achieve a new certification.
- A learner agent may ask a coach agent for the availability of specific learning material. The coach agent then asks the learner agent for the learner’s preferences and uses them, together with the information on the specific learning material, to query the learning resources for the availability of the material.
- An evaluation agent checks with a learner agent to determine whether it may go ahead with monitoring the learner’s performance on specific learning material. The learner agent checks its stored learner privacy preferences and replies to the evaluation agent with the answer.
- A coach agent queries learning resources for the suppliers of particular learning material. Using this information, the coach agent then queries the reputation or trustworthiness of the suppliers (assumed to be contained in learning resources) and rejects learning material from untrustworthy suppliers.
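The fifth use case can be sketched as a deny-by-default lookup in the learner agent. The preference fields, course identifiers such as `SE-101`, and class names are hypothetical; the point illustrated is only that the learner agent answers from stored preferences and refuses anything the learner has not explicitly permitted.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PrivacyPreference:
    """One condition under which the learner agrees to share information (hypothetical schema)."""
    requester: str        # agent type allowed to act, e.g. "evaluation agent"
    activity: str         # permitted activity, e.g. "monitor performance"
    materials: frozenset  # learning materials the permission covers


class LearnerAgent:
    def __init__(self, preferences):
        self.preferences = list(preferences)

    def permits(self, requester: str, activity: str, material: str) -> bool:
        """Answer a request by checking the stored privacy preferences.

        Anything not explicitly permitted is refused (deny by default).
        """
        return any(
            p.requester == requester and p.activity == activity and material in p.materials
            for p in self.preferences
        )


learner = LearnerAgent([
    PrivacyPreference("evaluation agent", "monitor performance", frozenset({"SE-101"})),
])
allowed = learner.permits("evaluation agent", "monitor performance", "SE-101")
refused = learner.permits("evaluation agent", "monitor performance", "SE-102")
```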

The last two use cases constitute a foretaste of how agents can be used to maintain privacy and guard against disreputable content suppliers. We will deal with solutions for privacy and trust in the following sections.
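The coach agent’s check in the sixth use case amounts to a filter over the query results. In the Python sketch below, the numeric reputation scale, the 0.7 threshold, the supplier names, and the choice to reject suppliers with no recorded reputation are all assumptions made for illustration.

```python
def filter_by_reputation(materials, reputation, threshold=0.7):
    """Keep only materials whose supplier meets the reputation threshold.

    `reputation` maps supplier -> score in [0, 1]; suppliers with no
    recorded reputation are treated as untrustworthy (score 0.0).
    """
    return [m for m in materials if reputation.get(m["supplier"], 0.0) >= threshold]


materials = [
    {"title": "UML basics", "supplier": "supplier-a"},
    {"title": "Unit testing", "supplier": "supplier-b"},
    {"title": "Requirements primer", "supplier": "supplier-c"},
]
# Hypothetical reputation scores, assumed to be stored in learning resources.
reputation = {"supplier-a": 0.9, "supplier-b": 0.3}
accepted = filter_by_reputation(materials, reputation)
```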

Challenges and Issues

We highlight here some of the challenges and issues associated with satisfying the requirements for privacy and trust in agent-supported distance learning. The challenges and issues arise from first identifying the requirements and then looking at how to satisfy the requirements. Requirements can come from privacy legislation, standards, or popular usage (as in de facto standards). We examine the first two of these in the section entitled “Privacy Legislation and Standards.”

Challenges and issues arise from looking at how to satisfy the privacy requirements, as set out in the Privacy Principles, within an agent-supported distance-learning system. For example, how can agents be used to satisfy the Limiting Collection Principle? How can agents be used to satisfy the Safeguards Principle? Other issues arise from the learner’s trust point of view and involve the perceived trustworthiness of the system (see the section entitled “Promoting Trust in ADL Systems”). For example, how can a learner be assured that specific requested learning content is reliable? How can a learner be convinced that his or her privacy is actually being protected according to his or her expressed wishes? For the learner to fully benefit from an ADL system, he or she must accept and trust the system. Therefore, it is paramount that answers be found to these questions.

State of Privacy and Trust Research for ADL

It is safe to say that privacy and trust research for ADL is still in its infancy. Privacy and trust issues for distributed systems in general have only recently started to receive attention from researchers, and ADL is a distributed system. In addition, a search of both the IEEE and ACM databases in April 2003 found only about half a dozen papers on ADL alone (four of which are described above) and no papers on privacy and trust for ADL. Fortunately, some privacy and trust research for other distributed systems (e.g., online banking) may be applied to ADL. Indeed, we will mostly follow this approach in the sections below.



Pages: 121