ADO.NET

| | ||

| | | |

| | ||

The final technology we need to discuss here is ADO.NET , the core data-access technology provided by the .NET Framework. It allows us to interact with relational databases such as SQL Server or Oracle, and provides XML support that allows us to treat XML data as a data source in its own right.

Obviously, ADO.NET is a very large and complex technology that we can't cover in a comprehensive manner in this chapter. However, there are some key concepts around ADO.NET that we do need to deal with, along with some of the techniques that we'll be using through the rest of the book.

ADO.NET Architecture

To start with, it's important to understand the basic architecture of ADO.NET itself, so that we can see how best to use it when manipulating data in a distributed environment. ADO.NET is designed following a 2- tier model.

Data Providers

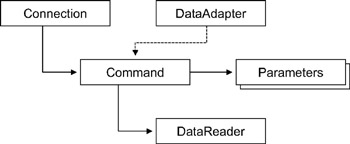

At the bottom tier, ADO.NET provides interaction with databases via data providers . A data provider allows us to connect to a data source, and to execute commands that add, update, delete, or read data. To retrieve data, data providers include a data-reader object. The objects that compose a data provider are shown in Figure 3-19.

Figure 3-19: ADO.NET data-provider classes

Microsoft supplies data providers that interact with various database engines, including the following:

-

Microsoft SQL Server

-

Oracle

-

Databases with an OLEDB driver that's supported by .NET

-

Databases with an ODBC driver that's supported by .NET

The SQL Server and Oracle providers are very fast, because they interact directly with the database. Be aware that the OLEDB and ODBC providers rely on OLEDB and ODBC to do the actual data interaction, so they involve an extra layer of overhead in order to reach the underlying database.

The DataSet Object

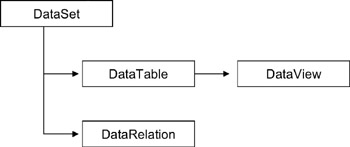

A data provider only provides interaction with the data source, not any form of data representation . ADO.NET provides a separate tier in order to represent data: the DataSet . The DataSet and its related objects provide a powerful and abstract representation of tabular data as shown in Figure 3-20.

Figure 3-20: ADO.NET DataSet and related objects

Each DataSet can contain multiple DataTable objects that can be related to each other via DataRelation objects. Each DataTable object represents a set of tabular data. That data can be accessed directly from the DataTable object, or we can construct DataView objects in order to provide different views of that data based on filters, sorts, and so forth.

The DataSet is entirely decoupled from any concept of a data source or provider. If we wish, we can use a DataSet by itself to represent any tabular datait can be thought of as an in-memory scratch pad for data.

Data Provider and DataSet Interaction

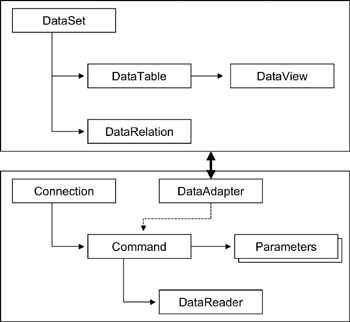

The link between a DataSet and a data provider is the data-adapter object, which understands how to get data into and out of a DataSet . A data adapter is linked to four command objectsone each for reading, updating, adding, and deleting data as shown in Figure 3-21.

Figure 3-21: Relationship between ADO.NET data providers and DataSet objects

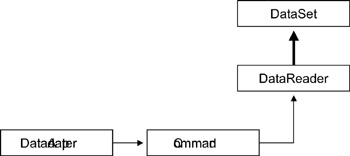

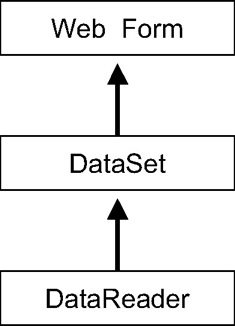

When we load a DataSet with data from a data provider, the data adapter invokes the appropriate command object to retrieve the data, and then uses a data-reader object to pump the data from the data source into a DataTable object within the DataSet . In short, this means that a DataSet is populated via a data-reader object as shown in Figure 3-22.

Figure 3-22: ADO.NET objects used to populate a DataSet

The separation between the interaction with a data source and the representation of the data in memory is very powerful. It means that the DataSet is totally disconnected from any data source. We can pass the DataSet object around a network, from machine to machine, without worrying about database connections and so forth. It also means, however, that there's some obvious overhead involved. Not only does the DataSet end up containing our data, but it also contains a lot of metadata that describes the data types, constraints, and relationships of our data. Depending on exactly what we intend to do with the data, the DataSet may or may not be the right choice.

Consider a typical web application that generates a display of data in the browser. We could build such a page using a DataSet the data reader will copy the data into the DataSet , and we can then copy the data from the DataSet into our page as shown in Figure 3-23.

Figure 3-23: Data flowing through a DataReader to a DataSet to a web page

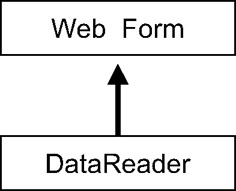

Alternatively, we could write our code to read the data directly from the data reader and put it into our page, thereby avoiding an entire data-copy operation as shown in Figure 3-24.

Figure 3-24: Data flowing from a DataReader directly into a web page

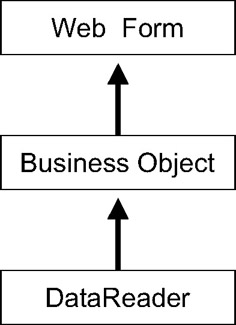

We can skip this extra copy step when we're building object-oriented systems as well. It may not make sense to copy the data into a DataSet . It may only make sense to turn around and copy that data into a set of objects. As shown in Figure 3-25, it will often make more sense to copy the data directly from the data reader into our objects.

Figure 3-25: Data flowing from a DataReader into a business object into the web page

The flexibility inherent in the architecture of ADO.NET is powerful, and we'll exploit it as we design our overall architecture in this book.

Basic Use of ADO.NET

Because we'll be making use of ADO.NET to interact with our data-storage tier throughout this book, it's worth spending some time briefly examining the basic use of it. First, we need to reference the assembly containing the appropriate data provider, and then import the latter's namespace to keep our code simple.

| Note | If we're using SQL Server or an OLEDB database, then most project types in VS .NET already reference the appropriate assembly. If we're using Oracle or an ODBC database, then we'll need to add a reference manually. |

Throughout this book, we'll be using Microsoft SQL Server 2000, but while we're developing we may also choose to use MSDE, which is a subset of SQL Server 2000. MSDE is included with some versions of VS .NET and is also downloadable from MSDN. The following URLs contain information about licensing and downloading MSDE:

-

http://support.microsoft.com/default.aspx?scid=KB;EN-US;Q324998

-

http://www.microsoft.com/sql/howtobuy/msdeuse.asp?LN=en-us&gssnb=1

The data provider for SQL Server 2000 (and therefore for MSDE) is in System.Data.dll , and its namespace is System.Data.SqlClient . If it isn't already referenced in our project, we'll need to use the Add References dialog box to add a reference to the assembly, and then add a using statement to the top of our code as follows :

using System.Data.SqlClient;

Connecting to a Database

Connecting to a SQL Server database involves creating and initializing a SqlConnection object, and then calling its Open () method. When we're done with the connection, we should always call its Close() or Dispose() methods . To ensure that the Close() method is always called, we should employ a try finally block or the using statement.

Initializing the SqlConnection object can be done via its properties, or by providing a connection string. The latter is the most common approach, mainly because it allows us to place the string in a configuration file, a registry entry, or some other location where we can change it without having to recompile the application.

| Note | In .NET, the recommended location for database connection strings is in the application configuration file. That is the technique we'll be using throughout this book. |

At a minimum, the connection string consists of the server name, the database name , and security information. For instance, a connection string for using Windows' integrated security to access the sample pubs database would look something like this:

data source= myserver ;initial catalog=pubs;integrated security=SSPI

Alternatively, we can provide a user ID and a password as follows:

data source= myserver ;initial catalog=pubs;user=myuser;password=mypassword

ADO.NET data providers implement database connection pooling inherently , so it's always available to us. However, database connections can only be pooled if they're identical. This means that all connections to the same database with the exactly the same connection string are pooled, so if we use integrated security in an environment where all code runs under the same user account, then we'll get connection pooling. However, if we use integrated security in an environment where our code runs under different user accounts, we'll get little or no pooling.

The key lies in whether the server impersonates the client's identity. For instance, if each client connects to the server anonymously, then all our server-side code will run under one identity for all users. This means that integrated security will result in all the database connections being under that identity, and so we'll get pooling. On the other hand, if all our clients connect to the server using integrated security, then the server-side code will run under the same account as each client. In this case, integrated security will result in database connections being established under the identities of the various users. The result will be that we'll have little or no database connection pooling.

Once we've decided on our database connection string, we can open the connection. The following code illustrates how this is done, and includes a using block in order to ensure that the connection is disposed (and thus closed), whether or not we encounter any errors:

string dbConn = @"data source=myserver;initial catalog=pubs;integrated security=SSPI"; using(SqlConnection cn = new SqlConnection(dbConn)) { cn.Open(); // Do database interaction here } We'll use this basic structure throughout our code as we interact with the database.

Reading Data with a Data Reader

Earlier, we discussed how data-adapter objects use a data reader to populate a DataSet object. This is great when we're building a datacentric application where we'll be making use of the DataSet object. However, if we're trying to populate a set of object-oriented business objects, we'll probably want to use a data reader directly. This will provide the best performance when working with objects.

A data-reader object is generated from a command object. The command object will execute either a stored procedure that returns data, or a text SQL SELECT statement. It's preferable to use stored procedures for this purpose, because they provide better performance and also keep the data-storage tier better separated from the data-access tier within our logical model, as we discussed in Chapter 1.

In either case, the basic process is to open a database connection, create and initialize a command object, and then execute the command object to create a data-reader object. We can then retrieve the data from the data reader and use it as we see fit.

Using Dynamic SQL

The following code illustrates the basic flow of operation when using a text SQL statement:

string dbConn = @"data source=myserver;initial catalog=pubs;integrated security=SSPI"; using(SqlConnection cn = new SqlConnection(dbConn)) { cn.Open(); string dbConn = @"data source=ineroth;initial catalog=pubs;integrated security=SSPI"; using(SqlConnection cn = new SqlConnection(dbConn)) { cn.Open(); using(SqlCommand cm= cn.CreateCommand()) { cm.CommandText = "SELECT au_lname FROM authors WHERE au_lname LIKE @name"; cm.Parameters.Add("@name", myCriteria); using(SqlDataReader dr = cm.ExecuteReader()) { // Read the data here } } } Notice how we use the command object's Parameters collection to add a parameter for use within the SQL statement. Although we could have used string concatenation to insert the parameter value directly, this technique is far superior . By using the parameter approach, we avoid having to worry about formatting the value properly based on data typethat's handled for us automatically. Also, this approach means that we can switch to calling a stored procedure with very little change to our code. Perhaps most importantly, this technique automatically guards against SQL injection attacks.

| Note | It's almost always preferable to call a stored procedure in a production application. That said, it's often faster and easier to debug if we use dynamic SQL statementsthey make it easier for the developer to experiment, and to tweak the statement to get everything right. The SQL can be placed in a stored procedure prior to going into production. Be aware that there are differences between dynamic SQL and the SQL you may put into your stored procedures, so there will be some work involved in converting to stored procedures and debugging them and the application. Using formal parameters in our dynamic SQL statements, as shown earlier, helps to minimize this effort. |

Calling a Stored Procedure

The code to call a stored procedure is similar. We would have a stored procedure similar to this:

CREATE PROCEDURE getAuthorName (@Name varchar(20)) AS SELECT au_lname FROM authors WHERE Name=@Name; RETURN

We could call it with the following code:

string dbConn = @"data source=ineroth;initial catalog=pubs;integrated security=SSPI"; using(SqlConnection cn = new SqlConnection(dbConn)) { cn.Open(); using(SqlCommand cm= cn.CreateCommand()) { cm.CommandType = CommandType.StoredProcedure; cm.CommandText = "getAuthorName"; cm.Parameters.Add("@name", myCriteria); using(SqlDataReader dr = cm.ExecuteReader()) { // Read the data here } } } As we can see, we just need to set the CommandType property to indicate that we're calling a stored procedure, and then supply the stored procedure name as the CommandText .

Reading the Data

In both cases, the result of the ExecuteReader() method is a new SqlDataReader object that we can use to read the resulting data. This object has a lot of properties to support the various ways we might choose to use it. The most basic (though not ideal) approach is to use the GetValue() method to retrieve all values as type Object . A loop to retrieve all the values from a data reader could look like this:

while(dr.Read()) { for (int field = 0; field < dr.FieldCount; field++) { System.Diagnostics.Debug.WriteLine(dr.GetValue(field)); } } The reason why this isn't ideal is that the GetValue() method always returns values as type Object , which isn't particularly fast. This code also has another potential flaw, in that it doesn't handle null values from the database. The one positive thing about this code is that we're using a numeric index to select the field to retrieve. We do have the option of using field names instead, so that rather than GetValue(0) , we might use GetValue("au_lname") . The numeric option provides better performance, but it obviously decreases the readability of our code.

Instead of using GetValue() , we should be using the set of type-specific methods available on the data-reader object, although this does require that we know the data types of the fields ahead of time. Consider the following code:

while(dr.Read()) { _name = dr.GetString(0)); _BirthDate = dr.GetDateTime(1)); _sales = dr.GetDouble(2)); } We're now retrieving the values using type-specific methods. This is faster because .NET doesn't need to typecast the data as we put it into our variables . This is the approach we'll be using in our code throughout this book.

Using Output Parameters

Sometimes, we'll have an output parameter from a stored procedure that provides us with data along with the result set that we get from the data reader. When this is the case, we need to be aware that the output parameter values will not be available until after we've read through all the data in the data reader. As an example, consider the following code, which calls a stored procedure that has an output parameter:

string dbConn = @"data source=ineroth;initial catalog=pubs;integrated security=SSPI"; SqlParameter param; using(SqlConnection cn = new SqlConnection(dbConn)) { cn.Open(); using(SqlCommand cm= cn.CreateCommand()) { cm.CommandType = CommandType.StoredProcedure; cm.CommandText = "getAuthorName"; param = new SqlParameter(); param.ParameterName = "@au_id"; param.Direction = ParameterDirection.Output; cm.Parameters.Add(param); cm.Parameters.Add("@name", myCriteria); using(SqlDataReader dr = cm.ExecuteReader()) { // Read the data here } } } This shows how to define the parameter to accept an output value from the stored procedure. The following code illustrates how to get at the output parameter value:

using(SqlDataReader dr = cm.ExecuteReader()) { // Read the data here while(dr.Read()) { for (int field = 0; field < dr.FieldCount; field++) { System.Diagnostics.Debug.WriteLine(dr.GetValue(field)); } } } } string result = (string)param.Value; We can't read the output parameter value from the SqlParameter object until after we've finished retrieving all the data from the data reader.

Handling Null Values

So far none of our code handles any possible null values in the data. Exactly how we handle nulls will depend on what they mean to us. In the case that we don't really care about the difference between a value that has never been entered and one that's just empty, we must do extra work to deal with the null values. In our framework, we'll be creating a SafeDataReader class to encapsulate these details, so that our business code doesn't have to worry about nulls at all. Here though, let's discuss the options at our disposal via ADO.NET.

In the case that we do care about the difference between a value that has never been entered and one that's just empty, we'll need to use data structures within our application to make that distinction. The easiest data structure is the object data type, which can hold a null value. In that case, we'll need to use the data reader's IsDBNull() method throughout our code to display (or otherwise deal with) our values appropriately, as shown here:

If (dr.IsDBNull(0)) { // Handle null value appropriately } else { // Handle regular value appropriately } More common is the case in which the difference between a null value and an empty value isn't important, but where the database wasn't properly designed, and is allowing nulls where they don't belong. In such a case, we'll need to detect the fact that a field is null, and convert that null into an appropriate empty value. For a given field, we can do the following:

if (dr.IsDBNull(0)) _name = string.Empty; else _name = dr.GetString(0);

Reading Multiple Result Sets

It's possible for a stored procedure or a SQL statement to return multiple data sets. This is a powerful feature when our data contains relationships: We can retrieve, for example, an invoice and all of its line-item data with a single stored procedure call. The invoice data and the line item data would be returned as two different result sets from the same call. We'll use this technique when we create a set of stored procedures in Chapter 6.

A data-reader object supports this concept through the NextResult() method. Given our invoice example, we might have code that looks something like this:

dr = cm.ExecuteReader(); using(dr) { dr.Read(); // Read invoice data dr.NextResult(); while(dr.Read()) { // Read this line item's data } } This reads the first row of the first result set, which presumably contains our invoice header data. We then call the NextResult() method to move to the second result set, where we can loop through and read all the line-item data.

Updating Data

Updating data is done using a command object with an appropriate INSERT , UPDATE , or DELETE statement, or by calling a stored procedure that handles the operation. As with data retrieval, it's preferable to use stored procedures in a production setting, though dynamic SQL is often used in development.

Calling a stored procedure to update data is similar to calling one to retrieve data, though instead of calling ExecuteReader() on the command object, we'll be calling ExecuteNonQuery() . Suppose that we have a stored procedure such as the following:

CREATE PROCEDURE updateAuthorName (@ID uniqueidentifier, @Name varchar(50)) AS SET NOCOUNT ON UPDATE authors SET au_lname=@Name WHERE au_id=@ID; RETURN

We could call it with the following code:

string dbConn = @"data source=ineroth;initial catalog=pubs;integrated security=SSPI"; using(SqlConnection cn = new SqlConnection(dbConn)) { cn.Open(); using(SqlCommand cm= cn.CreateCommand()) { cm.CommandType = CommandType.StoredProcedure; cm.CommandText = "updateAuthorName"; cm.Parameters.Add("@id", myID); cm.Parameters.Add("@name", myCriteria); cm.ExecuteNonQuery(); } } As you can see, using a command object to update data is pretty straightforward. Something a little more complex is when we call a stored procedure that not only updates data, but also returns values via output parameters.

Suppose that we have an authors table with an autoincrementing id column. The following stored procedure could add a record into that table, thereby returning the newly created identity value via an output parameter as follows:

CREATE PROCEDURE addOrder @id int output, @Name varchar(50) AS INSERT INTO authors (au_lname) VALUES (@Name) SET @id = @@Identity RETURN

The code to set up a parameter to accept an output value is a bit different from the code for simply sending a value into the stored procedure, as shown here:

string dbConn = @"data source=ineroth;initial catalog=pubs;integrated security=SSPI"; using(SqlConnection cn = new SqlConnection(dbConn)) { cn.Open(); using(SqlCommand cm= cn.CreateCommand()) { cm.CommandType = CommandType.StoredProcedure; cm.CommandText = "updateAuthorName"; SqlParameter param = new SqlParameter("@id", 0); param.Direction = ParameterDirection.Output; cm.Parameters.Add(param); cm.Parameters.Add("@id", myID); cm.Parameters.Add("@name", myCriteria); cm.ExecuteNonQuery(); newID = cm.Parameters["@id"].Value; } } Rather than allow the Add() method to create a SqlParameter object for us using its defaults, we create a SqlParameter object ourselves . This allows us to set the Direction property to indicate that it's an output parameter. The SqlParameter object is then added to the Parameters collection so that it can transfer the data from the stored procedure back to our code.

After the stored procedure has been executed, we can retrieve the value from the parameter object, and then use it as needed within our application.

ADO.NET Transactions

Earlier in the chapter, we discussed Enterprise Services transactions, or two-phase transactions. ADO.NET itself supports a simpler form of transactions that can be used to protect updates made to a single database. We can write code to update multiple tables in a single database, or make multiple changes to a single table, using ADO.NET transactions to ensure that the update will be rolled back in case of error, or when our business logic decides that the operation must be cancelled.

To support this concept, ADO.NET includes transaction objects. These objects are part of the data-provider tier in the ADO.NET architecture, so the transaction object for Microsoft SQL Server is called SqlTransaction .

The transaction object is associated with the connection object: We use a connection object to create a transaction object. From that point forward, any command objects to be run against this connection must first be associated with the active transaction object. The following code illustrates the changes from our previous data-update code to utilize an ADO.NET transaction object as shown here:

string dbConn = @"data source=ineroth;initial catalog=pubs;integrated security=SSPI"; using(SqlConnection cn = new SqlConnection(dbConn)) { cn.Open(); SqlTransaction tr = cn.BeginTransaction(); using(SqlCommand cm= cn.CreateCommand()) { cm.Transaction = tr; cm.CommandType = CommandType.StoredProcedure; cm.CommandText = "updateAuthorName"; SqlParameter param = new SqlParameter("@id", 0); param.Direction = ParameterDirection.Output; cm.Parameters.Add(param); cm.Parameters.Add("@id", myID); cm.Parameters.Add("@name", myCriteria); try { cm.ExecuteNonQuery(); newID = cm.Parameters["@id"].Value; tr.Commit(); } catch { tr.Rollback(); } } } First, we begin a transaction by calling the BeginTransaction() method on the connection object. This results in an active transaction object. The transaction object itself has a Connection property; we must link our command objects to both the transaction object and the connection property from the transaction. If these are out of sync, we'll get a runtime error.

When implementing manual transactions, we also need to implement a catch block in our error handler so we can call the Rollback() method in case of error. Otherwise, we call the Commit() method to commit the transaction.

| | ||

| | | |

| | ||

EAN: 2147483647

Pages: 111

If you may any questions please contact us: flylib@qtcs.net