Framework Design

| Note | Throughout this book, we're assuming we're building business applications, in which case almost all objects are ultimately stored in the database at one time or another. Even if our object isn't persisted to a database, we can still use BusinessBase to gain access to the n-Level undo, broken-rule tracking, and "dirty"-tracking features built into the framework. |

For example, an Invoice is typically a root object, while the LineItem objects contained by an Invoice object are child objects. It makes perfect sense to retrieve or update an Invoice, but it makes no sense to create, retrieve, or update a LineItem without an associated Invoice. To make this distinction, BusinessBase includes a method, MarkAsChild(), that we can call to indicate that our object is a child object. Business objects are assumed to be root objects unless this method is invoked, so a child object might look like this:

```csharp
[Serializable()]
public class Child : BusinessBase
{
    public Child()
    {
        // Flag this object as a child; objects are roots by default.
        MarkAsChild();
    }
}
```

The BusinessBase class provides default implementations of the four data-access methods that exist on all root business objects. These methods are called by the data portal mechanism, and the default implementations all throw an exception if they're called. The intention is that our business objects will override these methods if they need to support create, fetch, update, or delete operations. The four methods are as follows:

- DataPortal_Create()
- DataPortal_Fetch()
- DataPortal_Update()
- DataPortal_Delete()
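To make the override pattern concrete, here's a minimal sketch. Because the real BusinessBase isn't built until Chapter 4, the sketch uses a hypothetical stand-in, BusinessBaseSketch, whose default data-access methods throw NotSupportedException. The `object criteria` parameters, the InvoiceSketch class, and the RunFetch/RunDelete test hooks are all assumptions for illustration, not the framework's actual API.

```csharp
using System;

// Hypothetical stand-in for BusinessBase: every data-access method fails by
// default, so a subclass must override exactly the operations it supports.
[Serializable]
public abstract class BusinessBaseSketch
{
    protected virtual void DataPortal_Create(object criteria)
    {
        throw new NotSupportedException("Invalid operation - create not allowed");
    }

    protected virtual void DataPortal_Fetch(object criteria)
    {
        throw new NotSupportedException("Invalid operation - fetch not allowed");
    }

    protected virtual void DataPortal_Update()
    {
        throw new NotSupportedException("Invalid operation - update not allowed");
    }

    protected virtual void DataPortal_Delete(object criteria)
    {
        throw new NotSupportedException("Invalid operation - delete not allowed");
    }

    // Hypothetical test hooks so the sketch can run without a data portal.
    public void RunFetch(object criteria) { DataPortal_Fetch(criteria); }
    public void RunDelete(object criteria) { DataPortal_Delete(criteria); }
}

// A root object that supports fetching but not deleting.
[Serializable]
public class InvoiceSketch : BusinessBaseSketch
{
    public string Id { get; private set; } = "";

    [Serializable]
    public class Criteria
    {
        public readonly string Id;
        public Criteria(string id) { Id = id; }
    }

    protected override void DataPortal_Fetch(object criteria)
    {
        // Real code would load the object's fields from the database here.
        Id = ((Criteria)criteria).Id;
    }
}
```

Because InvoiceSketch doesn't override DataPortal_Delete(), any attempt to delete it falls through to the base implementation and fails.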

BusinessBase provides a great deal of functionality to our business objects, whether root or child. In Chapter 4, we'll build BusinessBase itself, and in Chapter 7 we'll implement a number of business objects that illustrate how BusinessBase is used.

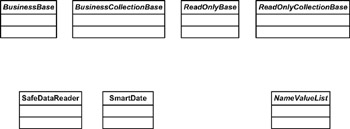

BusinessCollectionBase

The BusinessCollectionBase class is the base from which all editable collections of business objects will be created. If we have an Invoice object with a collection of LineItem objects, this will be the base for creating that collection:

```csharp
[Serializable()]
public class LineItems : BusinessCollectionBase
{
}
```

Of course, we'll have to implement an indexer (the Item property) along with Add() and Remove() methods in order to create a strongly typed collection object. The process is the same as if we had inherited from System.Collections.CollectionBase, except that our collection will include all the functionality required to support n-Level undo, object persistence, and the other business-object features.

| Note | BusinessCollectionBase will ultimately inherit from System.Collections.CollectionBase, so we'll start with all the core functionality of a .NET collection. |
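Since the typed-collection pattern is the same one we'd use with CollectionBase, here's a minimal sketch written directly against System.Collections.CollectionBase. The LineItem and LineItemList types are hypothetical; the undo and persistence support that BusinessCollectionBase adds is deliberately left out.

```csharp
using System;
using System.Collections;

[Serializable]
public class LineItem
{
    public string Product { get; set; }
    public int Quantity { get; set; }

    public LineItem(string product, int quantity)
    {
        Product = product;
        Quantity = quantity;
    }
}

// Strongly typed collection: the indexer plays the role of Item().
[Serializable]
public class LineItemList : CollectionBase
{
    public LineItem this[int index]
    {
        get { return (LineItem)List[index]; }
    }

    public void Add(LineItem item)
    {
        List.Add(item);
    }

    public void Remove(LineItem item)
    {
        List.Remove(item);
    }

    // Reject anything that isn't a LineItem, even when callers use IList directly.
    protected override void OnValidate(object value)
    {
        base.OnValidate(value);
        if (!(value is LineItem))
            throw new ArgumentException("Only LineItem objects are allowed.");
    }
}
```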

The BusinessCollectionBase class also defines the four data-access methods that we discussed in BusinessBase. This will allow us to retrieve a collection of objects directly (rather than retrieving a single object at a time), if that's what is required by our application design.

ReadOnlyBase

Sometimes, we don't want to expose an editable object. Many applications have objects that are read-only or display-only. Read-only objects need to support object persistence only for retrieving data, not for updating data. Also, they don't need to support any of the n-Level undo or other editing-type behaviors, because they're created with read-only properties.

For editable objects, we created BusinessBase, which lets us indicate whether an object is a root or a child object. The same base class supports both types of objects, allowing us to choose between root and child at runtime. Making an object read-only or read-write is a bigger decision, because it impacts the interface of our object. A read-only object should include only read-only properties in its interface, and that isn't something that we can toggle on or off at runtime. By implementing a specific base class for read-only objects, we allow them to be more specialized and to carry less overhead.

The ReadOnlyBase class is used to create read-only objects as follows:

```csharp
[Serializable()]
public class StaticContent : ReadOnlyBase
{
}
```

We shouldn't implement any read-write properties in classes that inherit from ReadOnlyBase. Were we to do so, it would be entirely up to us to handle undo, persistence, and any other features needed to deal with the changed data. If an object has editable fields, it should inherit from BusinessBase.

ReadOnlyCollectionBase

Not only do we sometimes need read-only business objects, but we also occasionally require immutable collections of objects. The ReadOnlyCollectionBase class allows us to create strongly typed collections of objects whereby the object and collection are both read-only.

```csharp
[Serializable()]
public class StaticList : ReadOnlyCollectionBase
{
}
```

As with ReadOnlyBase, this object supports only the retrieval of data. It has no provision for updating data or handling changes to its data.
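The read-only pattern can be sketched with .NET's own System.Collections.ReadOnlyCollectionBase, which exposes its storage only through the protected InnerList. In this hypothetical sketch, ProductInfo is a read-only object (get-only properties) and ProductInfoList is filled once by a Load() factory that stands in for a real DataPortal fetch; the framework's ReadOnlyCollectionBase adds persistence support on top of this idea.

```csharp
using System;
using System.Collections;

// Read-only object: values are set once, at construction.
[Serializable]
public class ProductInfo
{
    public string Name { get; }
    public decimal Price { get; }

    public ProductInfo(string name, decimal price)
    {
        Name = name;
        Price = price;
    }
}

// Read-only collection: no public Add/Remove; only the class itself can fill it.
[Serializable]
public class ProductInfoList : ReadOnlyCollectionBase
{
    private ProductInfoList() { }

    public ProductInfo this[int index]
    {
        get { return (ProductInfo)InnerList[index]; }
    }

    // Hypothetical factory standing in for a DataPortal fetch.
    public static ProductInfoList Load(params ProductInfo[] rows)
    {
        var list = new ProductInfoList();
        foreach (var row in rows)
            list.InnerList.Add(row);
        return list;
    }
}
```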

NameValueList

The NameValueList class is a specific implementation of ReadOnlyBase that provides support for read-only name-value pairs. This reflects the fact that most applications use lookup tables or lists of read-only data such as categories, customer types, product types, and so forth.

Rather than forcing business developers to create read-only, name-value collections for every type of such data, they can just use the NameValueList class. It allows the developer to retrieve a name-value list by specifying the database name, table name, name column, and value column. The NameValueList class will use those criteria to populate itself from the database, resulting in a read-only collection of values.

We can use NameValueList to populate combo box or list box controls in the user interface, or to validate changes to property values in order to ensure that they match a value in the list.
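As a rough illustration of how such a list is consumed, here's a hypothetical sketch. The real NameValueList populates itself from a SQL Server table given the database, table, and column names; this version takes the pairs directly so that it runs without a database. The class name and members are assumptions.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical read-only name-value list. Loading from the database is omitted;
// the pairs are supplied directly to the constructor.
[Serializable]
public sealed class NameValueListSketch
{
    private readonly Dictionary<string, string> _pairs = new Dictionary<string, string>();

    public NameValueListSketch(IEnumerable<KeyValuePair<string, string>> pairs)
    {
        foreach (var pair in pairs)
            _pairs.Add(pair.Key, pair.Value);
    }

    public int Count => _pairs.Count;

    // Names, e.g., for filling a combo box or list box.
    public IEnumerable<string> Names => _pairs.Keys;

    // Look up the value associated with a name.
    public string this[string name] => _pairs[name];

    // Validate a property value against the list.
    public bool ContainsValue(string value) => _pairs.ContainsValue(value);
}
```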

| Note | As implemented for this book, this class only supports SQL Server. You can alter the code to work with other database types if required in your environment. |

SafeSqlDataReader

Most of the time, we don't care about the difference between a null value and an empty value (such as an empty string or a zero), but databases often do. When we're retrieving data from a database, we need to handle the occurrence of unexpected null values with code such as this:

```csharp
if (dr.IsDBNull(idx))
    myValue = string.Empty;
else
    myValue = dr.GetString(idx);
```

Clearly, doing this over and over again throughout our application can get very tiresome. One solution is to fix the database so that it doesn't allow nulls where they provide no value, but this is often impractical for various reasons.

| Note | Here's one of my pet peeves. Allowing nulls in a column where we care about the difference between a value that was never entered and the empty value ("", or 0, or whatever) is fine. Allowing nulls in a column where we don't care about the difference merely complicates our code for no good purpose, thereby decreasing developer productivity and increasing maintenance costs. |

As a more general solution, we can create a utility class that uses SqlDataReader in such a way that we never have to worry about null values again. Unfortunately, the SqlDataReader class isn't inheritable, so we can't subclass it directly, but we can wrap it using containment and delegation. The result is that our data-access code works the same as always, except that we never need to write checks for null values. If a null value shows up, SafeSqlDataReader will automatically convert it to an appropriate empty value.

Obviously, if we do care about the difference between a null and an empty value, we can just use a regular SqlDataReader to retrieve our data.
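Here's a minimal sketch of the containment-and-delegation idea. It wraps any IDataReader (not only SqlDataReader) and shows just a few members; a complete version would delegate the rest of the IDataReader interface in the same way. The class name SafeDataReaderSketch is hypothetical.

```csharp
using System;
using System.Data;

// Wraps a data reader and converts null column values into "empty" values.
public sealed class SafeDataReaderSketch : IDisposable
{
    private readonly IDataReader _reader;   // the contained reader

    public SafeDataReaderSketch(IDataReader reader)
    {
        _reader = reader ?? throw new ArgumentNullException(nameof(reader));
    }

    // Plain delegation.
    public bool Read() => _reader.Read();
    public int GetOrdinal(string name) => _reader.GetOrdinal(name);
    public void Dispose() => _reader.Dispose();

    // Delegation with null handling.
    public string GetString(int i) => _reader.IsDBNull(i) ? string.Empty : _reader.GetString(i);
    public int GetInt32(int i) => _reader.IsDBNull(i) ? 0 : _reader.GetInt32(i);
    public bool GetBoolean(int i) => !_reader.IsDBNull(i) && _reader.GetBoolean(i);
}
```

For a quick check without a database, a DataTable's CreateDataReader() method supplies an IDataReader to wrap.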

SmartDate

Dates are a perennial development problem. Of course, we have the DateTime data type, which provides powerful support for manipulating dates, but it has no concept of an "empty" date. The trouble is that many applications allow the user to leave date fields empty, so we need to deal with the concept of an empty date within our application.

On top of this, date formatting is problematic. Or rather, formatting an ordinary date value is easy, but again we're faced with the special case in which an "empty" date must be represented by an empty string for display purposes. In fact, for the purposes of data binding, we often want any date properties on our objects to be of type string, so that the user has full access to the various date formats as well as the ability to enter a blank date into the field.

Dates are also a challenge when it comes to the database: The date values in our database don't understand the concept of an empty date any more than .NET does. To resolve this, date columns in a database typically do allow null values, so a null can indicate an empty date.

| Note | Technically, this is a misuse of the null value, which is intended to differentiate between a value that was never entered, and one that's empty. Unfortunately, we're typically left with no choice, because there's no way to put an empty date value into a date data type. |

The SmartDate class is an attempt to resolve this issue. As with SqlDataReader, the DateTime data type isn't inheritable, so we can't just subclass DateTime to create a more powerful data type. We can, however, use containment and delegation to create a class that provides the capabilities of the DateTime data type while also supporting the concept of an empty date.

This isn't as easy as it might at first appear, as we'll see when we implement this class in Chapter 4. Much of the complexity flows from the fact that we often need to compare an empty date to a real date, and an empty date might be considered either very small or very large. If an object has a MinimumDate property and its value is empty, the empty value probably represents the smallest possible date. If an object has a MaximumDate property, an empty value represents the largest possible date.
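The comparison problem can be sketched as a type that contains a DateTime plus an "empty" flag, where each value records whether an empty date should sort as the smallest or the largest possible date. This is a hypothetical sketch (SmartDateSketch, its members, and the use of the invariant culture are assumptions), not the SmartDate implementation from Chapter 4.

```csharp
using System;
using System.Globalization;

[Serializable]
public struct SmartDateSketch : IComparable<SmartDateSketch>
{
    private readonly DateTime _date;
    private readonly bool _hasValue;     // false means "empty"
    private readonly bool _emptyIsMax;   // how an empty date sorts

    public SmartDateSketch(DateTime date) : this(date, true, false) { }

    private SmartDateSketch(DateTime date, bool hasValue, bool emptyIsMax)
    {
        _date = date;
        _hasValue = hasValue;
        _emptyIsMax = emptyIsMax;
    }

    public static SmartDateSketch Empty(bool emptyIsMax) =>
        new SmartDateSketch(default(DateTime), false, emptyIsMax);

    public bool IsEmpty => !_hasValue;

    // For comparisons, an empty date stands in for DateTime.MinValue or MaxValue.
    public DateTime Date => _hasValue ? _date : (_emptyIsMax ? DateTime.MaxValue : DateTime.MinValue);

    public int CompareTo(SmartDateSketch other) => Date.CompareTo(other.Date);

    // Empty dates display as an empty string.
    public string Text => _hasValue ? _date.ToString("d", CultureInfo.InvariantCulture) : string.Empty;

    // A blank string from the UI becomes an empty date.
    public static SmartDateSketch Parse(string text, bool emptyIsMax = false) =>
        string.IsNullOrWhiteSpace(text)
            ? Empty(emptyIsMax)
            : new SmartDateSketch(DateTime.Parse(text, CultureInfo.InvariantCulture));

    // An empty date maps to a database null.
    public object DbValue => _hasValue ? (object)_date : DBNull.Value;
}
```

A MinimumDate property would hold an empty value created with emptyIsMax set to false, and a MaximumDate property one created with emptyIsMax set to true.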

The SmartDate class is designed to support these concepts, and to integrate with the SafeSqlDataReader so that it can properly interpret a null database value as an empty date.

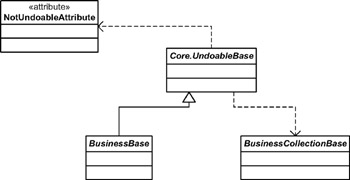

n-Level Undo Functionality

The implementation of n-Level undo functionality is quite complex, and involves heavy use of reflection. Fortunately, we can use inheritance to place the implementation in a base class, so that no business object needs to worry about the undo code. In fact, to keep things cleaner, this code is in its own base class, separate from any other business object behaviors as shown in Figure 2-15.

Figure 2-15: Separating n-Level undo into Core.UndoableBase

At first glance, it might appear that we could use .NET serialization to implement undo functionality: What easier way to take a snapshot of an object's state than to serialize it into a byte stream? Unfortunately, this isn't as easy as it might sound, at least when it comes to restoring the state of our object.

Taking a snapshot of a [Serializable()] object is easy; we can do it with code similar to this:

```csharp
using System;
using System.IO;
using System.Runtime.Serialization.Formatters.Binary;

[Serializable()]
public class Customer
{
    // Serialize this object into a byte array that captures its current state.
    // (BinaryFormatter is obsolete in modern .NET; it appears here as part of
    // the design discussion.)
    public byte[] Snapshot()
    {
        MemoryStream m = new MemoryStream();
        BinaryFormatter f = new BinaryFormatter();
        f.Serialize(m, this);
        return m.ToArray();
    }
}
```

This converts the object into a byte stream, returning that byte stream as an array of type byte. That part is easy; it's the restoration that's tricky. Suppose that the user now wants to undo his changes, requiring us to restore the byte stream back into our object. The code that deserializes a byte stream looks like this:

```csharp
using System;
using System.IO;
using System.Runtime.Serialization.Formatters.Binary;

[Serializable()]
public class Customer
{
    // Deserialization builds a brand-new Customer from the byte array;
    // it doesn't change the state of the object this method is called on.
    public Customer Deserialize(byte[] state)
    {
        MemoryStream m = new MemoryStream(state);
        BinaryFormatter f = new BinaryFormatter();
        return (Customer)f.Deserialize(m);
    }
}
```

Notice that this method returns a new Customer object. It doesn't restore our existing object's state; it creates a new object. It would then be up to us to tell any and all code that has a reference to our existing object to use this new object. In some cases that might be easy to do, but it isn't always trivial. In complex applications, it's hard to guarantee that no other code elsewhere in the application has a reference to our object, and if we don't somehow get that code to update its reference to the new object, it will continue to use the old one.

What we really want is some way to restore our object's state in place, so that all references to our current object remain valid, but the object's state is restored. This is the purpose of the UndoableBase class.

UndoableBase

Our BusinessBase class inherits from UndoableBase , and thereby gains n-Level undo capabilities. Because all business objects subclass BusinessBase , they too gain n-Level undo. Ultimately, the n-Level undo capabilities are exposed to the business object and to UI developers via three methods:

- BeginEdit() tells the object to take a snapshot of its current state, in preparation for being edited. Each time BeginEdit() is called, a new snapshot is taken, allowing us to trap the state of the object at various points during its life. The snapshot is kept in memory so that we can easily restore that data to the object if CancelEdit() is called.
- CancelEdit() tells the object to restore its state from the most recent snapshot. This effectively performs an undo operation, reversing one level of changes. If we call CancelEdit() the same number of times as we called BeginEdit(), we'll restore the object to its original state.
- ApplyEdit() tells the object to discard the most recent snapshot, leaving the object's current state untouched. It accepts the most recent changes to the object. If we call ApplyEdit() the same number of times that we called BeginEdit(), we'll have discarded all the snapshots, essentially making any changes to the object's state permanent.
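The three methods map naturally onto a stack of snapshots. Here's a minimal sketch, assuming a reflection-based approach: BeginEdit() pushes a copy of the object's field values, CancelEdit() pops one and copies the values back into the same object (so existing references stay valid), and ApplyEdit() pops and discards. UndoableSketch and CustomerSketch are hypothetical names; field values are copied shallowly, so child objects would need their own snapshots, and NotUndoable handling is omitted.

```csharp
using System;
using System.Collections.Generic;
using System.Reflection;

[Serializable]
public abstract class UndoableSketch
{
    private readonly Stack<Dictionary<string, object>> _snapshots =
        new Stack<Dictionary<string, object>>();

    public int EditLevel => _snapshots.Count;

    public void BeginEdit()
    {
        var state = new Dictionary<string, object>();
        foreach (var (key, field) in UndoableFields())
            state[key] = field.GetValue(this);
        _snapshots.Push(state);
    }

    public void CancelEdit()
    {
        if (_snapshots.Count == 0) return;    // nothing to undo
        var state = _snapshots.Pop();
        foreach (var (key, field) in UndoableFields())
            field.SetValue(this, state[key]); // restore in place
    }

    public void ApplyEdit()
    {
        if (_snapshots.Count > 0) _snapshots.Pop();  // keep current values
    }

    // Walk the inheritance chain so private fields of every subclass are
    // included; stop at this class so the snapshot stack itself isn't copied.
    private IEnumerable<(string, FieldInfo)> UndoableFields()
    {
        for (Type t = GetType(); t != typeof(UndoableSketch); t = t.BaseType)
        {
            foreach (FieldInfo f in t.GetFields(BindingFlags.Instance | BindingFlags.Public |
                                                BindingFlags.NonPublic | BindingFlags.DeclaredOnly))
                yield return (t.FullName + "!" + f.Name, f);
        }
    }
}

[Serializable]
public class CustomerSketch : UndoableSketch
{
    private string _name = "";
    public string Name { get { return _name; } set { _name = value; } }
}
```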

We can combine sequences of BeginEdit() , CancelEdit() , and ApplyEdit() calls to respond to the user's actions within a complex Windows Forms UI. Alternatively, we can totally ignore these methods, taking no snapshots of the object's state, and therefore not support the ability to undo changes. This is common in web applications in which the user typically has no option to cancel changes. Instead, they simply navigate away to perform some other action or view some other data.

Supporting Child Collections

As shown in Figure 2-15, the UndoableBase class also uses BusinessCollectionBase. As it traces through our business object to take a snapshot of the object's state, it may encounter collections of child objects. For n-Level undo to work for complex objects as well as simple objects, any snapshot of object state must extend down through all child objects as well as the parent object.

We discussed this earlier with our Invoice and LineItem example. When we call BeginEdit() on an Invoice, we must also take snapshots of the states of all LineItem objects, because they're technically part of the state of the Invoice object itself. To do this while preserving encapsulation, we have each individual object take a snapshot of its own state, so that no object's data is ever exposed outside the object.

Thus, if our code in UndoableBase encounters a collection of type BusinessCollectionBase, it will call a method on the collection to cascade the BeginEdit(), CancelEdit(), or ApplyEdit() call to the child objects within that collection.
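Here's a stripped-down sketch of that cascade. Every type in it is hypothetical: each object snapshots only its own fields, the parent forwards the call to its child collection, and the collection forwards it to each child, so no object ever reads another object's private data. A complete implementation would also have to handle children added or removed during an edit, which this sketch ignores.

```csharp
using System;
using System.Collections.Generic;

public interface IUndoableSketch
{
    void BeginEdit();
    void CancelEdit();
    void ApplyEdit();
}

public class LineItemNode : IUndoableSketch
{
    private readonly Stack<int> _saved = new Stack<int>();   // own state only
    public int Quantity { get; set; }

    public void BeginEdit() => _saved.Push(Quantity);
    public void CancelEdit() => Quantity = _saved.Pop();
    public void ApplyEdit() => _saved.Pop();
}

// The collection's only job is to pass the call down to each child.
public class LineItemNodeList : List<LineItemNode>, IUndoableSketch
{
    public void BeginEdit() { foreach (var item in this) item.BeginEdit(); }
    public void CancelEdit() { foreach (var item in this) item.CancelEdit(); }
    public void ApplyEdit() { foreach (var item in this) item.ApplyEdit(); }
}

public class InvoiceNode : IUndoableSketch
{
    private readonly Stack<string> _saved = new Stack<string>();
    public string Customer { get; set; } = "";
    public LineItemNodeList Items { get; } = new LineItemNodeList();

    // Snapshot our own state, then cascade to the children.
    public void BeginEdit() { _saved.Push(Customer); Items.BeginEdit(); }
    public void CancelEdit() { Customer = _saved.Pop(); Items.CancelEdit(); }
    public void ApplyEdit() { _saved.Pop(); Items.ApplyEdit(); }
}
```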

NotUndoableAttribute

The final concept we need to discuss regarding n-Level undo is the idea that some of our data might not need to be included in a snapshot. Taking a snapshot of our object's data takes time and consumes memory, so if our object includes read-only values, there's no reason to take a snapshot of them. Because we can't change them, there's no value in restoring them to the same value in the course of an undo operation.

To accommodate this scenario, the framework includes a custom attribute named NotUndoableAttribute, which we can apply to variables within our business classes, as follows:

```csharp
[NotUndoable()]
string readonlyData;
```

The code in UndoableBase simply ignores any variables marked with this attribute as the snapshot is created or restored, so the variable will always retain its value regardless of any calls to BeginEdit(), CancelEdit(), or ApplyEdit() on the object.
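A marker attribute like this needs no members; the snapshot code just checks for its presence with reflection. Here's a minimal sketch of the attribute and of the filter a snapshot routine could apply. The ProductSketch class and its UndoableFields() helper are hypothetical.

```csharp
using System;
using System.Linq;
using System.Reflection;

// Marker attribute: fields tagged with it are skipped by the snapshot code.
[AttributeUsage(AttributeTargets.Field)]
public sealed class NotUndoableAttribute : Attribute { }

public class ProductSketch
{
    private string _name = "";
    [NotUndoable()] private string _readonlyData = "loaded once";

    public string Name => _name;
    public string ReadonlyData => _readonlyData;

    // Hypothetical helper: the fields a snapshot routine would copy.
    public static FieldInfo[] UndoableFields(Type type)
    {
        return type.GetFields(BindingFlags.Instance | BindingFlags.Public | BindingFlags.NonPublic)
                   .Where(f => !f.IsDefined(typeof(NotUndoableAttribute), false))
                   .ToArray();
    }
}
```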

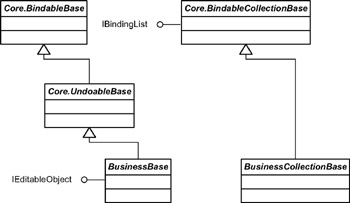

Data-Binding Support

As we discussed earlier in the chapter, the .NET data-binding infrastructure directly supports the concept of data binding to objects and collections. However, we can help to provide more complete behaviors by implementing a couple of interfaces and raising some events.

The IBindingList interface is a well-defined interface that includes a single event, ListChanged, which a collection raises to indicate that its contents have changed. We'll create a class that implements the interface and raises this event, marking the event as [field: NonSerialized()].

For the property "Changed" events, however, things are a bit more complex. Declaring a separate event for every property on an object is tedious and hard to maintain. Fortunately, there's a generic solution that simplifies the matter.

Earlier, when we discussed the property "Changed" events, we mentioned how the raising of a single such event triggers the data-binding infrastructure to refresh all bound controls. This means that we only need one property "Changed" event, as long as we can raise that event when any property is changed. One of the elements of our framework is the concept of tracking whether an object has changed or is "dirty": all editable business objects will expose this status as a read-only IsDirty property, along with a protected MarkDirty() method that our business logic can use to set it. This value will be set any time a property or value on the object is changed, so it's an ideal indicator for us.

| Note | Looking forward, Microsoft has indicated that they plan to change data binding to use an IPropertyChanged interface in the future. This interface will define a single event that a data source (such as a business object) can raise to indicate that property values have changed. In some ways Microsoft is formalizing the scheme I'm putting forward in this book. |

If we implement an IsDirtyChanged event on all our editable business objects, our UI developers can get full data-binding support by binding a control to the IsDirty property. When any property on our object changes, the IsDirtyChanged event will be raised, resulting in all data-bound controls being refreshed so that they can display the changed data.

| Note | Obviously, we won't typically want to display the value of IsDirty to the end user, so this control will typically be hidden from the user, even though it's part of the form. |

Next we'll implement an IsDirtyChanged event in the BindableBase class using the [field: NonSerialized()] attribute. BusinessBase will subclass from the BindableBase class, and therefore all editable business objects will support this event.

Ultimately, what we're talking about here is creating one base class (BindableBase) to raise the IsDirtyChanged event, and another (BindableCollectionBase) to implement the IBindingList interface, so that we can fire the ListChanged event. These classes are linked to our core BusinessBase and BusinessCollectionBase classes as shown in Figure 2-16.

Figure 2-16: Class diagram with BindableBase and BindableCollectionBase

Combined with implementing IEditableObject in BusinessBase , we can now fully support data binding in both Windows Forms and Web Forms. IEditableObject and IBindingList are both part of the .NET Framework, as is the support for property Changed events. All we're doing here is designing our framework base classes to take advantage of this existing functionality, so the business developer doesn't have to worry about these details.

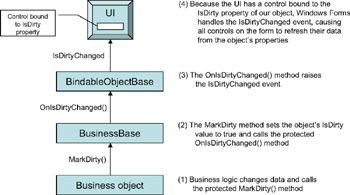

BindableBase

The BindableBase class just declares the IsDirtyChanged event and implements a protected method named OnIsDirtyChanged() that raises the event. BusinessBase will call this method any time our business logic calls its MarkDirty() method, and our business logic must call MarkDirty() any time the internal state of the object changes. As a result, every change to the object's internal state raises the IsDirtyChanged event. The UI code can then use this event to trigger a data-binding refresh as shown in Figure 2-17.
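Here's a minimal sketch of that chain: a BindableBase-style class declares the event, a stand-in for BusinessBase's dirty tracking calls OnIsDirtyChanged() from MarkDirty(), and a property setter calls MarkDirty(). All class names are hypothetical. The [field: NonSerialized] attribute applies to the compiler-generated delegate field behind the event, so subscribers (often UI forms, which aren't serializable) aren't dragged into the serialized object graph.

```csharp
using System;

[Serializable]
public abstract class BindableBaseSketch
{
    [field: NonSerialized]
    public event EventHandler IsDirtyChanged;

    protected virtual void OnIsDirtyChanged()
    {
        IsDirtyChanged?.Invoke(this, EventArgs.Empty);
    }
}

// Hypothetical stand-in for the dirty-tracking part of BusinessBase.
[Serializable]
public abstract class DirtyTrackingSketch : BindableBaseSketch
{
    public bool IsDirty { get; private set; }

    protected void MarkDirty()
    {
        IsDirty = true;
        OnIsDirtyChanged();
    }
}

[Serializable]
public class ContactSketch : DirtyTrackingSketch
{
    private string _email = "";

    public string Email
    {
        get { return _email; }
        set { _email = value; MarkDirty(); }   // every change raises IsDirtyChanged
    }
}
```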

Figure 2-17: The IsDirtyChanged event triggering a data-binding refresh

BindableCollectionBase

The BindableCollectionBase class only provides an implementation of the IBindingList interface, which includes a ListChanged event that's to be raised any time the content of the collection is changed. This includes adding, removing, or changing items in the list.

| Note | The interface also includes methods that support sorting, filtering, and searching within the collection. We won't be implementing these features in our framework. Obviously, they're supported concepts that we could implement as an extension to the framework. |

Again, when implementing BindableCollectionBase we must mark the ListChanged event as [field: NonSerialized()], which will prevent the underlying delegate reference from being serialized.
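Here's a partial sketch of the ListChanged part, built on CollectionBase's OnInsertComplete(), OnRemoveComplete(), OnSetComplete(), and OnClearComplete() hooks. It raises the event with the standard ListChangedEventArgs types but doesn't implement the rest of IBindingList; the class name is hypothetical.

```csharp
using System;
using System.Collections;
using System.ComponentModel;

[Serializable]
public class BindableCollectionSketch : CollectionBase
{
    // Keep UI subscribers out of the serialized object graph.
    [field: NonSerialized]
    public event ListChangedEventHandler ListChanged;

    protected virtual void OnListChanged(ListChangedEventArgs e)
    {
        ListChanged?.Invoke(this, e);
    }

    public void Add(object item) => List.Add(item);
    public void Remove(object item) => List.Remove(item);

    // CollectionBase calls these after each change completes.
    protected override void OnInsertComplete(int index, object value) =>
        OnListChanged(new ListChangedEventArgs(ListChangedType.ItemAdded, index));

    protected override void OnRemoveComplete(int index, object value) =>
        OnListChanged(new ListChangedEventArgs(ListChangedType.ItemDeleted, index));

    protected override void OnSetComplete(int index, object oldValue, object newValue) =>
        OnListChanged(new ListChangedEventArgs(ListChangedType.ItemChanged, index));

    protected override void OnClearComplete() =>
        OnListChanged(new ListChangedEventArgs(ListChangedType.Reset, -1));
}
```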

IEditableObject

The IEditableObject interface is defined in the System.ComponentModel namespace of the .NET Framework; it defines three methods and no events. The methods indicate that the object should prepare to be edited (BeginEdit()), that the edit has been canceled (CancelEdit()), and that the edit has been accepted (EndEdit()). The Windows Forms data-binding infrastructure calls these methods automatically when our object is bound to any control on a form.

One of our requirements for BusinessBase is that it should support n-Level undo. The requirement for the IEditableObject interface, however, is a single level of undo. Obviously, we can use our n-Level undo capability to support a single level of undo, so implementing IEditableObject is largely a matter of "aiming" the three interface methods at the n-Level undo methods that implement this behavior.

The only complexity is that the BeginEdit() method from IEditableObject can (and will) be called many times, but it should only be honored on its first call. Our n-Level edit capability would normally allow BeginEdit() to be called multiple times, with each call taking a snapshot of the object's state for a later restore operation. We'll need to add a bit more code to handle the difference in semantics between these two approaches.
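The extra code amounts to a flag. In this hypothetical sketch, the explicit IEditableObject methods take a snapshot only on the first BeginEdit() from data binding and ignore repeats until EndEdit() or CancelEdit() arrives. The public BeginEdit()/CancelEdit()/ApplyEdit() methods are a one-field stand-in for the n-Level undo methods inherited from UndoableBase.

```csharp
using System;
using System.Collections.Generic;
using System.ComponentModel;

public class EditableSketch : IEditableObject
{
    private readonly Stack<string> _snapshots = new Stack<string>();
    private bool _bindingEdit;   // true while a data-binding edit is in progress

    public string Name { get; set; } = "";
    public int EditLevel => _snapshots.Count;

    // n-Level undo methods (stand-ins for UndoableBase).
    public void BeginEdit() => _snapshots.Push(Name);
    public void CancelEdit() => Name = _snapshots.Pop();
    public void ApplyEdit() => _snapshots.Pop();

    // IEditableObject: a single level of undo, honoring only the first BeginEdit.
    void IEditableObject.BeginEdit()
    {
        if (_bindingEdit) return;
        _bindingEdit = true;
        BeginEdit();
    }

    void IEditableObject.CancelEdit()
    {
        if (!_bindingEdit) return;
        _bindingEdit = false;
        CancelEdit();
    }

    void IEditableObject.EndEdit()
    {
        if (!_bindingEdit) return;
        _bindingEdit = false;
        ApplyEdit();
    }
}
```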

Business-Rule Tracking

As we discussed earlier, one of the framework's goals is to simplify the tracking of broken business rules, or at least those rules that are a "toggle," whereby a broken rule means that the object is invalid. An important side benefit of this is that the UI developer will have read-only access to the list of broken rules, which means that the descriptions of the broken rules can be displayed to the user in order to explain what's making the object invalid.

The support for tracking broken business rules will be available to all editable business objects, so it's implemented at the BusinessBase level in the framework. Because all business objects subclass BusinessBase , they'll all have this functionality.

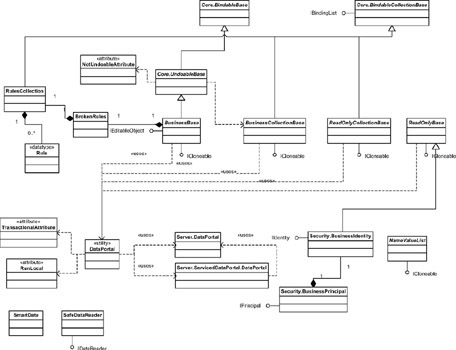

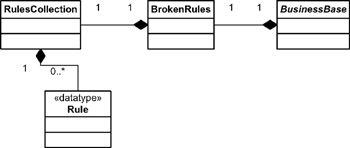

To provide this functionality, each business object will have an associated collection of broken business rules as shown in Figure 2-18.

Figure 2-18: Relationship between BusinessBase and BrokenRules

This is implemented so that the business object can mark rules as "broken," and a read-only list of the currently broken rules can be exposed to the UI code.

If all we wanted to do was keep a list of the broken rules, we could do that directly within BusinessBase . However, we also want to have a way of exposing this list of broken rules to the UI as a read-only list. For this reason, we'll implement a specialized collection object that our business object can change, but which the UI sees as being read-only. On top of that, we'll have the collection implement support for data binding so that the UI can display a list of broken-rule descriptions to the user by simply binding the collection to a list or grid control.

| Note | The reason why the list of broken rules is exposed to the UI is due to reader feedback from my previous business-objects books. Many readers wrote me to say that they'd adapted my COM framework to enhance the functionality of broken-rule tracking in various ways, the most common being to allow the UI to display the list of broken rules to the user. |

To use the broken-rule tracking functionality within a business object, we might write code such as this:

```csharp
public int Quantity
{
    get { return _quantity; }
    set
    {
        _quantity = value;
        // Each rule needs its own name: if both rules shared one name, the
        // second Assert() could "unbreak" the rule the first one just broke.
        BrokenRules.Assert("QuantityNotPositive", "Quantity must be a positive number", _quantity <= 0);
        BrokenRules.Assert("QuantityTooLarge", "Quantity can't exceed 100", _quantity > 100);
        MarkDirty();
    }
}
```

It's up to us to decide on the business rules, but the framework takes care of tracking which ones are broken, and whether the business object as a whole is valid at any given point in time. We're not creating a business-rule engine or repository here; we're just creating a collection that we can use to track a list of the rules that are broken.

BrokenRules

Although the previous UML diagram displays the RulesCollection and the Rule structure as independent entities, they're actually nested within the BrokenRules class. This simplifies the use of the concept, because the BusinessBase code only needs to interact directly with BrokenRules, which uses the RulesCollection and Rule types internally.

The BrokenRules class includes methods for use by BusinessBase to mark rules as broken or not broken. Marking a rule as broken adds it to the RulesCollection object, while marking a rule as not broken removes it from the collection. To find out whether the business object is valid, all we need to do is check whether there are any broken rules in the RulesCollection by checking its Count property.

RulesCollection

The RulesCollection is a strongly typed collection that can only contain Rule elements. It's constructed so that the BrokenRules code can manipulate it, and yet be safely exposed to the UI code. There are no public methods that alter the contents of the collection, so the UI is effectively given a reference to a read-only object. All the methods that manipulate the object are scoped as internal, so they're unavailable to our business objects or to the UI.

Rule Structure

The Rule structure contains the name and description of a rule, and it's a bit more complex than a normal structure in order to support data binding in Web Forms. A typical struct consists of public fields (variables) that are directly accessible from client code, but it turns out that Web Forms data binding won't bind to public fields in a class or structure; it can only bind to public properties. Because of this, our structure contains private variables, with public properties to expose them. This allows both Windows Forms and Web Forms to use data binding to display the list of broken rules within the UI.

Rule is a struct rather than a class for reasons of memory management. As our application runs, we can expect that business rules will be constantly broken and "unbroken," resulting in the creation and destruction of Rule entities each time. There's a higher cost to creating and destroying an object than there is for a struct, so we're minimizing this effect as far as we can.
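Pulling the three pieces together, here's a hypothetical sketch of BrokenRules with its nested Rule struct and RulesCollection. Rule exposes private fields through public properties (for Web Forms binding), RulesCollection derives from System.Collections.ReadOnlyCollectionBase and keeps its mutators internal, and Assert() adds or removes a rule by name. The member names are assumptions, not the framework's exact API.

```csharp
using System;
using System.Collections;

[Serializable]
public class BrokenRulesSketch
{
    [Serializable]
    public struct Rule
    {
        private readonly string _name;
        private readonly string _description;

        internal Rule(string name, string description)
        {
            _name = name;
            _description = description;
        }

        // Properties, not public fields, so Web Forms data binding can use them.
        public string Name => _name;
        public string Description => _description;
    }

    // Read-only to outside code; only members in this assembly can change it.
    [Serializable]
    public class RulesCollection : ReadOnlyCollectionBase
    {
        public Rule this[int index] => (Rule)InnerList[index];

        internal void Add(string name, string description)
        {
            Remove(name);   // a rule is listed at most once
            InnerList.Add(new Rule(name, description));
        }

        internal void Remove(string name)
        {
            for (int i = InnerList.Count - 1; i >= 0; i--)
                if (((Rule)InnerList[i]).Name == name)
                    InnerList.RemoveAt(i);
        }
    }

    private readonly RulesCollection _rules = new RulesCollection();

    // isBroken == true marks the rule broken; false marks it unbroken.
    public void Assert(string name, string description, bool isBroken)
    {
        if (isBroken) _rules.Add(name, description);
        else _rules.Remove(name);
    }

    public bool IsValid => _rules.Count == 0;

    public RulesCollection BrokenRulesCollection => _rules;
}
```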

Data Portal

Supporting object persistence (the ability to store and retrieve an object from a database) can be quite complex. We discussed this earlier in the chapter, where we covered not only basic persistence, but also the concept of object-relational mapping (ORM).

In our framework, we'll encapsulate data-access logic within our business objects, allowing each object to handle its ORM logic along with any other business logic that might impact data access. This is the most flexible approach to handling object persistence. At the same time, however, we don't want to be in a position where a change to our physical architecture requires every business object in the system to be altered. What we do want is the ability to switch between having the data-access code run on the client machine and having it run on an application server, driven by a configuration file setting.

On top of this, if we're using an application server, we don't want to be in a position where every different business object in our application has a different remoting proxy exposed by the server. This is a maintenance and configuration nightmare, because it means that adding or changing a business object would require updating proxy information on all our client machines.

| Note | We'll discuss the technical details in Chapter 3 as part of our overview of remoting. This problem is also discussed more thoroughly in my MSDN article covering the data portal concept. [3] |

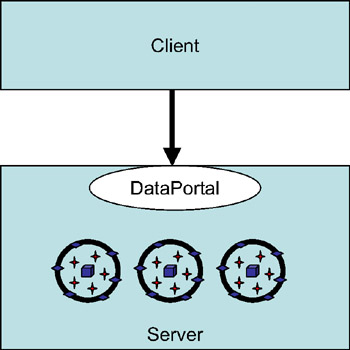

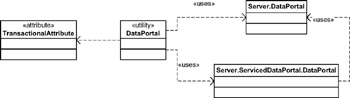

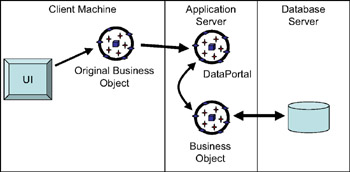

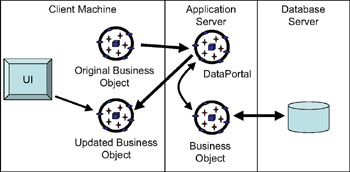

Instead, it would be ideal if there was one consistent entry point to the application server, so that we could simply export that proxy information to every client, and never have to worry about it again. This is exactly what the DataPortal class provides as shown in Figure 2-19.

Figure 2-19: The DataPortal provides a consistent entry point to the application server.

DataPortal provides a single point of entry and configuration for the server. It manages communication with our business objects while they're on the server running their data-access code. Additionally, the data-portal concept provides the following other key benefits:

- Centralized security when calling the application server
- A consistent object persistence mechanism (all objects persist the same way)
- One point of control to toggle between running the data-access code locally or via remoting

The DataPortal class is designed in two parts. There's the part that supports business objects on the client side, and there's the part that actually handles data access. Depending on our configuration file settings, this second part might run on the client machine, or on an application server.

In this part of the chapter, we'll focus on the client-side DataPortal behavior. In the next section, where we discuss transactional and nontransactional data access, we'll cover the server-side portion of the data-portal concept.

The client-side DataPortal is implemented as a class containing only static methods, which means that any public methods it exposes become available to our code without the need to create a DataPortal object. The methods it provides are Create(), Fetch(), Update(), and Delete(). Editable business objects and collections use all four of these methods, but our read-only objects and collections only use the Fetch() method as shown in Figure 2-20.

Figure 2-20: The client-side DataPortal is used by all business-object base classes.

As we create our business objects, we'll implement code that uses DataPortal to retrieve and update our object's information.

The Client-Side DataPortal

The core of the client-side functionality resides in the client-side DataPortal class, which supports the following key features of our data portal:

- Local or remote data access
- Table-based or Windows security
- Transactional and nontransactional data access

Let's discuss how each feature is supported.

Local or Remote Data Access

When data access is requested (by calling the Create(), Fetch(), Update(), or Delete() methods), the client-side DataPortal checks the application's configuration file to see if the server-side DataPortal should be loaded locally (in process), or remotely via .NET remoting.

If the server-side DataPortal is configured to be local, an instance of the server-side DataPortal object is created and used to process the data-access method call. If the server-side DataPortal is configured to be remote, we use the GetObject() method of System.Activator to create an instance of the server-side DataPortal object on the server. Either way, the server-side DataPortal object is cached so that subsequent calls to retrieve or update data just use the object that has already been created.

| Note | The reality is that when the server-side DataPortal is on a remote server, we're only caching the client-side proxy. On the server, a new server-side DataPortal is created for each method call. This provides a high degree of isolation between calls, increasing the stability of our application. The server-side DataPortal will be configured as a SingleCall object in remoting. |
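To make the local-versus-remote decision concrete, here is a minimal C# sketch of a client-side portal that makes the choice once, caches the resulting server-side portal, and reuses it for later calls. It is not the framework's code: IServerPortal, LocalServerPortal, the PortalServer setting, and the RemoteFactory hook (standing in for a call to Activator.GetObject()) are all hypothetical, and the cache is not thread-safe.

```csharp
using System;

// Hypothetical contract implemented by the server-side DataPortal.
public interface IServerPortal
{
    object Fetch(object criteria);
}

// Stand-in for the server-side DataPortal when it runs in process.
public class LocalServerPortal : IServerPortal
{
    public object Fetch(object criteria) => $"fetched {criteria} locally";
}

public static class DataPortal
{
    // Hypothetical setting; the framework reads this from the config file.
    // An empty value means "run the server-side portal locally".
    public static string PortalServer = "";

    // Hypothetical hook standing in for Activator.GetObject(...) under remoting.
    public static Func<string, IServerPortal> RemoteFactory =
        url => throw new NotSupportedException("Remoting is not configured.");

    // Counts how many times a server-side portal was actually created.
    public static int CreationCount { get; private set; }

    private static IServerPortal cached;

    private static IServerPortal Portal
    {
        get
        {
            if (cached == null)
            {
                CreationCount++;
                cached = string.IsNullOrEmpty(PortalServer)
                    ? new LocalServerPortal()
                    : RemoteFactory(PortalServer);
            }
            return cached; // later calls reuse the same portal (or proxy)
        }
    }

    public static object Fetch(object criteria) => Portal.Fetch(criteria);
}
```

Business code calls DataPortal.Fetch() the same way in both configurations; only the PortalServer value changes.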

The powerful part of this is that the UI code always looks something like this:

Customer cust = Customer.GetCustomer(myID);

And the business object code always looks something like this:

public static Customer GetCustomer(string ID)
{
  return (Customer)DataPortal.Fetch(new Criteria(ID));
}

Neither of these code snippets changes, regardless of whether we've configured the server-side DataPortal to run locally or on a remote server. All that changes is the application's configuration file.

Table-based or Windows Security

The client-side DataPortal also understands the table-based security mechanism in the business framework. We'll discuss this security model later in the chapter, but it's important to recognize that when we use table-based security, the client-side DataPortal includes code to pass the user's identity information to the server along with each call.

Because we're passing our custom-identity information to the server, the server-side DataPortal is able to configure the server environment to impersonate the user. This means that our business objects can check the user's identity and roles on both client and server, with assurance that both environments are the same.

If the application is configured to use Windows security, the DataPortal doesn't pass any security information. It's assumed in this case that we're using Windows' integrated security, and so Windows itself will take care of impersonating the user on the server. This requires that we configure the server to disallow anonymous access, thereby forcing the user to use her security credentials on the server as well as on the client.

Transactional or Nontransactional Data Access

Finally, the client-side DataPortal has a part to play in handling transactional and nontransactional data access. We'll discuss this further as we cover the server-side DataPortal functionality, but the actual decision point as to whether transactional or nontransactional behavior is invoked occurs in the client-side DataPortal code.

The business framework defines a custom attribute named TransactionalAttribute that we can apply to methods within our business objects. Specifically, we can apply it to any of the four data-access methods that our business object might implement to create, fetch, update, or delete data. This means that in our business object, we may have an update method (overriding the one in BusinessBase) marked as being [Transactional()]:

[Transactional()]
protected override void DataPortal_Update()
{
  // Data update code goes here
}

At the same time, we might have a fetch method in the same class that's not transactional:

protected override void DataPortal_Fetch(Object criteria)
{
  // Data retrieval code goes here
}

This facility means that we can control transactional behavior at the method level rather than at the class level. This is a powerful feature, because it means that we can do our data retrieval outside of a transaction to get optimal performance, and still do our updates within the context of a transaction to ensure data integrity.

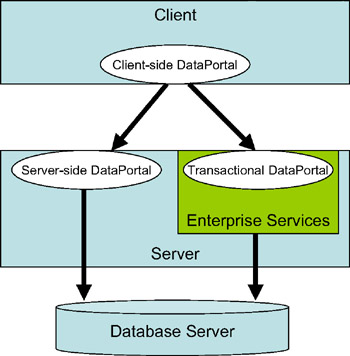

The client-side DataPortal examines the appropriate method on our business object before it invokes the server-side DataPortal object. If our method is marked as [Transactional()], then the call is routed to the transactional version of the server-side object. Otherwise, it is routed to the nontransactional version, as illustrated in Figure 2-21.

Figure 2-21: The client-side DataPortal routes requests to the appropriate server-side DataPortal.
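The attribute check and routing decision can be sketched with plain reflection. This assumes a TransactionalAttribute declared as below and returns the name of the target portal instead of calling a real one; the Invoice class and the PortalRouter helper are hypothetical.

```csharp
using System;
using System.Reflection;

// Method-level marker, mirroring the framework's [Transactional()] attribute.
[AttributeUsage(AttributeTargets.Method)]
public class TransactionalAttribute : Attribute { }

// Hypothetical business class: updates are transactional, fetches are not.
public class Invoice
{
    [Transactional()]
    protected virtual void DataPortal_Update() { /* data update code */ }

    protected virtual void DataPortal_Fetch(object criteria) { /* data retrieval code */ }
}

public static class PortalRouter
{
    // Finds the named data-access method (public or not) and reports
    // whether it carries [Transactional()].
    public static bool IsTransactional(Type businessType, string methodName)
    {
        MethodInfo method = businessType.GetMethod(methodName,
            BindingFlags.Instance | BindingFlags.Public | BindingFlags.NonPublic);
        return method != null && method.IsDefined(typeof(TransactionalAttribute), true);
    }

    // Chooses which server-side portal should handle the call.
    public static string Route(Type businessType, string methodName) =>
        IsTransactional(businessType, methodName)
            ? "ServicedDataPortal" // would run inside a COM+ transaction
            : "DataPortal";        // plain, nontransactional server-side portal
}
```

Because the check is made per method, the same class can send its updates through the transactional path and its fetches through the plain one.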

The Server-Side DataPortal

In the previous section, we discussed the behavior of the client-side DataPortal. This is the code our business objects invoke as they create, fetch, update, or delete themselves. However, the client-side DataPortal is merely a front end that routes all data-access calls to the server-side DataPortal object.

| Note | I say "server-side" here, but keep in mind that the server-side DataPortal object may run either on the client workstation, or on a remote server. Refer to the previous section for a discussion on how this selection is made. |

There are actually two different server-side DataPortal objects. One is designed as a normal .NET class, while the other is a serviced component, which means that it runs within Enterprise Services (otherwise known as COM+), as shown in Figure 2-22.

Figure 2-22: Class diagram showing relationships between the DataPortal classes

The client-side DataPortal examines the methods of our business object to see if they are marked as [Transactional()]. When the client-side DataPortal's Fetch() method is called, it checks our business object's DataPortal_Fetch() method; when the Update() method is called, it checks our business object's DataPortal_Update() method; and so forth. Based on the presence or absence of the [Transactional()] attribute, the client-side DataPortal routes the method call to either the transactional (serviced) or the nontransactional server-side DataPortal.

Transactional or Nontransactional

If our business object's method isn't marked as [Transactional()] , then the client-side DataPortal calls the corresponding method on our server-side DataPortal object. We can install the object itself on a server and expose it via remoting as a SingleCall object. This means that each method call to the object results in remoting creating a new server-side DataPortal object to run that method.

If our business object's method is marked as [Transactional()], then the client-side DataPortal calls the corresponding method on our transactional server-side DataPortal, which we'll call ServicedDataPortal. ServicedDataPortal runs in Enterprise Services and is marked as requiring a COM+ transaction, so any data access will be protected by a two-phase distributed transaction.

ServicedDataPortal uses the [AutoComplete()] attribute (which we'll discuss in Chapter 3) for its methods, so any data access is assumed to complete successfully, unless an exception is thrown. This simplifies the process of writing the data-access code in our business objects. If they succeed, then great: The changes will be committed. To indicate failure, all we need to do is throw an exception, and the transaction will be automatically rolled back by COM+.

The ServicedDataPortal itself is just a shell that's used to force our code to run within a transactional context. It includes the same four methods as the client-side DataPortal (all of which are marked with the [AutoComplete()] attribute, as noted earlier), but the code in each method merely delegates the method call to a regular, server-side DataPortal object.

This regular, server-side DataPortal object is automatically loaded into the same process, application domain, and COM+ context as the ServicedDataPortal object. This means that it will run within the context of the transaction, as will any business object code that it invokes. We only have one component to install physically into COM+, and yet all of our components end up being protected by transactions!
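The "commit unless an exception is thrown" behavior of this shell can be approximated outside COM+. The sketch below swaps in System.Transactions.TransactionScope for Enterprise Services and [AutoComplete()], so it illustrates the idea rather than reproducing ServicedDataPortal. Passing the work as a Func<object> instead of a business object is a further simplification, and TransactionalShell and ServerDataPortal are hypothetical names.

```csharp
using System;
using System.Transactions;

// Plain server-side portal: does the real work and knows nothing about transactions.
public class ServerDataPortal
{
    public object Update(Func<object> saveWork) => saveWork();
}

// Shell playing the role of ServicedDataPortal. Instead of COM+ and
// [AutoComplete()], it opens a TransactionScope and delegates to the plain portal.
public class TransactionalShell
{
    private readonly ServerDataPortal inner = new ServerDataPortal();

    public object Update(Func<object> saveWork)
    {
        using (var scope = new TransactionScope())
        {
            // The delegated work runs inside the ambient transaction.
            object result = inner.Update(saveWork);

            // Reached only if no exception was thrown: vote to commit.
            scope.Complete();
            return result;
        } // Disposing without Complete() rolls the transaction back.
    }
}
```

As with [AutoComplete()], the data-access code signals failure simply by throwing; it never commits or rolls back explicitly.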

The flexibility we provide by doing this is tremendous. We can create applications that don't require COM+ in any way by avoiding the use of the [Transactional()] attribute on any of our data-access methods. Alternatively, we can always use COM+ transactions by putting the [Transactional()] attribute on all our data-access methods. Or we can produce a hybrid whereby some methods use two-phase distributed transactions in COM+, and some don't.

All this flexibility is important, because Enterprise Services (COM+) has some drawbacks, such as the following:

-

Using two-phase distributed transactions will cause our data-access code to run up to 50 percent slower than if we had implemented the transactions directly through ADO.NET.

-

Enterprise Services requires Windows 2000 or higher, so if we use COM+, our data-access code can only run on a machine running Windows 2000 or higher.

-

We can't use no-touch deployment to deploy components that run in COM+.

On the other hand, if we're updating two or more databases and we need those updates to be transactional, then we really need COM+ to make that possible. Also, if we run our data-access code in COM+, we don't need to "begin," "commit," or "roll back" our transactions manually using ADO.NET.

Criteria Objects

Before we discuss the design of the server-side DataPortal object any further, we need to explore the mechanism that we'll use to identify our business objects when we want to create, retrieve, or delete an object.

It's impossible to predict ahead of time all the possible criteria that we might use to identify all the possible business objects we'll ever create. We might identify some objects by an integer, others by a string, others by a GUID, and so on. Others may require multiple parameters, and others still may have variable numbers of parameters. The permutations are almost endless.

Rather than expecting to be able to define Fetch() methods with all the possible permutations of parameters for selecting an object, we'll define a Fetch() method that accepts a single parameter. This parameter will be a Criteria object, consisting of the fields required to identify our specific business object. This means that for each of our business objects, we'll define a Criteria object that will contain specific data that's meaningful when selecting that particular object.

Having to create a Criteria class for each business object is a bit of an imposition on the business developer, but one way or another the business developer must pass a set of criteria data to the server in order to retrieve data for an object. Doing it via parameters on the Fetch() method is impractical, because we can't predict the number or types of the parameters; we'd have to implement a Fetch() method interface using a parameter array or accepting an arbitrary array of values. Neither of these approaches provides a strongly typed way of getting the criteria data to the server.

Another approach would be to use the object itself as criteria: a kind of query-by-example (QBE) scheme. The problem here is that we would have to create an instance of the whole business object on the client, populate some of its properties with data, and then send the whole object to the server. Given that we typically only need to send one or two fields of data as criteria, this would be incredibly wasteful, especially if our business object has many fields that aren't criteria.

Typically then, a Criteria class will be a very simple class containing the variables that act as criteria, and a constructor that makes it easy to set their values. A pretty standard one might look something like this:

[Serializable()]
public class Criteria
{
  public string SSN;

  public Criteria(string SSN)
  {
    this.SSN = SSN;
  }
}

We can easily create and pass a Criteria object any time that we need to retrieve or delete a business object. This allows us to create a generic interface for retrieving objects, because all our retrieval methods can simply require a single parameter through which the Criteria object will be passed. At the same time, we have near-infinite options with regard to what the actual criteria might be for any given object.

There's another problem lurking here, though. If we have a generic DataPortal that we call to retrieve an object, and if it only accepts a single Criteria object as a parameter, how does the DataPortal know which type of business object to load with data? How does the server-side DataPortal know which particular business class contains the code for the object we're trying to retrieve? We could pass the assembly and name of our business class as data elements within the Criteria object, or we could use custom attributes to provide the assembly and class name, but in fact there's a simpler way.

If we nest the Criteria class within our business object's class, then the DataPortal can use reflection to determine the business object class by examining the Criteria object. This takes advantage of existing .NET technologies and allows us to use them, rather than developing our own mechanism to tell the DataPortal the type of business object we need, as shown here:

[Serializable()]
public class Employee : BusinessBase
{
  [Serializable()]
  private class Criteria
  {
    public string SSN;

    public Criteria(string SSN)
    {
      this.SSN = SSN;
    }
  }
}

Now when the Criteria object is passed to our DataPortal in order to retrieve an Employee object, the server-side DataPortal code can simply use reflection to determine the class within which the Criteria class is nested. We can then create and populate an instance of that outer class (Employee in this case) with data from the database, based on the criteria information in the Criteria object.

To do this, the server-side DataPortal will use reflection to ask the Criteria object for the type information (assembly name and class name) of the class it is nested within. Given the assembly and class names of the outer class, the server-side DataPortal code can use reflection to create an instance of that class, and it can then use reflection on that object to invoke its DataPortal_Fetch() method.
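The reflection steps just described can be sketched as follows. The names mirror the chapter's Employee example, but this ServerDataPortal is a hypothetical stand-in that the factory method calls directly (skipping the client-side portal), Employee has no BusinessBase here, and the Name assignment stands in for real database access.

```csharp
using System;
using System.Reflection;

[Serializable()]
public class Employee
{
    public string Name { get; private set; }

    private Employee() { } // prevents direct creation by UI code

    [Serializable()]
    private class Criteria
    {
        public string SSN;
        public Criteria(string SSN) { this.SSN = SSN; }
    }

    // Static factory: the only public way to obtain an Employee.
    public static Employee GetEmployee(string ssn) =>
        (Employee)ServerDataPortal.Fetch(new Criteria(ssn));

    // Invoked only via reflection by the server-side portal.
    private void DataPortal_Fetch(object criteria)
    {
        var c = (Criteria)criteria;
        Name = "Employee " + c.SSN; // stand-in for reading from the database
    }
}

public static class ServerDataPortal
{
    public static object Fetch(object criteria)
    {
        // The nested Criteria class reveals which business class to build.
        Type businessType = criteria.GetType().DeclaringType;

        // nonPublic: true allows reflection to use the private constructor.
        object obj = Activator.CreateInstance(businessType, nonPublic: true);

        // Locate and invoke the (private) DataPortal_Fetch() method.
        MethodInfo fetch = businessType.GetMethod("DataPortal_Fetch",
            BindingFlags.Instance | BindingFlags.Public | BindingFlags.NonPublic);
        fetch.Invoke(obj, new[] { criteria });
        return obj;
    }
}
```

Nothing in ServerDataPortal mentions Employee, which is what lets one generic portal serve every business class.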

Note that the Criteria class is scoped as private. The only code that creates or uses this class is the business object within which the class is nested, and our framework's DataPortal code. Because the DataPortal code will only interact with the Criteria class via reflection, scope isn't an issue. By scoping the class as private, we reduce the number of classes the UI developer sees when looking at our business assembly, which makes our library of business classes easier to understand and use.

DataPortal Behaviors

Regardless of whether we're within a transaction, the server-side DataPortal object performs a simple series of generic steps that get our business object to initialize, load, or update its data. The code for doing this, however, is a bit tricky, because it requires the use of reflection to create objects and invoke methods that normally couldn't be created or invoked.

In a sense, we're cheating by using reflection. A more "pure" object-oriented approach might be to expose a set of public methods that the server-side DataPortal can use to interact with our object. Although this might be great in some ideal world, we mustn't forget that those same public methods would also then be available to the UI developer, even though they aren't intended for use by the UI. At best, this would lead to some possible confusion as the UI developer learned to ignore those methods. At worst, the UI developer might find ways to misuse those methods, either accidentally or on purpose. Because of this, we're using reflection so that we can invoke these methods privately, without exposing them to the UI developer or to any other code besides the server-side DataPortal for which they're intended.

Fortunately, our architecture allows this complex code to be isolated into the DataPortal class. It exists in just one place in our framework, and no business object developer should ever have to look at or deal with it.

Security

Before we cover the specifics of each of the four data-access operations, we need to discuss security. As we stated earlier, the business framework will support either table-based or Windows' integrated security.

If we're using Windows' integrated security, the framework code totally ignores security concerns, and expects that we've configured our Windows environment and network appropriately. One key assumption here is that if our server-side DataPortal is being accessed via remoting, then Internet Information Services (IIS) will be configured to disallow anonymous access to the website that's hosting the DataPortal. This forces Windows' integrated security to be active. Of course, this means that all users must have Windows accounts on the server or in the NT domain to which the server belongs.

| Note | This also implies that we're dictating that IIS should be used to host the server-side DataPortal if we're running it on a remote server. In fact, IIS is only required if we need to use Windows security. If we're using table-based security, it's possible to write a Windows service to host the DataPortal, but even in that case, IIS is recommended as a host because it's easier to configure and manage. We'll discuss remoting host options a bit more in Chapter 3. |

We might instead opt to use the business framework's custom, table-based security, which we'll discuss in detail later in this chapter. In that case, the client-side DataPortal will pass our custom security object for the current user as a parameter to the server-side DataPortal object. The first thing the server-side DataPortal object does then is make this security object the current security object for the server-side thread on which the object is running.

The result of this is that our server-side code will always be using the same security object as the client-side code. Our business objects, in particular, will have access to the same user identity and list of roles on both client and server. Because of this, our business logic can use the same standard security and role checks regardless of where the code is running.
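On the server side, adopting the caller's identity amounts to replacing Thread.CurrentPrincipal before the data-access code runs. This sketch uses the built-in GenericPrincipal in place of the framework's custom principal; ServerPortalSecurity and RunAs() are hypothetical names, and restoring the previous principal afterward is a choice of this sketch, made because server threads are typically reused across calls.

```csharp
using System;
using System.Security.Principal;
using System.Threading;

public static class ServerPortalSecurity
{
    // Runs a data-access call under the principal sent from the client,
    // then puts back whatever principal the server thread had before.
    public static T RunAs<T>(IPrincipal clientPrincipal, Func<T> dataAccess)
    {
        IPrincipal previous = Thread.CurrentPrincipal;
        Thread.CurrentPrincipal = clientPrincipal;
        try
        {
            // Business code here sees the same identity and roles as on the client.
            return dataAccess();
        }
        finally
        {
            Thread.CurrentPrincipal = previous;
        }
    }
}
```

Inside the call, ordinary checks such as Thread.CurrentPrincipal.IsInRole("Clerk") give the same answer on the server as they would on the client.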

Create

The "create" operation is intended to allow our business objects to load themselves with values that must come from the database. Business objects don't need to support or use this capability, but if they do need to initialize default values, then this is the mechanism to use.

There are many types of applications where this is important. For instance, order-entry applications typically have extensive defaulting of values based on the customer. Inventory-management applications often have many default values for specific parts, based on the product family to which the part belongs. Medical records, too, often have defaults based on the patient and physician involved.

When the Create() method of the DataPortal is invoked, it's passed a Criteria object. As we've explained, the DataPortal will use reflection against the Criteria object to find out the class within which it's nested. Using that information, the DataPortal will then use reflection to create an instance of the business object itself. However, this is a bit tricky, because all our business objects will have private constructors to prevent direct creation by the UI developer:

[Serializable()]
public class Employee : BusinessBase
{
  private Employee()
  {
    // prevent direct creation
  }

  [Serializable()]
  private class Criteria
  {
    public string SSN;

    public Criteria(string SSN)
    {
      this.SSN = SSN;
    }
  }
}

To fix this, our business objects will expose static methods to create or retrieve objects, and those static methods will invoke the DataPortal. (We discussed this "class in charge" concept earlier in the chapter.) As an example, our Employee class may have a static factory method such as the following:

public static Employee NewEmployee()
{
  return (Employee)DataPortal.Create(new Criteria(""));
}

Notice that no Employee object is created on the client here. Instead, we ask the client-side DataPortal for the Employee object. The client-side DataPortal passes the call to the server-side DataPortal, so the business object is created on the server. Even though our business class only has a private constructor, the server-side DataPortal uses reflection to create an instance of the class.

Again, we're "cheating" by using reflection to create the object, even though it only has a private constructor. However, the alternative is to make the constructor public, in which case the UI developer will need to learn and remember that they must use the static factory methods to create the object. By making the constructor private, we provide a clear and direct reminder that the UI developer must use the static factory method, thus reducing the complexity of the interface for the UI developer.

Once we've created the business object, the server-side DataPortal will call the business object's DataPortal_Create() method, passing the Criteria object as a parameter. At this point, we're executing code inside the business object, so the business object can do any initialization that's appropriate for a new object. Typically, this will involve going to the database to retrieve any configurable default values.

When the business object is done loading its defaults, the server-side DataPortal will return the fully created business object back to the client-side DataPortal. If the two are running on the same machine, this is a simple object reference; but if they're configured to run on separate machines, then the business object is automatically serialized across the network to the client (that is, it's passed by value), so the client machine ends up with a local copy of the business object.

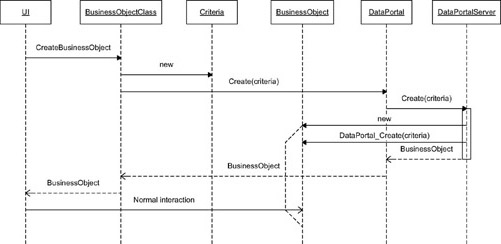

The UML sequence diagram in Figure 2-23 illustrates this process.

Figure 2-23: UML sequence diagram for the creation of a new business object

Here you can see how the UI interacts with the business-object class (the static factory method), which then creates a Criteria object and passes it to the client-side DataPortal. The DataPortal then delegates the call to the server-side DataPortal (which may be running locally or remotely, depending on configuration). The server-side DataPortal then creates an instance of the business object itself, and calls the business object's DataPortal_Create() method so it can populate itself with default values. The resulting business object is then returned ultimately to the UI.

In a physical n-tier configuration, remember that the Criteria object starts out on the client machine, and is passed by value to the application server. The business object itself is created on the application server, where it's populated with default values. It's then passed back to the client machine by value. Through this architecture we're truly taking advantage of distributed object concepts.

Fetch

Retrieving a preexisting object is very similar to the creation process we just discussed. Again, we make use of the Criteria object to provide the data that our object will use to find its information in the database. The Criteria class is nested within the business object class, so the generic server-side DataPortal code can determine the type of business object we want, and then use reflection to create an instance of the class.

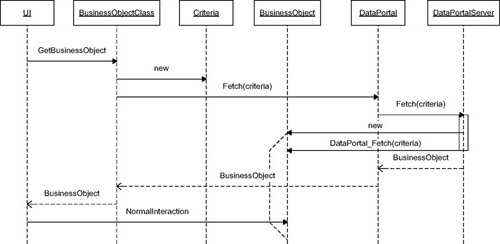

The UML sequence diagram in Figure 2-24 illustrates all of this.

Figure 2-24: UML sequence diagram for the retrieval of an existing business object

Here the UI interacts with the business-object class, which in turn creates a Criteria object and passes it to the client-side DataPortal code. The client-side DataPortal determines whether the server-side DataPortal should run locally or remotely, and then delegates the call to the server-side DataPortal.

The server-side DataPortal uses reflection to determine the assembly and type name for the business class and creates the business object itself. After that, it calls the business object's DataPortal_Fetch() method, passing the Criteria object as a parameter. Once the business object has populated itself from the database, the server-side DataPortal returns the fully populated business object to the UI.

As with the create process, in an n-tier physical configuration, the Criteria object and business object move by value across the network, as required. We don't have to do anything special beyond marking the classes as [Serializable()]; the .NET runtime handles all the details on our behalf.

Update

The update process is a bit different from what we've seen so far. In this case, the UI already has a business object with which the user has been interacting, and we want this object to save its data into the database. To achieve this, our business object has a Save() method (as part of the BusinessBase class from which all business objects inherit). The Save() method calls the DataPortal to do the update, passing the business object itself (this) as a parameter.

The thing to remember when doing updates is that the object's data might change as a result of the update process (the most common scenario for this is that a new object might have its primary key value assigned by the database at the same time as its data is inserted into the database). This newly created key value must be placed back into the object.

This means that the update process is bidirectional. It isn't just a matter of sending the data to the server to be stored, but also a matter of returning the object from the server after the update has completed, so that the UI has a current, valid version of the object.

The way .NET passes objects by value introduces a wrinkle into our overall process. When we pass the object to be saved to the server, .NET makes a copy of the object from the client onto the server, which is exactly what we want. However, after the update is complete, we want to return the object to the client. When we return an object from the server to the client, a new copy of the object is made on the client, which isn't the behavior we're looking for.

Figure 2-25 illustrates the initial part of the update process.

Figure 2-25: Sending a business object to the DataPortal to be inserted or updated

The UI has a reference to our business object, and calls its Save() method. This causes the business object to ask the DataPortal to save the object. The result is that a copy of the business object is made on the application server, where it can save itself to the database. So far, this is pretty straightforward.

| Note | Note that our business object has a Save() method, while the DataPortal infrastructure has methods named Update(). Although this is a bit inconsistent, remember that the business object is being called by UI developers, and I've found that it's more intuitive for the typical UI developer to call Save() than Update(). |

However, once this part is done, the updated business object is returned to the client, and the UI must update its references to use the newly updated object instead, as shown in Figure 2-26.

Figure 2-26: DataPortal returning the inserted or updated business object to the UI

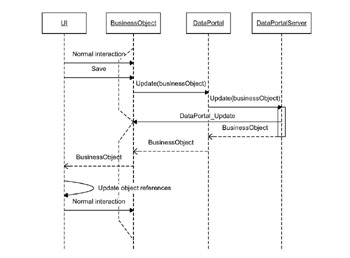

This is fine too, but it's important to keep in mind that we can't continue to use the old business object; we must update our references to use the newly updated object. Figure 2-27 is a UML sequence diagram that shows the overall update process.

Figure 2-27: UML sequence diagram for the update of a business object

Here we can see how the UI calls the Save() method on the business object, which causes the latter to call the DataPortal's Update() method, passing itself as a parameter. As usual, the client-side DataPortal determines whether the server-side DataPortal is running locally or remotely, and then delegates the call to the server-side DataPortal.

The server-side DataPortal then simply calls the DataPortal_Update() method on the business object, so that the object can save its data into the database. At this point, we have two versions of the business object: the original version on the client, and the newly updated version on the application server. However, the best way to view this is to think of the original object as being obsolete and invalid at this point. Only the newly updated version of the object is valid.

Once the update is done, the new version of the business object is returned to the UI; the UI can then continue to interact with the new business object as needed.

| Note | The UI must update any references from the old business object to the newly updated business object at this point. |
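The stale-reference problem is easy to reproduce in process by copying the object on the way in and again on the way out, as remoting would. In this sketch MemberwiseClone() stands in for serialization, and Customer, FakeDatabase, and this DataPortal are hypothetical simplifications rather than the framework's classes.

```csharp
using System;

// Hypothetical stand-in for a table whose key is assigned on insert.
internal static class FakeDatabase
{
    private static int lastId;
    public static int Insert() => ++lastId;
}

public class Customer
{
    public int Id { get; private set; } // 0 until the "database" assigns a key
    public string Name { get; set; }
    public bool IsNew => Id == 0;

    // Save() hands the object to the portal and returns the updated copy.
    public Customer Save() => (Customer)DataPortal.Update(this);

    // Simulates the by-value copy made when the object crosses the network.
    internal Customer CopyForTransport() => (Customer)MemberwiseClone();

    internal void DataPortal_Update()
    {
        if (IsNew) Id = FakeDatabase.Insert(); // key exists only on the server copy
        // else: update the existing row (omitted)
    }
}

public static class DataPortal
{
    public static object Update(Customer obj)
    {
        Customer serverCopy = obj.CopyForTransport(); // client -> server
        serverCopy.DataPortal_Update();
        return serverCopy.CopyForTransport();         // server -> client
    }
}
```

The essential line in UI code is cust = cust.Save();. Any other variable still pointing at the original object keeps seeing Id 0, even though the "database" now has a row for it.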

In a physical n-tier configuration, the business object is automatically passed by value to the server, and the updated version is returned by value to the client. If the server-side DataPortal is running locally, however, we're simply passing object references, and we don't incur any of the overhead of serialization and so forth.

Delete

The final operation, and probably the simplest, is to delete an object from the database, although by the time we're done, we'll actually have two ways of doing this. One way is to retrieve the object from the database, mark it as deleted by calling a Delete() method on the business object, and then call Save() to cause it to update itself to the database (thus actually doing the delete operation). The other way is to simply pass criteria data to the server, where the object is deleted immediately.

This second approach provides superior performance, because we don't need to load the object's data, return it to the client, mark it as deleted, and send it straight back to the server again. Instead, we simply pass the criteria fields to the server, the server deletes the object's data, and we're done. Our framework will support both models, providing you with the flexibility to allow either or both in your object models, as you see fit.

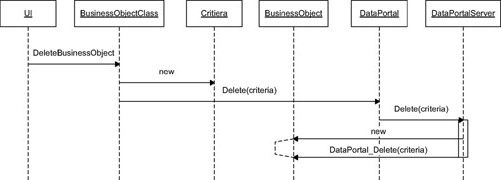

Because we've already looked at Save() , let's look at the second approach, in which the UI simply calls the business object class to request deletion of an object. A Criteria object is created to describe the object to be deleted, and the DataPortal is invoked to do the deletion. Figure 2-28 is a UML diagram that illustrates the process.

Figure 2-28: UML sequence diagram for direct deletion of a business object

Because the data has been deleted at this point, we have nothing to return to the UI, so the overall process remains pretty straightforward. As usual, the client-side DataPortal delegates the call to the server-side DataPortal. The server-side DataPortal creates an instance of our business object and invokes its DataPortal_Delete() method, providing the Criteria object as a parameter. The business logic to do the deletion itself is encapsulated within the business object, along with all the other business logic relating to the object.
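Immediate deletion follows the same reflection pattern as a fetch, except that nothing comes back. In this sketch the Project class, its in-memory Table, and this DataPortal are hypothetical; a real DataPortal_Delete() would issue a delete against the database.

```csharp
using System;
using System.Collections.Generic;
using System.Reflection;

public class Project
{
    // Hypothetical in-memory table standing in for the database.
    internal static readonly HashSet<string> Table = new HashSet<string> { "P1", "P2" };

    private Project() { } // only the portal creates instances

    [Serializable()]
    private class Criteria
    {
        public string Id;
        public Criteria(string id) { Id = id; }
    }

    // Immediate deletion: only the criteria travel to the server.
    public static void DeleteProject(string id) =>
        DataPortal.Delete(new Criteria(id));

    // Invoked via reflection; holds the deletion logic for this object.
    private void DataPortal_Delete(object criteria)
    {
        Table.Remove(((Criteria)criteria).Id);
    }
}

public static class DataPortal
{
    public static void Delete(object criteria)
    {
        Type type = criteria.GetType().DeclaringType;
        object obj = Activator.CreateInstance(type, nonPublic: true);
        type.GetMethod("DataPortal_Delete", BindingFlags.Instance | BindingFlags.NonPublic)
            .Invoke(obj, new[] { criteria });
        // Nothing is returned: there is no object left to send back to the UI.
    }
}
```

The object's data is never loaded or shipped to the client, which is where the performance advantage over delete-then-Save() comes from.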

Table-Based Security

As we discussed earlier in the chapter, many environments include users who aren't part of an integrated Windows domain or Active Directory. In such a case, relying on Windows' integrated security for our application is problematic at best, and we're left to implement our own security scheme. Fortunately, the .NET Framework includes several security concepts, along with the ability to customize them to implement our own security as needed.

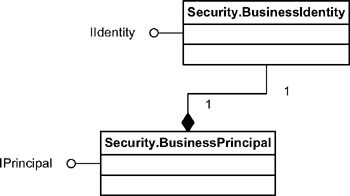

Custom Principal and Identity Objects

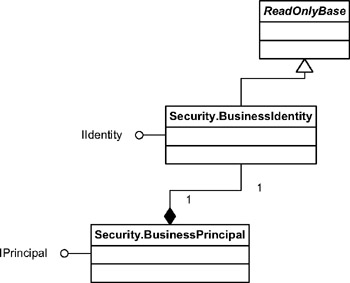

As you may know (and as we'll discuss in detail in the next chapter), the .NET Framework includes a couple of built-in principal and identity objects that support Windows' integrated security or generic security. We can also create our own principal and identity objects by creating classes that implement the IPrincipal and IIdentity interfaces. That's exactly what we'll do to implement our table-based security model, as illustrated in Figure 2-29.

Figure 2-29: Relationship between BusinessPrincipal and BusinessIdentity

What we have here is a containment relationship in which the principal object contains an identity object. The principal object relies on the underlying identity object to obtain the user's identity. In many cases, the user's identity will incorporate the list of groups or roles to which the user belongs.
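The containment relationship can be sketched with the two standard interfaces from System.Security.Principal. This is a hypothetical, minimal version of BusinessPrincipal and BusinessIdentity: the constructor shapes, the "Table" authentication-type label, and the in-memory role list are assumptions for illustration, and the ReadOnlyBase inheritance discussed below is omitted to keep the sketch self-contained.

```csharp
using System;
using System.Collections.Generic;
using System.Security.Principal;

// Hypothetical identity: holds the user's name and the roles the user belongs to.
// (In the framework it will also derive from ReadOnlyBase; omitted here.)
[Serializable]
public class BusinessIdentity : IIdentity
{
    private readonly string _name;
    private readonly List<string> _roles;

    public BusinessIdentity(string name, IEnumerable<string> roles)
    {
        _name = name;
        _roles = new List<string>(roles);
    }

    public string AuthenticationType => "Table"; // assumed label
    public bool IsAuthenticated => _name.Length > 0;
    public string Name => _name;

    public bool IsInRole(string role) => _roles.Contains(role);
}

// Hypothetical principal: contains the identity and relies on it for role checks.
[Serializable]
public class BusinessPrincipal : IPrincipal
{
    private readonly BusinessIdentity _identity;

    public BusinessPrincipal(BusinessIdentity identity) { _identity = identity; }

    public IIdentity Identity => _identity;

    // Delegates to the contained identity, which owns the role list.
    public bool IsInRole(string role) => _identity.IsInRole(role);
}
```

Keeping the role list in the identity means the principal stays a thin wrapper, which matches the containment relationship in the figure.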

The .NET Framework includes GenericPrincipal and GenericIdentity classes that serve much the same purpose. However, by creating our own classes, we can directly incorporate them into our framework. In particular, our BusinessIdentity object will derive from ReadOnlyBase, and we can thus load it with data via the DataPortal mechanism just like any other business object.

Using DataPortal

In our implementation, the identity object will derive from ReadOnlyBase, so it will be a read-only business object that we can retrieve from the database using the normal DataPortal mechanism we discussed earlier in the chapter. This relationship is shown in Figure 2-30.

Figure 2-30: BusinessIdentity is a subclass of ReadOnlyBase.

Our framework will support either this table-based security model or Windows' integrated model, as provided by the .NET Framework. We choose between the two models by changing a setting in the application's configuration file, as we'll see in Chapter 7 when we start to implement our business objects.

If the table-based model is chosen in the configuration file, then the UI must log in to our application before we can retrieve any business objects (other than our identity object) from the DataPortal. In other words, the DataPortal will refuse to operate until the user has been authenticated; once that happens, we'll have a valid identity object that we can pass to the DataPortal. The UI logs in by calling a Login() method that we'll implement in our principal class. This method accepts a user ID and password and verifies them against a table in the database.
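A rough sketch of the login step and the DataPortal's refusal to operate for an unauthenticated user might look like this. Everything here is hypothetical: UsersTable is an in-memory stand-in for the security table, GuardedDataPortal stands in for the real DataPortal entry point, and the BusinessPrincipal and BusinessIdentity classes are compact illustrations rather than the framework's versions (which load the identity through the DataPortal and would never store plain-text passwords).

```csharp
using System;
using System.Collections.Generic;
using System.Security.Principal;
using System.Threading;

// Hypothetical in-memory stand-in for the security table: user ID -> (password, roles).
// Real code would query the database and would not store plain-text passwords.
public static class UsersTable
{
    public static readonly Dictionary<string, (string Password, string[] Roles)> Rows =
        new Dictionary<string, (string Password, string[] Roles)>
        {
            ["jdoe"] = ("secret", new[] { "Supervisor" })
        };
}

// Minimal identity: an empty name means "not authenticated".
public class BusinessIdentity : IIdentity
{
    public BusinessIdentity(string name, string[] roles) { Name = name; Roles = roles; }
    public string Name { get; }
    public string[] Roles { get; }
    public string AuthenticationType => "Table";
    public bool IsAuthenticated => Name.Length > 0;
}

public class BusinessPrincipal : IPrincipal
{
    private readonly BusinessIdentity _identity;
    private BusinessPrincipal(BusinessIdentity identity) { _identity = identity; }

    public IIdentity Identity => _identity;
    public bool IsInRole(string role) => Array.IndexOf(_identity.Roles, role) >= 0;

    // Verifies the credentials against the table and installs the resulting
    // principal (authenticated or not) as the thread's security context.
    public static void Login(string userId, string password)
    {
        BusinessIdentity identity =
            UsersTable.Rows.TryGetValue(userId, out var row) && row.Password == password
                ? new BusinessIdentity(userId, row.Roles)
                : new BusinessIdentity("", Array.Empty<string>());
        Thread.CurrentPrincipal = new BusinessPrincipal(identity);
    }
}

// Hypothetical guard at the DataPortal entry point.
public static class GuardedDataPortal
{
    public static string Fetch(string objectName)
    {
        IPrincipal user = Thread.CurrentPrincipal;
        if (user == null || user.Identity == null || !user.Identity.IsAuthenticated)
            throw new InvalidOperationException("User must log in before using the DataPortal");
        return "fetched " + objectName + " for " + user.Identity.Name;
    }
}
```

A failed login still installs a principal, just an unauthenticated one, so the guard can make a single IsAuthenticated check instead of special-casing a missing principal.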

| Note | The model we're implementing in this book is deliberately simple. This makes it easy to adapt to your specific environment, whether that's table-based, encrypted, LDAP-based, or any other back-end store for user ID and password information. |

Integration with Our Application

Once the login process is complete, the main UI thread will have our custom principal and identity objects as its security context. From that point forward, the UI code and our business object code can make use of the thread's security context to check the user's identity and roles, just as we would if we were using Windows' integrated security. Our custom security objects integrate directly into the .NET Framework, allowing us to employ normal .NET programming techniques.
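Because the check goes through the standard IPrincipal interface, code that consults the thread's security context doesn't need to know which principal class is installed. The sketch below assumes a hypothetical ProjectRules class and a hypothetical "ProjectManager" role; it uses the Framework's GenericPrincipal in its test only to keep things short, and the same rule would work unchanged with our custom principal or a WindowsPrincipal.

```csharp
using System;
using System.Security.Principal;
using System.Threading;

// Hypothetical authorization rule. It only talks to IPrincipal/IIdentity, so it behaves
// the same whether the thread's principal is a custom BusinessPrincipal, a
// GenericPrincipal, or a WindowsPrincipal.
public static class ProjectRules
{
    public static bool CanEditProject()
    {
        IPrincipal user = Thread.CurrentPrincipal;
        return user != null
            && user.Identity != null
            && user.Identity.IsAuthenticated
            && user.IsInRole("ProjectManager");
    }
}
```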

Additionally, the principal and identity objects will be passed from the client-side DataPortal to the server-side DataPortal any time we create, retrieve, update, or delete objects. This allows the server-side DataPortal and our server-side objects all to run within the same security context as the UI and client-side business objects. Ultimately, we have a seamless security context for our application code, regardless of whether it's running on the client or server.
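The flow of the security context can be sketched as follows: the client side captures the current principal and sends it along with the criteria, and the server side installs it on its own thread for the duration of the call. PortalRequest, ClientPortal, and ServerPortal are hypothetical names, and the network hop (where the request would be serialized) is simulated by a direct method call, so treat this as an illustration of the idea rather than the framework's mechanism.

```csharp
using System;
using System.Security.Principal;
using System.Threading;

// Hypothetical message carrying the caller's principal alongside the criteria.
[Serializable]
public class PortalRequest
{
    public PortalRequest(object criteria, IPrincipal principal)
    {
        Criteria = criteria;
        Principal = principal;
    }
    public object Criteria { get; }
    public IPrincipal Principal { get; }
}

public static class ServerPortal
{
    // Installs the caller's principal for the duration of the call,
    // then restores whatever principal the server thread had before.
    public static string Fetch(PortalRequest request)
    {
        IPrincipal previous = Thread.CurrentPrincipal;
        Thread.CurrentPrincipal = request.Principal;
        try
        {
            // Server-side business code now sees the same user as the client.
            return Thread.CurrentPrincipal.Identity.Name;
        }
        finally
        {
            Thread.CurrentPrincipal = previous;
        }
    }
}

public static class ClientPortal
{
    // In a real deployment the request would be serialized and sent over the network.
    public static string Fetch(object criteria) =>
        ServerPortal.Fetch(new PortalRequest(criteria, Thread.CurrentPrincipal));
}
```

Restoring the previous principal in a finally block keeps one caller's identity from leaking into the next request handled on the same server thread.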

