Managing Business Logic


At this point, you should have a good understanding of logical and physical architectures, and we've seen how a 5-tier logical architecture can be configured into various n-tier physical architectures. In one way or another all of these tiers will use or interact with our application's data. That's obviously the case for the data-management and data-access tiers, but the business-logic tier must validate, calculate, and manipulate data; the UI transfers data between the business and presentation tiers (often performing formatting or using the data to make navigational choices); and the presentation tier displays data to the user and collects new data as it's entered.

Similarly, it would be nice if all of our business logic would exist in the business-logic tier, but in reality this is virtually impossible to achieve. In a web-based UI, we often include validation logic in the presentation tier, so that the user gets a more interactive experience in the browser. Unfortunately, we can't rely on any validation that's done in the web browser, because it's too easy for a malicious user to bypass that validation. Thus any validation done in the browser must be rechecked in the business-logic tier as well.

Similarly, most databases enforce referential integrity, and often some other rules, too. Furthermore, the data-access tier will very often include business logic to decide when and how data should be stored or retrieved from databases and other data sources. In almost any application, to a greater or a lesser extent, business logic gets scattered across all the tiers.

There's one key truth here that's important: for each piece of application data, there's a fixed set of business logic associated with that data. If the application is to function properly, we must apply the business logic to that data at least once. Why "at least"? Well, in most applications, some of the business logic is applied more than once. For example, we can apply a validation rule in the presentation tier and then reapply it in the UI tier or business-logic tier before the data is sent to the database for storage. In some cases, the database will include code to recheck the value as well.

Let's look at some of the more common options. We'll start with three popular (but flawed) approaches, and then discuss a compromise solution that's enabled through the use of distributed objects such as the ones we'll support in the framework we'll create later in the book.

Potential Business Logic Locations

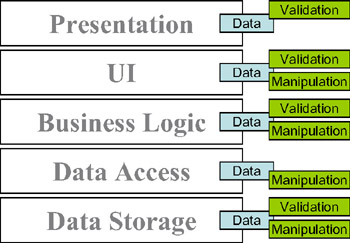

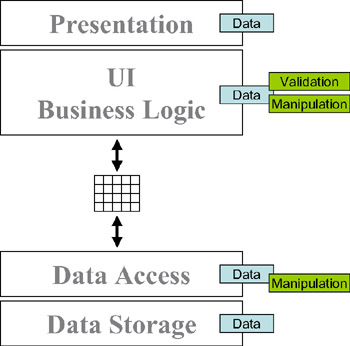

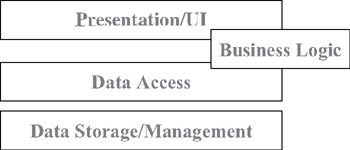

Figure 1-9 illustrates common locations for validation and manipulation business logic in a typical application. Most applications have the same logic in at least a couple of these locations.

Figure 1-9: Common locations for business logic in applications

We put business logic in a web-presentation tier to give the user a more interactive experience, and we put it into a Windows UI for the same reason. We recheck the business logic in the web UI (on the web server) because we don't trust the browser. And the database administrator puts the logic into the database (via stored procedures) because they don't trust any application developers!

The result of all this validation is a lot of duplicated code, all of which has to be debugged, maintained, and somehow kept in sync as the business needs (and thus logic) change over time. In the real world, the logic is almost never really kept in sync, and so we're constantly debugging and maintaining our code in a near-futile effort to make all of these redundant bits of logic agree with each other.

One solution is to force all of the logic into a single tier, thereby making the other tiers as "dumb" as possible. There are various approaches to this, although (as we'll see) none of them provide an optimal solution.

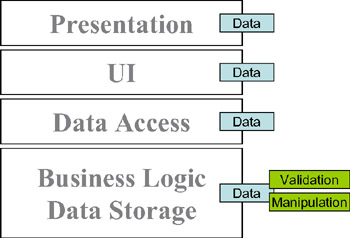

Business Logic in the Data-Management Tier

The classic approach is to put all logic into the database as the single, central repository. The presentation and UI then allow the user to enter absolutely anything (because any validation would be redundant), and the business-logic tier is essentially gone: it's merged into the database. The data-access tier does nothing but move the data into and out of the database, as shown in Figure 1-10.

Figure 1-10: Validation and business logic in the data-management tier

The advantage of this approach is that the logic is centralized, but the drawbacks are plentiful. For starters, the user experience is totally noninteractive. Users can't get any results, or even confirmation that their data is valid, without round-tripping the data to the database for processing. The database server becomes a performance bottleneck, because it's the only thing doing any actual work, and we have to write all of our business logic in SQL!

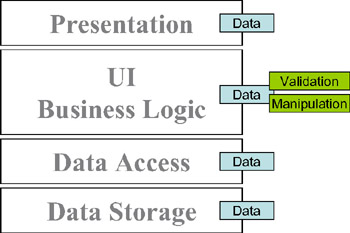

Business Logic in the UI Tier

Another common approach is to put all of the business logic into the UI. The data is validated and manipulated in the UI, and the data-storage tier just stores the data. This approach, as shown in Figure 1-11, is very common in both Windows and web environments, and has the advantage that the business logic is centralized into a single tier (and of course we can write our business logic in a language such as C# or VB .NET).

Figure 1-11: Business logic spread between the presentation and business-logic tiers

Unfortunately, in practice, the business logic ends up being scattered throughout the UI and intermixed with the UI code itself, thereby decreasing readability and making maintenance more difficult. Even more importantly, business logic in one form or page isn't reusable when we create subsequent pages or forms that use the same data. Furthermore, in a web environment, this architecture also leads to a totally noninteractive user experience, because no validation can occur in the browser. The user must transmit his data to the web server for any validation or manipulation to take place.

| Note | ASP.NET Web Forms' validation controls at least allow us to perform basic data validation in the UI, with that validation automatically extended to the browser by the Web Forms technology itself. Though not a total solution, this is a powerful feature that does help. |

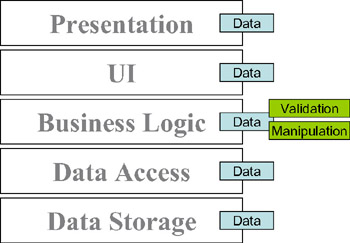

Business Logic in the Middle (Business and Data-Access) Tier

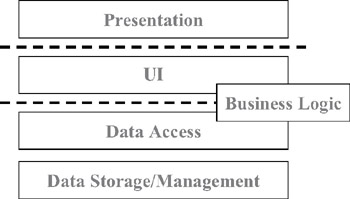

Still another option is the classic UNIX client-server approach, whereby the business logic and data-access tiers are merged, keeping the presentation, UI, and data-storage tiers as "dumb" as possible (see Figure 1-12).

Figure 1-12: Business logic spread between the business-logic and data-access tiers

Unfortunately, once again, this approach falls afoul of the noninteractive user-experience problem: We must round-trip the data to the data-access tier for any validation or manipulation. This is especially problematic if the data-access tier is running on a separate application server, because then we're faced with network latency and contention issues, too. Also, the central application server can become a performance bottleneck, because it's the only machine doing any work for all the users of the application.

Business Logic in a Distributed Business Tier

We wish this book included the secret that allowed us to write all our logic in one central location, thereby avoiding all of these awkward issues. Unfortunately, that's not possible with today's technology: putting the business logic in the UI (or presentation) tier, the data-access tier, or the data-storage tier is problematic, for all the reasons given earlier. But we need to do something about it, so what have we got left?

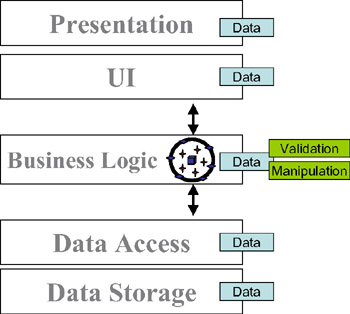

What's left is the possibility of centralizing the business logic in a business-logic tier that's accessible to the UI tier so that we can create the most interactive user experience possible. Also, the business-logic tier needs to be able to interact efficiently with the data-access tier in order to achieve the best performance when interacting with the database (or other data source), as shown in Figure 1-13.

Figure 1-13: Business logic centralized in the business-logic tier

Ideally, this business logic will run on the same machine as the UI code when interacting with the user, but on the same machine as the data-access code when interacting with the database. (As we discussed earlier, all of this could be on one machine or a number of different machines, depending on your physical architecture.) It must provide a friendly interface that the UI developer can use to invoke any validation and manipulation logic, and it must also work efficiently with the data-access tier to get data in and out of storage.

The tools for addressing this seemingly intractable set of requirements are business objects that encapsulate our data along with its related business logic. It turns out that a properly constructed business object can move around the network from machine to machine with almost no effort on our part. The .NET Framework itself handles the details, and we can focus on the business logic and data.

By properly designing and implementing our business objects, we allow the .NET Framework to pass our objects across the network by value, thereby automatically copying them from one machine to another. This means that with little effort, we can have our business logic and business data move to the machine where the UI tier is running, and then shift to the machine where the data-access tier is running when data access is required.

At the same time, if we're running the UI tier and data-access tier on the same machine, then the .NET Framework doesn't move or copy our business objects. They're used directly by both tiers with no performance cost or extra overhead. We don't have to do anything to make this happen, either: .NET automatically detects that the object doesn't need to be copied or moved, and thus takes no extra action.

The business-logic tier becomes portable, flexible, and mobile, and adapts to the physical environment in which we deploy the application. Due to this, we're able to support a variety of physical n-tier architectures with one code base, whereby our business objects contain no extra code to support the various possible deployment scenarios. What little code we need to implement to support the movement of our objects from machine to machine will be encapsulated in our framework, leaving the business developer to focus purely on the development of business logic.

Business Objects

Having decided to use business objects and take advantage of .NET's ability to move objects around the network automatically, we need to take a little time to discuss business objects in more detail. We need to see exactly what they are, and how they can help us to centralize the business logic pertaining to our data.

The primary goal when designing any kind of software object is to create an abstract representation of some entity or concept. In ADO.NET, for example, a DataTable object represents a tabular set of data. DataTables provide an abstract and consistent mechanism by which we can work with any tabular data. Likewise, a Windows Forms TextBox control is an object that represents the concept of displaying and entering data. From our application's perspective, we don't need to have any understanding of how the control is rendered on the screen, or how the user interacts with it. We're just presented with an object that includes a Text property and a handful of interesting events.

Key to successful object design is the concept of encapsulation. This means that an object is a black box: It contains data and logic, but as the user of an object, we don't know what data it holds or how its logic actually works. All we can do is interact with the object.

| Note | Properly designed objects encapsulate both data and any logic related to that data. |

If objects are abstract representations of entities or concepts that encapsulate both data and its related logic, what then are business objects?

| Note | Business objects are different from regular objects only in terms of what they represent. |

When we create object-oriented applications, we're addressing problems of one sort or another. In the course of doing so, we use a variety of different objects. Though some of these will have no direct connection with the problem at hand (DataTable and TextBox objects, for example, are just abstract representations of computer concepts), there will be others that are closely related to the area or domain in which we're working. If the objects are related to the business for which we're developing an application, then they're business objects.

For instance, if we're creating an order-entry system, our business domain will include things such as customers, orders, and products. Each of these will likely become business objects within our order-entry application. The Order object, for example, will provide an abstract representation of the order being placed by a customer.

| Note | Business objects provide an abstract representation of entities or concepts that are part of our business or problem domain. |

Business Objects as Smart Data

We've already discussed the drawbacks of putting business logic into the UI tier, but we haven't thoroughly discussed the drawback of keeping our data in a generic representation such as a DataSet object. The data in a DataSet (or an array, or an XML document) is unintelligent, unprotected, and generally unsafe. There's nothing to prevent us from putting invalid data into any of these containers, and there's nothing to ensure that the business logic behind one form in our application will interact with the data in the same way as the business logic behind another form.

A DataSet or an XML document might ensure that we don't put text where a number is required, or that we don't put a number where a date is required. At best, it might enforce some basic relational-integrity rules. However, there's no way to ensure that the values match other criteria, or to ensure that calculations or other processing is done properly against the data, without involving other objects. The data in a DataSet, array, or XML document isn't self-aware: it's not able to apply business rules or handle business manipulation or processing of the data.
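To make this limitation concrete, here is a minimal sketch using an ADO.NET DataTable. The table name, the `Quantity` column, and the idea that a quantity must be positive are assumptions invented for illustration; they don't come from any application discussed so far.

```csharp
using System;
using System.Data;

public static class DataTableDemo
{
    // Builds a one-column table; "OrderLines" and "Quantity" are hypothetical names.
    public static DataTable CreateOrderLines()
    {
        DataTable table = new DataTable("OrderLines");
        table.Columns.Add("Quantity", typeof(int));
        return table;
    }

    // Returns true if the table accepted the value without complaint.
    public static bool TryStore(DataTable table, object value)
    {
        try
        {
            DataRow row = table.NewRow();
            row["Quantity"] = value;
            table.Rows.Add(row);
            return true;
        }
        catch (Exception)
        {
            // The column's type check failed; the table knows nothing beyond that.
            return false;
        }
    }

    public static void Main()
    {
        DataTable table = CreateOrderLines();
        Console.WriteLine(TryStore(table, "abc")); // text in a numeric column is rejected
        Console.WriteLine(TryStore(table, -5));    // a negative quantity has the right type, so it gets in
    }
}
```

The container catches the type mismatch but has no way to know that a negative quantity is nonsense for this business. That knowledge has to live in some other code.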

The data in a business object, however, is what I like to call "smart data." The object not only contains the data, but also includes all the business logic that goes along with that data. Any attempt to work with the data must go through this business logic. In this arrangement, we have much greater assurance that business rules, manipulation, calculations, and other processing will be executed consistently everywhere in our application. In a sense, the data has become self-aware, and can protect itself against incorrect usage.

In the end, an object doesn't care whether it's used by a Windows Forms UI, a batch-processing routine, or a web service. The code using the object can do as it pleases; the object itself will ensure that all business rules are obeyed at all times.

Contrast this with the DataSet or an XML document, in which the business logic doesn't reside in the data container, but somewhere else, typically a Windows form or a web form. If multiple forms or pages use this DataSet, we have no assurance that the business logic is applied consistently. Even if we adopt a standard that says that the UI developer must invoke methods from a centralized class to interact with the data, there's nothing preventing them from using the DataSet directly. This may happen accidentally, or because it was simply easier or faster to use the DataSet than to go through some centralized routine.

| Note | With business objects, there's no way to bypass the business logic. The only way to the data is through the object, and the object always enforces the rules. |

So, a business object that represents an invoice will include not only the data pertaining to the invoice, but also the logic to calculate taxes and amounts due. The object should understand how to post itself to a ledger, and how to perform any other accounting tasks that are required. Rather than passing raw invoice data around, and having our business logic scattered throughout the application, we're able to pass an Invoice object around. Our entire application can share not only the data, but also its associated logic. Smart data through objects can dramatically increase our ability to reuse code, and can decrease software maintenance costs.
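As a rough sketch of what "smart data" might look like for the invoice just described, consider the following class. The member names (`AddLine`, `Subtotal`, `Tax`, `AmountDue`) and the flat 10 percent tax rate are assumptions chosen to keep the example short; a real invoice would have far richer rules, and posting to a ledger is left out entirely.

```csharp
using System;

public class Invoice
{
    // Hypothetical flat tax rate, used only for illustration.
    private const decimal TaxRate = 0.10m;

    private decimal _subtotal = 0m;

    public decimal Subtotal { get { return _subtotal; } }

    // The tax calculation travels with the data it applies to.
    public decimal Tax { get { return Math.Round(_subtotal * TaxRate, 2); } }

    public decimal AmountDue { get { return _subtotal + Tax; } }

    // The only way to change the subtotal is through this validated method.
    public void AddLine(decimal unitPrice, int quantity)
    {
        if (unitPrice < 0m)
            throw new ArgumentException("Price cannot be negative.", "unitPrice");
        if (quantity <= 0)
            throw new ArgumentException("Quantity must be positive.", "quantity");
        _subtotal += unitPrice * quantity;
    }
}

public static class InvoiceDemo
{
    public static void Main()
    {
        Invoice invoice = new Invoice();
        invoice.AddLine(20.00m, 3);
        Console.WriteLine(invoice.AmountDue);
    }
}
```

Any form, page, or batch routine that receives this object gets the same tax calculation and the same validation, because there is no other route to the data.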

Anatomy of a Business Object

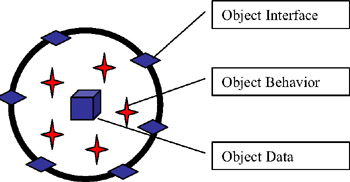

Putting all of these pieces together, we get an object that has an interface (a set of properties and methods), some implementation code (the business logic behind those properties and methods), and state (the data). This is illustrated in Figure 1-14.

Figure 1-14: Business object composed of state, implementation, and interface

The hiding of the data and the implementation code behind the interface are keys to the successful creation of a business object. If we allow the users of our object to "see inside" it, they will be tempted to cheat, and to interact with our logic or data in unpredictable ways. This danger is the reason why it will be important that we take care when using the public keyword as we build our classes.

Any property, method, event, or variable marked as public will be available to the users of objects created from the class. For example, we might create a simple class such as the following:

    using System;

    public class Project
    {
        // State is private so that callers cannot bypass the rules below.
        Guid _id = Guid.NewGuid();
        string _name = string.Empty;

        // Read-only: the ID is assigned once, when the object is created.
        public Guid ID
        {
            get { return _id; }
        }

        public string Name
        {
            get { return _name; }
            set
            {
                // Business rule: a project name is at most 50 characters.
                if (value.Length > 50)
                    throw new Exception("Name too long");
                _name = value;
            }
        }
    }

This defines a business object that represents a project of some sort. All we know at the moment is that these projects have an ID value and a name. Notice, though, that the variables containing this data are private: we don't want the users of our object to be able to alter or access them directly. If they were public, the values could be changed without our knowledge or permission. (The _name variable could be given a value that's longer than the maximum of 50 characters, for example.)

The properties, on the other hand, are public. They provide a controlled access point to our object. The ID property is read-only, so the users of our object can't change it. The Name property allows its value to be changed, but enforces a business rule by ensuring that the length of the new value doesn't exceed 50 characters.
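The difference between a public variable and a public property can be seen in a short sketch. Both class names here (`UnprotectedProject` and `GuardedProject`) and the `TrySetName` helper are hypothetical, introduced only to put the two styles side by side; the guarded class restates just the Name rule from the Project class.

```csharp
using System;

// Hypothetical counterexample: the variable is public, so no rule can be enforced.
public class UnprotectedProject
{
    public string Name = string.Empty;
}

// The guarded version: the only way to the data is through the property.
public class GuardedProject
{
    private string _name = string.Empty;

    public string Name
    {
        get { return _name; }
        set
        {
            if (value.Length > 50)
                throw new Exception("Name too long");
            _name = value;
        }
    }
}

public static class EncapsulationDemo
{
    // Returns true if the object accepted the new name.
    public static bool TrySetName(GuardedProject project, string name)
    {
        try { project.Name = name; return true; }
        catch (Exception) { return false; }
    }

    public static void Main()
    {
        string tooLong = new string('x', 51);

        UnprotectedProject loose = new UnprotectedProject();
        loose.Name = tooLong; // nothing stops this assignment

        GuardedProject guarded = new GuardedProject();
        Console.WriteLine(TrySetName(guarded, tooLong)); // the setter refuses the value
        Console.WriteLine(guarded.Name.Length);          // the stored name is unchanged
    }
}
```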

| Note | None of these concepts are unique to business objects; they're common to all objects, and are central to object-oriented design and programming. |

Distributed Objects

Unfortunately, directly applying the kind of object-oriented design and programming we've been talking about so far is often quite difficult in today's complex computing environments. Object-oriented programs are almost always designed with the assumption that all the objects in an application can interact with each other with no performance penalty. This is true when all the objects are running in the same process on the same computer, but it's not at all true when the objects might be running in different processes, or, worse still, on different computers.

Earlier in this chapter, we discussed various physical architectures in which different parts of our application might run on different machines. With a high-scalability intelligent-client architecture, for example, we'll have a client, an application server, and a data server. With a high-security web-client architecture, we'll have a client, a web server, an application server, and a data server. Parts of our application will run on each of these machines, interacting with each other as needed.

In these distributed architectures, we can't use a straightforward object-oriented design, because any communication between classic fine-grained objects on one machine and similar objects on another machine will incur network latency and overhead. This translates into a performance problem that we simply can't ignore. To overcome this problem, most distributed applications haven't used object-oriented designs. Instead, they consist of a set of procedural code running on each machine, with the data kept in a DataSet, an array, or an XML document that's passed around from machine to machine.

This isn't to say that object-oriented design and programming is irrelevant in distributed environments, just that it becomes complicated. To minimize the complexity, most distributed applications are object-oriented within a tier, but between tiers they follow a procedural or service-based model. The end result is that the application as a whole is neither object-oriented nor procedural, but a blend of both.

Perhaps the most common architecture for such applications is to have the data-access tier retrieve the data from the database into a DataSet. The DataSet is then returned to the client (or the web server), where we have code in our forms or pages that interacts with the DataSet directly as shown in Figure 1-15.

Figure 1-15: Passing a data table between the business-logic and data-access tiers

This approach has the flaws in terms of maintenance and code reuse that we've talked about, but the fact is that it gives pretty good performance in most cases. Also, it doesn't hurt that most programmers are pretty familiar with the idea of writing code to manipulate a DataSet, so the techniques involved are well understood, thus speeding up development.

If we do decide to stick with an object-oriented approach, we have to be quite careful. It's all too easy to compromise our object-oriented design by taking the data out of the objects running on one machine, thereby sending the raw data across the network, and allowing other objects to use that data outside the context of our objects and business logic. Distributed objects are all about sending smart data (objects) from one machine to another, rather than sending raw data and hoping that the business logic on each machine is being kept in sync.

Through its remoting, serialization, and autodeployment technologies, the .NET Framework contains direct support for the ability to have our objects move from one machine to another. (We'll discuss these technologies in more detail in Chapter 3, and make use of them throughout the remainder of the book.) Given this ability, we can have our data-access tier (running on an application server) create a business object and load it with data from the database. We can then send that business object to the client machine (or web server), where the UI code can use the object as shown in Figure 1-16.

Figure 1-16: Using a business object to centralize business logic

In this architecture, we're sending smart data to the client rather than raw data, so the UI code can use the same business logic as the data-access code. This reduces maintenance, because we're not writing some business logic in the data-access tier, and some other business logic in the UI tier. Instead, we've consolidated all of the business logic into a real, separate tier composed of business objects. These business objects will move across the network just like the DataSet did earlier, but they'll include the data and its related business logic, something the DataSet can't offer.

| Note | In addition, our business objects will move across the network more efficiently than the DataSet. We'll be using a binary transfer scheme that transfers data in about 30 percent of the size of data transferred using the DataSet. Also, our objects will contain far less metadata than the DataSet, further reducing the number of bytes transferred across the network. |

Effectively, we're sharing the business-logic tier between the machine running the data-access tier and the machine running the UI tier. As long as we have support for moving data and logic from machine to machine, this is an ideal solution: it provides code reuse, low maintenance costs, and high performance.

A New Logical Architecture

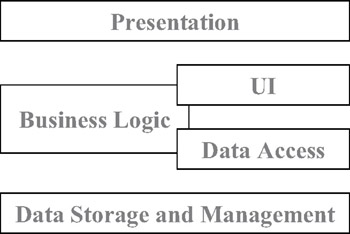

Sharing the business-logic tier between the data-access tier and the UI tier opens up a new way to view our logical architecture. Though the business logic tier remains a separate concept, it's directly used by and tied into both the UI and data-access tiers as shown in Figure 1-17.

Figure 1-17: The business-logic tier tied to the UI and data-access tiers

The UI tier can interact directly with the objects in the business-logic tier, thereby relying on them to perform all validation, manipulation, and other processing of the data. Likewise, the data-access tier can interact with the objects as the data is retrieved or stored.

If all the tiers are running on a single machine (such as an intelligent client), then these parts will run in a single process and interact with each other with no network or cross-processing overhead. In many of our other physical configurations, the business-logic tier will run on both the client and the application server as shown in Figures 1-18 and 1-19.

Figure 1-18: Business logic shared between the UI and data-access tiers

Figure 1-19: Business logic shared between the UI and data-access tiers

Local, Anchored, and Unanchored Objects

Normally, we think of objects as being part of a single application, running on a single machine in a single process. In a distributed application, we need to broaden our perspective. Some of our objects might only run in a single process on a single machine. Others may run on one machine, but may be called by code running on another machine. Others still may move from machine to machine.

Local Objects

By default, .NET objects are local. This means that ordinary objects aren't accessible from outside the process in which they were created. It isn't possible to pass them to another process or another machine (a procedure known as marshaling), either by value or by reference. Because this is the default behavior for all .NET objects, we must take extra steps to allow any of our objects to be available to another process or machine.

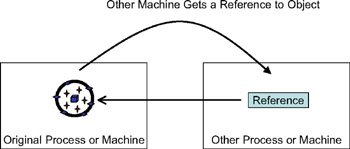

Anchored Objects

In many technologies, including COM, objects are always passed by reference. This means that when you "pass" an object from one machine or process to another, what actually happens is that the object remains in the original process, and the other process or machine merely gets a pointer, or reference, back to the object, as shown in Figure 1-20.

Figure 1-20: Calling an object by reference

By using this reference, the other machine can interact with the object. Because the object is still on the original machine, however, any property or method calls are sent across the network, and the results are returned back across the network. This scheme is only useful if we design our object so that we can use it with very few method calls; just one is ideal! The recommended designs for MTS or COM+ objects call for a single method on the object that does all the work for precisely this reason, thereby sacrificing "proper" design in order to reduce latency.

This type of object is stuck, or anchored, on the original machine or process where it was created. An anchored object never moves; it's accessed via references. In .NET, we create an anchored object by having it inherit from MarshalByRefObject:

    using System;

    // Inheriting from MarshalByRefObject anchors instances of this class.
    public class MyAnchoredClass : MarshalByRefObject
    {
    }

From this point on, the .NET Framework takes care of the details. We can use remoting to pass an object of this type to another process or machine as a parameter to a method call, for example, or to return it as the result of a function.
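Because every call to an anchored object may cross the network, its interface tends to be coarse-grained. The following sketch shows one way such a class might look. The `OrderProcessor` class and its `SubmitOrder` method are hypothetical names, and the code that would host the object for remote callers is deliberately omitted; the example simply runs the object in-process.

```csharp
using System;

// Anchored: instances stay where they were created, and remote callers get a reference.
public class OrderProcessor : MarshalByRefObject
{
    // One coarse-grained call carries everything the server needs,
    // so a remote caller would pay for a single round trip.
    public decimal SubmitOrder(string customerName, decimal[] lineAmounts)
    {
        if (customerName == null || customerName.Length == 0)
            throw new ArgumentException("Customer name is required.", "customerName");

        decimal total = 0m;
        foreach (decimal amount in lineAmounts)
            total += amount;
        return total;
    }
}

public static class AnchoredDemo
{
    public static void Main()
    {
        // Created and called locally here; remote hosting is not shown.
        OrderProcessor processor = new OrderProcessor();
        Console.WriteLine(processor.SubmitOrder("Contoso", new decimal[] { 10m, 15.5m }));
    }
}
```

A design with separate `SetCustomer`, `AddLine`, and `Commit` calls would read more naturally, but each of those calls would be a separate trip across the network when the object is remote.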

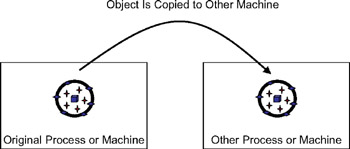

Unanchored Objects

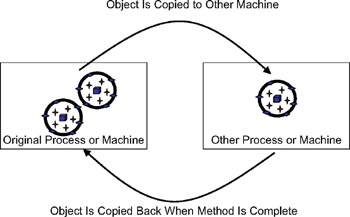

The concept of distributed objects relies on the idea that we can pass an object from one process to another, or from one machine to another, by value. This means that the object is physically copied from the original process or machine to the other process or machine as shown in Figure 1-21.

Figure 1-21: Passing a physical copy of an object across the network

Because the other machine gets a copy of the object, it can interact with the object locally. This means that there's effectively no performance overhead involved in calling properties or methods on the object; the only cost was in copying the object across the network in the first place.

| Note | One caveat here is that transferring a large object across the network can cause a performance problem. As we all know, returning a DataSet that contains a great deal of data can take a long time. This is true of all unanchored objects, including our business objects. We need to be careful in our application design in order to ensure that we avoid retrieving very large data sets. |

Objects that can move from process to process or from machine to machine are unanchored. Examples of unanchored objects include the DataSet, and the business objects we'll create in this book. Unanchored objects aren't stuck in a single place, but can move to where they're most needed. To create one in .NET, we use the [Serializable()] attribute, or we implement the ISerializable interface. We'll discuss this further in Chapter 2, but the following illustrates the start of a class that's unanchored:

    using System;

    // The attribute marks the class as serializable, and therefore unanchored.
    [Serializable()]
    public class MyUnanchoredClass
    {
    }

Again, the .NET Framework takes care of the details, so we can simply pass an object of this type as a parameter to a method call or as the return value from a function. The object will be copied from the original machine to the machine where the method is running.
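The copy that the framework makes can be imitated in a single process by serializing an object to a byte stream and reading it back. The sketch below does this with BinaryFormatter, and it assumes the .NET Framework: BinaryFormatter is obsolete in later .NET releases and should not be used on untrusted data. The `Contact` class and the `CopyByValue` helper are hypothetical names.

```csharp
using System;
using System.IO;
using System.Runtime.Serialization.Formatters.Binary;

[Serializable()]
public class Contact
{
    private string _name = string.Empty;

    public string Name
    {
        get { return _name; }
        set { _name = value; }
    }
}

public static class UnanchoredDemo
{
    // Round-trips the object through a byte stream, which is roughly what
    // happens when an unanchored object crosses a process boundary.
    public static Contact CopyByValue(Contact original)
    {
        BinaryFormatter formatter = new BinaryFormatter();
        using (MemoryStream buffer = new MemoryStream())
        {
            formatter.Serialize(buffer, original);
            buffer.Position = 0;
            return (Contact)formatter.Deserialize(buffer);
        }
    }

    public static void Main()
    {
        Contact original = new Contact();
        original.Name = "Ann";

        Contact copy = CopyByValue(original);
        copy.Name = "Bob"; // changes the copy only

        Console.WriteLine(original.Name);
        Console.WriteLine(Object.ReferenceEquals(original, copy));
    }
}
```

The copy starts with the same state as the original but is a separate object, so later changes to one are not seen by the other.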

When to Use Which Mechanism

The .NET Framework supports all three of the mechanisms we just discussed, so that we can choose to create our objects as local, anchored, or unanchored, depending on the requirements of our design. As you might guess, there are good reasons for each approach.

Windows Forms and Web Forms objects are all local: they're inaccessible from outside the processes in which they were created. The assumption is that we don't want other applications just reaching into our programs and manipulating the UI objects.

Anchored objects are important, because we can guarantee that they will always run on a specific machine. If we write an object that interacts with a database, we'll want to ensure that the object always runs on a machine that has access to the database. Because of this, we'll typically use anchored objects on our application server.

Many of our business objects, on the other hand, will be more useful if they can move from the application server to a client or web server, as needed. By creating our business objects as unanchored objects, we can pass smart data from machine to machine, thereby reusing our business logic anywhere we send the business data.

Typically, we use anchored and unanchored schemes in concert. We'll use an anchored object on the application server in order to ensure that we can call methods that run on that server. Then we'll pass unanchored objects as parameters to those methods, which will cause those objects to move from the client to the server. Some of the anchored server-side methods will return unanchored objects as results, in which case the unanchored object will move from the server back to the client.
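The shape of this arrangement can be sketched with two small classes. Everything here is illustrative: `Customer`, `CustomerServer`, `Fetch`, and `Save` are hypothetical names, the database work is replaced by placeholders, and the remoting configuration that would actually place the two objects on different machines is not shown.

```csharp
using System;

// Unanchored: copied to whichever machine needs it.
[Serializable()]
public class Customer
{
    private int _id;
    private string _name = string.Empty;

    public Customer(int id) { _id = id; }

    public int ID { get { return _id; } }

    public string Name
    {
        get { return _name; }
        set
        {
            if (value == null || value.Length == 0)
                throw new ArgumentException("Name is required.");
            _name = value;
        }
    }
}

// Anchored: always runs on the machine that can reach the database.
public class CustomerServer : MarshalByRefObject
{
    // Returns an unanchored object, which would travel back to the caller.
    public Customer Fetch(int id)
    {
        Customer customer = new Customer(id);
        customer.Name = "Customer " + id; // placeholder for a real database read
        return customer;
    }

    // Accepts an unanchored object, which would travel from the caller to here.
    public Customer Save(Customer customer)
    {
        // A real implementation would write to the database at this point.
        return customer;
    }
}

public static class ConcertDemo
{
    public static void Main()
    {
        CustomerServer server = new CustomerServer();
        Customer customer = server.Fetch(42);
        customer.Name = "Renamed on the client"; // the business rule runs wherever the object is
        customer = server.Save(customer);
        Console.WriteLine(customer.Name);
    }
}
```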

Passing Unanchored Objects by Reference

There's a piece of terminology here that can get confusing. So far, we've loosely associated anchored objects with the concept of "passing by reference," and unanchored objects as being "passed by value." Intuitively, this makes sense, because anchored objects provide a reference, though unanchored objects provide the actual object (and its values). However, the terms "by reference" and "by value" have come to mean other things over the years .

The original idea of passing a value "by reference" was that there would be just one set of data (one object), and any code could get a reference to that single entity. Any changes made to that entity by any code would therefore be immediately visible to any other code.

The original idea of passing a value "by value" was that a copy of the original value would be made. Any code could get a copy of the original value, but any changes made to that copy weren't reflected in the original value. That makes sense, because the changes were made to a copy, not to the original value.

In distributed applications, things get a little more complicated, but the previous definitions remain true: We can pass an object by reference so that all machines have a reference to the same object on some server. And we can pass an object by value, so that a copy of the object is made. So far, so good. However, what happens if we mark an object as [Serializable()] (that is, mark it as an unanchored object), and then intentionally pass it by reference? It turns out that the object is passed by value, but the .NET Framework attempts to give us the illusion that it was passed by reference.

To be more specific, in this scenario the object is copied across the network just as if it were being passed by value. The difference is that the object is then copied back to the calling code when the method is complete, and our reference to the original object is replaced with a reference to this new version, as shown in Figure 1-22.

Figure 1-22: Passing a copy of the object to the server and getting a copy back

This is potentially very dangerous, since other references to the original object continue to point to that original object; only our particular reference is updated. We can potentially end up with two different versions of the same object on our machine, with some of our references pointing to the new one, and some to the old one.

| Note | If we pass an unanchored object by reference, we must always make sure to update all our references to use the new version of the object when the method call is complete. |
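The stale-reference problem can be simulated in a single process. The sketch below is an illustration under stated assumptions, not real remoting: the hypothetical FakeServer.DoubleQuantity method mimics the copy-in, copy-out behavior described above, and the hypothetical Order.Copy method stands in for serialization across the network.

```csharp
using System;

[Serializable]
public class Order
{
    public int Quantity;

    // Stand-in for serialization: produces an independent copy of the object.
    public Order Copy()
    {
        return new Order { Quantity = this.Quantity };
    }
}

public static class FakeServer
{
    // Mimics a remote method that takes an unanchored object by reference.
    public static void DoubleQuantity(ref Order order)
    {
        Order serverCopy = order.Copy();  // a copy travels to the server
        serverCopy.Quantity *= 2;         // the server changes its copy
        order = serverCopy.Copy();        // a copy travels back and replaces
                                          // the caller's reference
    }
}

public static class Demo
{
    public static void Main()
    {
        Order original = new Order { Quantity = 5 };
        Order alias = original;           // a second reference to the same object

        FakeServer.DoubleQuantity(ref original);

        Console.WriteLine(original.Quantity);                       // 10
        Console.WriteLine(alias.Quantity);                          // 5
        Console.WriteLine(object.ReferenceEquals(original, alias)); // False
    }
}
```

After the call, original points to the new version while alias still points to the old one, which is exactly the situation the note above warns about.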

We can choose to pass an unanchored object by value, in which case it's passed one way, from the caller to the method. Or we can choose to pass an unanchored object by reference, in which case it's passed two ways, from the caller to the method and from the method back to the caller. If we want to get back any changes the method makes to the object, we'll use "by reference." If we don't care about or don't want any changes made to the object by the method, then we'll use "by value."

Note that passing an unanchored object by reference has performance implications: it requires that the object be passed back across the network to the calling machine, so it's slower than passing by value.

Complete Encapsulation

Hopefully, at this point, your imagination is engaged by the potential of distributed objects. The flexibility of being able to choose between local, anchored, and unanchored objects is very powerful, and opens up new architectural approaches that were difficult to implement using older technologies such as COM.

We've already discussed the idea of sharing the business-logic tier across machines, and it's probably obvious that the concept of unanchored objects is exactly what we need to implement such a shared tier. But what does this all mean for the design of our tiers? In particular, given a set of unanchored or distributed objects in the business tier, what's the impact on the UI and data-access tiers with which the objects interact?

Impact on the User-Interface Tier

What it means for the UI tier is simply that the business objects will contain all the business logic. The UI developer can code each form or page using the business objects, thereby relying on them to perform any validation or manipulation of the data. This means that the UI code can focus entirely on displaying the data, interacting with the user, and providing a rich, interactive experience.

More importantly, because the business objects are distributed (unanchored), they'll end up running in the same process as the UI code. Any property or method calls from the UI code to the business object will occur locally without network latency, marshaling, or any other performance overhead.
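To illustrate the division of labor, here is a small sketch in which the business object owns its rules and the UI code only moves text in and out. The Product and ProductForm names, and the specific rules (a required name and a non-negative price), are hypothetical examples rather than anything from the framework we'll build later.

```csharp
using System;
using System.Globalization;

[Serializable]
public class Product
{
    private string _name = string.Empty;
    private decimal _price;

    public string Name
    {
        get { return _name; }
        set { _name = (value ?? string.Empty).Trim(); }  // manipulation rule
    }

    public decimal Price
    {
        get { return _price; }
        set
        {
            // Validation rule lives in the object, not in the form.
            if (value < 0)
                throw new ArgumentOutOfRangeException("value", "Price cannot be negative.");
            _price = value;
        }
    }

    public bool IsValid
    {
        get { return _name.Length > 0; }
    }
}

public static class ProductForm
{
    // Stand-in for a button-click handler: no business rules appear here.
    public static string Save(Product product, string nameText, string priceText)
    {
        product.Name = nameText;
        product.Price = decimal.Parse(priceText, CultureInfo.InvariantCulture);
        return product.IsValid ? "Saved" : "Name is required";
    }
}

public static class Demo
{
    public static void Main()
    {
        Product product = new Product();
        Console.WriteLine(ProductForm.Save(product, "  ", "9.99"));      // Name is required
        Console.WriteLine(ProductForm.Save(product, "Widget", "9.99"));  // Saved
    }
}
```

Because the Product object runs in the same process as the form code, each property call here is an ordinary local call.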

Impact on the Data-Access Tier

The impact on the data-access tier is more profound. A traditional data-access tier consists of a set of methods or services that interact with the database, and with the objects that encapsulate data. The data-access code itself is typically outside the objects, rather than being encapsulated within the objects. If we now encapsulate the data-access logic inside each object, what is left in the data-access tier itself?

The answer is twofold. First, for reasons we'll examine in a moment, we need an anchored object on the server. Second, we'll eventually want to utilize Enterprise Services (COM+), and we need code in the data-access tier to manage interaction with Enterprise Services.

| Note | We'll discuss Enterprise Services in more detail in Chapters 2 and 3, and we'll use them throughout the rest of the book. |

Our business objects, of course, will be unanchored so that they can move freely between the machine running the UI code and the machine running the data-access code. However, if the data-access code is inside the business object, we'll need to figure out some way to get the object to move to the application server any time we need to interact with the database.

This is where the data-access tier comes into play. If we implement it using an anchored object, it will be guaranteed to run on our application server. Then we can call methods on the data-access tier, passing our business object as a parameter. Because the business object is unanchored, it will physically move to the machine where the data-access tier is running. This gives us a way to get the business object to the right machine before the object attempts to interact with the database.

| Note | We'll discuss this in much more detail in Chapter 2, when we lay out the specific design of the distributed object framework, and in Chapter 5, as we build the data access tier itself. |
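Ahead of that detailed design, the basic shape of the idea can be sketched as follows. This is only an illustration under stated assumptions: Invoice and DataAccessServer are hypothetical names, the Fetch method fills in placeholder values instead of querying a database, and no remoting channel is configured, so run as-is everything executes in one process.

```csharp
using System;

// Unanchored business object that encapsulates its own data-access logic.
[Serializable]
public class Invoice
{
    public int Id;
    public decimal Total;
    public string LoadedOn = "(not loaded)";

    // A real implementation would query the database here.
    public void Fetch(int id)
    {
        Id = id;
        Total = 100m;                        // placeholder value
        LoadedOn = Environment.MachineName;  // records where the code ran
    }
}

// Anchored object: guaranteed to run on the machine where it was created.
public class DataAccessServer : MarshalByRefObject
{
    // The business object arrives as a parameter, runs its own data-access
    // code on this machine, and is returned to the caller as the result.
    public Invoice Fetch(Invoice invoice, int id)
    {
        invoice.Fetch(id);
        return invoice;
    }
}

public static class Demo
{
    public static void Main()
    {
        DataAccessServer server = new DataAccessServer();
        Invoice invoice = server.Fetch(new Invoice(), 42);
        Console.WriteLine(invoice.Id);
        Console.WriteLine(invoice.LoadedOn);
    }
}
```

Over a remoting channel, LoadedOn would report the application server's name, because the copy of the Invoice would execute its Fetch method there before being sent back.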
