4. No-Reference and Reduced-Reference Quality Assessment

The quality metrics presented so far assume that the reference video is available for comparison with the distorted video. This requirement is a serious impediment to the practical use of video quality assessment metrics. Reference videos require tremendous amounts of storage space and, in many applications, are simply impossible to provide.

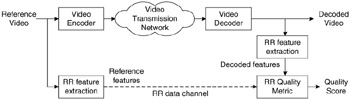

Reduced-reference (RR) quality assessment does not assume that the complete reference signal is available, only that partial reference information is available through an ancillary data channel. Figure 41.12 shows how an RR quality assessment metric may be deployed. The server transmits side-information with the video to serve as a reference for an RR quality assessment metric further down the network. The bandwidth available to the RR channel depends upon the application constraints. The design of an RR quality assessment metric must therefore determine which information to transmit through the RR channel so as to minimize prediction errors. Needless to say, the features extracted from the reference video at the server must correspond to those expected by the intended RR quality assessment algorithm.

Figure 41.12: Deployment of a reduced-reference video quality assessment metric. Features extracted from the reference video are sent to the receiver to aid in quality measurements. The video transmission network may be lossy but the RR channel is assumed to be lossless.

Perhaps the earliest RR quality assessment metric was proposed by Webster et al. [71] and is based on extracting localized spatial and temporal activity features. A spatial information (SI) feature measures the standard deviation of edge-enhanced frames, assuming that compression will modify the edge statistics in the frames. A temporal information (TI) feature is also extracted, which is the standard deviation of difference frames. Three comparison metrics are derived from the SI and TI features of the reference and the distorted videos. The features for the reference video are transmitted over the RR channel. The metrics are trained on data obtained from human subjects. The size of the RR data depends upon the size of the window over which the SI and TI features are calculated. The work was extended in [72], where different edge enhancement filters are used, and two activity features are extracted from 3D windows. One feature measures the strength of the edges in the horizontal and vertical directions, while the second feature measures the strength of the edges in all other directions. An impairment metric is defined using these features. Extensive subjective testing is also reported.
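The SI and TI features described above can be illustrated with a minimal sketch. It is not the implementation of [71]: the Sobel filter is assumed as the edge-enhancement step, the features are computed over whole frames rather than localized windows, and the function names are hypothetical.

```python
import numpy as np

def sobel_magnitude(frame):
    """Edge-enhance a 2-D grayscale frame with 3x3 Sobel filters (assumed filter).

    Only the valid interior region is returned, so the output is
    two pixels smaller than the input in each dimension.
    """
    f = frame.astype(np.float64)
    # Horizontal gradient: right column minus left column, weighted 1-2-1.
    gx = (f[:-2, 2:] + 2 * f[1:-1, 2:] + f[2:, 2:]) \
       - (f[:-2, :-2] + 2 * f[1:-1, :-2] + f[2:, :-2])
    # Vertical gradient: bottom row minus top row, weighted 1-2-1.
    gy = (f[2:, :-2] + 2 * f[2:, 1:-1] + f[2:, 2:]) \
       - (f[:-2, :-2] + 2 * f[:-2, 1:-1] + f[:-2, 2:])
    return np.hypot(gx, gy)

def spatial_information(frame):
    """SI: standard deviation of the edge-enhanced frame."""
    return float(np.std(sobel_magnitude(frame)))

def temporal_information(prev_frame, frame):
    """TI: standard deviation of the difference between consecutive frames."""
    diff = frame.astype(np.float64) - prev_frame.astype(np.float64)
    return float(np.std(diff))

def si_ti_features(frames):
    """Per-frame SI values and per-frame-pair TI values for a frame sequence."""
    si = np.array([spatial_information(f) for f in frames])
    ti = np.array([temporal_information(a, b)
                   for a, b in zip(frames[:-1], frames[1:])])
    return si, ti
```

In an RR deployment, the server would compute `si_ti_features` on the reference video and send the resulting arrays over the RR channel; the receiver would compute the same features on the distorted video and compare the two sets. The comparison metrics themselves are trained on subjective data in [71] and are not reproduced here.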

Another approach that uses side-information for quality assessment is described in [73], in which marker bits composed of random bit sequences are hidden inside frames. The markers are also transmitted over the ancillary data channel. The error rate in the detection of the marker bits is taken as an indicator of the loss of quality. In [74], a watermarking-based approach is proposed, in which a watermark image is embedded in the video, and it is suggested that the degradation in the quality of the watermark can be used to predict the degradation in the quality of the video. Strictly speaking, neither of these methods is an RR quality metric, since neither extracts any features from the reference video. Instead, these methods gauge the distortion processes that occur during compression and in the communication channel in order to estimate the perceptual degradation incurred during transmission.
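The marker-bit idea can be sketched as follows. The embedding scheme of [73] is not described here, so this example assumes, purely for illustration, that each marker bit replaces the least significant bit of a pixel at a known position; the function names and the choice of positions are hypothetical.

```python
import numpy as np

def embed_marker(frame, bits, positions):
    """Hide marker bits in the least significant bit of selected pixels.

    LSB embedding is an assumption made for this sketch, not the
    scheme used in the cited work.
    """
    marked = frame.copy().ravel()
    marked[positions] = (marked[positions] & 0xFE) | bits
    return marked.reshape(frame.shape)

def marker_error_rate(received, bits, positions):
    """Fraction of marker bits that are detected incorrectly at the receiver."""
    detected = received.ravel()[positions] & 1
    return float(np.mean(detected != bits))

# Usage: the server embeds the marker; the receiver obtains `bits` and
# `positions` over the ancillary channel and measures the error rate.
rng = np.random.default_rng(0)
frame = rng.integers(0, 256, size=(32, 32), dtype=np.uint8)
positions = rng.choice(frame.size, size=64, replace=False)
bits = rng.integers(0, 2, size=64, dtype=np.uint8)
marked = embed_marker(frame, bits, positions)
```

A higher error rate would be read as a sign of heavier distortion, although how the error rate maps to perceived quality is not something this sketch attempts to model.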

Given the limited success that FR quality assessment has achieved, it should come as no surprise that designing objective no-reference (NR) quality measurement algorithms is very difficult indeed. This is mainly due to the limited understanding of the HVS and the corresponding cognitive aspects of the brain. Only a few methods have been proposed in the literature [75–80] for objective NR quality assessment, yet this topic has attracted a great deal of attention recently. For example, the Video Quality Experts Group (VQEG) [81] considers the standardization of NR and RR video quality assessment methods to be one of its future working directions, where the major source of distortion under consideration is block DCT-based video compression.

The problem of NR quality assessment (sometimes called blind quality assessment) is made even more complex due to the fact that many unquantifiable factors play a role in human assessment of quality, such as aesthetics, cognitive relevance, learning, visual context, etc., when the reference signal is not available for MOS evaluation. These factors introduce variability among human observers based on each individual's subjective impressions. However, we can work with the following philosophy for NR quality assessment: all images and videos are perfect unless distorted during acquisition, processing or reproduction. Hence, the task of blind quality measurement simplifies into blindly measuring the distortion that has possibly been introduced during the stages of acquisition, processing or reproduction. The reference for measuring this distortion would be the statistics of "perfect" natural images and videos, measured with respect to a model that best suits a given distortion type or application. This philosophy effectively decouples the unquantifiable aspects of image quality mentioned above from the task of objective quality assessment. All "perfect images" are treated equally, disregarding the amount of cognitive information in the image, or its aesthetic value [82,83].

The NR metrics cited above implicitly adhere to this philosophy of quantifying quality through blind distortion measurement. Assumptions regarding the statistics of "perfect natural images" are made such that the distortion is well separated from the "expected" signals. For example, natural images do not contain blocking artifacts, so any periodic edge discontinuity in the horizontal and vertical directions with a period of 8 pixels is probably a distortion introduced by block DCT-based compression techniques. Some aspects of the HVS, such as texture and luminance masking, are also modelled to improve prediction. Thus NR quality assessment metrics need to model not only the HVS but also natural scene statistics.
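The observation about 8-pixel periodic discontinuities can be turned into a very simple blind blockiness indicator. The sketch below is not one of the cited NR metrics: it assumes that the block grid is aligned with the top-left corner of the frame, it ignores HVS masking entirely, and the function name and the ratio-based score are illustrative choices.

```python
import numpy as np

def blockiness(frame, block=8, eps=1e-8):
    """Ratio of pixel differences across block boundaries to those elsewhere.

    Values near 1 suggest no periodic discontinuity; values well above 1
    suggest blocking artifacts with the given period. The block grid is
    assumed to start at the frame origin.
    """
    f = frame.astype(np.float64)

    def boundary_ratio(diffs):
        # diffs[..., j] is the absolute difference between samples j and j + 1
        # along the last axis; the pair straddles a block boundary when
        # (j + 1) is a multiple of the block size.
        idx = np.arange(diffs.shape[-1])
        at_boundary = (idx + 1) % block == 0
        across = diffs[..., at_boundary].mean()
        within = diffs[..., ~at_boundary].mean()
        return across / (within + eps)

    horizontal = np.abs(np.diff(f, axis=1))    # differences between columns
    vertical = np.abs(np.diff(f, axis=0)).T    # differences between rows
    return 0.5 * (boundary_ratio(horizontal) + boundary_ratio(vertical))

# Usage: a smooth ramp has no periodic discontinuity, whereas a
# piecewise-constant image made of 8x8 tiles is strongly blocky.
ramp = np.add.outer(np.arange(32), np.arange(32))
tiles = np.kron(np.arange(16).reshape(4, 4) * 10, np.ones((8, 8)))
```

The frame must be larger than one block in each dimension so that at least one boundary exists. A practical metric would also weight the discontinuities by local texture and luminance to account for masking.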

Certain types of distortion, such as blocking artifacts, are quite amenable to blind measurement. However, in wavelet-based image coders, such as the JPEG2000 standard, the wavelet transform is often applied to the entire image (instead of image blocks), and the decoded images do not suffer from blocking artifacts. NR metrics based on blocking artifacts would therefore fail to give meaningful predictions for such images. The upcoming H.26L standard incorporates a powerful de-blocking filter. Similarly, post-processing may reduce blocking artifacts at the cost of introducing blur. In [82], a statistical model for natural images in the wavelet domain is used for NR quality assessment of JPEG2000 images. Any NR metric would therefore need to be specifically designed for the target distortion system. More sophisticated models of natural images may improve the performance of NR metrics and make them more robust to various distortion types.
