4. Environment Description

The description of a usage environment is a key component of Universal Multimedia Access. This section focuses on standardization activities in this area. First, an overview of the MPEG-21 Multimedia Framework is provided. Then, the specific part of this standard that targets the description of usage environments, Part 7: Digital Item Adaptation, is introduced. This is followed by a more detailed overview of the specific usage environment descriptions under development in this part of the standard. We conclude this section with references to related standardization activities and an overview of the issues in capability understanding.

4.1 MPEG-21 Multimedia Framework

The Moving Picture Experts Group (MPEG) is a working group of ISO/IEC in charge of the development of standards for the coded representation of digital audio and video. Established in 1988, the group has produced four important standards: MPEG-1, the standard on which such products as Video CD and MP3 are based; MPEG-2, the standard on which such products as digital television set-top boxes and DVD are based; MPEG-4, the standard for multimedia for the fixed and mobile web; and MPEG-7, the standard for description and search of audio and visual content. Work on the new standard, MPEG-21, formally referred to as ISO/IEC 21000 "Multimedia Framework," was started in June 2000. So far a Technical Report has been produced [62] and several normative parts of the standard have been developed. An overview of the parts that have been developed or are under development can be found in [63].

Today, many elements exist to build an infrastructure for the delivery and consumption of multimedia content. There is, however, no 'big picture' to describe how these elements, either in existence or under development, relate to each other. The aim for MPEG-21 is to describe how these various elements fit together. Where gaps exist, MPEG-21 will recommend which new standards are required. MPEG will then develop new standards as appropriate, while other bodies may develop other relevant standards. These specifications will be integrated into the multimedia framework through collaboration between MPEG and these bodies.

The result is an open framework for multimedia delivery and consumption, with both the content creator and the content consumer as focal points. This open framework provides content creators and service providers with equal opportunities in the MPEG-21 enabled open market. It also benefits content consumers by giving them access to a large variety of content in an interoperable manner.

The vision for MPEG-21 is to define a multimedia framework to enable transparent and augmented use of multimedia resources across a wide range of networks and devices used by different communities. MPEG-21 introduces the concept of a Digital Item, which is an abstraction for multimedia content, including the various types of related data. For example, a musical album may consist of a collection of songs, provided in several encoding formats to suit the capabilities (e.g., bitrate, CPU, etc.) of the device on which they will be played. Furthermore, the album may provide the lyrics, some bibliographical information about the musicians and composers, a picture of the album, information about the rights associated with the songs and pointers to a web site where other related material can be purchased. This whole aggregation of content is considered by MPEG-21 to be a Digital Item, where Descriptors (e.g., the lyrics) are associated with the Resources, i.e., the songs themselves.

Conceptually, a Digital Item is defined as a structured digital object with a standard representation, identification and description. This entity is also the fundamental unit of distribution and transaction within this framework.

Seven key elements within the MPEG-21 framework have been defined. One of these elements is Terminals and Networks. Details on the other elements in this framework can be found in [62,63]. The goal of the MPEG-21 Terminals and Networks element is to achieve interoperable transparent access to advanced multimedia content by shielding users from network and terminal installation, management and implementation issues. This will enable the provision of network and terminal resources on demand to form user communities where multimedia content can be created and shared. Furthermore, transactions should occur with an agreed/contracted quality, while maintaining reliability and flexibility, and should allow multimedia applications to connect diverse sets of Users, such that the quality of the user experience will be guaranteed.

4.2 Digital Item Adaptation

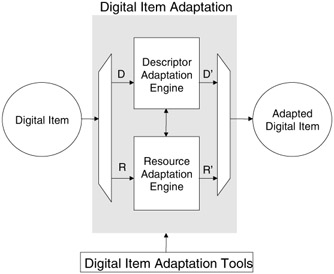

Universal Multimedia Access is concerned with the access to any multimedia content from any type of terminal or network and thus it is closely related to the target mentioned above of "achieving interoperable transparent access to (distributed) advanced multimedia content." Toward this goal, and in the context of MPEG-21, we target the adaptation of Digital Items. This concept is illustrated in Figure 36.10. As shown in this conceptual architecture, a Digital Item is subject to a resource adaptation engine, as well as a descriptor adaptation engine, which together produce the modified Digital Item.

Figure 36.10: Concept of Digital Item Adaptation.

The adaptation engines themselves are non-normative tools of Digital Item Adaptation. However, tools that provide support for Digital Item Adaptation in terms of resource adaptation, descriptor adaptation and/or Quality of Service management are within the scope of the Requirements [64].

With this goal in mind, it is essential to have available not only the description of the content, but also a description of its format and of the usage environment. In this way, content adaptation may be performed to provide the user with the best experience of the requested content under the available conditions. The dimensions of the usage environment are discussed in more detail below, but they essentially include the description of terminal and network resources, as well as user preferences and characteristics of the natural environment. While the content description problem has been addressed by MPEG-7, the description of content format and usage environments has not been addressed and is now the target of Part 7 of the MPEG-21 standard, Digital Item Adaptation.

It should be noted that there are other tools besides the usage environment description that are being specified by this part of the standard; however they are not covered in this section. Please refer to [65] for complete information on the tools specified by Part 7, Digital Item Adaptation.

4.3 Usage Environment Descriptions

Usage environment descriptions include the description of terminal and network resources, as well as user preferences and characteristics of the natural environment. Each of these dimensions, according to the latest Working Draft (WD) v2.0 released in July 2002 [65], is elaborated on further below. This part of the standard will be finalized in July 2003, so changes to the information provided below are expected.

4.3.1 User Characteristics

The specification of a User's characteristics is currently divided into five subcategories, including User Type, Content Preferences, Presentation Preferences, Accessibility Characteristics and Mobility Characteristics.

The first two subcategories, User Type and Content Preferences, have adopted Description Schemes (DS's) from the MPEG-7 specification as starting points [66]. Specifically, the Agent DS specifies the User type, and the UserPreferences DS and the UsageHistory DS specify the content preferences. The Agent DS describes general characteristics of a User such as name and contact information. A User can be a person, a group of persons or an organization. The UserPreferences descriptor is a container of various descriptors that directly describe the preferences of an End User. Specifically, these include descriptors of preferences related to the:

- creation of Digital Items (e.g., created when, where, by whom)
- classification of Digital Items (e.g., form, genre, languages)
- dissemination of Digital Items (e.g., format, location, disseminator)
- type and content of summaries of Digital Items (e.g., duration of an audiovisual summary)

The UsageHistory descriptor describes the history of actions on Digital Items by an End User (e.g., recording a video program, playing back a music piece); as such, it describes the preferences of an End User indirectly.

The next subcategory, Presentation Preferences, defines a set of user preferences related to the means by which audio-visual information is presented or rendered for the user. For audio, descriptions for preferred audio power, equaliser settings, and audible frequencies and levels are specified. For visual information, display preferences, such as the preferred color temperature, brightness, saturation and contrast, are specified.

Accessibility Characteristics provide descriptions that would enable one to adapt content according to certain auditory or visual impairments of the User. For audio, an audiogram is specified for the left and right ear, which specifies the hearing thresholds for a person at various frequencies in the respective ears. For visual related impairments, colour vision deficiencies are specified, i.e., the type and degree of the deficiency. For example, given that a User has a severe green-deficiency, an image or chart containing green colours or shades may be adapted accordingly so that the User can distinguish certain markings. Other types of visual impairments are being explored, such as the lack of visual acuity.

Mobility Characteristics aim to provide a concise description of the movement of a User over time. In particular, the directivity and location update intervals are specified. Directivity is defined as the amount of angular change in the direction of a User's movement compared to the previous measurement. The location update interval defines the time interval between two consecutive location updates of a particular User. Updates to the location are received when the User crosses the boundary of a pre-determined area (e.g., circular, elliptic, etc.) centered at the coordinate of the User's last location update. These descriptions can be used to classify Users, e.g., as pedestrians, highway vehicles, etc., in order to provide adaptive location-aware services.
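As a rough illustration of the directivity measure, the following sketch computes the angular change between two consecutive movement segments from planar location updates. The function names, the flat (x, y) coordinates and the classification thresholds are assumptions made for illustration only; they are not taken from the Working Draft.

```python
import math

def heading(p, q):
    """Direction of travel from point p to point q, in degrees in [0, 360)."""
    return math.degrees(math.atan2(q[1] - p[1], q[0] - p[0])) % 360.0

def directivity(p0, p1, p2):
    """Absolute angular change (0 to 180 degrees) between the move
    p0 -> p1 and the following move p1 -> p2."""
    diff = abs(heading(p1, p2) - heading(p0, p1)) % 360.0
    return min(diff, 360.0 - diff)

def classify(mean_directivity_deg, mean_update_interval_s):
    """Toy classifier. The thresholds are hypothetical: a user who crosses
    the update boundary often and rarely turns is treated as a vehicle."""
    if mean_update_interval_s < 10 and mean_directivity_deg < 15:
        return "highway vehicle"
    return "pedestrian"

# Three consecutive location updates describing a right-angle turn.
turn = directivity((0, 0), (1, 0), (1, 1))
```

A short update interval indicates fast movement (the boundary around the last update is crossed quickly), while a low directivity indicates movement along a nearly straight path.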

4.3.2 Terminal Capabilities

The description of a terminal's capabilities is needed in order to adapt various forms of multimedia for consumption on a particular terminal. There is a wide variety of attributes that specify a terminal's capabilities; however, we limit our discussion here to some of the more significant attributes that have been identified. Included in this list are encoding and decoding capabilities, display and audio output capabilities, as well as power, storage and input-output characteristics.

Encoding and decoding capabilities specify the format a particular terminal is capable of encoding or decoding, e.g., MPEG-2 MP@ML video. Given the variety of different content representation formats that are available today, it is necessary to be aware of the formats that a terminal is capable of.

Regarding output capabilities of the terminal, display attributes, such as the size or resolution of a display, its refresh rate, as well as if it is colour capable or not, are specified. For audio, the frequency range, power output, signal-to-noise ratio and number of channels of the speakers are specified.

Power characteristics describe the power consumption, battery size and battery time remaining of a particular device. Storage characteristics are defined by the transfer rate and a Boolean variable that indicates whether the device can be written to or not. Also, the size of the storage is specified. Input-Output characteristics describe bus width and transfer speeds, as well as the minimum and maximum number of devices that can be supported.
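To make the role of such a description concrete, the sketch below models a small subset of the terminal attributes discussed above and uses them to filter the available variants of a resource. The class and field names are hypothetical and do not reproduce the description syntax of the standard.

```python
from dataclasses import dataclass

@dataclass
class TerminalCapabilities:
    decoding_formats: set      # formats the terminal can decode
    display_width: int         # horizontal resolution in pixels
    display_height: int        # vertical resolution in pixels
    colour_capable: bool = True

@dataclass
class Variant:
    fmt: str
    width: int
    height: int

def playable_variants(terminal, variants):
    """Keep only variants the terminal can decode and display in full."""
    return [
        v for v in variants
        if v.fmt in terminal.decoding_formats
        and v.width <= terminal.display_width
        and v.height <= terminal.display_height
    ]

# Hypothetical handheld terminal and three variants of the same video.
handheld = TerminalCapabilities({"MPEG-4"}, 352, 288)
offers = [
    Variant("MPEG-2", 720, 576),
    Variant("MPEG-4", 352, 288),
    Variant("MPEG-4", 176, 144),
]
usable = playable_variants(handheld, offers)
```

An adaptation engine could then choose among the remaining variants, or transcode when the list is empty.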

4.3.3 Network Characteristics

Two main categories are considered in the description of network characteristics, Network Capabilities and Network Conditions. Network Capabilities define static attributes of a particular network link, while Network Conditions describe more dynamic behaviour. This characterisation enables multimedia adaptation for improved transmission efficiency.

Network Capabilities include the maximum capacity of a network and the minimum guaranteed bandwidth that a particular network can provide. Also specified are attributes that indicate if the network can provide in-sequence packet delivery and how the network deals with erroneous packets, e.g., does it forward them on to the next node or discard them.

Network Conditions specify error, delay and utilisation. The error is specified in terms of packet loss rate and bit error rate. Several types of delay are considered including one-way and two-way packet delay, as well as delay variation. Utilisation includes attributes to describe the instantaneous, maximum and average utilisation of a particular link.
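One way such descriptions might drive adaptation is sketched below: the static capabilities and the dynamic utilisation are combined into a bandwidth budget, which is then used to select a bitrate. The field names and the budgeting rule are assumptions for illustration; the standard specifies the descriptions, not the decision logic.

```python
from dataclasses import dataclass

@dataclass
class NetworkCapabilities:
    max_capacity_bps: int      # static: maximum capacity of the link
    min_guaranteed_bps: int    # static: minimum guaranteed bandwidth

@dataclass
class NetworkConditions:
    packet_loss_rate: float    # dynamic: fraction of packets lost
    average_utilisation: float # dynamic: fraction of capacity in use (0..1)

def available_bandwidth(cap, cond):
    """Estimate usable bandwidth: unused capacity, but never less than
    the guaranteed minimum (a simplifying assumption)."""
    free = cap.max_capacity_bps * (1.0 - cond.average_utilisation)
    return max(cap.min_guaranteed_bps, int(free))

def pick_bitrate(variant_bitrates_bps, cap, cond):
    """Highest bitrate that fits the budget, else the lowest on offer."""
    budget = available_bandwidth(cap, cond)
    fitting = [b for b in variant_bitrates_bps if b <= budget]
    return max(fitting) if fitting else min(variant_bitrates_bps)

link = NetworkCapabilities(max_capacity_bps=1_000_000, min_guaranteed_bps=100_000)
busy = NetworkConditions(packet_loss_rate=0.01, average_utilisation=0.75)
chosen = pick_bitrate([64_000, 256_000, 128_000], link, busy)
```

A fuller sketch would also use the error and delay attributes, for example to add error resilience when the packet loss rate is high.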

4.3.4 Natural Environment Characteristics

The description of the natural environment is currently defined by Location, Time and Audio-Visual Environment Characteristics.

In MPEG-21, Location refers to the location of usage of a Digital Item, while Time refers to the time of usage of a Digital Item. Both are specified by MPEG-7 DS's, the Place DS for Location and the Time DS for Time [66]. The Place DS describes existing, historical and fictional places and can be used to describe precise geographical location in terms of latitude, longitude and altitude, as well as postal addresses or internal coordinates of the place (e.g., an apartment or room number, the conference room, etc.). The Time DS describes dates, time points relative to a time base and the duration of time.

Audio-Visual Environment Characteristics target the description of audio-visual attributes that can be measured from the natural environment and that affect the way content is delivered to and/or consumed by a User in this environment. For audio, the description of the noise levels and a noise frequency spectrum is specified. With respect to the visual environment, illumination characteristics that may affect the perceived display of visual information are specified.

4.4 Related Standardization Activities

The previous sections have focused on the standardization efforts of MPEG in the area of environment description. However, it was the Internet Engineering Task Force (IETF) that initiated some of the earliest work in this area. The result of their work provided many of the ideas for future work. Besides MPEG, the World Wide Web Consortium (W3C) and Wireless Application Protocol (WAP) Forum have also been active in this area. The past and current activity of each group is described further below.

4.4.1 CONNEG - Content Negotiation

The first work on content negotiation was initiated by the IETF and resulted in the Transparent Content Negotiation (TCN) framework [67]. This first work supplied many of the ideas for future work within the IETF and by other bodies. The CONNEG working group was later established by the IETF to develop a protocol-independent content negotiation framework (referred to as CONNEG), which was a direct successor of the TCN framework. In CONNEG, the entire process of content negotiation is specified, keeping the independence between the protocol performing the negotiation and the characteristics concerning the server and the User. The CONNEG framework addresses three elements:

- Description of the capabilities of the server and contents
- Description of the capabilities of the user terminal
- Negotiation process by which capabilities are exchanged

Within CONNEG, expressing the capabilities (Media Features) of server and user terminal is covered by negotiation metadata. Media Feature names and data types are implementation-specific. The protocol for exchanging descriptions is covered by the abstract negotiation framework and is necessary for a specific application protocol. Protocol independence is addressed by separating the negotiation process and metadata from concrete representations and protocol bindings. The CONNEG framework specifies the following three elements:

- Abstract negotiation process: This consists of a series of information exchanges (negotiations) between the sender and the receiver that continue until either party determines a specific version of the content to be transmitted by the server to the user terminal.
- Abstract negotiation metadata: Description tools to describe the contents/server and the terminal capabilities/characteristics.
- Negotiation metadata representation: A textual representation for media feature names and values, for media feature set descriptions and for expressions of the media feature combining algebra, which provides a way to rank feature sets based upon sender and receiver preferences.
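The idea of matching and ranking feature sets can be sketched as follows. This is not the CONNEG textual syntax or its combining algebra: feature sets are modelled here as plain dictionaries, receiver constraints as predicates, and the ranking as a single sender-side quality value `q`. The feature names and values are illustrative assumptions.

```python
def matches(features, constraints):
    """True if every constrained feature is present in the variant and
    its value satisfies the receiver's predicate."""
    return all(
        name in features and allowed(features[name])
        for name, allowed in constraints.items()
    )

def select_variant(variants, receiver_constraints):
    """Among the variants the receiver can handle, return the one the
    sender rates highest, or None if nothing matches."""
    candidates = [
        v for v in variants if matches(v["features"], receiver_constraints)
    ]
    return max(candidates, key=lambda v: v["q"], default=None)

# Sender side: three versions of an image with a quality rating each.
variants = [
    {"name": "large-colour", "q": 1.0, "features": {"pix-x": 1024, "color": "full"}},
    {"name": "small-colour", "q": 0.7, "features": {"pix-x": 320, "color": "full"}},
    {"name": "small-grey", "q": 0.4, "features": {"pix-x": 320, "color": "grey"}},
]

# Receiver side: a narrow display that handles colour or greyscale.
receiver = {
    "pix-x": lambda width: width <= 640,
    "color": lambda mode: mode in {"grey", "full"},
}

best = select_variant(variants, receiver)
```

In this example the large version is excluded by the width constraint, and the higher-rated of the two remaining versions is selected.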

A format for a vocabulary of individual media features and procedures for feature registration are presented in [68]. It should be noted that the vocabulary is mainly limited to some basic features of text and images.

4.4.2 CC/PP - Composite Capabilities / Preference Profiles

The W3C has established a CC/PP working group with the mission to develop a framework for the management of device profile information. Essentially, CC/PP is a data structure that lets you send device capabilities and other situational parameters from a client to a server. A CC/PP profile is a description of device capabilities and user preferences that can be used to guide the adaptation of content presented to that device.

CC/PP is based on RDF, the Resource Description Framework, which was designed by the W3C as a general-purpose metadata description language. The foundation of RDF is a directed labelled graph used to represent entities, concepts and relationships between them. There exists a specification that describes how to encode RDF using XML [69], and another that defines an RDF schema description language using RDF [70].

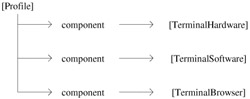

The structure in which terminal characteristics are organized is a tree-like data model. Top-level branches are the components described in the profile. Each major component may have a collection of attributes or preferences. Possible components are the hardware platform, the software platform and the application that retrieves and presents the information to the user, e.g., a browser. A simple graphical representation of the CC/PP tree based on these three components is illustrated in Figure 36.11.

Figure 36.11: Graphical representation of CC/PP profile components.

The description of each component is a sub-tree whose branches are the capabilities or preferences associated with that component. RDF allows modelling a large range of data structures, including arbitrary graphs.

A CC/PP profile contains a number of attribute names and associated values that are used by a server to determine the most appropriate form of a resource to deliver to a client. It is structured to allow a client and/or proxy to describe their capabilities by reference to a standard profile, accessible to an origin server or other sender of resource data, and a smaller set of features that are in addition to or different than the standard profile. A set of CC/PP attribute names, permissible values and associated meanings constitute a CC/PP vocabulary. More details on the CC/PP structure and vocabulary can be found in [71].
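The combination of a referenced standard profile with a smaller set of client-specific differences can be sketched as a per-component merge. The sketch uses nested dictionaries rather than RDF, and the attribute names and values are hypothetical; it only illustrates the resolution idea, not the CC/PP processing rules.

```python
def resolve_profile(defaults, overrides):
    """Overlay client-supplied attributes on a referenced default profile.

    Both arguments map component name -> {attribute name: value}.
    A client-supplied value replaces the default for the same attribute.
    """
    resolved = {}
    for component in defaults.keys() | overrides.keys():
        merged = dict(defaults.get(component, {}))
        merged.update(overrides.get(component, {}))
        resolved[component] = merged
    return resolved

# Standard profile published for a device model (hypothetical values).
standard_profile = {
    "HardwarePlatform": {"ScreenSize": "320x200", "ColorCapable": "No"},
    "SoftwarePlatform": {"OSName": "ExampleOS"},
}

# Differences reported by one particular client.
client_differences = {
    "HardwarePlatform": {"ColorCapable": "Yes"},
    "BrowserUA": {"HtmlVersion": "4.0"},
}

profile = resolve_profile(standard_profile, client_differences)
```

Because the client transmits only a reference and its differences, the amount of profile data sent with each request stays small.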

4.4.3 UAProf - User Agent Profile

The WAP Forum has defined an architecture optimized for connecting terminals working with very low bandwidths and small message sizes. The WAP Forum architecture is based on a proxy server, which acts as a gateway to the optimised protocol stack for the mobile environment. The mobile terminal connects to this proxy. On the wireless side of the communication, it uses an optimised protocol to establish service sessions via the Wireless Session Protocol (WSP), and an optimised transmission protocol, Wireless Transaction Protocol (WTP). On the fixed side of the connection, HTTP is used, and the content is marked up using the Wireless Markup Language (WML), which is defined by the WAP Forum.

The User Agent Profile (UAProf) defined by the WAP Forum is intended to supply information that shall be used to format the content according to the terminal. CC/PP is designed to be broadly compatible with the UAProf specification [72]. That is, any valid UAProf profile is intended to be a valid CC/PP profile.

The following areas are addressed by the UAProf specification:

- HardwarePlatform: A collection of properties that adequately describe the hardware characteristics of the terminal device. This includes the type of device, model number, display size, input and output methods, etc.
- SoftwarePlatform: A collection of attributes associated with the operating environment of the device. Attributes provide information on the operating system software, video and audio encoders supported by the device and user's preference on language.
- BrowserUA: A set of attributes to describe the HTML browser.
- NetworkCharacteristics: Information about the network-related infrastructure and environment such as bearer information. These attributes can influence the resulting content, due to the variation in capabilities and characteristics of various network infrastructures in terms of bandwidth and device accessibility.
- WapCharacteristics: A set of attributes pertaining to WAP capabilities supported on the device. This includes details on the capabilities and characteristics related to the WML Browser, Wireless Telephony Application (WTA), etc.
- PushCharacteristics: A set of attributes pertaining to push specific capabilities supported by the device. This includes details on supported MIME-types, the maximum size of a push-message shipped to the device, the number of possibly buffered push-messages on the device, etc.

Further details on the above can be found in [72].

4.5 Capability Understanding

For a given multimedia session, it is safe to assume that the sending and the receiving terminals have fixed media handling capabilities. The senders can adapt content in a fixed number of ways and can send content in a fixed number of formats. Similarly, the receivers can handle only a fixed number of content formats. The resources that vary during a session are available bandwidth, QoS, computing resources (CPU and memory), and battery capacity of the receiver. During the session set up, the sending and receiving terminals have to understand each other's capabilities and agree upon a content format for the session.

Content adaptation has two main components: 1) capability understanding and 2) content format negotiation. Capability understanding involves knowing a client's media handling capabilities and the network environment; content format negotiation involves agreeing on a suitable content format based on the receiver's capabilities and content playback environment. From a content delivery point of view, the receiver capability of interest is the ability to handle content playback. The sender and content adaptation engines have little or no use for client device specifications such as CPU speed and available memory.

Capability understanding and content adaptation are performed during session setup and may also continue during the session. Capability understanding typically happens in one of two ways: 1) the receiver submits its capabilities to the sender and the sender determines the suitable content (suitable content request); or 2) the sender proposes a content description that the receiver can reject, until the sender proposes an acceptable content description or the session is disconnected (suitable content negotiation).

In the Suitable Content Request method, receivers submit content requests along with their capability descriptions. A sender has to understand the capabilities and adapt the content to fit them. Because receiver capabilities vary from device to device, a sender has to understand the capabilities of all of its receivers and adapt the content suitably. In the Suitable Content Negotiation method, the receiver requests specific content and the sender responds with a description of the content that it can deliver. The receiver then has to determine whether the content description (including format, bitrate, etc.) proposed by the sender is acceptable. The receiver can then counter with a content description that it can handle, to which the sender responds, and so on.
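The difference between the two methods can be sketched with content descriptions reduced to format names. The function names and the callback-based receiver are assumptions for illustration, and the counter-proposal step of the negotiation method is omitted for brevity.

```python
def suitable_content_request(sender_formats, receiver_formats):
    """Receiver submits its capabilities; the sender picks the first
    format, in its own preference order, that the receiver supports."""
    for fmt in sender_formats:
        if fmt in receiver_formats:
            return fmt
    return None

def suitable_content_negotiation(sender_formats, receiver_accepts):
    """Sender proposes one description per round; the receiver accepts
    or rejects. Returns (agreed format or None, rounds used)."""
    rounds = 0
    for fmt in sender_formats:
        rounds += 1
        if receiver_accepts(fmt):
            return fmt, rounds
    return None, rounds

sender_offers = ["MPEG-2", "MPEG-4", "MPEG-1"]   # sender preference order
receiver_supports = {"MPEG-1", "MPEG-4"}

# Method 1: one exchange, but the sender must understand the capabilities.
requested = suitable_content_request(sender_offers, receiver_supports)

# Method 2: the sender needs no capability knowledge, at the cost of rounds.
negotiated, rounds = suitable_content_negotiation(
    sender_offers, lambda fmt: fmt in receiver_supports
)
```

Both calls agree on the same format here, but the second needs two proposal rounds, which illustrates the trade-off between capability understanding and negotiation overhead.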

The disadvantage of the first method is that a sender has to understand receivers' capabilities. The advantage is that the sender has sufficient knowledge to perform adaptation and avoid negotiation with the receiver. The advantage of the second method is that the sender only has to know what it can deliver and does not have to know receiver capabilities. Receivers describe their capabilities in terms of the content they can handle and let the sender determine whether it can deliver in an acceptable format. When a client requests content (e.g., by clicking on a URL), the receiver does not know what media is part of the requested content. The receiver also has no knowledge of the content adaptation the sender employs and may have to communicate all of its capabilities. This imposes a burden on both the sender and the receiver, which must process unnecessary information.

4.5.1 Retrieving Terminal Capabilities

To adapt content for a particular device, a sender only needs to know the media the receiver can handle and the media formats the server can support for that particular piece of content. When a receiver requests content (e.g., by clicking on a URL), the receiver does not know what media is part of the requested content. The sender has complete knowledge of the content and the possible adaptations. The sender can selectively ask whether the receiver supports certain media types and repurpose the content accordingly. The alternative, in which a client communicates all of its capabilities, is expensive, as the server does not need to know, and may not understand, all of the client's capabilities.

A solution that addresses these issues and is extensible is to use a Terminal Capability Information Base (TCIB). The TCIB is a virtual database of receiver capabilities that is readable by a sender. It would contain parameters such as media decoding capabilities and the software and hardware available in the terminal. Senders can selectively query the capabilities they are interested in using a protocol such as SNMP. [1] Senders can also set session traps to receive messages when an event happens (e.g., the battery level falls below a threshold). The advantage of this approach is that the protocol is simple and scalable and can be extended as server needs and terminal capabilities change. The protocol also allows private object identifiers to be added easily in order to define implementation-specific variables. The strategy implicit in this mechanism is that understanding a client's capabilities to any significant level of detail is accomplished primarily by polling for the appropriate information.

[1] The Simple Network Management Protocol (SNMP) is used to manage network elements on the Internet.
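The selective-query and trap behaviour of a TCIB can be sketched with an in-memory stand-in. The class, its methods and the parameter names are hypothetical, and no real SNMP library or object identifier scheme is used; the sketch only mirrors the get and trap interaction pattern described above.

```python
class TerminalCapabilityInfoBase:
    """In-memory stand-in for a TCIB held by a receiving terminal."""

    def __init__(self, values):
        self._values = dict(values)
        self._traps = []  # (parameter name, predicate, callback)

    def get(self, names):
        """Selective query: return only the requested entries that exist."""
        return {n: self._values[n] for n in names if n in self._values}

    def set_trap(self, name, predicate, callback):
        """Ask to be notified when `name` takes a value matching `predicate`."""
        self._traps.append((name, predicate, callback))

    def update(self, name, value):
        """Terminal-side change; fires any matching traps."""
        self._values[name] = value
        for trap_name, predicate, callback in self._traps:
            if trap_name == name and predicate(value):
                callback(name, value)

tcib = TerminalCapabilityInfoBase({
    "video.decoders": ["MPEG-4"],
    "audio.channels": 2,
    "battery.percent": 80,
})

# The sender polls only what it needs for this piece of content.
answer = tcib.get(["video.decoders", "display.width"])

# The sender asks to be told when the battery runs low.
events = []
tcib.set_trap("battery.percent", lambda v: v < 20,
              lambda name, value: events.append((name, value)))
tcib.update("battery.percent", 50)   # above threshold: no event
tcib.update("battery.percent", 15)   # below threshold: trap fires
```

Unknown parameters are simply absent from the answer, so a sender can probe for capabilities that only some terminals define.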
