1. Introduction

Animations of 3D models are a type of media increasingly used in multimedia presentations, such as 3D worlds streamed in advertisements and in educational material. The most popular way to generate animations of 3D models is motion capture, which relies on motion-capture devices and 3D digitizers. In this method, 3D models are first digitized using 3D scanners. Second, the moving structures of the models (e.g., skeletons and joints) and their motion parameters (e.g., translation, rotation, sliding, and deformation) are defined through a combination of automatic processing and user interaction. Third, motion sequences are captured using optical or magnetic motion-capture devices. Finally, the animations, consisting of the motion sequences and the 3D models, are stored in animation databases for later use.
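
To make the stored result of this pipeline concrete, the following is a minimal sketch of how a captured animation record (a model, its skeleton of joints, and per-frame motion parameters) might be represented before it is written to an animation database. All class and field names here are hypothetical illustrations, not the chapter's actual schema, and the sketch assumes motion is sampled at a fixed frame rate.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Joint:
    """One joint of a model's moving structure (skeleton). Hypothetical layout."""
    name: str
    parent: str | None  # None marks the root joint
    dofs: tuple[str, ...]  # e.g. ("rx", "ry", "rz") for a ball joint


@dataclass
class MotionSequence:
    """Captured motion: one value per DOF per frame, sampled at a fixed rate."""
    fps: float
    # channels[(joint_name, dof)] -> list of samples, one per frame
    channels: dict[tuple[str, str], list[float]] = field(default_factory=dict)

    def frame_count(self) -> int:
        return max((len(v) for v in self.channels.values()), default=0)

    def duration(self) -> float:
        """Seconds from the first to the last sample."""
        n = self.frame_count()
        return (n - 1) / self.fps if n > 1 else 0.0


@dataclass
class Animation:
    """What the database stores: a model, its skeleton, and a motion sequence."""
    model_name: str
    skeleton: list[Joint]
    motion: MotionSequence

    def validate(self) -> bool:
        """Every motion channel must refer to a joint and DOF of the skeleton."""
        known = {(j.name, d) for j in self.skeleton for d in j.dofs}
        return all(key in known for key in self.motion.channels)


walk = Animation(
    model_name="man",
    skeleton=[
        Joint("hips", None, ("tx", "ty", "tz", "rx", "ry", "rz")),
        Joint("left_knee", "hips", ("rx",)),
    ],
    motion=MotionSequence(fps=30.0, channels={("left_knee", "rx"): [0.0, 20.0, 40.0, 20.0]}),
)
print(walk.validate(), walk.motion.duration())  # True 0.1
```

Keeping the skeleton and the motion channels separate, linked only by joint and DOF names, is one simple way to let a motion be checked against, or later applied to, a different skeleton.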

To reuse models and motion sequences, the captured motion sequences may need to be transformed (e.g., by changing their speed or timing) or applied to other 3D models. Research on reusing animations aims to devise systems and methods that transform existing animations to meet new requirements. A few techniques have been proposed for transforming animations. In [2], motions are treated as signals so that traditional signal processing methods can be applied while preserving the flavor of the original motion. Similar techniques have been described in [4, 5, 11].
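
As a simple illustration of treating motion as a signal, the sketch below changes the playback speed of one uniformly sampled joint curve by resampling it with linear interpolation. This is ordinary resampling chosen for brevity; it is not the specific method of [2] or of the other cited works, and the function name `resample` is hypothetical.

```python
def resample(samples: list[float], speed: float) -> list[float]:
    """Play a uniformly sampled motion curve `speed` times faster (speed > 1)
    or slower (speed < 1), keeping the original frame rate.

    New frame k reads the original curve at time k * speed (measured in
    original frames), linearly interpolating between neighbouring samples.
    """
    if speed <= 0:
        raise ValueError("speed must be positive")
    if len(samples) < 2:
        return list(samples)
    last = len(samples) - 1
    out = []
    k = 0
    while k * speed <= last:
        t = k * speed
        i = int(t)  # index of the original sample at or before time t
        if i >= last:
            out.append(samples[last])
        else:
            frac = t - i
            out.append(samples[i] * (1 - frac) + samples[i + 1] * frac)
        k += 1
    return out


knee = [0.0, 10.0, 20.0, 30.0, 40.0]  # e.g. knee angle in degrees, one value per frame
print(resample(knee, 2.0))  # [0.0, 20.0, 40.0]
print(resample(knee, 0.5))  # [0.0, 5.0, 10.0, 15.0, 20.0, 25.0, 30.0, 35.0, 40.0]
```

Applying the same resampling to every channel of a motion sequence changes its overall speed; more elaborate signal-processing edits (filtering, frequency-band adjustment) operate on the same per-channel representation.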

1.1 Database Approach to Reusing Animations

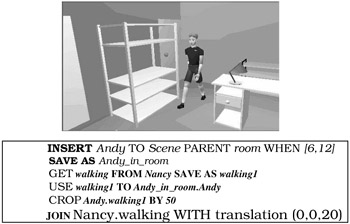

In this chapter, we consider the issue of generating new animation sequences from existing animation models and motion sequences. For this purpose, we use a database approach based on an augmented scene graph. For this augmented scene graph based animation database, we provide a set of spatial, temporal, and motion adjustment operations. These operations include reusing existing models in a new scene graph, applying the motion of one model to another, and retargeting the motion sequence of a model to meet new constraints. Using the augmented scene graph model and the proposed operations, we have developed an animation toolkit that helps users generate new animation sequences from existing models and motion sequences. For instance, we may have two different animations: one in which a man is walking and another in which the same man, or a different one, is waving his hands. We can reuse the walking and waving motions to generate a new animation of a man who waves his hand while walking. Figure 17.1 shows the SQL-like operations on the databases and the animation generated with the toolkit.

Figure 17.1: An animation reuse example applying a walking sequence of a woman to a man
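
The walking-and-waving example can be illustrated with a toy scene graph in which each node carries per-DOF motion samples. The sketch below, whose names (`Node`, `combine`, the joint names) are all hypothetical and which is not the toolkit's SQL-like operations, copies motion from a "base" animation everywhere except in one subtree, which takes its motion from an "overlay" animation. It assumes the graphs share node names and that both motions have the same length and frame rate.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Node:
    """A scene graph node; `motion` maps a DOF name to its per-frame samples."""
    name: str
    children: list[Node] = field(default_factory=list)
    motion: dict[str, list[float]] = field(default_factory=dict)

    def find(self, name: str) -> Node | None:
        """Depth-first search for a node by name."""
        if self.name == name:
            return self
        for child in self.children:
            hit = child.find(name)
            if hit is not None:
                return hit
        return None

    def names(self) -> list[str]:
        """Names of this node and all its descendants, depth-first."""
        result = [self.name]
        for child in self.children:
            result.extend(child.names())
        return result


def combine(target: Node, base: Node, overlay: Node, overlay_root: str) -> None:
    """Give `target` the motion of `base`, except for the subtree rooted at
    `overlay_root`, which takes its motion from `overlay`. Nodes are matched
    by name across the three graphs."""
    sub = target.find(overlay_root)
    overlay_names = set(sub.names()) if sub is not None else set()
    for name in target.names():
        source = overlay if name in overlay_names else base
        src_node = source.find(name)
        dst_node = target.find(name)
        if src_node is not None and dst_node is not None:
            dst_node.motion = {dof: list(v) for dof, v in src_node.motion.items()}


def skeleton() -> Node:
    # Tiny hypothetical skeleton: hips -> left_leg, hips -> right_shoulder -> right_elbow
    return Node("hips", [Node("left_leg"), Node("right_shoulder", [Node("right_elbow")])])


walk, wave, man = skeleton(), skeleton(), skeleton()
walk.find("left_leg").motion = {"rx": [0.0, 30.0, 0.0]}         # stepping
walk.find("right_shoulder").motion = {"rx": [5.0, -5.0, 5.0]}   # arm swing while walking
wave.find("right_shoulder").motion = {"rz": [90.0, 90.0, 90.0]}  # arm raised
wave.find("right_elbow").motion = {"ry": [0.0, 45.0, 0.0]}       # waving
combine(man, walk, wave, "right_shoulder")  # walking man with a waving right arm
```

Matching nodes by name keeps the sketch short; applying motion between models with different structures needs an explicit joint correspondence, which is what motion mapping techniques such as the one the chapter cites address.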

The toolkit provides a comprehensive set of graphical user interfaces (GUIs) that help users manipulate scene graph structures, apply motion sequences to models, and edit both motions and model features. Hence, users of the toolkit do not have to remember the syntax of the SQL-like database operations. We use existing solutions from the literature for applying motion sequences to different models and for retargeting motion sequences to meet different constraints. The current implementation of the toolkit uses the motion mapping technique proposed in [8] to apply the motion of one model to a different model, and the inverse kinematics technique proposed in [13] to retarget motion sequences to meet new constraints.

The toolkit can handle animations represented in the Virtual Reality Modeling Language (VRML). Apart from VRML, animations are also represented in other standard formats, such as MPEG-4 (Moving Picture Experts Group) and SMIL (Synchronized Multimedia Integration Language), and in proprietary formats such as Microsoft PowerPoint. MPEG-4 includes a standard for describing 3D scenes called BInary Format for Scenes (BIFS). BIFS is similar to VRML, with additional features for the compressed representation and streaming of animated data. SMIL, a recommendation of the World Wide Web Consortium (W3C), incorporates features for describing animations in a multimedia presentation. Microsoft PowerPoint also supports animations.

Reusing animations becomes more general and more challenging when the animations are represented in different formats and stored in different databases, and the resulting new animation must be in a specific format for presentation. We use a mediator approach to handle multiple databases of animations in different formats. The mediator is an eXtensible Markup Language (XML) Document Type Definition (DTD) that describes the features of models and motions in a uniform manner. Animations in VRML, MPEG-4 BIFS, SMIL, and Microsoft PowerPoint are mapped onto this XML DTD, which is in turn mapped onto a relational database for querying and reusing the animation models and motion sequences.
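
The last step of the mediator, mapping a uniform XML description onto relational tables, can be sketched with Python's standard `xml.etree.ElementTree` and `sqlite3` modules. The XML element names, attributes, and table layout below are hypothetical stand-ins for illustration only; they are not the chapter's actual DTD or database schema.

```python
import sqlite3
import xml.etree.ElementTree as ET

# Hypothetical uniform XML description of models and motions.
DOC = """<animations>
  <model name="man" format="VRML">
    <joint name="hips"/>
    <joint name="left_knee" parent="hips"/>
    <joint name="right_elbow" parent="hips"/>
  </model>
  <motion name="walk" model="man" fps="30">
    <channel joint="left_knee" dof="rx">0 20 40 20</channel>
  </motion>
  <motion name="wave" model="man" fps="30">
    <channel joint="right_elbow" dof="ry">0 45 0</channel>
  </motion>
</animations>"""

SCHEMA = """
CREATE TABLE model  (name TEXT PRIMARY KEY, format TEXT);
CREATE TABLE joint  (model TEXT, name TEXT, parent TEXT);
CREATE TABLE motion (name TEXT PRIMARY KEY, model TEXT, fps REAL);
CREATE TABLE sample (motion TEXT, joint TEXT, dof TEXT, frame INTEGER, value REAL);
"""


def load(xml_text: str) -> sqlite3.Connection:
    """Shred the XML description into relational tables in an in-memory database."""
    db = sqlite3.connect(":memory:")
    db.executescript(SCHEMA)
    root = ET.fromstring(xml_text)
    for m in root.iter("model"):
        db.execute("INSERT INTO model VALUES (?, ?)", (m.get("name"), m.get("format")))
        for j in m.iter("joint"):
            # A missing parent attribute becomes NULL (the root joint).
            db.execute("INSERT INTO joint VALUES (?, ?, ?)",
                       (m.get("name"), j.get("name"), j.get("parent")))
    for mo in root.iter("motion"):
        db.execute("INSERT INTO motion VALUES (?, ?, ?)",
                   (mo.get("name"), mo.get("model"), float(mo.get("fps"))))
        for ch in mo.iter("channel"):
            # Channel text is a whitespace-separated list of per-frame values.
            for frame, value in enumerate(ch.text.split()):
                db.execute("INSERT INTO sample VALUES (?, ?, ?, ?, ?)",
                           (mo.get("name"), ch.get("joint"), ch.get("dof"), frame, float(value)))
    db.commit()
    return db


db = load(DOC)
# Which stored motions drive the right elbow? (a candidate for reuse)
rows = db.execute(
    "SELECT DISTINCT motion FROM sample WHERE joint = ? ORDER BY motion", ("right_elbow",)
).fetchall()
print(rows)  # [('wave',)]
```

Storing each sample as its own row makes queries over joints and DOFs straightforward, at the cost of many rows per motion; a real mapping would likely make a different trade-off.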

1.2 Motion Manipulation

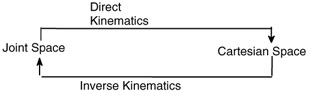

The database approach to reusing motions and models requires adjusting the results of queries on animation databases to new situations while, at the same time, keeping the desired properties of the original models and motions. Motion sequences can also be manipulated using a technique called inverse kinematics, originally developed for robot control [20]. Inverse kinematics is a motion editing technique suited especially to articulated figures. An articulated figure is a structure consisting of multiple components connected by joints, e.g., the arms and legs of humans and robots. An end effector is the last piece of a branch of an articulated figure, e.g., the hand at the end of an arm. Its location is defined in Cartesian space by six parameters: three for position and three for orientation. Each joint has a number of degrees of freedom (DOFs), and together all DOFs form the joint (or configuration) space of the articulated figure (Figure 17.2). Computing the position and orientation of the end effector in Cartesian space from given values of all DOFs in joint space is called direct kinematics. Inverse kinematics solves the opposite problem: finding DOF values that place the end effector at a desired position and orientation. Section 3.2 discusses this issue in more detail.

Figure 17.2: Direct and inverse kinematics
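
The difference between direct and inverse kinematics can be seen on the simplest articulated figure: a planar arm with two links (lengths `l1`, `l2`) and one rotational DOF at each joint. This is a deliberately reduced illustration, not the technique of [13]: the joint space has only two DOFs and the end effector is described by a 2D position rather than six Cartesian parameters. Direct kinematics is a closed-form evaluation; the inverse problem here also has a closed-form answer, but it is ambiguous (two elbow configurations) and may have no solution when the target is out of reach. Function names are hypothetical.

```python
import math


def forward(theta1: float, theta2: float, l1: float, l2: float) -> tuple[float, float]:
    """Direct kinematics: joint angles (radians) -> end-effector position (x, y)."""
    x = l1 * math.cos(theta1) + l2 * math.cos(theta1 + theta2)
    y = l1 * math.sin(theta1) + l2 * math.sin(theta1 + theta2)
    return x, y


def inverse(x: float, y: float, l1: float, l2: float) -> tuple[float, float]:
    """Inverse kinematics: target position -> one pair of joint angles.

    Returns the solution with theta2 in [0, pi]; the mirrored configuration
    (-theta2) is the other solution. Raises ValueError if the target is unreachable.
    """
    # Law of cosines gives the cosine of the elbow angle.
    c2 = (x * x + y * y - l1 * l1 - l2 * l2) / (2 * l1 * l2)
    if not -1.0 <= c2 <= 1.0:
        raise ValueError("target out of reach")
    theta2 = math.acos(c2)
    # Direction to the target minus the angular offset introduced by the elbow.
    theta1 = math.atan2(y, x) - math.atan2(l2 * math.sin(theta2), l1 + l2 * math.cos(theta2))
    return theta1, theta2


t1, t2 = inverse(1.2, 0.5, 1.0, 1.0)
x, y = forward(t1, t2, 1.0, 1.0)
print(round(x, 6), round(y, 6))  # 1.2 0.5
```

For full articulated figures with many DOFs and a six-parameter end-effector target, closed-form solutions are generally unavailable, and inverse kinematics is typically solved numerically.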
