Planning a Testing Strategy

To produce applications that are reliable and do not fail when your users are depending on them, you must make sure that all code is thoroughly tested before releasing it. The best testing strategy requires that code be tested in various ways throughout the development phase and not just when the application is completed and ready to be deployed. By testing early, you can often catch defects while they are still easy to fix and do not affect other parts of the application code.

Many organizations prefer to defer testing to the end of a project. They look at testing as an activity that adds a burden of time to the project schedule (and money to the project budget), when they would prefer to move quickly ahead with the coding. Most experts in the field of software project management disagree with this viewpoint and point out that it is several times more costly to wait until the application is complete to begin identifying defects and fixing them.

If your project team has done a good job of analyzing the requirements for the project and writing a good functional specification, then that information can be used directly when designing your test strategy. Each item in a functional specification should be documented in such a way that the resulting code can be tested to determine whether it does, in fact, satisfy the requirements set forth in the specification.

Design goals for a software project often include specifications for performance, reliability, and other desirable characteristics. When testing your application, you should keep these goals in mind. Here are some testing recommendations for common design goals:

Availability: Availability means that the application is available when users need it and that it does not experience downtime resulting in a loss of time, money, or opportunity for the business. Testing for availability should include tests of external resources (such as database servers and network bandwidth) to make sure they can handle the demands of your application. You should also test maintenance procedures and disaster recovery procedures to determine how long the application will be offline.

Manageability: Manageability means that maintenance and ongoing monitoring of application performance can be carried out easily. Testing for manageability should include testing on different hardware configurations and testing any code in the application that provides instrumentation for performance monitoring.

Performance: Performance measures include response times or number of transactions performed per time unit that were part of the original functional specifications for your application. Testing for performance includes determining baseline performance and then “stress testing” your application to see at what point greater levels of demand will cause your application to fail.

Reliability: Reliability means that your application produces consistent results under any conditions. Testing for reliability includes testing each component with a variety of input data and with peak usage demands. Equally important is testing the system as a whole with the same type of stresses. Reliability testing requires testing in a real-world environment, reflecting actual use conditions. Reliability tests are often designed to find a way to make the application fail.

Scalability: Scalability describes the application’s ability to serve increasing numbers of users or to perform increasing numbers of transactions, while still maintaining acceptable performance measurements. Testing for scalability includes many of the same activities as performance testing.

Securability: Securability addresses your application’s resistance to exploitation by those who are interested in breaking into your systems. Testing for securability includes making sure that code runs at the lowest level of privilege necessary, that user input is validated, and that your code cannot be used to perform destructive operations, such as overwriting disk files.
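The performance recommendation above (establish a baseline, then raise the load until something gives) can be sketched in a few lines. This is a minimal illustration, not a full load-testing tool. The function names `measure_baseline` and `find_breaking_point` are hypothetical, and treating "slower than a time limit" as the failure condition is an assumption made for the example.

```python
import statistics
import time


def measure_baseline(operation, runs=20):
    """Call `operation` `runs` times and return the median duration in seconds."""
    durations = []
    for _ in range(runs):
        start = time.perf_counter()
        operation()
        durations.append(time.perf_counter() - start)
    return statistics.median(durations)


def find_breaking_point(operation_for_load, loads, time_limit):
    """Return the first load whose median time exceeds `time_limit`, or None.

    `operation_for_load` is a hypothetical callable that takes a load level
    (for example, a number of simulated requests) and performs that much work.
    """
    for load in loads:
        median = measure_baseline(lambda: operation_for_load(load), runs=5)
        if median > time_limit:
            return load
    return None
```

In practice the load levels and the time limit would come from the performance figures in the functional specification.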

With these larger goals in mind, you can begin writing test cases for your application. Because it is good practice to test throughout application development, in this section you will look at three types of testing that you can include at different phases of the application development cycle: unit testing, integration testing, and regression testing. You will also learn how to test for globalization.

Unit Testing

The application developer typically carries out unit testing on his or her own code. Unit testing determines whether a single set of code, perhaps a single class or a component that contains a few related classes, is correctly performing its tasks. Code should be tested with a range of data, representing both valid input values and invalid ones. The code should return consistent results on valid data and handle error conditions caused by the invalid test data.

After you have created a test application that exercises your modules by calling their methods with all of the different test data, you can reuse it: run the tests again each time the module is changed in any way. This way, you can confirm that subsequent changes to the module do not cause new errors.
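A reusable unit test of the kind described above might look like the following sketch. It uses Python's standard `unittest` module for brevity. The `calculate_tax` function and its validation rules are hypothetical stand-ins for whatever unit you are testing.

```python
import unittest
from decimal import Decimal


def calculate_tax(amount, rate):
    """Hypothetical unit under test: return the tax owed on `amount`."""
    if amount < 0:
        raise ValueError("amount must not be negative")
    if not (0 <= rate <= 1):
        raise ValueError("rate must be between 0 and 1")
    return (amount * rate).quantize(Decimal("0.01"))


class CalculateTaxTests(unittest.TestCase):
    # Valid input: the result should be consistent and correctly rounded.
    def test_valid_input_gives_expected_result(self):
        self.assertEqual(
            calculate_tax(Decimal("100.00"), Decimal("0.07")), Decimal("7.00")
        )

    def test_zero_amount_is_allowed(self):
        self.assertEqual(calculate_tax(Decimal("0"), Decimal("0.07")), Decimal("0.00"))

    # Invalid input: the unit should report the error rather than return a value.
    def test_negative_amount_is_rejected(self):
        with self.assertRaises(ValueError):
            calculate_tax(Decimal("-1"), Decimal("0.07"))

    def test_rate_above_one_is_rejected(self):
        with self.assertRaises(ValueError):
            calculate_tax(Decimal("100"), Decimal("1.5"))
```

If the code is saved as a module, the tests can be rerun after every change with `python -m unittest`.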

The functional specifications for the application should provide information for generating test data. The specifications should include information about valid input and output values for each method that you code.

Unit testing is cost-effective because it will catch defects at the very earliest point in the development cycle. Defects are less costly and easier to fix when you are focusing on only one small section of code at a time.

Integration Testing

After individual modules or components have been verified as working to specification, they can be put into service by other developers who are working on other parts of the application. For example, you might develop a component to calculate tax information that will be used by ASP.NET web developers. The ASP.NET developers are mostly concerned with creating a user interface but will call your component, and others, to perform complex calculations. Integration testing makes sure that calls are being made correctly to your component and that the return results are in the correct format.

As your application becomes more complex, data might be passed through several components to achieve the final results. Integration testing should begin by testing the interaction between each pair of components. After that has been verified as working correctly, you can test the interactions between multiple components as they will actually occur when the application is in production.
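As a sketch of a pairwise integration test, the example below checks one caller against one component. Both classes are hypothetical: `TaxCalculator` stands in for a component that has already passed its unit tests, and `OrderSummary` stands in for the user-interface code that calls it.

```python
from decimal import Decimal


class TaxCalculator:
    """Hypothetical component, assumed to be unit tested already."""

    def tax_for(self, amount, rate):
        return (amount * rate).quantize(Decimal("0.01"))


class OrderSummary:
    """Hypothetical caller, standing in for the user-interface layer."""

    def __init__(self, calculator):
        self.calculator = calculator

    def total_line(self, subtotal, rate):
        tax = self.calculator.tax_for(subtotal, rate)
        return f"Total: {subtotal + tax:.2f}"


def test_return_value_has_expected_format():
    # The caller relies on a Decimal with exactly two decimal places.
    tax = TaxCalculator().tax_for(Decimal("19.99"), Decimal("0.08"))
    assert isinstance(tax, Decimal)
    assert tax.as_tuple().exponent == -2


def test_summary_and_calculator_work_together():
    # Exercise the real pair of components rather than a stand-in.
    summary = OrderSummary(TaxCalculator())
    assert summary.total_line(Decimal("19.99"), Decimal("0.08")) == "Total: 21.59"
```

Once each pair behaves correctly, the same approach extends to longer chains of components.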

Regression Testing

Regression testing is done when changes or additions are made to your application. In addition to testing the code that was changed or is new, regression testing tests all of the previously tested parts of the application to make sure the new code has not inadvertently caused an error to occur in another part of the system.

Regression testing can be automated and will most likely consist of running the test cases developed during unit and integration testing. The goal of regression testing is to make sure that all code that was working correctly before the change is still working correctly afterwards.
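One way to automate this is to collect the existing unit and integration test cases into a single suite that is run after every change. The sketch below assumes Python's `unittest` module. The two test classes and the `add` function are hypothetical placeholders for your real tests.

```python
import unittest


def add(a, b):
    """Hypothetical code under test."""
    return a + b


class UnitTests(unittest.TestCase):
    def test_add(self):
        self.assertEqual(add(2, 3), 5)


class IntegrationTests(unittest.TestCase):
    def test_add_results_can_be_summed(self):
        self.assertEqual(sum([add(1, 1), add(2, 2)]), 6)


def build_regression_suite():
    """Gather every previously written test case into one suite."""
    loader = unittest.TestLoader()
    suite = unittest.TestSuite()
    for case in (UnitTests, IntegrationTests):
        suite.addTests(loader.loadTestsFromTestCase(case))
    return suite


def run_regression_suite():
    """Run the whole suite and report whether everything still passes."""
    result = unittest.TextTestRunner(verbosity=0).run(build_regression_suite())
    return result.wasSuccessful()
```

A build script could call `run_regression_suite()` and refuse to proceed when it returns `False`.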

Testing for Globalization

You might be required to run your application in an environment that uses different locale settings from those that it was originally developed with. In other scenarios, you might be exchanging data that was created on a computer running under a different locale. In these cases, it is important to test your application with multicultural test data to make sure that those items that vary from culture to culture, such as dates, currency, and separator characters in numbers, are interpreted correctly by your application.
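The following sketch shows what multicultural test data can look like. The same number and the same date are written the way three cultures would write them, and the test checks that each form is interpreted identically. The hand-written `CONVENTIONS` table and the two parsing helpers are assumptions made for illustration. A real application would rely on the platform's culture settings instead.

```python
from datetime import date, datetime
from decimal import Decimal

# Assumed conventions for illustration only; not read from the operating system.
CONVENTIONS = {
    "en-US": {"decimal": ".", "group": ",", "date": "%m/%d/%Y"},
    "de-DE": {"decimal": ",", "group": ".", "date": "%d.%m.%Y"},
    "en-GB": {"decimal": ".", "group": ",", "date": "%d/%m/%Y"},
}


def parse_number(text, culture):
    """Strip the grouping separator, then normalize the decimal separator."""
    rules = CONVENTIONS[culture]
    plain = text.replace(rules["group"], "").replace(rules["decimal"], ".")
    return Decimal(plain)


def parse_date(text, culture):
    """Parse a short date using the culture's day/month ordering."""
    return datetime.strptime(text, CONVENTIONS[culture]["date"]).date()


# Each row is the same value as written in a different culture.
TEST_DATA = [
    ("en-US", "1,234.56", "03/04/2024"),
    ("de-DE", "1.234,56", "04.03.2024"),
    ("en-GB", "1,234.56", "04/03/2024"),
]


def test_values_are_interpreted_consistently():
    for culture, number_text, date_text in TEST_DATA:
        assert parse_number(number_text, culture) == Decimal("1234.56")
        assert parse_date(date_text, culture) == date(2024, 3, 4)
```

Note that the en-US and en-GB date strings differ only in field order, which is exactly the kind of ambiguity this test data is meant to expose.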

If your application’s user interface is going to be localized, you also need to make sure that all text string information is contained in a resource file and that strings that will be displayed to the user are not coded into the source code. Be aware that the length of string data might change greatly when the text is translated into another language, so make sure that your code and your user interface can accommodate strings of varying lengths.
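Both checks in the paragraph above can be automated. In this sketch an in-memory dictionary stands in for a resource file, and the tests look for missing translations and for strings that exceed a layout limit. The `RESOURCES` table, the helper names, and the 12-character limit are all hypothetical.

```python
# Hypothetical stand-in for per-culture resource files.
RESOURCES = {
    "en": {"save": "Save", "cancel": "Cancel"},
    "de": {"save": "Speichern", "cancel": "Abbrechen"},
}

MAX_BUTTON_CHARS = 12  # hypothetical limit imposed by the user interface layout


def get_string(key, culture, fallback="en"):
    """Look up a display string, falling back to the default culture."""
    table = RESOURCES.get(culture, RESOURCES[fallback])
    return table.get(key, RESOURCES[fallback][key])


def missing_keys(culture, reference="en"):
    """List keys defined for the reference culture but not for `culture`."""
    return sorted(set(RESOURCES[reference]) - set(RESOURCES[culture]))


def overlong_strings(limit=MAX_BUTTON_CHARS):
    """List (culture, key) pairs whose text is longer than `limit`."""
    return [
        (culture, key)
        for culture, table in RESOURCES.items()
        for key, text in table.items()
        if len(text) > limit
    ]
```

A test run would then assert that `missing_keys` and `overlong_strings` both return empty lists for every supported culture.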

You are a software developer for an organization that is cautiously moving to the .NET platform. Your manager is concerned that inexperience with the platform will lead to mistakes in design. Management is also concerned that developers will overlook important considerations that will cause problems down the road, such as security vulnerabilities or problems interoperating with existing applications. Standard testing and debugging procedures can provide confidence that your code is performing correctly, but they can’t tell you if you are missing important features.

Your manager also wants the team to do a better job of following a set of standard naming conventions across all projects. After all, because everyone is learning a new programming platform and languages, this is a perfect time to instill some good habits.

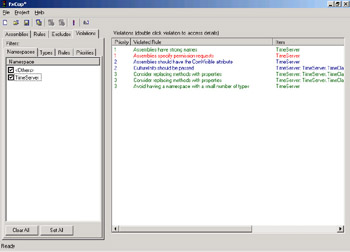

You have been assigned the tasks of researching standards and best practices for developing on the .NET platform, recommending procedures that your team can use to make sure that their first attempts are successful, and ensuring that best practices and coding standards are enforced. Your web research pays off quickly when you read some comments on a developer forum about FxCop. FxCop is a tool from Microsoft that checks your assemblies and verifies the code against a set of rules based on the Microsoft .NET Framework Design Guidelines. Each of these rules verifies that your code includes important .NET Framework features, such as security permission requests, or does not include common errors that could slow performance. The FxCop program includes a comprehensive set of rules that cover such areas as:

- COM interoperability
- Class design
- Globalization
- Naming conventions
- Performance
- Security

You can also create new rules that apply to your own projects, or choose to exclude some of the existing rules when analyzing your code. The screenshot in this section shows the FxCop analysis of TimeServer.dll, which was part of the exercise in Chapter 3, “Creating and Managing .NET Remoting Objects.”

FxCop supports many other features that will help you to create an automated process to make sure that all of your team’s code is checked regularly. You can save sets of rules and exclusions on a per-project basis. You can also save analysis reports as XML (or plain text) files, so management can review them.
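To make the idea of a naming-convention rule concrete, here is a toy check written in Python. It is not FxCop's code or API. FxCop analyzes compiled assemblies, whereas this sketch only inspects a list of names, and its simplified pattern for Pascal casing is an assumption that ignores the guidelines' finer points, such as acronym handling.

```python
import re

# Simplified Pascal-case pattern: one or more words, each starting with a capital.
PASCAL_CASE = re.compile(r"^(?:[A-Z][a-z0-9]*)+$")


def naming_violations(names):
    """Return the names that do not follow the simplified Pascal-case pattern."""
    return [name for name in names if not PASCAL_CASE.match(name)]
```

For example, `naming_violations(["TimeServer", "get_time"])` flags only `get_time`.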

FxCop is available as a free download from the www.gotdotnet.com site: http://www.gotdotnet.com/team/libraries/.

If you want to learn more about the .NET Framework Design Guidelines, you can find that information at http://msdn.microsoft.com/library/default.asp?url=/library/en-us/cpgenref/html/cpconnetframeworkdesignguidelines.asp.
