Search Administration

Search administration is now conducted entirely within the SSP. The portal (now known as the Corporate Intranet Site) is no longer tied directly to the search and indexing administration. This section discusses the administrative tasks that you'll need to undertake to effectively administer search and indexing in your environment. Specifically, it discusses how to create and manage content sources, configure the crawler, set up crawl rules (formerly known as site path rules), and throttle the crawler through the crawler impact rules. This section also discusses index management and provides some best practices along the way.

Creating and Managing Content Sources

The index can hold only the information that you have configured Search to crawl. We crawl information by creating content sources. The creation and configuration of a content source and associated crawl rules involves creating the rules that govern where the crawler goes to get content, when the crawler gets the content, and how the crawler behaves during the crawl.

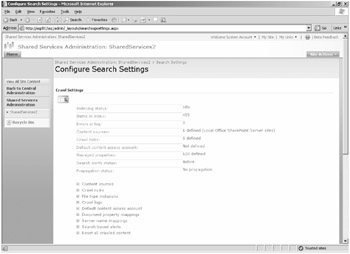

To create a content source, we must first navigate to the Configure Search Settings page. To do this, open your SSP administrative interface and click the Search Settings link under the Search section. Clicking on this link will bring you to the Configure Search Settings page (shown in Figure 16-2).

Figure 16-2: The Configure Search Settings page

Notice that you are given several bits of information right away on this page, including the following:

- Indexing status
- Number of items in the index
- Number of errors in the crawler log
- Number of content sources
- Number of crawl rules defined
- Which account is being used as the default content access account
- The number of managed properties that are grouping one or more crawled properties
- Whether search alerts are active or deactivated
- Current propagation status

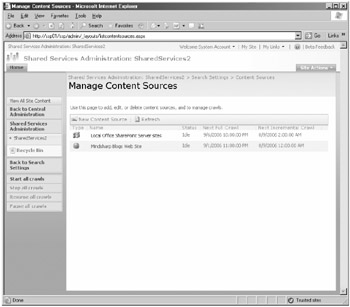

This list can be considered a search administrator's dashboard to instantly give you the basic information you need to manage search across your enterprise. Once you have familiarized yourself with your current search implementation, click the Content Sources link to begin creating a new content source. When you click this link, you'll be taken to the Manage Content Sources page (shown in Figure 16-3). On this page, you'll see a listing of all the content sources, the status of each content source, and when the next full and incremental crawls are scheduled.

Figure 16-3: Manage Content Sources administration page

Note that there is a default content source that is created in each SSP: Local Office SharePoint Server Sites. By default, this content source is not scheduled to run or crawl any content. You'll need to configure the crawl schedules manually. This source includes all content that is stored in the sites within the server or server farm. You'll need to ensure that if you plan on having multiple SSPs in your farm, only one of these default content sources is scheduled to run. If more than one are configured to crawl the farm, you'll unnecessarily crawl your farm's local content multiple times, unless users in different SSPs all need the farm content in their indexes, which would then beg the question as to why you have multiple SSPs in the first place.

If you open the properties of the Local Office SharePoint Server Sites content source, you'll note also that there are actually two start addresses associated with this content source and they have two different URL prefixes: HTTP and SPS3. By default, the HTTP prefix will point to the SSP's URL. The SPS3 prefix is hard-coded to inform Search to crawl the user profiles that have been imported into that SSP's user profile database.

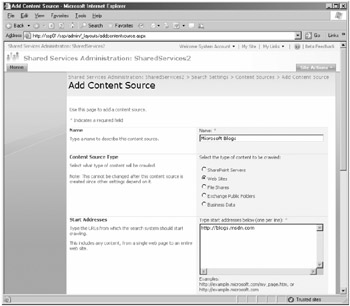

To create a new content source, click the New Content Source button. This will bring you to the Add Content Source dialog page (Figure 16-4). On this page, you'll need to give the content source a name. Note that this name must be unique within the SSP, and it should be intuitive and descriptive, especially if you plan to have many content sources.

Figure 16-4: Add Content Source page (upper half)

| Note | If you plan to have many content sources, it would be wise to develop a naming convention that maps to the focus of the content source so that you can recognize the content source by its name. |

Notice also, as shown in the figure, that you'll need to select which type of content source you want to create. Your selections are as follows:

- SharePoint Servers: This content source is meant to crawl SharePoint sites and simplifies the user interface so that some choices are already made for you.
- Web Sites: This content source type is intended to be used when crawling Web sites.
- File Shares: This content source will use traditional Server Message Block and Remote Procedure Calls to connect to a share on a folder.
- Exchange Public Folders: This content source is optimized to crawl content in an Exchange public folder.
- Business Data: Select this content source if you want to crawl content that is exposed via the Business Data Catalog.

| Note | You can have multiple start addresses for your content source. This improvement over SharePoint Portal Server 2003 is welcome news for those who needed to crawl hundreds of sites and were forced into managing hundreds of content sources. Note that while you can enter different types of start addresses into the start address input box for a given content source, it is not recommended that you do this. Best practice is to enter start addresses that are consistent with the content source type configured for the content source. |

Assume you have three file servers that host a total of 800,000 documents. Now assume that you need to crawl 500,000 of those documents, and those 500,000 documents are exposed via a total of 15 shares. In the past, you would have had to create 15 content sources, one for each share. But today, you can create one content source with 15 start addresses, schedule one crawl, and create one set of crawl rules for that one content source. Pretty nifty!
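To make the grouping concrete, here is a minimal Python sketch of the idea, not anything SharePoint itself exposes. The `ContentSource` class, the `EXPECTED_SCHEMES` mapping, and the server and share names are all hypothetical and exist only for illustration. The sketch models one content source with 15 start addresses and flags any start address whose URL prefix does not fit the content source type, in line with the best practice in the preceding note.

```python
from dataclasses import dataclass, field
from urllib.parse import urlsplit

# Assumption: an illustrative mapping of content source type to URL prefixes.
# Only two types are modeled here; the names are made up for this sketch.
EXPECTED_SCHEMES = {
    "file_shares": {"file"},
    "web_sites": {"http", "https"},
}


@dataclass
class ContentSource:
    name: str
    source_type: str
    start_addresses: list = field(default_factory=list)

    def inconsistent_addresses(self):
        """Return start addresses whose prefix does not fit the source type."""
        allowed = EXPECTED_SCHEMES[self.source_type]
        return [
            address
            for address in self.start_addresses
            if urlsplit(address).scheme.lower() not in allowed
        ]


# One content source covering 15 shares spread over three hypothetical servers.
shares = ContentSource(
    name="File Servers - Engineering",
    source_type="file_shares",
    start_addresses=[
        f"file://fileserver{1 + i % 3}/share{i + 1}" for i in range(15)
    ],
)

print(len(shares.start_addresses), "start addresses")
print("Inconsistent:", shares.inconsistent_addresses())
```

A check like this is only a planning aid: it helps you catch a Web address that was pasted into a file share content source before you schedule the crawl.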

Planning your content sources is now easier because you can group similar content targets into a single content source. Your only real limitation is the timing of the crawl and the length of time required to complete the crawl. For example, performing a full crawl of blogs.msdn.com will take more than two full days. So grouping other blog sites with this site might be unwise.

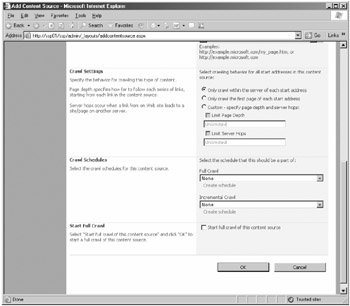

The balance of the Add Content Source page (shown in Figure 16-5) involves specifying the crawl settings and the crawl schedules and deciding whether you want to start a full crawl manually.

Figure 16-5: Add Content Source page, lower half (Web site content source type is illustrated)

The crawl settings instruct the crawler how to behave relative to depth and breadth given the different content source types. Table 16-2 lists each of these types and associated options.

| Type of crawl | Crawler setting options | Notes |
|---|---|---|
| SharePoint site | | This will crawl all site collections at this start address, not just the root site in the site collection. In this context, hostname means URL namespace. This option includes new site collections inside a managed path. |
| Web site | | In this context, Server means URL namespace (for example, contoso.msft). This means that only a single page will be crawled. "Page depth" refers to page levels in a Web site hierarchy. "Server hops" refers to changing the URL namespace, that is, changes in the Fully Qualified Domain Name (FQDN) that occur before the first "/" in the URL. |
| File shares | | |
| Exchange public folders | | What is evident here is that you'll need a different start address for each public folder tree. |
| Business Data Catalog | | |
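The page depth and server hops settings can be pictured with a short Python sketch. This is an illustration under stated assumptions, not the crawler's actual logic: it treats page depth as the number of links followed from the start address and a server hop as any change of host name along that chain. The function names and the partner.example host are hypothetical.

```python
from urllib.parse import urlsplit


def count_server_hops(url_chain):
    """Count how often the host name changes along a chain of followed links."""
    hosts = [urlsplit(url).hostname for url in url_chain]
    return sum(1 for previous, current in zip(hosts, hosts[1:]) if previous != current)


def within_limits(url_chain, max_page_depth, max_server_hops):
    """Return True if the last URL in the chain is still allowed to be crawled.

    Assumption: the start address is depth 0 and each followed link adds 1.
    """
    page_depth = len(url_chain) - 1
    return (
        page_depth <= max_page_depth
        and count_server_hops(url_chain) <= max_server_hops
    )


chain = [
    "http://contoso.msft/",
    "http://contoso.msft/products",
    "http://partner.example/catalog",
]
print(count_server_hops(chain))
print(within_limits(chain, max_page_depth=2, max_server_hops=0))
```

With a page depth of 0 and 0 server hops, only the start address itself passes the check, which corresponds to crawling a single page.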

The crawl schedules allow you to schedule both full and incremental crawls. Full index builds will treat the content source as new. Essentially, the slate is wiped clean and you start over crawling every URL and content item and treating that content source as if it has never been crawled before. Incremental index builds update new or modified content and remove deleted content from the index. In most cases, you'll use an incremental index build.

You'll want to perform full index builds in the following scenarios because only a full index build will update the index to reflect the changes in these scenarios:

- Any changes to crawl inclusion/exclusion rules.
- Any changes to the default crawl account.
- Any upgrade to a Windows SharePoint Services site, because an upgrade action deletes the change log and a full crawl must be initiated when there is no change log to reference for an incremental crawl.
- Changes to .aspx pages.
- When you add or remove an iFilter.
- When you add or remove a file type.
- Changes to property mappings will happen on a document-by-document basis as each affected document is crawled, whether the crawl is an incremental or full crawl. A full crawl of all content sources will ensure that document property mapping changes are applied consistently throughout the index.

Now, there are a couple of planning issues that you need to be aware of. The first has to do with full index builds, and the second has to do with crawl schedules. First, you need to know that subsequent full index builds that are run after the first full index build of a content source will start the crawl process and add to the index all the content items it finds. Only after the build process is complete will the original set of content items in the index be deleted. This is important to note because the index can be anywhere from 10 percent to 40 percent of the size of the content (also referred to as the corpus) you're crawling, and for a brief period of time, you'll need twice the amount of disk space that you would normally need to host the index for that content source.

For example, assume you are crawling a file server with 500,000 documents, and the total amount of disk space for these documents is 1 terabyte. Then assume that the index is roughly equal to 10 percent of the size of these documents, or 100 GB. Further assume that you completed a full index build on this file server 30 days ago, and now you want to do another full index build. When you start to run that full index build, several things will be true:

- A new index will be created for that file server during the crawl process.
- The current index of that file server will remain available to users for queries while the new index is being built.
- The current index will not be deleted until the new index has successfully been built.
- At the moment in time when the new index has successfully finished and the deletion of the old index for that file server has not started, you will be using 200 percent of disk space to hold that index.
- The old index will be deleted item by item. Depending on the size and number of content items, that could take from several minutes to many hours.
- Each deletion of a content item will result in a warning message for that content source in the Crawl Log. Even if you delete the content source, the Crawl Log will still display the warning messages for each content item for that content source. In fact, deleting the content source will result in all the content items in the index being deleted, and the Crawl Log will reflect this too.
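The disk space arithmetic in this example can be written as a small Python sketch. The function name is hypothetical, and the calculation assumes only what the text states: the index is a fixed percentage of the corpus, and a repeated full index build briefly needs room for both the old and the new index.

```python
def full_rebuild_disk_gb(corpus_gb, index_percent):
    """Estimate index disk usage for a content source, in GB.

    corpus_gb:     size of the crawled content (the corpus)
    index_percent: index size as a percentage of the corpus (10 to 40 in the text)
    """
    index_gb = corpus_gb * index_percent / 100
    return {
        "steady_state_gb": index_gb,   # one copy of the index
        "peak_gb": 2 * index_gb,       # old and new index side by side
    }


# The file server example: 1 terabyte of documents, index at 10 percent.
print(full_rebuild_disk_gb(corpus_gb=1000, index_percent=10))
# The same corpus at the upper end of the range.
print(full_rebuild_disk_gb(corpus_gb=1000, index_percent=40))
```

For the file server in the example, that works out to 100 GB in normal operation and 200 GB at the peak of the rebuild. At 40 percent, the same corpus would need 800 GB at the peak.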

The scheduling of when indexes should be run is a planning issue. "How often should I crawl my content sources?" The answer to this question is always the same: The frequency of content changes combined with the level of urgency for the updates to appear in your index will dictate how often you crawl the content. Some content, such as old reference documents that rarely, if ever, change, might be crawled once a year. Other documents, such as daily or hourly memo updates, can be crawled daily, hourly, or every 10 minutes.

Administrating Crawl Rules

Formerly known as site path rules, crawl rules let you apply additional instructions to the crawler when it crawls certain sites.

For the default content source in each SSP (the Local Office SharePoint Server Sites content source), Search provides two default crawl rules that are hard coded and can't be changed. These rules are applied to every http://ServerName added to the default content source and do the following:

- Exclude all .aspx pages within http://ServerName
- Include all the content displayed in Web Parts within http://ServerName

For all other content sources, you can create crawl rules that give additional instructions to the crawler on how to crawl a particular content source. You need to understand that rule order is important, because the first rule that matches a particular set of content is the one that is applied. The exception to this is a global exclusion rule, which is applied regardless of the order in which the rule is listed. The next section runs through several common scenarios for applying crawl rules.

| Note | Do not use rules as another way of defining content sources or providing scope. Instead, use rules to specify more details about how to handle a particular set of content from a content source. |

Specifying a Particular Account to Use When Crawling a Content Source

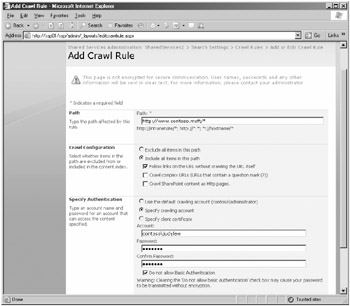

The most common reason people implement a crawl rule is to specify an account that has at least Read permissions (which are the minimum permissions needed to crawl a content source) on the content source so that the information can be crawled. When you select the Specify Crawling Account option (shown in Figure 16-6), you're enabling the text boxes you use to specify an individual crawling account and password. In addition, you can specify whether to allow Basic Authentication. Obviously, none of this means anything unless you have the correct path in the Path text box.

Figure 16-6: Add Crawl Rule configuration page

Crawling Complex URLs

Another common scenario that requires a crawl rule is when you want to crawl URLs that contain a question mark (?). By default, the crawler will stop at the question mark and not crawl any content that is represented by the portion of the URL that follows the question mark. For example, say you want to crawl the URL http://www.contoso.msft/default.aspx?top=courseware. In the absence of a crawl rule, the portion of the Web site represented by "top=courseware" would not be crawled and you would not be able to index the information from that part of the Web site. To crawl the courseware page, you need to configure a crawl rule.

So how would you do this, given our example here? First, you enter a path. Referring back to Figure 16-6, you'll see that all the examples given on the page have the wildcard character "*" included in the URL. Crawl rules cannot work with a path that doesn't contain the "*" wildcard character. So, for example, http://www.contoso.msft would be an invalid path. To make this path valid, you add the wildcard character, like this: http://www.contoso.msft/*.

Now you can set up crawl rules that are global and apply to all your content sources. For example, if you want to ensure that all complex URLs are crawled across all content sources, enter a path of http://*/* and select the Include All Items In This Path option plus the Crawl Complex URLs check box. That is sufficient to ensure that all complex URLs are crawled across all content sources for the SSP.
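The behavior described in this section can be sketched in Python as follows. This is a simplified model, not the crawler's implementation. The `CrawlRule` class and `effective_url` function are hypothetical names, the sketch assumes a rule's path is matched against the full URL, and it does not model the special ordering of global exclusion rules. It shows three things from the text: a path must contain the "*" wildcard, the first matching rule wins, and without the Crawl Complex URLs option the crawler stops at the question mark.

```python
import re
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass
class CrawlRule:
    path: str                         # for example "http://www.contoso.msft/*"
    include: bool = True              # False models an exclusion rule
    crawl_complex_urls: bool = False  # True keeps the part after "?"

    def __post_init__(self):
        if "*" not in self.path:
            raise ValueError("a crawl rule path must contain the '*' wildcard")

    def matches(self, url: str) -> bool:
        # Translate the "*" wildcard into a regular expression.
        pattern = ".*".join(re.escape(part) for part in self.path.split("*"))
        return re.fullmatch(pattern, url, flags=re.IGNORECASE) is not None


def effective_url(url: str, rules: Sequence[CrawlRule]) -> Optional[str]:
    """Return the URL that would be crawled, or None if it is excluded."""
    # Rule order matters: only the first matching rule is applied.
    rule = next((r for r in rules if r.matches(url)), None)
    if rule is not None and not rule.include:
        return None
    if rule is not None and rule.crawl_complex_urls:
        return url
    # Default behavior: stop at the question mark.
    return url.split("?", 1)[0]


url = "http://www.contoso.msft/default.aspx?top=courseware"
global_rule = CrawlRule("http://*/*", include=True, crawl_complex_urls=True)
print(effective_url(url, []))
print(effective_url(url, [global_rule]))
```

With no rules, the sketch returns http://www.contoso.msft/default.aspx, so the courseware content is never reached. With the global http://*/* rule in place, the full URL is returned.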

Crawler Impact Rules

Crawler Impact Rules are the old Site Hit Frequency Rules that were managed in Central Administration in the previous version; although the name has changed to Crawler Impact Rules, they are still managed in Central Administration in this version.

Crawler Impact Rules is a farm-level setting, so whatever you decide to configure at this level will apply to all content sources across all SSPs in your farm. To access the Crawler Impact Rules page from the Application Management page, perform these steps.

- Click Manage Search Service.
- Click Crawler Impact Rules.

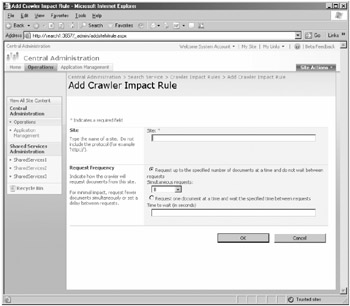

To add a new rule, click the Add Rule button in the navigation bar. The Add Crawler Impact Rule page will appear (shown in Figure 16-7).

Figure 16-7: Add Crawler Impact Rule page

You'll configure the page based on the following information. First, the Site text box is really not the place to enter the name of the Web site. Instead, you can enter global URLs, such as http://* or http://*.com or http://*.contoso.msft. In other words, although you can enter a crawler impact rule for a specific Web site, sometimes you'll enter a global URL.

Notice that you then set a Request Frequency rule. There are really only two options here: how many documents to request in a single request and how long to wait between requests. The default behavior of the crawler is to ask for eight documents per request and wait zero seconds between requests. Generally, you input a number of seconds between requests to conserve bandwidth. If you enter one second, that will have a noticeable impact on how fast the crawler crawls the content sources affected by the rule. And generally, you'll input a lower number of documents to process per request if you need to ensure better server performance on the part of the target server that is hosting the information you want to crawl.
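To get a feel for why even a one-second wait is noticeable, the following Python sketch estimates how much idle time a Request Frequency rule adds to a crawl. It is a back-of-the-envelope model with a hypothetical function name. It assumes the crawler issues requests strictly one after another and ignores the time the target server needs to answer each request.

```python
import math


def added_wait_seconds(total_documents, documents_per_request=8, wait_seconds=0):
    """Total time spent waiting between requests for one crawl.

    The defaults mirror the default crawler behavior described above:
    eight documents per request and zero seconds between requests.
    """
    if documents_per_request < 1:
        raise ValueError("documents_per_request must be at least 1")
    requests = math.ceil(total_documents / documents_per_request)
    # The crawler waits between requests, so there is one wait fewer
    # than there are requests.
    return max(requests - 1, 0) * wait_seconds


# 500,000 documents with the default batch size and a one-second wait.
seconds = added_wait_seconds(500_000, documents_per_request=8, wait_seconds=1)
print(f"{seconds} seconds, or about {seconds / 3600:.1f} hours of waiting")
```

Under these assumptions, a one-second wait adds more than 17 hours to a crawl of 500,000 documents, and halving the number of documents per request roughly doubles that figure.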

SSP-Level Configurations for Search

When you create a new SSP, you'll have several configurations that relate to how search and indexing will work in your environment. This section discusses those configurations.

-

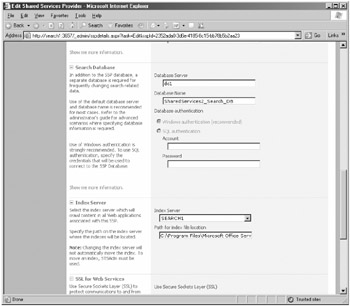

First, you'll find these configurations on the Edit Shared Services Provider configuration page (not illustrated), which can be found by clicking the Create Or Configure This Farm's Shared Services link in the Application Management tab in Central Administration.

-

Click the down arrow next to the SSP you want to focus on, and click Edit from the context list.

-

Scroll to the bottom of the page (as shown in Figure 16-8), and you'll see that you can select which Index Server will be the crawler for the all the content sources created within this Web application. You can also specify the path on the Index Server where you want the indexes to be held. As long as the server sees this path as a local drive, you'll be able to use it. Remote drives and storage area network (SAN) connections should work fine as long as they are mapped and set up correctly.

Figure 16-8: Edit Shared Services Provider page (lower portion)

Managing Index Files

If you're coming from a SharePoint Portal Server 2003 background, you'll be happy to learn that you have only one index file for each SSP in SharePoint Server 2007. As a result, you don't need to worry anymore about any of the index management tasks you had in the previous version.

Having said that, there are some index file management operations that you'll want to pay attention to. This section outlines those tasks.

Continuous Propagation

The first big improvement in SharePoint Server 2007 is the Continuous Propagation feature. Essentially, instead of copying the entire index from the Index server to the Search server (using SharePoint Portal Server 2003 terminology here) every time a change is made to that index, now you'll find that as information is written to the Content Store on the Search server (using SharePoint Server 2007 terminology now), it is continuously propagated to the Query server.

Continuous propagation is the act of ensuring that all the indexes on the Query servers are kept up to date by copying the indexes from the Index servers. As the indexes are updated by the crawler, those updates are quickly and efficiently copied to the Query servers. Remember that users query the index sitting on the Query server, not the Index server, so the faster you can update the indexes on the Query server, the faster you'll be able to give updated information to users in their result set.

Continuous propagation has the following characteristics:

- Indexes are propagated to the Query servers as they are updated within 30 seconds after the shadow index is written to the disk.
- The update size must be at least 4 KB. There is no maximum size limitation.
- Metadata is not propagated to the query servers. Instead, it is directly written to the SSP's Search SQL database.
- There are no registry entries to manage, and these configurations are hard-coded.

Propagation uses the NetBIOS name of query servers to connect. Therefore, it is not a best practice to place a firewall between your Query server and Index server in SharePoint Server 2007 due to the number of ports you would need to open on the firewall.

Resetting Index Files

Resetting the index file is an action you'll want to take only when necessary. When you reset the index file, you completely clean out all the content and metadata in both the property and content stores. To repopulate the index file, you need to re-crawl all the content sources in the SSP. These crawls will be full index builds, so they will be both time consuming and resource intensive.

You would reset the index when you suspect that it has somehow become corrupted, perhaps due to a power outage or power supply failure, and needs to be rebuilt.

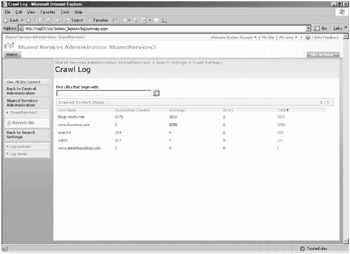

Troubleshooting Crawls Using the Crawl Logs

If you need to see why the crawler isn't crawling certain documents or certain sites, you can use the crawl logs to see what is happening. The crawl logs can be viewed on a per-content-source basis. They can be found by clicking on the down arrow for the content source in the Manage Content Sources page and selecting View Crawl Log to open the Crawl Log page (as shown in Figure 16-9). You can also open the Crawl Log page by clicking on the Log Viewer link in the Quick Launch bar of the SSP team site.

Figure 16-9: Crawl Log page

After this page is opened, you can filter the log in the following ways:

- By URL
- By date
- By content source
- By status type
- By last status message

The status message of each document appears below the URL along with a symbol indicating whether or not the crawl was successful. You can also see, in the right-hand column, the date and time that the message was generated.

There are three possible status types:

- Success: The crawler was able to successfully connect to the content source, read the content item, and pass the content to the Indexer.
- Warning: The crawler was able to connect to the content source and tried to crawl the content item, but it was unable to for one reason or another. For example, if your crawl rules are excluding a certain type of content, you might receive the following warning message (note that the message uses the old terminology for crawl rules):

  The specified address was excluded from the index. The site path rules may have to be modified to include this address.

- Error: The crawler was unable to communicate with the content source. Error messages might say something like this:

  The crawler could not communicate with the server. Check that the server is available and that the firewall access is configured correctly.

Another very helpful element in the Crawl Log page (refer back to Figure 16-9 if needed) is the Last Status Message drop-down list. The list that you'll see is filtered by which status types you have in focus. If you want to see all the messages that the crawler has produced, be sure to select All in the Status Type drop-down list. However, if you want to see only the Warning messages that the crawler has produced, select Warning in the Status Type drop-down list. Once you see the message you want to filter on, select it and you'll see the results of all the crawls within the date range you've specified appear in the results list. This should aid troubleshooting substantially. This feature is very cool.

If you want to get a high-level overview of the successes, warnings, and error messages that have been produced across all your content sources, the Log Summary view of the Crawl Log page is for you. To view the log summary view, click on the Crawl Logs link from the Configure Search Settings page. The summary view should appear. If it does not, click the Log Summary link in the left pane and it will appear (as shown in Figure 16-10). Each of the numbers on the page represents a link to the filtered view of the log. So if you click on one of the numbers in the page, you'll find that the log will have already filtered the view based on the status type without regard to date or time.

Figure 16-10: Log Summary view of the Crawl Log

Working with File Types

The file type inclusions list specifies the file types that the crawler should include or exclude from the index. Essentially, the way this works is that if the file type isn't listed on this screen, search won't be able to crawl it. Most of the file types that you'll need are already listed along with an icon that will appear in the interface whenever that document type appears.

To manage file types, click on the File Type Inclusions link on the Configure Search Settings page. This will bring you to the Manage File Types screen, as illustrated in Figure 16-11.

Figure 16-11: Manage File Types screen

To add a new file type, click on the New File Type button and enter the extension of the file type you want to add. All you need to enter are the file type's extension letters, such as "pdf" or "cad." Then click OK. Note that even though the three-letter extensions on the Manage File Types page represent a link, when you click the link, you won't be taken anywhere.

Adding the file type here really doesn't buy you anything unless you also install the iFilter that matches the new file type and the icon you want used with this file type. All you're doing on this screen is instructing the crawler that if there is an iFilter for these types of files and if there is an associated icon for these types of files, then go ahead and crawl these file types and load the file's icon into the interface when displaying this particular type of file.

Third-party iFilters that need to be added here will usually supply you with a .dll to install into the SharePoint platform, and they will usually include an installation routine. You'll need to ensure you've installed their iFilter into SharePoint in order to crawl those document types. If they don't supply an installation program for their iFilter, you can try running the following command from the command line:

regsvr32.exe <path\name of iFilter .dll>

This should load their iFilter .dll file so that Search can crawl those types of documents. If this command line doesn't work, contact the iFilter's manufacturer for information on how to install their iFilter into SharePoint.

To load the file type's icon, upload the icon (preferably a small .gif file) to the drive:\program files\common files\Microsoft shared\Web server extensions\12\template\images directory. After uploading the file, write down the name of the file, because you'll need to modify the docicon.xml file to include the icon as follows:

<Mapping Key="<doc extension>" Value="NameofIconFile.gif"/>

After this, restart your server and the icon should appear. In addition, you should be able to crawl and index those file types that you've added to your SharePoint deployment.
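If you maintain several servers, the docicon.xml edit described above could be scripted. The following Python sketch is one possible approach, not a supported tool. It assumes that the mappings by file extension live inside a `ByExtension` element under the root of docicon.xml, so verify that layout against your own file first. The function name, the sample XML, and the icon file names are hypothetical, and you should back up the real file before changing it.

```python
import xml.etree.ElementTree as ET


def add_icon_mapping(docicon_xml, extension, icon_file):
    """Return docicon.xml content with a Mapping for the given extension.

    Assumption: extension mappings are children of a <ByExtension> element.
    An existing mapping for the same extension is updated instead of duplicated.
    """
    root = ET.fromstring(docicon_xml)
    by_extension = root.find("ByExtension")
    if by_extension is None:
        by_extension = ET.SubElement(root, "ByExtension")
    for mapping in by_extension.findall("Mapping"):
        if mapping.get("Key", "").lower() == extension.lower():
            mapping.set("Value", icon_file)
            break
    else:
        ET.SubElement(by_extension, "Mapping", {"Key": extension, "Value": icon_file})
    return ET.tostring(root, encoding="unicode")


# A tiny stand-in for the real file, used only to demonstrate the function.
sample = (
    "<DocIcons><ByExtension>"
    '<Mapping Key="doc" Value="icdoc.gif" />'
    "</ByExtension></DocIcons>"
)
print(add_icon_mapping(sample, "pdf", "pdficon.gif"))
```

The function works on the file's text rather than on a path, so you can test it against a copy of docicon.xml before writing anything back to the server.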

Even if the iFilter is loaded and enabled, if you delete the file type from the Manage File Types screen, search will not crawl that file type. Also, if you have multiple SSPs, you'll need to add the desired file types into each SSP's configurations, but you'll only need to load the .dll and the icon one time on the server.

Creating and Managing Search Scopes

A search scope provides a way to logically group items in the index together based on a common element. This helps users target their query to only a portion of the overall index and gives them a more lean, relevant result set. After you create a search scope, you define the content to include in that search scope by adding scope rules, specifying whether to include or exclude content that matches that particular rule. You can define scope rules based on the following:

- Address
- Property query
- Content source

You can create and define search scopes at the SSP level or at the individual site-collection level. SSP-level search scopes are called shared scopes, and they are available to all the sites configured to use a particular SSP.

Search scopes can be built off of the following items:

- Managed properties
- Any specific URL
- A file system folder
- Exchange public folders
- A specific host name
- A specific domain name

Managed properties are built by grouping one or more crawled properties. Hence, there are really two types of properties that form the Search schema: crawled properties and managed properties. The crawled properties are properties that are discovered and created "on the fly" by the Archival plug-in. When this plug-in sees new metadata that it has not seen before, it grabs that metadata field and adds the crawled property to the list of crawled properties in the search schema. Managed properties are properties that you, the administrator, create.

The behavior choices are to include any item that matches the rule, require that every item in the scope match this rule, or exclude items matching this rule.

| Note | Items are matched to their scope via the scope plug-in during the indexing and crawl process. Until the content items are passed through the plug-in from a crawl process, they won't be matched to the scope that you've created. |
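One way to reason about how the three behaviors combine is the following Python sketch. It is an interpretation for planning purposes, not the scope plug-in's actual algorithm. It assumes that an item is in the scope when no exclude rule matches it, every require rule matches it, and at least one include rule matches it (or, if there are no include rules, at least one require rule exists). The `ScopeRule` class, the `in_scope` function, and the example rules are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Iterable


@dataclass
class ScopeRule:
    behavior: str                   # "include", "require", or "exclude"
    matches: Callable[[dict], bool]


def in_scope(item: dict, rules: Iterable[ScopeRule]) -> bool:
    rules = list(rules)
    # Exclude: items matching this rule are left out of the scope.
    if any(r.behavior == "exclude" and r.matches(item) for r in rules):
        return False
    # Require: every item in the scope must match this rule.
    if any(r.behavior == "require" and not r.matches(item) for r in rules):
        return False
    includes = [r for r in rules if r.behavior == "include"]
    requires = [r for r in rules if r.behavior == "require"]
    # Include: any item that matches one of these rules is added.
    if includes:
        return any(r.matches(item) for r in includes)
    # Assumption: with only require rules, matching all of them is enough.
    return bool(requires)


example_rules = [
    ScopeRule("include", lambda item: item["url"].startswith("file://search1/docs/")),
    ScopeRule("require", lambda item: item.get("Author") == "John Doe"),
    ScopeRule("exclude", lambda item: item["url"].startswith("file://search1/docs/red/")),
]

document = {"url": "file://search1/docs/green/plan.doc", "Author": "John Doe"}
print(in_scope(document, example_rules))
```

Writing the rules down this way before you create them in the interface can help you spot a require rule that would unintentionally empty the scope.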

Creating and Defining Scopes

To create a new search scope, you'll need to navigate to the Configure Search Settings page, and then scroll down and click the View Scopes link. This opens the View Scopes page, at which point you can click New Scope.

On the Create Scope page (shown in Figure 16-12), you'll need to enter a title for the scope (required) and a description of the scope (optional). The person creating the scope will be the default contact for the scope, but a different user account can be entered if needed. You can also configure a customized results page for users who use this scope, or you can leave the scope at the default option to use the default search results page. Configure this page as needed, and then click OK. This procedure only creates the scope. You'll still need to define the rules that will designate which content is associated with this scope.

Figure 16-12: Create Scope page

After the scope is created on the View Scopes page, the update status for the scope will be "Empty - Add Rules." The "Add Rules" will be a link to the Add Scope Rule page (shown in Figure 16-13), where you can select the rule type and the behavior of that rule.

Figure 16-13: Add Scope Rule default page

Each rule type has its own set of variables. This section discusses the rule types in the order in which they appear in the interface.

Web Address Scope Type

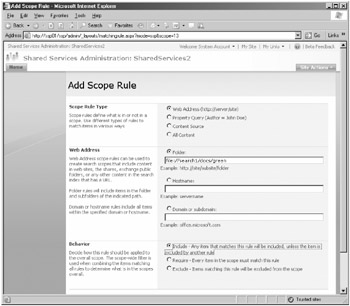

First, the Web Address scope type will allow you to set scopes for file system folders, any specific URL, a specific host name, a specific domain name, and Exchange public folders.

Here are a couple of examples. Start with a folder named "Docs" on a server named "Search1." Suppose that you have the following three folders inside Docs: "red", "green" and "blue." You want to scope just the docs in the green folder. The syntax you would enter, as illustrated in Figure 16-14, would be file://search1/docs/green. You would use the Universal Naming Convention (UNC) sequence in a URL format with the "file" prefix. Even though this isn't mentioned in the user interface (UI), it will work.

Figure 16-14: Configuring a folder search scope
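The conversion from a UNC path to the "file" URL format used in this example is mechanical, as the following Python sketch shows. The function name is hypothetical. Lowercasing the result is an assumption made to match the example above, and the sketch does not encode spaces or other special characters in folder names.

```python
def unc_to_file_url(unc_path):
    """Convert a UNC path such as \\\\Search1\\Docs\\green to file:// form."""
    if not unc_path.startswith("\\\\"):
        raise ValueError("expected a UNC path such as \\\\server\\share")
    # Drop the empty pieces produced by the leading backslashes.
    parts = [part for part in unc_path.split("\\") if part]
    return "file://" + "/".join(parts).lower()


print(unc_to_file_url(r"\\Search1\Docs\green"))
```

For the green folder in the example, this produces file://search1/docs/green, which is the value entered in the scope rule.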

Another example is if you want all content sources related to a particular domain, such as contoso.msft, to be scoped together. All you have to do is click to select the Domain Or Subdomain option and type contoso.msft.

Property Query Scope Type

The Property Query scope type allows you to add a managed property and make it equal to a particular value. The example in the interface is Author=John Doe. However, the default list is rather short and likely won't include the managed property you want to use. If this is the case, you'll need to configure that property to appear in this interface.
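As a rough illustration of what a property query rule expresses, the following sketch tests whether an item's managed property equals a given value. The item dictionaries and the `matches_property_rule` helper are hypothetical, and the case-insensitive comparison is an assumption rather than documented behavior.

```python
def matches_property_rule(item: dict, prop: str, value: str) -> bool:
    # An item without the property can never satisfy the rule.
    actual = item.get(prop)
    if actual is None:
        return False
    # Assumption: compare case-insensitively and ignore surrounding whitespace.
    return str(actual).strip().lower() == value.strip().lower()


items = [
    {"Title": "Budget", "Author": "John Doe"},
    {"Title": "Roadmap", "Author": "Jane Roe"},
    {"Title": "Untitled"},
]
in_scope = [i["Title"] for i in items if matches_property_rule(i, "Author", "John Doe")]
print(in_scope)
```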

To do this, navigate to the Managed Properties View by clicking the Document Managed Properties link on the Configure Search Settings page. This opens the Document Property Mappings page (shown in Figure 16-15).

Figure 16-15: Document Property Mappings page

On this page, there are several columns, including the property name, the property type, whether or not it can be deleted, the actual mapping of the property (more on this in a moment), and whether or not it can be used in scopes. All you need to do to get an individual property to appear in the Add Property Restrictions drop-down list on the Add Scope Rule page is edit the properties of the managed property and then select the Allow This Property To Be Used In Scopes check box.

If you need to configure a new managed property, click New Managed Property (refer back to Figure 16-15), and you will be taken to the New Managed Property page (Figure 16-16). You can configure the property's name, its type, its mappings, and whether it should be used in scope development.

Figure 16-16: New Managed Property page

In the Mappings To Crawled Properties section, you can look at the actual schema of your deployment and select one or more crawled properties (metadata) to group together into a single managed property. For example, assume you have a Word document with a custom metadata field labeled AAA that has a value of BBB. After crawling the document, if you click Add Mapping, you'll be presented with the Crawled Property Selection dialog box. For ease of illustration, select the Office category from the Select A Category drop-down list. Notice that in the Select A Crawled Property selection box, the AAA(Text) metadata was automatically added by the Archival plug-in. (See Figure 16-17.)

Figure 16-17: Finding the AAA metadata in the schema

Once you click OK in the Crawled Property Selection dialog box, you'll find that this AAA property appears in the list under the Mappings To Crawled Properties section. Then select the Allow This Property To Be Used In Scopes check box (property here refers to the Managed Property, not the crawled property), and you'll be able to create a scope based on this property.
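The relationship between crawled properties, managed properties, and the scope check box can be modeled with a small data structure. This is only a sketch: the `ManagedProperty` class, the `scope_rule_choices` helper, the managed property name ProjectCode, and the crawled property ExampleAuthor(Text) are all hypothetical; only AAA(Text) comes from the example above.

```python
from dataclasses import dataclass, field


@dataclass
class ManagedProperty:
    name: str
    # Crawled properties grouped under this managed property.
    crawled_properties: list = field(default_factory=list)
    # Mirrors the Allow This Property To Be Used In Scopes check box.
    allow_in_scopes: bool = False


def scope_rule_choices(properties):
    # Only managed properties flagged for scopes are offered when building a rule.
    return sorted(p.name for p in properties if p.allow_in_scopes)


props = [
    ManagedProperty("Author", ["ExampleAuthor(Text)"], allow_in_scopes=True),
    ManagedProperty("ProjectCode", ["AAA(Text)"]),  # hypothetical managed property
]
before = scope_rule_choices(props)
props[1].allow_in_scopes = True  # select the check box on the managed property
after = scope_rule_choices(props)
print(before, after)
```

Note that the flag lives on the managed property, not on the crawled property it maps to, which matches the distinction made in the text.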

If you want to see all the crawled properties, select the Crawled Properties link in the left pane of the Document Property Mappings page. This takes you to the various groupings of crawled property types. (See Figure 16-18.) Each category name is a link that, when clicked, lists all the properties associated with that category. There is no method of creating new categories within the interface.

Figure 16-18: Listing of the document property categories

Content Source and All Content Scopes

The Content Source rule type allows you to tether a scope directly to a content source. An example of when you would want to do this might be when you have a file server that is being crawled by a single content source and users want to be able to find files on that file server without finding other documents that might match their query. Anytime you want to isolate queries to the boundaries of a given content source, select the Content Source rule type.

The All Content rule type is a global scope that will allow you to query the entire index. You would make this selection if you've removed the default scopes and need to create a scope that allows a query of the entire index.
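The difference between these two rule types can be sketched as two predicates over indexed items. Everything here is illustrative: the `IndexedItem` class, the helper functions, and the content source names "File Server" and "Intranet" are hypothetical, and the sketch assumes an item is in scope when any rule matches it.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class IndexedItem:
    url: str
    content_source: str


def content_source_rule(name):
    # Matches only items that were crawled by the named content source.
    return lambda item: item.content_source == name


def all_content_rule():
    # Matches every item in the index.
    return lambda item: True


def items_in_scope(items, rules):
    # Assumption: an item belongs to the scope if at least one rule matches.
    return [i.url for i in items if any(rule(i) for rule in rules)]


index = [
    IndexedItem("file://search1/docs/green/plan.doc", "File Server"),
    IndexedItem("http://portal.contoso.msft/default.aspx", "Intranet"),
]
file_scope = items_in_scope(index, [content_source_rule("File Server")])
everything = items_in_scope(index, [all_content_rule()])
print(file_scope, everything)
```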

Global Scopes

Thus far, we've been discussing what would be known as Global Scopes, which are scopes that are created at the SSP level. These scopes are considered to be global in nature because they are available to be used across all the site collections that are associated with the SSP via the Web applications. However, creating a scope at the SSP level does you no good unless you enable that scope to be used at the site-collection level.

| Note | Remember that scopes created at the SSP level are merely available for use in the site collections. They don't show up by default until the site collection administrator has manually chosen them to be displayed and used within the site collection. |

Site Collection Scopes

Scopes that are created at the SSP level can be enabled or disabled at the site-collection level. This gives site-collection administrators some authority over which scopes will appear in the search Web Parts within their site collection. The following section describes the basic actions to take to enable a global scope at the site-collection level.

First, open the Site Collection administration menu from the root site in your site collection. Then click on the Search Scopes link under the Site Collection Administration menu to open the View Scopes page. On this page, you can create a new scope just for this individual site collection and/or create a new display group in which to display the scopes that you do create. The grouping gives the site collection administrator a method of organizing search scopes. The methods used to create a new search scope at the site-collection level are identical to those used at the SSP level.

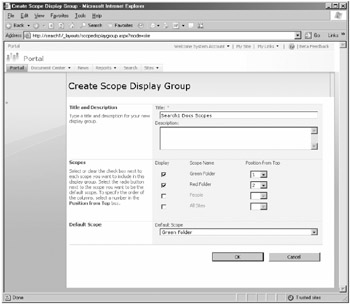

To add a scope created at the SSP level, click New Display Group (as shown in Figure 16-19), and then you'll be taken to the Create Scope Display Group page (shown in Figure 16-20).

Figure 16-19: The View Scopes page

Figure 16-20: The Create Scope Display Group page

On the Create Scope Display Group page, although you can see the scopes created at the SSP level, it appears they are unavailable because they are grayed out. However, when you select the Display check box next to the scope name, the scope is activated for that group. You can also set the order in which the scopes will appear from top to bottom.
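A display group boils down to a set of scopes, each with a Display flag and a position. The following sketch models that idea; the `ScopeEntry` class, the `visible_scopes` helper, and the scope names are hypothetical and are not taken from the product.

```python
from dataclasses import dataclass


@dataclass
class ScopeEntry:
    name: str
    display: bool = False  # mirrors the Display check box
    position: int = 0      # order from top to bottom within the group


def visible_scopes(entries):
    # Only scopes with Display selected appear, sorted by their configured position.
    shown = [e for e in entries if e.display]
    return [e.name for e in sorted(shown, key=lambda e: e.position)]


group = [
    ScopeEntry("Green Docs", display=True, position=2),
    ScopeEntry("Contoso Domain", display=False, position=1),
    ScopeEntry("File Server", display=True, position=1),
]
print(visible_scopes(group))
```

In this model, a scope created at the SSP level stays out of the group until its Display flag is set, which parallels the behavior described above.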

Your configurations at the site-collection level will not appear until the SSP's scheduled interval for updating scopes is completed. You can manually force the scopes to update by clicking the Start Update Now link on the Configure Search Settings page in the SSP.

If you want to remove custom scopes and the Search Center features at the site-collection level, you can do so by clicking the Search Settings link in the Site Collection Administration menu and then clearing the Enable Custom Scopes And Search Center Features check box. Note also that this is where you can specify a different page for this site collection's Search Center results page.

Removing URLs from the Search Results

At times, you'll need to remove individual content items or even an entire batch of content items from the index. Perhaps one of your content sources has crawled inappropriate content, or perhaps there are just a couple of individual content items that you simply don't want appearing in the result set. Regardless of the reasons, if you need to remove search results quickly, you can do so by navigating to the Search Result Removal link in the SSP under the Search section.

When you enter a URL to remove the results, a crawl rule excluding the specific URLs will also be automatically created and enforced until you manually remove the rule. Deleting the content source will not remove the crawl rule.
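The interaction between result removal, the automatically created crawl rule, and content source deletion can be illustrated with a toy model. The `SearchIndexModel` class and its methods are hypothetical and greatly simplified; they only mirror the behavior described in the preceding paragraph.

```python
class SearchIndexModel:
    """Toy model of an index plus URL-exclusion crawl rules (illustrative only)."""

    def __init__(self):
        self.items = {}               # url -> name of the content source that crawled it
        self.exclusion_rules = set()  # URLs the crawler must skip

    def crawl(self, url, content_source):
        # An excluded URL is never indexed again while its rule exists.
        if url in self.exclusion_rules:
            return False
        self.items[url] = content_source
        return True

    def remove_result(self, url):
        # Remove the item and create the matching exclusion rule in one step.
        self.items.pop(url, None)
        self.exclusion_rules.add(url)

    def delete_content_source(self, name):
        # Drops the source's items but deliberately leaves exclusion rules alone.
        self.items = {u: s for u, s in self.items.items() if s != name}

    def delete_rule(self, url):
        # The rule disappears only when it is removed manually.
        self.exclusion_rules.discard(url)


index = SearchIndexModel()
url = "http://contoso.msft/private.aspx"
index.crawl(url, "Intranet")
index.remove_result(url)
recrawled = index.crawl(url, "Intranet")
index.delete_content_source("Intranet")
rule_survives = url in index.exclusion_rules
print(recrawled, rule_survives)
```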

Use this feature as a way to remove obviously spurious or unwanted results. But don't try to use this feature to "carve out" large portions of Web sites unless you have some time to devote to finding each unwanted URL on those sites.

Understanding Query Reporting

Another feature that Microsoft has included in Search is the Query Reporting tool. This tool is built in to the SSP and lets you view reports on the queries and search results across your SSP. Use this information to better understand which scopes might be useful to your users and what searching activities they are engaging in.

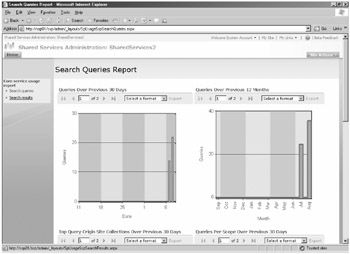

There are two types of reports you can view. The first is the Search Queries Report, which includes basic bar graphs and pie charts. (See Figure 16-21.) On this page, you can read the following types of reports:

- Queries over previous 30 days
- Queries over previous 12 months
- Top query origin site collections over previous 30 days
- Queries per scope over previous 30 days
- Top queries over previous 30 days

Figure 16-21: Search Queries Report page

These reports can show you which group of users is most actively using the Search feature, the most common queries, and the query trends for the last 12 months. For each of these reports, you can export the data in either Microsoft Excel or Adobe Acrobat (PDF) format.
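If you export the report data, aggregations like "top queries" and "queries per scope" are straightforward to reproduce. The sketch below works on a hypothetical in-memory query log of (date, query text, scope) tuples; the log layout, the function names, and the sample data are assumptions and do not reflect the format of the exported files.

```python
from collections import Counter
from datetime import date, timedelta


def recent(log, today, days=30):
    # Keep entries from the last `days` days, up to and including today.
    cutoff = today - timedelta(days=days)
    return [entry for entry in log if cutoff < entry[0] <= today]


def top_queries(log, today, days=30, limit=10):
    # Count queries case-insensitively and return the most frequent first.
    counts = Counter(query.lower() for _, query, _ in recent(log, today, days))
    return counts.most_common(limit)


def queries_per_scope(log, today, days=30):
    return dict(Counter(scope for _, _, scope in recent(log, today, days)))


today = date(2007, 6, 30)
log = [
    (date(2007, 6, 29), "vacation policy", "All"),
    (date(2007, 6, 28), "Vacation Policy", "All"),
    (date(2007, 6, 20), "expense form", "Green Docs"),
    (date(2007, 5, 1), "old query", "All"),  # falls outside the 30-day window
]
print(top_queries(log, today))
print(queries_per_scope(log, today))
```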

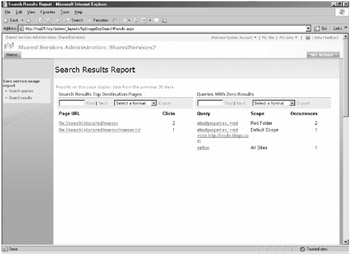

In the left pane, you can click the Search Results link, which displays two more reports (as shown in Figure 16-22):

- Search results top destination pages
- Queries with zero results

Figure 16-22: Search Results Report page

| Note | These reports can help you understand where your users are going when they execute queries and inform not only your scope development, but also end-user training about what queries your users should use to get to commonly accessed locations and which queries not to use. |
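The two Search Results reports can be approximated the same way. This sketch assumes a hypothetical log of (query text, result count, clicked URL or None) tuples; the layout, function names, and sample entries are illustrative assumptions only.

```python
from collections import Counter


def zero_result_queries(log):
    # Queries that returned nothing, most frequent first.
    return Counter(q.lower() for q, count, _ in log if count == 0).most_common()


def top_destinations(log, limit=10):
    # Pages users clicked through to from the results, most frequent first.
    return Counter(url for _, _, url in log if url).most_common(limit)


log = [
    ("vacation policy", 12, "http://portal.contoso.msft/hr/vacation.aspx"),
    ("vacation", 8, "http://portal.contoso.msft/hr/vacation.aspx"),
    ("holiday schedule", 0, None),
    ("Holiday Schedule", 0, None),
    ("expense form", 3, "http://portal.contoso.msft/finance/expense.aspx"),
    ("xpense form", 0, None),
]
print(zero_result_queries(log))
print(top_destinations(log))
```

A frequent zero-result query such as "holiday schedule" in this sample is the kind of signal the note refers to: it points either to missing content or to a term that users should be trained to replace.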
