4.4 Logical Volume Manager (LVM)


| Note | This section contains text derived from Configuring Logical Volume Management (LVM) on Linux for zSeries, TIPS0128, and from the following site: |

A Linux filesystem cannot, by itself, span more than one physical DASD volume. Therefore, for most applications, you have to combine several physical volumes into one larger logical volume.

When considering the layout of a filesystem to hold Domino data, it is often desirable to logically group databases with a common use in the same directory. On a mail server, you may want to break up 1,000 mail users hosted on a server into five directories of 200 mail databases each. On an application server, you may want to create a separate directory for each application.

In either case, these subdirectories may be very large, far exceeding the size of a single DASD volume. Without a volume management system, this limits the size of a filesystem on Linux for zSeries to the size of a DASD volume; for a 3390 model 3, this translates to 2.3 GB of available space for a Linux filesystem. Volume management systems allow multiple physical DASD devices to be combined into a single logical volume which can be used for a Linux filesystem, thereby overcoming the single DASD volume size limit.
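As a rough illustration of this sizing problem, the following shell sketch estimates how many DASD volumes have to be combined to hold a filesystem of a given size. The figures are assumptions for illustration only: about 2300 MB usable per 3390 model 3 (the 2.3 GB quoted above) and a hypothetical 16000 MB mail filesystem. The function name `volumes_needed` is ours.

```shell
#!/bin/sh
# volumes_needed TARGET_MB VOLUME_MB
# Prints how many DASD volumes of VOLUME_MB are needed to hold TARGET_MB.
# The result is rounded up, because a partly used volume still has to be
# allocated as a whole.
volumes_needed() {
    target_mb=$1
    volume_mb=$2
    echo $(( (target_mb + volume_mb - 1) / volume_mb ))
}

# Assumed figures: 2300 MB per volume, 16000 MB wanted.
volumes_needed 16000 2300    # prints 7
```

The estimate ignores any space that a volume manager or the filesystem itself reserves, so treat the result as a lower bound.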

In this section, we describe the concept of Logical Volume Manager (LVM), and how to configure it for Linux on zSeries. But first, let's review the special LVM terminology:

-

A DASD volume is called a physical volume (PV), because that is the volume where the data is physically stored.

-

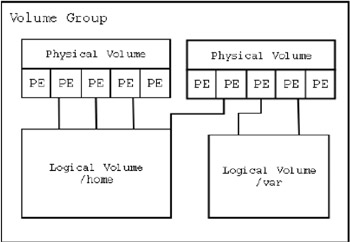

The PV is divided into several physical extents (PEs) of the same size. The PEs are like blocks on the PV.

-

Several PVs make up a volume group (VG), which becomes a pool of PEs available for the logical volume (LV).

The LVs appear as normal devices in /dev/ directory. You can add or delete PVs to or from a VG, and increase or decrease your LVs. The connections between the single elements defined above are shown in Figure 4-2.

Figure 4-2: LVM elements
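The relationship between PVs, PEs, and a VG can be made concrete with a little arithmetic. The sketch below is not part of LVM; the function names are ours, and the usable PV size of 2340 MB is an assumption chosen to match the seven-volume example in 4.4.3 (4095 extents of 4 MB in total). It also ignores the space that LVM reserves on each PV for its own metadata.

```shell
#!/bin/sh
# extents_per_pv PV_MB PE_MB
# Whole physical extents that fit on one PV; any remainder is unused.
extents_per_pv() {
    echo $(( $1 / $2 ))
}

# vg_extents PV_COUNT PV_MB PE_MB
# Size of the extent pool formed by PV_COUNT equally sized PVs.
vg_extents() {
    echo $(( $1 * ($2 / $3) ))
}

extents_per_pv 2340 4    # prints 585
vg_extents 7 2340 4      # prints 4095
```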

Logical Volume Manager provides a fundamental way to manage UNIX storage systems in a scalable, forward-thinking manner. Implementations of the logical volume management concept are available for most UNIX operating systems. These often differ greatly, but all are based on the same fundamental goals and assumptions.

Logical Volume Manager (LVM) abstracts disk devices into pools of storage space called volume groups (VGs). These volume groups may then be subdivided into virtual disks called logical volumes (LVs). These may be used just like regular disks, with filesystems created on them and mounted in the UNIX filesystem tree.

As mentioned, there are many different implementations of logical volume management. One, created by the Open Software Foundation (OSF), was integrated into many UNIX operating systems, and it also serves as a base for the Linux implementation of LVM that is covered here. Note that many other vendors offer logical volume management that is substantially different from the OSF LVM presented here (for example, Sun ships an LVM from Veritas with its Solaris system).

Benefits of logical volume management

Logical volume management provides benefits in the areas of disk management and scalability.

| Note | It is not intended to provide fault-tolerance or extraordinary performance (for this reason, it is often run in conjunction with RAID, which can provide both of those benefits). |

By creating virtual pools of space, an administrator can assign chunks of space based on the needs of a system's users. For instance, an administrator can create dozens of small filesystems for different projects and add space to them as needed with very little disruption.

When a project ends, the administrator can remove the space and return it to the pool of free space. The administrator can even create a logical volume and filesystem that spans multiple disks. (Contrast this with just "slicing up" a hard disk into partitions and placing filesystems on them; they cannot be resized, nor can they span disks.)

Costs of logical volume management

Logical volume management does extract a penalty because of the complexity and system overhead it incurs; it adds an additional logical layer or two between the storage device and the applications.

Volume groups

A volume group should be thought of as a pool of small chunks of available storage. It is made up of one or more physical volumes (partitions or whole disks, called PVs). When it is created, it is divided into a number of same-size chunks called physical extents (PEs). A volume group must contain at least one entire physical volume, but other volumes may be added and removed in real-time as needed.
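The pool idea can be modelled in a few lines of shell. This is a toy model only: it tracks extent counts and does not call LVM or touch any storage. The function names and the figure of 585 extents per PV are assumptions for illustration.

```shell
#!/bin/sh
# Toy model of a volume group as a pool of physical extents (PEs).
vg_free=0

# Add one PV that contributes the given number of PEs to the pool.
vg_add_pv() {
    vg_free=$(( vg_free + $1 ))
}

# Take PEs out of the pool for a logical volume; fail if too few are free.
lv_allocate() {
    if [ "$1" -gt "$vg_free" ]; then
        echo "only $vg_free extents free, $1 requested" >&2
        return 1
    fi
    vg_free=$(( vg_free - $1 ))
}

# Return PEs to the pool when a logical volume is shrunk or removed.
lv_release() {
    vg_free=$(( vg_free + $1 ))
}

# Seven PVs of 585 extents each, then two project volumes.
for n in 1 2 3 4 5 6 7; do vg_add_pv 585; done
lv_allocate 1000
lv_allocate 500
echo "free after allocation: $vg_free"    # 2595
lv_release 1000
echo "free after release: $vg_free"       # 3595
```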

Logical volumes

Logical volumes are virtual disk devices made up of logical extents (LEs). LEs are abstract chunks of storage mapped by the LVM to physical extents in a volume group. A logical volume must always contain at least one LE, but more can be added and removed in real-time.
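To make the LE-to-PE mapping more tangible, the sketch below computes where a given logical extent would land under the simplest possible layout: a linear volume that fills one PV completely before moving on to the next. This layout is an assumption for illustration; a real LVM keeps a mapping table and may place extents elsewhere. The function name `le_to_pe` and the figure of 585 extents per PV are ours.

```shell
#!/bin/sh
# le_to_pe LE_INDEX PE_PER_PV
# For a linear layout over equally sized PVs, print the PV number
# (counting from 0) and the PE number within that PV that back the
# given logical extent.
le_to_pe() {
    echo "pv=$(( $1 / $2 )) pe=$(( $1 % $2 ))"
}

le_to_pe 0 585       # prints pv=0 pe=0
le_to_pe 584 585     # prints pv=0 pe=584
le_to_pe 585 585     # prints pv=1 pe=0
le_to_pe 4094 585    # prints pv=6 pe=584
```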

4.4.1 LVM for Linux

The OSF LVM was implemented on Linux and is now extremely usable and full-featured. There is a home page for this Linux LVM implementation. This LVM is quite similar to the LVM found on HP-UX, Digital, and AIX. It serves as an excellent model and "sandbox" for learning about LVM on those platforms. LVM will probably be integrated into future Linux kernels, but for now it must be added manually.

4.4.2 How to use LVM

LVM can be exercised either from the command line, or by using the setup tool YaST. Note, however, that if the YaST implementation does not complete successfully, your new logical volume could be left in an inconsistent state that may be difficult to correct. Therefore, we list the manual LVM steps.

- Creating physical volumes for LVM

- Registering physical volumes

- Creating a volume group

- Creating a logical volume

- Creating a filesystem on the logical volume

- Adding a disk to the volume group

- Creating a striped logical volume

- Moving data within a volume group

- Removing a logical volume from a volume group

- Removing a disk from the volume group

We give more detail in 6.5.9, "Set up logical volumes" on page 116.
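The first five steps can be sketched as a dry run that only prints the commands for review and executes nothing. The commands and options printed are the ones used in our example in 4.4.3; the function name `print_lvm_plan`, the device names, and the extent count are hypothetical. The later steps in the list (adding disks, striping, moving data, removal) are not covered by this sketch.

```shell
#!/bin/sh
# print_lvm_plan VG LV EXTENTS DISK...
# Print (do not run) the commands to partition each DASD, register the
# partitions as PVs, create a VG and an LV, and put a filesystem on the LV.
print_lvm_plan() {
    vg=$1
    lv=$2
    extents=$3
    shift 3
    parts=""
    for disk in "$@"; do
        echo "fdasd -a $disk"       # one partition spanning the whole disk
        parts="$parts ${disk}1"     # first partition on that disk
    done
    echo "pvcreate$parts"
    echo "vgcreate $vg$parts"
    echo "lvcreate --extents $extents -n $lv /dev/$vg"
    echo "mke2fs /dev/$vg/$lv"
}

# Hypothetical two-disk volume group.
print_lvm_plan mail1 mail1 1170 /dev/dasdf /dev/dasdg
```

Printing the plan first makes it easy to check device names before anything destructive, such as partitioning, is run by hand.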

4.4.3 Our example

These are the steps we used to create the filesystem for one of our Linux systems. During the workload tests, we had to rearrange some of the directories on our DASD, so this example shows you one way to create LVM and directories.

    root@linuxa:/ > vgscan
    vgscan -- reading all physical volumes (this may take a while...)
    vgscan -- "/etc/lvmtab" and "/etc/lvmtab.d" successfully created
    vgscan -- WARNING: This program does not do a VGDA backup of your volume group
    root@linuxa:/ > cd domserva/notesdata
    root@linuxa:/domserva/notesdata > df .
    Filesystem           1K-blocks      Used Available Use% Mounted on
    /dev/dasdb1            2259188   1101640   1157548  49% /
    root@linuxa:/domserva/notesdata > ll
    total 0
    drwxr-xr-x    5 domserva notes         120 Jul 31 19:08 .
    drwxr-xr-x    3 domserva notes          80 Jul 31 19:08 ..
    drwxr-xr-x    2 domserva notes          48 Jul 31 19:08 mail1
    drwxr-xr-x    2 domserva notes          48 Jul 31 19:08 mail2
    drwxr-xr-x    2 domserva notes          48 Jul 31 19:08 translog
    root@linuxa:/domserva/notesdata > df
    Filesystem           1K-blocks      Used Available Use% Mounted on
    /dev/dasdb1            2259188   1101640   1157548  49% /
    /dev/dasdc1            2403184   1735800    667384  73% /opt
    shmfs                   257140         0    257140   0% /dev/shm
    root@linuxa:/domserva/notesdata > pvcreate dasd[f-l]1
    pvcreate -- invalid physical volume name "dasd[f-l]1"
    pvcreate [-d|--debug] [-f[f]|--force [--force]] [-h|--help]
             [-s|--size PhysicalVolumeSize[kKmMgGtT]] [-y|--yes]
             [-v|--verbose] [--version]
             PhysicalVolume [PhysicalVolume...]
    root@linuxa:/domserva/notesdata > for i in d e f g h i j k l m n o p q r s t u
    > do
    > fdasd -a /dev/dasd$i
    > done
    auto-creating one partition for the whole disk...
    writing volume label...
    writing VTOC...
    rereading partition table...
    ...
    auto-creating one partition for the whole disk...
    writing volume label...
    writing VTOC...
    rereading partition table...
    root@linuxa:/domserva/notesdata > pvcreate /dev/dasd[f-l]1
    pvcreate -- physical volume "/dev/dasdf1" successfully created
    pvcreate -- physical volume "/dev/dasdg1" successfully created
    pvcreate -- physical volume "/dev/dasdh1" successfully created
    pvcreate -- physical volume "/dev/dasdi1" successfully created
    pvcreate -- physical volume "/dev/dasdj1" successfully created
    pvcreate -- physical volume "/dev/dasdk1" successfully created
    pvcreate -- physical volume "/dev/dasdl1" successfully created
    root@linuxa:/domserva/notesdata > pvscan
    pvscan -- reading all physical volumes (this may take a while...)
    pvscan -- inactive PV "/dev/dasdf1" is in no VG [2.29 GB]
    pvscan -- inactive PV "/dev/dasdg1" is in no VG [2.29 GB]
    pvscan -- inactive PV "/dev/dasdh1" is in no VG [2.29 GB]
    pvscan -- inactive PV "/dev/dasdi1" is in no VG [2.29 GB]
    pvscan -- inactive PV "/dev/dasdj1" is in no VG [2.29 GB]
    pvscan -- inactive PV "/dev/dasdk1" is in no VG [2.29 GB]
    pvscan -- inactive PV "/dev/dasdl1" is in no VG [2.29 GB]
    pvscan -- total: 7 [16.04 GB] / in use: 0 [0] / in no VG: 7 [16.04 GB]
    root@linuxa:/domserva/notesdata > vgcreate mail1 /dev/dasd[f-l]1
    vgcreate -- INFO: using default physical extent size 4 MB
    vgcreate -- INFO: maximum logical volume size is 255.99 Gigabyte
    vgcreate -- doing automatic backup of volume group "mail1"
    vgcreate -- volume group "mail1" successfully created and activated
    root@linuxa:/domserva/notesdata > vgdisplay mail1
    --- Volume group ---
    VG Name               mail1
    VG Access             read/write
    VG Status             available/resizable
    VG #                  0
    MAX LV                256
    Cur LV                0
    Open LV               0
    MAX LV Size           255.99 GB
    Max PV                256
    Cur PV                7
    Act PV                7
    VG Size               16 GB
    PE Size               4 MB
    Total PE              4095
    Alloc PE / Size       0 / 0
    Free PE / Size        4095 / 16 GB
    VG UUID               lUDs5e-5ROE-mo6C-dMR8-YLS1-jKpq-r0zS7s
    root@linuxa:/domserva/notesdata > lvcreate --extents 4095 -n mail1 /dev/mail1
    lvcreate -- doing automatic backup of "mail1"
    lvcreate -- logical volume "/dev/mail1/mail1" successfully created
    root@linuxa:/domserva/notesdata > mke2fs /dev/mail1/mail1
    mke2fs 1.28 (31-Aug-2002)
    Filesystem label=
    OS type: Linux
    Block size=4096 (log=2)
    Fragment size=4096 (log=2)
    2097152 inodes, 4193280 blocks
    209664 blocks (5.00%) reserved for the super user
    First data block=0
    128 block groups
    32768 blocks per group, 32768 fragments per group
    16384 inodes per group
    Superblock backups stored on blocks:
            32768, 98304, 163840, 229376, 294912, 819200, 884736, 1605632,
            2654208, 4096000
    Writing inode tables: done
    Writing superblocks and filesystem accounting information: done
    This filesystem will be automatically checked every 37 mounts or
    180 days, whichever comes first. Use tune2fs -c or -i to override.
    root@linuxa:/domserva/notesdata > cp /etc/fstab /etc/fstab.org
    root@linuxa:/domserva/notesdata > vi /etc/fstab
    /dev/dasdb1       /                          reiserfs  defaults         1 1
    /dev/dasdc1       /opt                       reiserfs  defaults         1 2
    /dev/dasda1       swap                       swap      pri=42           0 0
    /dev/mail1/mail1  /domserva/notesdata/mail1  ext2      defaults         0 0
    devpts            /dev/pts                   devpts    mode=0620,gid=5  0 0
    proc              /proc                      proc      defaults         0 0
    root@linuxa:/domserva/notesdata > mount /domserva/notesdata/mail1
    root@linuxa:/domserva/notesdata > df -h
    Filesystem            Size  Used Avail Use% Mounted on
    /dev/dasdb1           2.2G  1.1G  1.2G  49% /
    /dev/dasdc1           2.3G  1.7G  652M  73% /opt
    shmfs                 252M     0  252M   0% /dev/shm
    /dev/mail1/mail1       16G   20K   15G   1% /domserva/notesdata/mail1
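The numbers reported in this session are consistent with one another, which can be checked with a few lines of shell arithmetic. The sketch below takes only values shown in the output above (4095 extents, 4 MB extent size, 4096-byte blocks, 5% reserved); the variable names are ours.

```shell
#!/bin/sh
# Cross-check of the figures reported by vgdisplay and mke2fs.
total_pe=4095    # "Total PE" from vgdisplay
pe_mb=4          # "PE Size" from vgdisplay
block_kb=4       # "Block size=4096" from mke2fs

vg_mb=$(( total_pe * pe_mb ))            # size of the logical volume in MB
blocks=$(( vg_mb * 1024 / block_kb ))    # number of 4 KB filesystem blocks
reserved=$(( blocks * 5 / 100 ))         # 5% reserved for the super user

echo "LV size:         $vg_mb MB"        # 16380 MB, shown as 16 GB
echo "blocks:          $blocks"          # 4193280, as reported by mke2fs
echo "reserved blocks: $reserved"        # 209664, as reported by mke2fs
```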
