Automatic Memory Management

The most heralded productivity and correctness enhancing feature of the CLR is its automatic memory management. More specifically, the CLR manages memory entirely on your behalf, using straightforward and performant allocation mechanisms, and using a Garbage Collection (GC) algorithm to eliminate all explicit deallocation of memory from your code. Gone are the days of free and delete! The GC understands when it is legal (and profitable) to reclaim an object's memory when there are no live references to it in the program. Furthermore, it does it intelligently to avoid unnecessary CPU cycles spent on reclaiming unused memory.

You can of course write managed code without understanding how the GC functions, but many of the common pitfalls developers run into are easily avoided with some base-level knowledge of the GC. This section will take you through the allocation, management, and deallocation of your code's memory. We'll also see how the GC provides features for resource lifetime management through a process called finalization.

Allocation

There are three primary areas of memory that the CLR manages: the stack, the small object GC heap, and the large object GC heap. Each method call utilizes more stack space for its arguments and locals, called the activation frame. This is a segment of memory whose current position is tracked with a pointer (stored in the ESP register), which grows and shrinks as frames get pushed and popped off the stack. Any memory inside a frame lives only so long as that method is active. Each managed stack frame does, however, report back to the GC which objects are referred to from within it to ensure that the GC doesn't deallocate an object still in use. The stack is mostly managed by the OS, although the CLR works closely with it.

The CLR GC also manages two per-process Windows dynamic heaps inside the process's address space — each of which is a range of virtual memory addresses — growing and shrinking them as needed. The large object heap is for objects over 80,000 bytes in size, while the small object heap is for all others. They are shared among AppDomains in a single process. These heaps contain instances able to survive longer than the stack frame in which they were allocated. Other heaps may exist inside a Windows process. For example, there is a default heap that is used for unmanaged code, for example C Runtime Library (CRT) allocations; others may be allocated using the HeapCreate Win32 API. The CLR handles reservation, committing, and freeing of pages as your heap grows and shrinks.

In the days of C, you had to use malloc and free to manage your own heap-based memory. C++ added the keyword new to handle the allocation and zeroing of bytes but still mandated explicit freeing memory through delete (or delete[] for arrays). To make matters worse, typical malloc implementations are actually rather expensive. Nearly all CRT implementations manage the free segments of its heap using a linked list. When a block of memory is requested, malloc must walk the free list, searching for a segment large enough to satisfy the allocation. It then splits the block into two segments: one to hold the exact size requested and the other the remainder. Not only does this process involve housekeeping and dereferencing of pointers upon each allocation, but it also causes fragmentation as a result of the constant splitting. Fragmentation can cause large allocations to fail simply because there weren't enough contiguous bytes found (among other problems). We'll explore fragmentation momentarily and see how the GC avoids this problem by using a process called compaction.

When the CLR Allocates Memory

There are a few specific and obvious sources of memory allocations:

-

Allocating new objects with the newobj instruction, that is, using the new keyword in C#.

-

Allocating new arrays with the newarr instruction.

-

Transforming values on the stack into heap objects with the box instruction.

In general, however, there are hidden allocations in numerous places. For example, throw must allocate for wrapping purposes (as described above), and even unbox can allocate if it needs to create a new Nullable<T> instance. Because most of these are undocumented or implementation details, I won't detail all of them here.

Inside the Allocation Process

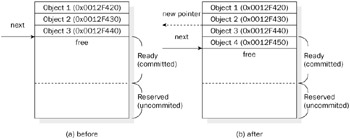

The CLR abstracts allocation and heap management from the developer. To understand this in detail, consider the layout of the small object GC heap, illustrated in Figure 3-3. Because most allocations will occur from the small object heap, large objects are described briefly in less detail below.

Figure 3-3: Heap (a) before and (b) after allocation of a new 16-byte object.

The heap contains a range of virtual addresses; these are pages (units of allocation on Windows, typically 4KB, sometimes 16KB, for example, on IA-64), and are grouped together into segments (units of management by the CLR's GC, typically 16MB). Segments are reserved by the GC using the VirtualAlloc Win32 API and are acquired as the heap grows. Reserved pages are not backed by the process's page-file and are not part of a process's working set. When the GC needs to allocate memory on a reserved segment it must first commit it, again using VirtualAlloc (albeit with different arguments than reservation). Once the GC has committed a segment, it can allocate objects within its address range. As the GC collects objects, it may decommit segements (marking the uncommitted), and finally it could unreserve segments altogether as it no longer needs them, all accomplished using the VirtualFree API.

When new memory is allocated, the GC utilizes a very efficient algorithm to satisfy the request. Namely, when an allocation for n bytes is requested, the GC simply advances the allocation pointer by n bytes (ensuring n is properly aligned), zeroes out the bytes between the old and new pointer value (unless this range came from a page on Windows's zero page list, in which case they have already been zeroed), and returns a pointer to the old location. A full initialization for a newly constructed object using the newobj instruction also involves executing the constructor to initialize state. Of course, there are other types of allocations that don't require such initialization, such as boxing an object or using the initobj IL instruction. If the object is finalizable (i.e., it overrides System.Object.Finalize), the GC registers the new object for finalization at this point.

If the address space is exhausted when the CLR attempts to allocate new memory, or if it fails to commit memory as a result of insufficient physical resources to back memory, it will perform a collection in hopes of reclaiming some free space. If this attempt fails (i.e., there is still not enough memory to satisfy the request after the collection), an OutOfMemoryException is generated by the execution engine. Note that small allocations can still fail as a result of the CLR failing to reserve and commit an entire segment.

Generations

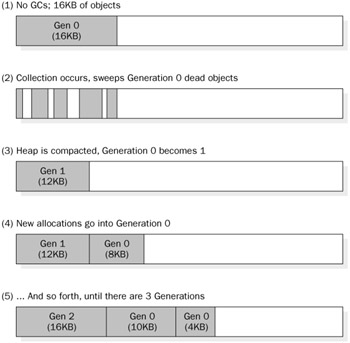

The picture of the world painted above is actually a bit nave. The GC partitions segments of the small object heap into three generations. Generations 0 and 1 are called the ephermal generations, and 2 is called the eldest generation. The ephermal generations can hold only up to a single segment (i.e., usually 16MB), while the eldest can grow unbounded (given enough virtual address space). (As noted above, there is a separate heap for large objects, but this does not get partitioned into generations.) Each generation contains a group of objects that are roughly the same age. Once an object survives a collection, meaning that it was reachable when a collection occurred, it gets promoted to the next generation assuming that it's not in the eldest generation already.

Objects allocated recently usually die young, and thus a lot of memory can be reclaimed just by collecting the generations in the ephermal segment. Full collections — spanning both the ephermal and eldest generations — are often significantly more expensive due to the large ratio of live to dead objects in older generations. The number of object references the GC must traverse to determine liveness in the ordinary eldest generation is disproportional to the amount of memory actually reclaimed when compared to ephermal collections. In simple terms: if an object has already lived a long time, the probability that it has died recently is low. The CLR only performs full collections as absolutely necessary, that is, if ephemeral generations don't contain enough trash.

After a collection happens, the GC may compact the heap. This entails sliding objects downward into the next generation, eliminating any empty space in the heap. The boundaries of each generation are recorded and updated if necessary. Objects previously in generation 0 move into 1, and those previously in 1 move into 2; all new allocations will then go into 0. This means that objects near each other remain near each other but might move around in memory to free up more contiguous memory in the committed segments. Generation 2 is the bit bucket where all old objects end up. Figure 3-4 demonstrates this process.

Figure 3-4: Allocation, collection, and compaction of GC generations.

Locality of Reference

Objects allocated closely have locality as a result of the way the GC allocates in a contiguous address space. Contrast this with the CRT malloc routine, which must traverse a linked list, where each node is likely in separate pages of the address space. When objects are closer together, they can fit together in levels of your processor's cache hierarchy. It often takes less main memory accesses on average to work with items that are closer in memory, leading to significant performance benefits. As we move toward multi-core machines in the future, where cache hierarchies are per-processor and per-core, locality will be of increasing importance to performance.

Unexpected Allocation Failures

There are two related memory problems of significant interest that can occur in any program: Stack Overflow (SO) and Out of Memory (OOM). Both occur when memory cannot be committed due to lack of physical resources or when a reservation cannot be satisfied due to process space exhaustion. It's worth-while to briefly take a look at how the CLR deals with these situations.

Stack Overflow (SO)

SO occurs when committing of stack space cannot be satisfied due to physical resource availability. The CLR works with Windows to handle stack reservation and committing for you as a managed developer. Each managed thread in a process reserves 1MB of stack space. Hosts can set policies for stack reservation that are appropriate to them; for example, SQL Server reserves only 500KB per thread.

Methods in IL indicate their maximum stack requirements in terms of bytes so that the CLR can ensure allocations on the stack inside a single function do not overflow. This essentially has the effect of making available up front the amount of stack necessary to accommodate a single stack frame of a known size. Thus, the CLR can ensure that a SO will occur at the beginning of a method frame rather than an undetermined point within. But of course a very deep call stack can still overcommit more stack space than is available. In the most extreme case, an infinite recursion will easily cause stack overflow; most cases are more accidental than that.

| Note | Of course, the number of times it can recurse before overflowing depends on some implementation details; for example, the CLR might overreserve stack space for some frames, make native code transitions where the stack is determined dynamically, and so forth. If a single stack frame were 8 bytes, and we were dealing with a 1MB reserved stack, it could execute roughly 130,000 times. Your mileage will vary. |

The CLR responds to an overflow differently in 2.0 than previous versions. Namely, it immediately terminates the process using a fail fast. We described this process above. In short, the shutdown process is much like an unhandled exception: the debugger is given a chance to attach, a Windows Event Log entry is written, and a Watson dump is extracted. The reasoning for this behavior is that it's nearly impossible to guarantee correct recovery from a SO condition; attempting to do so can often lead to stack corruption, which can consequently lead to security and reliability problems.

Out of Memory

An OOM condition simply means that an allocation request could not be satisfied. This can happen for a number of reasons (which we will examine in just a moment). The CLR 2.0 has been hardened in the face of OOM failures, meaning it has been audited to ensure all of its code reliably deals with OOM conditions. Every single method inside the CLR that allocates memory checks to ensure that the allocation succeeded, and if it did not, it propagates the failure accordingly. In unmanaged C++, this would mean checking the return code of every single functions call, a difficult process to guarantee across an entire codebase. And furthermore, the CLR ensures that critical resources may be released without performing allocations. This should raise your confidence in the reliability of the CLR.

OOMs can occur as a result of one of many circumstances:

-

Exhaustion of the process's address space and failure to reserve additional necessary segments. On 32-bit Windows, this is only 2GB (unless the Windows /3GB switch is specified, in which case it's 3GB), and thus is a real possibility. On 64-bit machines, the address space is massive and thus virtually infinite.

-

Available physical memory (including the page-file) has been exhausted. In other words, although the address space is large enough to take more allocations, additional segments cannot be committed due to lack of physical resources.

-

In some cases, a host will reject a request for memory allocation as a result of its own policy. For example, SQL Server has a goal of using up all of the available memory on the machine, and not a byte more. If your allocation would cause it to page to disk, it will likely refuse the request and instead inject an OOM into your program.

Of course, the CLR will attempt to collect memory in most situations in order to prevent an OOM from happening, but the reality is that in some circumstances it won't succeed. The occurrence of an OOM is not nearly as catastrophic as a SO. Thus, the CLR does not fail fast for OOM, but rather throws an ordinary exception of type OutOfMemoryException. This exception unwinds the stack and can be caught and swallowed just as with any other exception. Doing so might lead to additional OOMs (and a gradually worsening environment).

The degree to which applications can reasonably react to OOMs varies greatly. For example, you can imagine exhausting address space and/or physical memory is a bad situation, a situation in which even finally blocks won't be able to run correctly (because they might allocate). Thankfully, critical finalizers might be able to if they have been written to avoid allocating memory entirely. But the situation in which some code tried to reserve a ridiculously large amount of memory doesn't indicate that anything else is wrong with the process, so code could reasonably attempt to continue executing. (For example, consider if somebody did an int[] a = new int[int.MaxValue].) Unfortunately, you can't determine which of these cases triggered the OOM.

Memory Gates

To mitigate the chance of an OOM happening midway through a set of operations, you might consider using a memory gate. A memory gate has the effect of asking the GC whether a specific amount of memory is available, and if it isn't, fails with an OutOfMemoryException. Memory isn't actually reserved, however; thus, this inquiry is subject to race conditions. You might ask for a certain amount of memory, proceed because you were told it was OK to do so, and another thread might get scheduled and request a large amount of memory, for example, in which case an OOM could then ensue. Of course, clever applications could synchronize access to a central memory gate for hot sections of code that allocate large quantities of memory.

To use a memory gate, you instantiate a new System.Runtime.MemoryFailPoint, for example:

using (MemoryFailPoint gate = new MemoryFailPoint(100)) { // Some operation that actually uses the 100MB of memory... } The constructor takes an integer representing the number of megabytes of GC heap space to ask for. The GC simply responds yes or no to the MemoryFailPoint constructor, which responds by throwing an OOM if it said no. Otherwise, the body executes.

Garbage Collection

It should be obvious by now that the memory management subsystem does much more than just collect garbage. It helps create it, too! Although GC is a general term for the entire memory management subsystem of the CLR, the primary benefit is not its smart allocation strategy — although that's a blessing in disguise — but rather that it takes care of collecting unused memory for the developer. No frees, deletes, or delete[]s are necessary. This section will walk through some of the details of how GC works inside the CLR.

When a Collection Occurs

As noted briefly a few times, a collection can occur as the result of a number of conditions. This includes the following:

-

Any of the above OOM-inducing conditions mentioned previously.

-

Exceeding the threshold for the ephermal generations segment, that is, usually 16MB. This number has been chosen to fit inside your processor's cache.

-

Some APIs like GC.Collect permit developers to manually induce the collection process. Heavy use of these APIs interferes with the natural heuristics of the GC, so relying on them in production code is not advised; in fact, it can lead to objects being unnecessarily promoted to older generations. A much better way of interacting with the GC's collection policy is to use the GC.AddMemoryPressure and RemoveMemoryPressure APIs, detailed further in Chapter 5. In addition to that, classes like HandleCollector use the GC.Collect API in a controlled manner to induce GCs for resource management purposes.

Inside the Collection Process

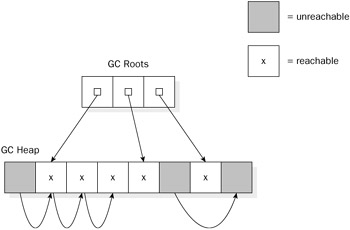

When memory must be collected, the GC knows how to determine what is and is not in use. Once it figures that out, it can get rid of unused waste. It does so by tracking a set of roots and forming a transitive closure of object references that are held by those roots. A root is an object that is directly and actively accessible to your program. This means that static variables, things that are on any current activation frames on managed threads, objects pinned via handles for unmanaged interoperability, and items stored in Thread Local Storage, for instance. Figure 3-5 demonstrates what a reachability graph conceptually looks like.

Figure 3-5: An example root-based reachability graph.

Because the roots might be shifting as the GC occurs, the first step is to pause all managed threads momentarily. The GC then recursively walks all references that those roots hold, traversing the entire reachability graph. It marks any object it touches during the process, and when it's complete, any objects on the heap that it did not visit are garbage and eligible for collection. They are marked as such, and the heap might be compacted. Managed threads are then resumed. This is often referred to as a mark and sweep collection algorithm because anything not marked during the traversal is swept away.

After performing a collection using the reachability graph shown above, the heap will have "holes" between several objects. That is, free memory will be sprinkled in between used memory. The CLR GC is, furthermore, a compacting collector, meaning that it manages fragmentation through the process of compaction — something we take a look at shortly. There are a variety of other GC algorithms; please see the "Further Reading" section for some related books.

Fragmentation and Compaction

If a collection notices that objects in between sets of other objects are dead, it compacts the heap. This is the process of sliding objects toward the bottom of the heap, eliminating all gaps in between. Sometimes objects cannot move as a result of pinning. We discuss pinning in Chapter 11, in the context of unmanaged interoperability, but in summary: it is often necessary to ensure that objects are not moved by the GC, that is, when passing pointers to GC heap objects to unmanaged code. Thus, if an object has been pinned, the GC cannot move it during a collection. Too many pinned objects can result in lots of fragmentation, which can result in unnecessary OOM conditions and increased working set. This is because although the total free memory is sufficient to satisfy an allocation not enough contiguous memory can be secured.

Collection Variants

While the above discussion painted the world as though it were managed by a single unified collection algorithm, this is not entirely the case. The CLR ships with two primary collectors: workstation and server; moreover, there are two subtly different flavors of the workstation collector.

Workstation Garbage Collection

The workstation collector (contained within mscorwks.dll) is used in all cases by default. A host may override this choice and instead use the server collector. We discuss the differences momentarily. However, on multiprocessor machines, the CLR will use a variant of the workstation collector called the concurrent collector unless this default has been overridden using configuration or hosting settings. (Note that prior to 2.0, the concurrent collector existed in mscorsvr.dll; it now lives in mscorwks.dll.)

When an ordinary collection occurs, all managed threads must be paused for the duration of the collection (as noted above). They are resumed only after collection has been completed. This includes the analysis phase, where roots and their graphs are visited, and the collection phase, where trash is swept away. But this pauses all threads of execution, permitting only the GC to make forward progress, sometimes for a noticeable period of time. The concurrent collector optimizes execution on multiprocessor machines in two primary ways:

-

Suspension and resumption of managed threads occurs multiple times during a single collection. This permits other threads of execution to make progress (including performing allocations) several times during a collection, resulting in a fairer execution of the program and better overall responsiveness.

-

GC runs on an entirely separate thread, enabling the thread that caused the collection to occur to make progress during a midway resumption of execution. Furthermore, it means the GC can perform low-priority analysis while other threads make progress. This avoids unfair favoring of threads that didn't happen to cause the GC to occur. It also means that there will be an additional GC thread in your managed process when the concurrent collector is turned on.

Note that you can opt out of the concurrent collector by default by adding the <gcConcurrent enabled=" false" /> to the <runtime /> section of your application's configuration file, for example:

<configuration> <runtime> <gcConcurrent enabled="false" /> </runtime> </configuration>

Server Garbage Collection

The server collector (contained within mscorsvr.dll) is only used if the configuration or hosting settings specifies to do so. It is optimized for server applications; these applications characteristically must deal with high throughput and less stringent working set constraints. For example, ASP.NET uses server collection to improve its throughput performance.

The server collector differs even more from the standard workstation collector than the concurrent collector does. It manages a separate small and large object heap per CPU per process. Furthermore, it has a single GC thread per CPU, each running at the highest possible priority. This enables processes to reclaim memory local to a thread in a very efficient and highly performant manner. As is the case with the nonconcurrent collector, all threads are paused for the duration of a collection.

You can turn on the concurrent collector via the <gcServer enabled="true" /> tag in the <runtime /> section of your application's configuration file, or via the CLR hosting API.

Finalization

Many objects assert ownership over resources outside of the control of the GC managed heap. Classes that hold COM references, interoperate with unmanaged code to hold on to raw chunks of memory, or perform some form of paired operation on a system-wide OS kernel object, for example, must ensure that cleanup occurs deterministically. This is ordinarily accomplished through the use of try/finally blocks to perform resource release inside the finally block. Unfortunately, developers might forget to call the corresponding release operation, for example, or the finally blocks might not run as a result of process crash (as described above). Finalization ensures that objects that own such resources have a last chance to perform cleanup before they are reclaimed by the GC.

A finalizable object is an instance of any type that overrides the Object.Finalize method. Such objects are added to a special finalization queue upon allocation. They are then moved to a ready-for-finalization queue once the GC notices that they are unreachable during collection. Note that the entire reachability graph using the finalizable object as a root must survive such that the Finalize method can reference these objects if necessary. This has the effect of promoting the finalizable object and its entire graph of reachable objects to the next generation during a collection.

There is a single finalizer thread that then walks this ready-for-finalization queue and invokes each object's Finalize method. The object can then release whatever resource it had held on to. Once the finalizer has been executed, the finalizer thread dequeues the object and moves on to the next item in the queue. That object will now be finalized during the next collection of whatever generation it now happens to live in. Note that prior to 2.0 if Finalize threw an exception, the CLR swallowed it. As noted earlier in this Chapter, as of 2.0 a Finalize method that leaks an unhandled exception will cause the process to come tumbling down.

A tad more on finalization, the Finalize method, and the IDisposable pattern is covered in Chapter 5. The GC class is also discussed in that chapter, which covers how you can deregister or reregister an object for the finalization queue.

Ordering of Finalize Methods

Finalization is unordered. This means that you make use of any other finalizable objects during execution of your object's Finalize method, you must be very careful. The object you are attempting to access might have already been finalized. Remember: there is no guaranteed ordering. If you try to call a method on it which results in an exception — which it will rightfully do if you're trying to invoke an operation on an object whose resources have been released — this could quite easily cause an unhandled exception to rip down the process.

Resurrection

An object can be made reachable again during finalization. This occurs because the Finalize method executing on the finalizer thread has access to things like static variables and Thread Local and AppDomain Local Storage. If that code happens to set one of these locations to itself or one of the objects it refers to, that object is immediately reachable by other code. And not only that, but that other object's entire graph of reachable objects also becomes reachable. This is called resurrection.

There are some valid uses of resurrection — for example object pooling — but for the most part it is a dangerous practice. This is especially true due to the lack of ordering guarantees we just spoke about. If you republish a finalizable object, you're guaranteed to lose in either direction. In one direction, it could have already been finalized. That is, your object's Finalize method is called after the other object. In this case, its state is likely in an unacceptable state to be called from the outside world. In the other direction, you might publish a finalizable object that remains in the queue to be finalized. This can introduce subtle race conditions (and ensuing security problems) where other code is calling the object at the same time its finalizer is being run!

Critical Finalization

Some hosts — such as SQL Server — will isolate components from each other using AppDomains to ensure reliability. They also rudely rip down such components with the assumption that doing so will not compromise the state of the remainder of the process. Unfortunately, finalization isn't always run to completion when an AppDomain is being shutdown; thus, if any process-wide (or machine-wide) state has been mutated, a simple Finalize method might not be sufficient to guarantee cleanup.

To ensure that any such state has been given a chance to clean up, a new construct called critical finalization has been added in 2.0. Implementing a critical finalizer is much like implementing a Finalize method. You must derive from System.Runtime.ConstrainedExecution.CriticalFinalizerObject and override its Finalize method. Hosts will treat such objects with more patience during shutdown of AppDomains. In return, you must abide by a set of constraints imposed by constrained execution regions, a topic discussed in more detail in Chapter 11.

EAN: 2147483647

Pages: 116

- Structures, Processes and Relational Mechanisms for IT Governance

- Linking the IT Balanced Scorecard to the Business Objectives at a Major Canadian Financial Group

- Technical Issues Related to IT Governance Tactics: Product Metrics, Measurements and Process Control

- Managing IT Functions

- Governance in IT Outsourcing Partnerships