9.7 Guarded Call Pattern

Sometimes asynchronous communication schemes, such as the message queuing pattern [8], do not provide a sufficiently timely response across a thread boundary. An alternative is to synchronously invoke a method of an object nominally running in another thread. This is the guarded call pattern. It is a simple pattern, although care must be taken to ensure data integrity and to avoid synchronization and deadlock problems.

9.7.1 Abstract

The message queuing pattern enforces an asynchronous rendezvous between two threads, modeled as «active» objects. In general, this approach works very well, but it means a rather slow exchange of information because the receiving thread does not process the information immediately; it processes it the next time the thread executes. This can be problematic when the synchronization between the threads is urgent (e.g., when time constraints are tight). An obvious solution is to call the method of the appropriate object in the other thread directly, but this can lead to mutual exclusion problems if the called object is currently doing something else. The guarded call pattern handles this case through the use of a mutual exclusion semaphore.

9.7.2 Problem

The problem this pattern addresses is the need for timely synchronization or data exchange between threads. In such cases, it may not be possible to wait for an asynchronous rendezvous. A synchronous rendezvous can be made more timely, but this must be done carefully to avoid data corruption and erroneous computation.

9.7.3 Pattern Structure

The guarded call pattern solves this problem by using a semaphore to guard access to the resource across the thread boundary. If another thread attempts to access the resource while it is locked, that thread is blocked and must allow the thread holding the lock to complete its execution of the operation.

This simple solution guards all the relevant operations (that is, those that access the resource) with a single mutual exclusion semaphore. The structure of the pattern is shown in Figure 9-12.

Figure 9-12. Guarded Call Pattern Structure
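The structure above can be sketched in C++ as follows. This is a minimal illustration, not the book's implementation: it assumes `std::mutex` stands in for the Mutex role, and the resource fields and the operations `addSample`, `reset`, `summary`, and `runConcurrentClients` are hypothetical. The point it shows is that every operation touching the SharedResource acquires the same single mutex.

```cpp
#include <cassert>
#include <mutex>
#include <thread>
#include <vector>

// SharedResource role: plain data with no locking of its own (fields are hypothetical).
struct SharedResource {
    long total = 0;
    long count = 0;
};

// Snapshot returned to callers.
struct Summary {
    long count;
    double average;
};

// BoundaryObject role: every operation that touches the resource
// acquires the same mutex, so calls from other threads are serialized.
class BoundaryObject {
public:
    void addSample(long value) {
        std::lock_guard<std::mutex> lock(mutex_);  // blocks while another thread holds the lock
        resource_.total += value;
        resource_.count += 1;
    }

    void reset() {
        std::lock_guard<std::mutex> lock(mutex_);
        resource_ = SharedResource{};
    }

    Summary summary() const {
        std::lock_guard<std::mutex> lock(mutex_);  // both fields read under one lock
        double avg = resource_.count == 0
            ? 0.0
            : static_cast<double>(resource_.total) / static_cast<double>(resource_.count);
        return Summary{resource_.count, avg};
    }

private:
    mutable std::mutex mutex_;  // Mutex role: one lock for all relevant operations
    SharedResource resource_;
};

// Hypothetical demo: several client threads make guarded calls concurrently.
Summary runConcurrentClients(int threads, int perThread) {
    BoundaryObject boundary;
    std::vector<std::thread> clients;
    for (int t = 0; t < threads; ++t) {
        clients.emplace_back([&boundary, perThread] {
            for (int i = 0; i < perThread; ++i) boundary.addSample(2);
        });
    }
    for (auto& c : clients) c.join();
    return boundary.summary();
}
```

Because `addSample` updates both fields inside one critical section, a concurrent `summary` call never observes a count that disagrees with the total.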

9.7.4 Collaboration Roles

9.7.5 Consequences

The guarded call pattern provides a means by which a set of services may be safely provided across a thread boundary. Even if several internal objects within the called thread share a common resource, that resource remains protected from corruption due to mutual exclusion problems. This is a synchronous rendezvous, providing a timely response unless the services are currently locked. If they are locked, the resource is still protected, but a timely response cannot be guaranteed unless analysis shows that the service is schedulable [6,7].

The situation may be even simpler than this pattern requires. If the Server objects don't interact with respect to the SharedResource, the SharedResource itself may be contained directly within the Server object. In this case, the Server objects themselves can be the BoundaryObjects, or the BoundaryObjects can participate in a façade pattern (also called an interface pattern). There is then simply one Mutex object for every Server object. This is a simpler special case of the more general pattern.

9.7.6 Implementation Strategies

Both the ClientThread and ServerThread are «active» objects. Typically, each creates the OS thread in which it runs in its constructor and destroys that thread in its destructor. Both contain objects via the composition relationship. For the most part, the «active» objects execute an event or message loop, looking for events or messages that have been queued for later asynchronous processing. Once an event or message is taken out of the queue, it is dispatched to the objects the «active» object contains via composition, by calling the appropriate operations on those objects to handle the event or message. For the synchronous rendezvous, the «active» object gives other objects visibility to its BoundaryObjects (i.e., they are in its public interface) and to their public operations.
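A minimal C++ sketch of the «active» object described above follows, under stated assumptions: `std::thread` stands in for the OS thread, queued messages are modeled as `std::function<void()>`, and the names `ServerThread`, `GuardedCounter`, `post`, and `runDemo` are hypothetical. The thread is started in the constructor and joined in the destructor, the message loop dispatches queued work to a contained part, and that same part is public so other threads can also make synchronous guarded calls on it.

```cpp
#include <cassert>
#include <condition_variable>
#include <functional>
#include <future>
#include <mutex>
#include <queue>
#include <thread>
#include <utility>

// Hypothetical BoundaryObject: its own mutex guards every operation.
class GuardedCounter {
public:
    void increment() { std::lock_guard<std::mutex> lock(m_); ++value_; }
    int value() const { std::lock_guard<std::mutex> lock(m_); return value_; }
private:
    mutable std::mutex m_;
    int value_ = 0;
};

// Hypothetical ServerThread «active» object.
class ServerThread {
public:
    GuardedCounter counter;  // public part: visible for synchronous guarded calls

    ServerThread() : worker_([this] { run(); }) {}  // OS thread created in the constructor

    ~ServerThread() {  // let the loop drain the queue, then join the thread
        {
            std::lock_guard<std::mutex> lock(qMutex_);
            stopping_ = true;
        }
        qReady_.notify_one();
        worker_.join();
    }

    // Asynchronous path: queue a message for the server's own thread.
    void post(std::function<void()> msg) {
        {
            std::lock_guard<std::mutex> lock(qMutex_);
            queue_.push(std::move(msg));
        }
        qReady_.notify_one();
    }

private:
    void run() {  // event/message loop
        for (;;) {
            std::function<void()> msg;
            {
                std::unique_lock<std::mutex> lock(qMutex_);
                qReady_.wait(lock, [this] { return stopping_ || !queue_.empty(); });
                if (queue_.empty()) return;  // stopping and nothing left to dispatch
                msg = std::move(queue_.front());
                queue_.pop();
            }
            msg();  // dispatch to the contained part, outside the queue lock
        }
    }

    std::mutex qMutex_;
    std::condition_variable qReady_;
    std::queue<std::function<void()>> queue_;
    bool stopping_ = false;
    std::thread worker_;  // declared last: everything run() touches is constructed first
};

// Hypothetical demo mixing both paths; returns the final counter value.
int runDemo() {
    std::promise<int> done;                        // declared before the server so both
    std::future<int> result = done.get_future();   // outlive the server's thread
    ServerThread server;
    for (int i = 0; i < 100; ++i)
        server.post([&server] { server.counter.increment(); });  // asynchronous
    for (int i = 0; i < 100; ++i)
        server.counter.increment();                              // synchronous guarded call
    // Queued after every earlier message, so it runs once they have all been handled.
    server.post([&server, &done] { done.set_value(server.counter.value()); });
    return result.get();
}
```

The queue's mutex protects only the message queue; it is the counter's own mutex that makes the direct `server.counter.increment()` call from another thread safe.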
When a BoundaryObject is created, it creates a Mutex object. Ports can also be used to delegate the services of internal parts and make them explicitly visible across the thread boundary; the technique works and is implemented in exactly the same way.

9.7.7 Sample Model

Figure 9-13a shows the model structure for the example. Three active objects encapsulate the semantic objects in this system. The View Thread object contains a view of the reactor temperature on the user interface, the Alarming Thread manages alarms in its own thread, and the Processing Thread manages the acquisition and filtering of the data itself. Stereotypes indicate the pattern roles.

Figure 9-13a. Guarded Call Pattern Structure

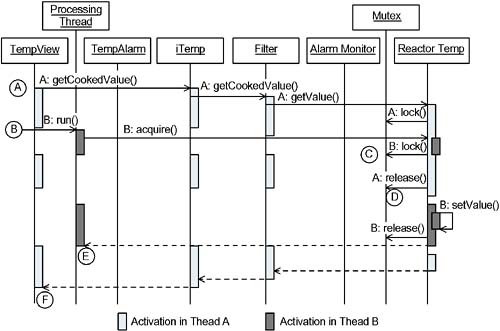
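The sample model can be approximated in C++ as below. This is a sketch under assumptions: `ReactorTemp` plays the BoundaryObject role, `std::mutex` plays the Mutex role, the `setValue`/`getValue` operations, the `TempReading` fields, and `runReactorDemo` are hypothetical, and thread priorities are omitted because standard C++ has no portable way to set them. The processing thread writes readings while the view and alarming threads read them; because each reading is copied under the lock, readers never see a half-updated pair.

```cpp
#include <atomic>
#include <cassert>
#include <mutex>
#include <thread>

// Hypothetical reading; both fields must always be written and read together.
struct TempReading {
    long sample = 0;       // sample counter
    double celsius = 0.0;  // filtered temperature
};

// ReactorTemp plays the BoundaryObject role; its mutex plays the Mutex role.
class ReactorTemp {
public:
    void setValue(long sample, double celsius) {  // called from the Processing Thread
        std::lock_guard<std::mutex> lock(mutex_);
        reading_.sample = sample;
        reading_.celsius = celsius;
    }
    TempReading getValue() const {  // called from the View and Alarming Threads
        std::lock_guard<std::mutex> lock(mutex_);
        return reading_;  // copied while the lock is held
    }
private:
    mutable std::mutex mutex_;
    TempReading reading_;
};

struct DemoResult {
    long lastSample;
    bool allConsistent;
};

DemoResult runReactorDemo(long samples) {
    ReactorTemp temp;
    std::atomic<bool> done{false};
    std::atomic<bool> consistent{true};

    // Reader body shared by the hypothetical View and Alarming threads:
    // the invariant celsius == sample * 0.5 must hold for every snapshot.
    auto reader = [&] {
        while (!done.load()) {
            TempReading r = temp.getValue();
            if (r.celsius != static_cast<double>(r.sample) * 0.5) consistent = false;
        }
    };
    std::thread view(reader);
    std::thread alarming(reader);

    std::thread processing([&] {
        for (long s = 1; s <= samples; ++s)
            temp.setValue(s, static_cast<double>(s) * 0.5);  // stand-in for acquired, filtered data
        done = true;
    });

    processing.join();
    view.join();
    alarming.join();
    return DemoResult{temp.getValue().sample, consistent.load()};
}
```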

Figure 9-13b walks through a scenario. Note that the messages use a thread prefix ("A:" or "B:") and that the activation lines on the sequence diagram are coded with different fill patterns to indicate the thread to which they belong. This is an alternative to the PAR sequence diagram operator for showing concurrent message threads; it is used here because we want to see the synchronization points between the threads clearly. Points of special interest are annotated with circled letters.

Figure 9-13b. Guarded Call Pattern Scenario

The scenario shows how the collision of two threads is managed in a thread-safe fashion. The first thread (A) starts up to get the value of the reactor temperature for display on the user interface. While that thread is being processed, the higher-priority thread (B) begins to acquire the data. However, it finds the ReactorTemp object locked, so it is suspended from execution until the Mutex is released. Once thread A releases the Mutex, the lock() operation succeeds and thread B continues until completion. Once thread B has completed, thread A can continue, returning the (old) value to the TempView object for display. The points of interest for the scenario are as follows:

