Recipe 9.7. Coordinating Site Updates and Testing

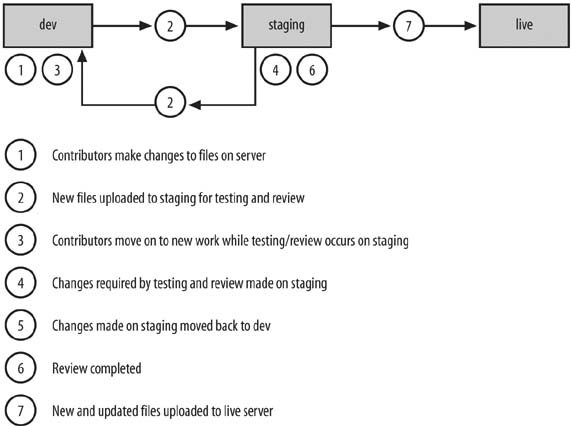

Problem

You need to manage the testing and publishing of site updates created by multiple people.

Solution

Set up a basic development-staging-live web server configuration that allows contributors and developers to continuously work on the site, while testers and clients preview changes set to go live on a separate, password-protected copy of the site.

Discussion

A three-server setup provides an extra layer of protection against inadvertent site updates and data loss, while improving the efficiency of your development and testing efforts. In a typical setup, site contributors make all their file changes to copies on the development server. When a site update is ready for testing, proofreading, and/or client review, the lead site builder (or webmaster) moves the changed files to the staging server (see Figure 9-6).

Figure 9-6. Site update process with a dev-staging-live server setup

Contributors and developers can then move on to other work on the development server without waiting for the previous update to finish testing and review. Any changes needed at this point are made to files on the staging server, and then those changed files are copied back down to the development server.

After everyone reviews and approves the update, the new files from staging are copied over to the live, or production, server.

Ideally, all three sites (or at least the staging and production sites) should be hosted on machines with identical configurations. You might even be able to keep all three on the same physical server if your web hosting provider allows you to add sub-domains to your account with their own root directories. Use that feature to create development and staging sites with host names like this:

Then copy all the files from your live site to the development and staging sites. Next, be sure to restrict FTP access to the new sites so that only a few people on your site-building team have access to the staging site files, and fewer still to the live site (keeping the hands of novice coders, marketing interns, and the like off the live site will help you sleep better at night). Also, make sure curious web surfers and search engines can't view the pre-live sites.

Use password protection, IP-based access rules, or (if you're running your own web server on your network) firewall rules that allow only certain visitors access to the development and staging sites.

The process of managing three sites instead of just one adds a significant amount of complexity to a webmaster's job. And, to be sure, the Solution discussed in this Recipe simplifies what can end up being a very complicated arrangement for sites with elaborate web applications, e-commerce functionality, or dynamic database content that must be synchronized along with static files and scripts. And without a version control system such as CVS (see Recipe 9.4 for more on CVS), you can't reconcile and merge changes made by two or more site contributors to the same file at the same time. I'll leave the exploration of those matters to the reader.

Copying files with Perl

On the other hand, copying just the files that need to be copied between development and staging, or between staging and production, can be handled with a Perl script that you can run manually from the command line, on a regular schedule with a cron job, or as needed through a web-based form. (See Recipe 1.8 for more information about using cron.) The following script copies newer files and directories from one site to another, provided both sites are on the same machine and the user running the script has read and write access to both sites' root directories:

    #! /usr/local/bin/perl
    use strict;
    use File::Find;
    use File::Copy;

The first line tells the server where to find Perl, a location that can vary depending on the server's operating system. The lines use strict, use File::Find, and use File::Copy load the Perl modules the script needs: strict enforces safer coding rules, File::Find walks directory trees, and File::Copy copies files. Next, variables are defined for the source directory ($src_dir) to scan for new files and directories and the destination directory ($dest_dir) in which to mirror them.
With Perl's umask function, it's easy to ensure that new files and directories will have the proper permissions:

    my $src_dir    = "/full/path/to/dev/site/";
    my $dest_dir   = "/full/path/to/staging/site/";
    my $file_umask = 0133;
    my $dir_umask  = 0022;
    umask $file_umask;
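The arithmetic behind those two umask values can be checked with a few lines of Perl. This is a minimal sketch: effective_mode is a hypothetical helper written only for this illustration, and it assumes the usual Unix convention that new files are requested with mode 0666 (as Perl's open does), while the script's mkdir call requests 0755.

```perl
#!/usr/bin/perl
use strict;
use warnings;

# Hypothetical helper for illustration: a new file or directory ends up with
# the requested mode minus every permission bit that is set in the umask.
sub effective_mode {
    my ($requested, $mask) = @_;
    return $requested & ~$mask;
}

my $file_umask = 0133;   # same values as in the script above
my $dir_umask  = 0022;

# New files are normally requested with mode 0666 (rw-rw-rw-).
printf "new file, file umask: %04o\n", effective_mode(0666, $file_umask);

# The script's mkdir call requests 0755 after switching to the directory umask.
printf "new dir, dir umask:   %04o\n", effective_mode(0755, $dir_umask);

# Without that switch, the file umask would strip the execute bits a
# directory needs in order to be entered.
printf "new dir, file umask:  %04o\n", effective_mode(0755, $file_umask);
```

The three lines print 0644, 0755, and 0644. The last result is a directory that nobody can enter, which is why the subroutine switches to $dir_umask before calling mkdir and back to $file_umask afterward.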

Next, the script calls File::Find's find function, which traverses each directory, subdirectory, and file in the $src_dir tree and runs the update_website subroutine on each one:

    MAIN: {
        find(\&update_website, $src_dir);
        exit;
    }

If the file in the $src_dir tree is actually a directory and it does not exist in the $dest_dir tree, the script will create the directory in the appropriate place in the $dest_dir tree. If the file in the $src_dir tree was modified more recently than the version in the $dest_dir tree, or it does not exist in the $dest_dir tree, the script will copy the file from the $src_dir tree into the corresponding place in the $dest_dir tree. If neither condition is true for a file, the script does nothing, because that part of the $dest_dir tree is up to date.

The update_website function

The subroutine starts by storing the source filename (File::Find changes into each directory as it goes, so $_ holds just the name), the source file's full path, and the destination file's full path. The substitution s/^\Q$src_dir\E/$dest_dir/ replaces the source directory at the start of the path with the destination directory; \Q...\E makes Perl treat the directory name as literal text rather than as a regular expression:

    sub update_website {
        my $src_file       = $_;
        my $src_qual_file  = $File::Find::name;
        my $dest_qual_file = $src_qual_file;
        $dest_qual_file =~ s/^\Q$src_dir\E/$dest_dir/;

Next, the subroutine skips the . and .. entries and checks whether the item exists in the destination tree. If it doesn't, and the source is a directory, the script creates it there:

        unless ($src_file =~ /^\.\.?$/) {
            unless (-e $dest_qual_file) {
                if (-d $src_file) {
                    umask $dir_umask;
                    mkdir $dest_qual_file, 0755;
                    die "Can't create directory $dest_qual_file: $!"
                        unless -e $dest_qual_file;
                    umask $file_umask;
                }

If the source is a file that doesn't exist in the destination tree, the script copies it to the analogous location there:

                else {
                    copy($src_file, $dest_qual_file)
                        || die "Can't copy file $src_qual_file to $dest_qual_file: $!\n";
                }
            }

Finally, if the file exists in both locations, the script uses Perl's -M file test to get each copy's age in days (measured from when the script started) and compares the two.
If the file in the source directory tree is newer (has a smaller -M value) than the same file in the destination directory tree, it gets copied to the destination site:

            else {
                unless (-d $src_file) {
                    my $src_file_age  = -M $src_file;
                    my $dest_file_age = -M $dest_qual_file;
                    if ($src_file_age < $dest_file_age) {
                        copy($src_file, $dest_qual_file)
                            || die "Can't copy file $src_qual_file to $dest_qual_file: $!\n";
                    }
                }
            }
        }
    }

See Also

Recipe 1.8 provides more information on using cron, while Recipe 2.7 explains how to use wget. For an explanation of methods to streamline the code on your production site, see Recipe 4.8.
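Before pushing staging files to the live site, it can help to see what the copy would change without changing anything. The sketch below is not part of the original recipe: plan_update is a hypothetical helper that applies the same rules as the script's subroutine (create missing directories, copy missing files, copy files whose source has a smaller -M age) but only returns a sorted list of planned actions. It uses File::Find's no_chdir option so full paths are available throughout, and it strips any trailing slash from both directory arguments so they line up with the names File::Find reports.

```perl
#!/usr/bin/perl
use strict;
use warnings;
use File::Find;

# Hypothetical dry-run helper: reports what the copy script would do
# for each item under $src_dir, without touching $dest_dir.
sub plan_update {
    my ($src_dir, $dest_dir) = @_;
    s{/+\z}{} for $src_dir, $dest_dir;   # File::Find reports the top dir without a trailing slash

    my @actions;
    find({
        no_chdir => 1,                   # $File::Find::name is a full path; no chdir needed
        wanted   => sub {
            my $src = $File::Find::name;
            return if $src eq $src_dir;  # skip the top directory itself

            (my $dest = $src) =~ s/^\Q$src_dir\E/$dest_dir/;

            if (!-e $dest) {
                # Missing in the destination: a directory to create or a file to copy.
                push @actions, (-d $src ? "mkdir $dest" : "copy $dest");
            }
            elsif (!-d $src) {
                my $src_age  = -M $src;  # days since modification, relative to script start
                my $dest_age = -M $dest;
                push @actions, "copy $dest" if $src_age < $dest_age;   # source is newer
            }
        },
    }, $src_dir);

    return sort @actions;
}
```

With $src_dir and $dest_dir set as in the script above, print "$_\n" for plan_update($src_dir, $dest_dir); lists the pending changes so reviewers can confirm them before the real copy runs.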
