Queuing Methods

Bandwidth has to be available for time-sensitive applications. If it isn't, packets will be dropped, and retransmission requests will be sent back to the originating host. Time-sensitive applications aren't the only traffic that needs access to bandwidth, though; for every kind of traffic, it's simply important that bandwidth is there when it's needed.

Because increasing bandwidth capacity can be expensive, it might be wise to explore alternatives such as queuing first. Cisco IOS supports several types of queuing:

- First in, first out (FIFO)
- Weighted fair
- Priority
- Custom

Each queuing algorithm solves a different kind of traffic problem, so have a clear picture of your desired result before you configure queuing on an interface.

Queuing mechanisms generally use several criteria to determine the priority of incoming or outgoing packets:

- TCP port number
- Packet size
- Protocol type
- MAC address
- Logical Link Control (LLC) Service Access Point (SAP)

After the router determines a packet's priority, it assigns the packet to a buffered queue. Each queue has a globally assigned priority and is serviced according to that priority and the algorithm in use. Each algorithm has features intended to solve specific traffic problems, and a solid understanding of those features makes it easier to decide which management technique will actually solve a given problem, so we'll explain each algorithm's features in detail.

But first, understand that you should take certain steps before you select a queuing algorithm:

- Accurately assess the network's needs. Thoroughly analyze the network to determine the types of traffic present and identify any special requirements that must be met.
- Know which protocols and traffic sessions can tolerate delay, because once you implement queuing, any traffic assigned a higher priority will take precedence and can delay or drop other traffic if necessary.
- Configure, and then test, the appropriate queuing algorithm.

FIFO Queuing

This is the most basic form of queuing; FIFO stands for first in, first out. Because FIFO has no notion of priority, it does little to help time-sensitive traffic. It simply works chronologically, sending the oldest packet in the queue out first.
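To make the limitation concrete, here is a minimal FIFO queue sketch. The buffer size, the packet names, and the tail-drop behavior when the buffer is full are assumptions for illustration; the point is that a voice packet waits behind (or is dropped behind) bulk traffic simply because it arrived later.

```python
from collections import deque


class FifoQueue:
    """A FIFO output queue: packets leave strictly in arrival order."""

    def __init__(self, capacity):
        self.capacity = capacity  # hypothetical buffer size, in packets
        self.buffer = deque()
        self.dropped = []

    def enqueue(self, packet):
        # Assumed tail drop: a packet arriving at a full buffer is discarded,
        # no matter how time-sensitive it is.
        if len(self.buffer) >= self.capacity:
            self.dropped.append(packet)
            return False
        self.buffer.append(packet)
        return True

    def dequeue(self):
        # Always send the oldest packet; FIFO has no notion of priority.
        return self.buffer.popleft() if self.buffer else None


q = FifoQueue(capacity=3)
for pkt in ["ftp-1", "ftp-2", "ftp-3", "voice-1"]:
    q.enqueue(pkt)
print([q.dequeue() for _ in range(3)])  # ['ftp-1', 'ftp-2', 'ftp-3']
print(q.dropped)                        # ['voice-1']
```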

Weighted Fair Queuing

Weighted fair queuing divides the available bandwidth equally among the conversations that traverse the interface. To decide the order in which packets leave, it refers to a timestamp taken when the last bit of each packet enters the queue.

Assigning Priorities

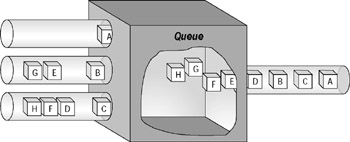

Weighted fair queuing assigns a high priority to all low-volume traffic. Figure 10.1 demonstrates how the timing mechanism for priority assignment works. The weighted fair algorithm determines whether each frame belongs to a high-volume or a low-volume conversation and forwards the low-volume packets from the queue first; the timestamps then determine the exit order of the remaining packets. In Figure 10.1, packets are labeled A through H. As the diagram shows, Packet A will be forwarded first because it's part of a low-volume conversation, even though the last bit of Packet B arrived before the last bit of the packets associated with Packet A. The remaining packets are divided between the two high-traffic conversations, and their timestamps determine the order in which they exit the queue. (Figure 10.2 gives a more detailed picture of how bandwidth is shared among conversations.)

Figure 10.1: Priority assignment using weighted fair queuing
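The behavior in Figure 10.1 can be approximated with a finish-time scheduler, the classic idea behind fair queuing. This is a simplified sketch, not Cisco's actual implementation: each packet gets a finish tag equal to the later of its last-bit arrival and the previous finish tag of its own conversation, plus its size divided by the conversation's weight, and the smallest tag is sent first. Measuring time in byte-times, the packet sizes, and the names are all assumptions.

```python
from dataclasses import dataclass


@dataclass
class Packet:
    name: str
    conversation: str
    size: int        # bytes
    last_bit: float  # when the packet's last bit entered the queue, in byte-times (assumed unit)


def wfq_order(packets, weights=None):
    """Return packet names in the order a simplified fair queue would send them.

    A packet cannot "start" before its own arrival or before the previous packet
    of the same conversation has "finished", and it finishes size / weight later.
    The smallest finish tag goes first, so short packets from light conversations
    move ahead of bulk transfers.
    """
    weights = weights or {}
    last_finish = {}
    tagged = []
    for seq, pkt in enumerate(sorted(packets, key=lambda p: p.last_bit)):
        weight = weights.get(pkt.conversation, 1.0)  # equal weights by default
        start = max(pkt.last_bit, last_finish.get(pkt.conversation, 0.0))
        finish = start + pkt.size / weight
        last_finish[pkt.conversation] = finish
        tagged.append((finish, seq, pkt.name))  # seq breaks ties by arrival order
    return [name for _, _, name in sorted(tagged)]


queue = [
    Packet("B1", "B", 1500, 0),  # two high-volume conversations, B and C
    Packet("C1", "C", 1500, 1),
    Packet("B2", "B", 1500, 2),
    Packet("C2", "C", 1500, 3),
    Packet("A1", "A", 100, 4),   # a small packet from a low-volume conversation
]
print(wfq_order(queue))  # ['A1', 'B1', 'C1', 'B2', 'C2']
```

A1 arrives last but leaves first, and the two busy conversations alternate, which mirrors the equal bandwidth split described for Figure 10.2.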

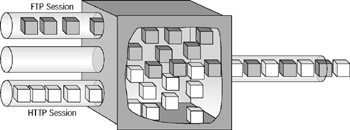

Figure 10.2: Bandwidth allocation with weighted fair queuing

Assigning Conversations

You’ve learned how priority is assigned to a packet or conversation, but it’s also important to understand the type of information the processor needs to associate a group of packets with an established conversation.

The most common elements used to establish a conversation are as follows:

- Source and destination IP addresses
- MAC addresses
- Port numbers
- Type of service
- The data-link connection identifier (DLCI) number assigned to an interface

Figure 10.2 shows two conversations. The router, using some or all of the preceding factors to determine which conversation a packet belongs to, allocates equal amounts of bandwidth for the conversations. Each of the two conversations receives half of the available bandwidth.
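A minimal sketch of the two steps described here: group packets into conversations by a key built from header fields, then split the link equally among the conversations found. The key uses only IP addresses, ports, and type of service (MAC addresses and DLCIs are left out for brevity), and the addresses, ports, and 128-kbps link speed are hypothetical.

```python
from typing import NamedTuple


class Header(NamedTuple):
    src_ip: str
    dst_ip: str
    src_port: int
    dst_port: int
    tos: int  # type of service byte


def conversation_key(h):
    # Packets that share addresses, ports, and type of service are one conversation.
    return (h.src_ip, h.dst_ip, h.src_port, h.dst_port, h.tos)


def equal_shares(headers, link_bps):
    """Split the link's bandwidth equally among the active conversations."""
    conversations = {conversation_key(h) for h in headers}
    if not conversations:
        return {}
    share = link_bps / len(conversations)
    return {key: share for key in conversations}


packets = [
    Header("10.1.1.1", "10.2.2.2", 40000, 21, 0),  # conversation 1
    Header("10.1.1.1", "10.2.2.2", 40000, 21, 0),  # same conversation
    Header("10.1.1.5", "10.2.2.9", 51000, 80, 0),  # conversation 2
]
for key, bps in equal_shares(packets, link_bps=128_000).items():
    print(key, bps)  # each of the two conversations gets 64000.0 bps
```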

Priority Queuing

Priority queuing works on a per-packet basis rather than a per-session basis and is ideal for networks that carry time-sensitive applications or protocols. When congestion occurs on low-speed interfaces, priority queuing guarantees that traffic assigned a high priority is sent first. The trade-off is that if the high-priority queue is always full and monopolizes the bandwidth, packets in the other queues will be delayed or dropped.

Assigning Priorities

The header information that priority queuing uses is either the TCP port or the protocol used to transport the data. When a packet enters the router, it's compared against a list that assigns it a priority and forwards it to the corresponding queue.
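The list lookup can be sketched as a first-match table. The entries (Telnet high, all UDP medium, FTP data low), the first-match-wins rule, and sending unmatched traffic to the normal queue are assumptions made for this example.

```python
# Hypothetical priority list, checked top to bottom: (protocol, port, queue).
# A port of None matches any port for that protocol.
PRIORITY_LIST = [
    ("tcp", 23, "high"),      # Telnet: interactive, delay-sensitive
    ("udp", None, "medium"),  # all UDP traffic
    ("tcp", 20, "low"),       # FTP data: bulk transfer
]
DEFAULT_QUEUE = "normal"      # assumed queue for traffic that matches no entry


def classify(protocol, port):
    """Return the priority queue for a packet; the first matching entry wins."""
    for list_protocol, list_port, queue in PRIORITY_LIST:
        if list_protocol == protocol and list_port in (None, port):
            return queue
    return DEFAULT_QUEUE


print(classify("tcp", 23))  # high
print(classify("tcp", 80))  # normal
```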

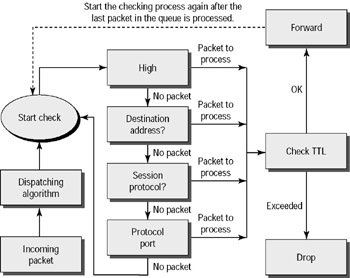

Priority queuing can assign one of four priorities to a packet (high, medium, normal, or low), and a dispatching algorithm services all four queues. Figure 10.3 illustrates how these queues are serviced: the algorithm starts with the high-priority queue and processes all of the data there. When that queue is empty, the dispatching algorithm moves down to the medium-priority queue, and so on down the priority chain, performing a cascade check of each queue before moving on. Because the algorithm always processes packets it finds in a higher-priority queue first, problems can develop: if the higher queues are continually busy with newly arriving packets, traffic in the lower queues can be neglected entirely.

Figure 10.3: Dispatching algorithm in priority queuing
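The cascade check in Figure 10.3 can be sketched as a strict-priority dispatcher that rescans from the high queue before every packet it sends. The one-packet-per-slot timing and the packet names are assumptions; the example reproduces the starvation problem described above.

```python
from collections import deque

PRIORITIES = ("high", "medium", "normal", "low")


def dispatch(queues, slots):
    """Send up to `slots` packets, rescanning from the high queue before each one."""
    sent = []
    for _ in range(slots):
        for level in PRIORITIES:  # cascade check: highest non-empty queue wins
            if queues[level]:
                sent.append(queues[level].popleft())
                break
        else:
            break  # every queue is empty
    return sent


# A new high-priority packet arrives in every transmit slot.
queues = {level: deque() for level in PRIORITIES}
queues["low"].append("low-1")
sent = []
for t in range(5):
    queues["high"].append(f"high-{t}")
    sent += dispatch(queues, 1)
print(sent)                 # ['high-0', 'high-1', 'high-2', 'high-3', 'high-4']
print(list(queues["low"]))  # ['low-1'] is still waiting: the low queue is starved
```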

Custom Queuing

Cisco's custom queuing is based on the concept of sharing bandwidth among traffic types. Instead of assigning a strict priority classification to a specific traffic or packet type, custom queuing services its queues in turn and forwards the traffic within each queue in FIFO order. Custom queuing lets you customize the amount of actual bandwidth used by a specified traffic type.

Custom queuing lets you configure virtual pipes that together stay within the limits of the physical line's capacity. Varying amounts of the total bandwidth are reserved for specific traffic types, and if a traffic type isn't fully using its reserved bandwidth, other types can use it. The configured limits take effect during periods of high utilization or when congestion on the line causes different traffic types to compete for bandwidth.
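The virtual-pipe outcome can be sketched as a fluid allocation: each traffic type first gets what it asks for, up to its reserved share, and any unused bandwidth is then lent to types that still want more in proportion to their reservations. This models the result described in the paragraph rather than custom queuing's packet-level mechanism, and the traffic types, reservations, and demands are hypothetical.

```python
def allocate(link_bps, reserved, demand):
    """Fluid model of custom queuing's virtual pipes.

    reserved: fraction of the link reserved per traffic type (assumed > 0, summing to 1)
    demand:   bandwidth each traffic type currently wants, in bps
    """
    # Each type first gets what it wants, up to its reserved share.
    alloc = {t: min(demand[t], link_bps * reserved[t]) for t in demand}
    spare = link_bps - sum(alloc.values())
    # Lend unused bandwidth to types that still want more, in proportion to
    # their reservations, until it runs out or every type is satisfied.
    while spare > 1e-6:
        hungry = [t for t in demand if demand[t] - alloc[t] > 1e-6]
        if not hungry:
            break
        total = sum(reserved[t] for t in hungry)
        given = 0.0
        for t in hungry:
            extra = min(spare * reserved[t] / total, demand[t] - alloc[t])
            alloc[t] += extra
            given += extra
        spare -= given
    return alloc


shares = {"voice": 0.2, "web": 0.5, "email": 0.3}
# Voice and e-mail are quiet, so web borrows their unused bandwidth.
print(allocate(1_000_000, shares, {"voice": 100_000, "web": 900_000, "email": 100_000}))
# {'voice': 100000, 'web': 800000.0, 'email': 100000}
```

When every type is busy, each is held to its reservation; the limits only bite under contention.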

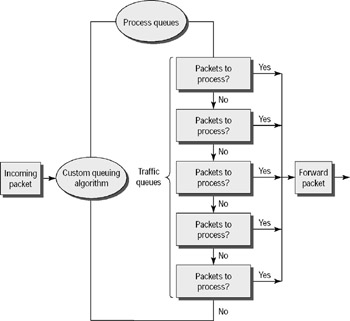

Figure 10.4 shows each queue being processed, one after the other. Once this begins, the algorithm checks the first queue, processes the data within it, then moves to the next queue. If the algorithm comes across an empty queue, it simply moves on without hesitating. The amount of data that will be forwarded is specified by the byte count for each queue, which directs the algorithm to move to the next queue once the byte count has been attained. Custom queuing permits a maximum of 16 configurable queues.

Figure 10.4: Custom queuing algorithm
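One pass of the algorithm in Figure 10.4 can be sketched as a byte-count round robin. The byte counts and packet sizes are hypothetical, and the sketch assumes packets are never split, so a queue may send somewhat more than its byte count in a round (queue 0 below sends 2,000 bytes against a 1,500-byte count).

```python
from collections import deque

MAX_QUEUES = 16  # custom queuing permits at most 16 configurable queues


def custom_queue_round(queues, byte_counts):
    """Run one round-robin pass over the queues; return the packets sent, in order.

    Each queue holds (name, size_in_bytes) tuples in FIFO order, and byte_counts[i]
    is the hypothetical byte count configured for queues[i].
    """
    if len(queues) > MAX_QUEUES:
        raise ValueError("custom queuing supports at most 16 queues")
    sent = []
    for queue, byte_count in zip(queues, byte_counts):
        sent_bytes = 0
        # Send whole packets until the byte count is attained; an empty queue
        # is skipped immediately.
        while queue and sent_bytes < byte_count:
            name, size = queue.popleft()
            sent.append(name)
            sent_bytes += size
    return sent


queues = [
    deque([("a1", 1000), ("a2", 1000), ("a3", 1000)]),
    deque(),  # empty queue: skipped
    deque([("c1", 500), ("c2", 500), ("c3", 500)]),
]
print(custom_queue_round(queues, [1500, 1500, 1000]))  # ['a1', 'a2', 'c1', 'c2']
print(custom_queue_round(queues, [1500, 1500, 1000]))  # ['a3', 'c3']
```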

Traffic Shaping

Traffic shaping is a tool available to network designers working with Cisco equipment. The basic purpose of traffic shaping is to allow an administrator to manage and control network traffic to avoid bottlenecks and meet quality of service (QoS) requirements.

Traffic shaping eliminates bottlenecks by throttling back traffic volume at the source or outbound end. It reduces source traffic to a configured bit rate and queues traffic bursts for that flow.
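Shaping to a configured bit rate while queuing bursts is commonly modeled with a token bucket. The sketch below assumes that model; the 8,000-bps rate, the 8,000-bit bucket, and the packet sizes are illustrative. Excess packets are delayed rather than dropped, which is what distinguishes shaping from policing.

```python
def shape(packets, rate_bps, bucket_bits):
    """Token-bucket shaper: return departure times for (arrival_s, size_bits) packets.

    Packets must be listed in arrival order and be no larger than the bucket.
    Traffic above the configured rate is queued (delayed), not dropped.
    """
    tokens = bucket_bits  # start with a full bucket, allowing a burst up to its size
    clock = 0.0           # time the tokens were last updated
    departures = []
    for arrival, size in packets:
        t = max(arrival, clock)  # FIFO: cannot leave before the previous packet
        # Refill tokens at the configured rate, never beyond the bucket size.
        tokens = min(bucket_bits, tokens + (t - clock) * rate_bps)
        if tokens < size:
            wait = (size - tokens) / rate_bps  # hold the packet in the shaping queue
            t += wait
            tokens = size
        tokens -= size
        clock = t
        departures.append(t)
    return departures


# A three-packet burst at t=0, shaped to 8,000 bps with an 8,000-bit bucket.
print(shape([(0.0, 8000), (0.0, 8000), (0.0, 8000)], rate_bps=8000, bucket_bits=8000))
# [0.0, 1.0, 2.0]
```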

Administrators can configure traffic shaping by using access lists to select the traffic they want to shape and then applying the access list to an interface. You can use traffic shaping with Frame Relay, ATM, SMDS, and Ethernet.

Frame Relay can use traffic shaping to throttle dynamically to the available bandwidth by using the backward explicit congestion notification (BECN) mechanism. BECN is a bit that the Frame Relay network sets in frames traveling in the opposite direction from frames that encounter a congested path.
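The feedback loop can be sketched as a rate that drops when BECNs arrive and recovers when they stop. The adjustment rule here (cut by 25 percent per interval with BECNs, raise by 25 percent per interval without them, bounded by a minimum rate and the CIR) is a hypothetical illustration, not Cisco's documented algorithm or constants.

```python
def next_rate(rate_bps, becn_seen, cir_bps, min_rate_bps, step=0.25):
    """Hypothetical adaptive-shaping rule for the next shaping interval.

    A BECN cuts the rate by `step` (never below min_rate_bps); an interval
    without BECNs raises it by `step` (never above the CIR).
    """
    if becn_seen:
        return max(min_rate_bps, rate_bps * (1 - step))
    return min(cir_bps, rate_bps * (1 + step))


rate = 64_000.0
history = []
for becn in [True, True, True, False, False]:
    rate = next_rate(rate, becn, cir_bps=64_000.0, min_rate_bps=32_000.0)
    history.append(rate)
print(history)  # [48000.0, 36000.0, 32000.0, 40000.0, 50000.0]
```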

Quality of service is an issue in networks because of the bursty nature of network traffic and the resulting possibility of buffer overflow and packet loss. In fact, buffering, more than bandwidth, is the real issue in network design. QoS tools are required to manage these buffers to minimize loss, delay, and delay variation (jitter).

Transmit buffers tend to fill to capacity in high-speed networks because data traffic is bursty. If an output buffer fills, ingress interfaces cannot place new flow traffic into it. Once the ingress buffer then fills as well, packet drops occur.

In Voice over IP (VoIP) networks, packet loss causes voice clipping and skips. If two successive voice packets are lost, voice quality begins to degrade. Using multiple queues on transmit interfaces is the only way to eliminate the potential for dropped traffic caused by buffers operating at 100 percent capacity. By separating voice and video (which are both sensitive to delays and drops) into their own queues, you can prevent flows from being dropped at the ingress interface, even if data flows are filling up the data transmit buffer.
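A toy model shows the effect of separating voice from data on a transmit interface. Every tick, a burst of five data packets arrives, followed by one voice packet, and the interface sends two packets. The buffer sizes, arrival pattern, and send rate are all assumptions. With one shared buffer, the data burst keeps it full and voice packets are dropped. With a separate voice queue that is served first, no voice packets are lost, even though the data buffer stays full.

```python
from collections import deque


def voice_drops(ticks, separate_voice_queue, buffer_pkts=8, voice_pkts=4, send_per_tick=2):
    """Count voice packets lost to full buffers in a toy transmit-interface model.

    ticks: list of per-tick arrival lists, each entry "voice" or "data".
    With separate_voice_queue=False, everything shares one FIFO buffer.
    """
    data_q, voice_q = deque(), deque()
    drops = 0
    for arrivals in ticks:
        for kind in arrivals:
            if kind == "voice" and separate_voice_queue:
                queue, limit = voice_q, voice_pkts
            else:
                queue, limit = data_q, buffer_pkts
            if len(queue) < limit:
                queue.append(kind)
            elif kind == "voice":
                drops += 1
        for _ in range(send_per_tick):
            # Voice has its own queue only when separated, and it is served first.
            if voice_q:
                voice_q.popleft()
            elif data_q:
                data_q.popleft()
    return drops


burst = [["data"] * 5 + ["voice"]] * 5  # each tick: a data burst, then one voice packet
print(voice_drops(burst, separate_voice_queue=False))  # 4
print(voice_drops(burst, separate_voice_queue=True))   # 0
```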

Priority queuing is one solution to this problem, but it has a cost. Because each queue has its own buffer space, lower-priority traffic is dropped when its queue fills, regardless of whether the VoIP traffic is using any of its queue buffers, and those dropped packets force each of these applications to send the data again. If the same scenario is instead configured with a single queue that uses multiple thresholds for congestion avoidance, the default traffic shares the entire buffer space with the VoIP traffic. Only during periods of congestion, when the entire buffer memory approaches saturation, is the lower-priority traffic (HTTP and e-mail) dropped.
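The single-queue, multiple-threshold approach can be sketched as an admission check against per-class limits on a shared buffer, similar in spirit to weighted tail drop. The class names and threshold values are hypothetical. Every class uses the same buffer while it is lightly loaded, and lower-priority classes are refused only as occupancy climbs toward saturation.

```python
# Hypothetical per-class drop thresholds, as a fraction of the shared buffer.
THRESHOLDS = {"voip": 1.0, "http": 0.6, "email": 0.4}


def admit(occupancy, capacity, traffic_class):
    """Single shared queue: accept a packet only while the buffer is below its class threshold."""
    return occupancy < capacity * THRESHOLDS[traffic_class]


for occupancy in (30, 50, 70, 100):
    admitted = [c for c in THRESHOLDS if admit(occupancy, 100, c)]
    print(occupancy, admitted)
# 30 ['voip', 'http', 'email']
# 50 ['voip', 'http']
# 70 ['voip']
# 100 []
```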
