Data Flow Elements

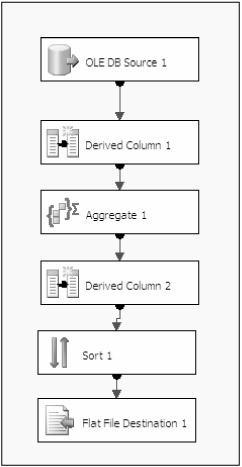

Once you create a Data Flow task, it spawns a new data flow. Just as the Controller Flow handles the main workflow of the package, the data flow handles the transformation of data. Almost anything that manipulates data falls into the data flow category. As data moves through each step of the data flow, the data changes based on what the transform does. For example in Figure 1-7, a new column is derived using the Derived Column transform, and that new column is then available to subsequent transformations or to the destination.

Figure 1-7

In this section, each of the sources, destinations, and transformations will be briefly covered. These areas are covered in much more detail in Chapters 3 and 4.

Sources

A source is where you specify the location of your source data to pull from in the data pump. Sources will generally point to the Connection Manager in SSIS. By pointing to the Connection Manager, you can reuse connections throughout your package, because you need only change the connection in one place. There are six sources altogether that can be used out of the box with SSIS:

-

OLE DB Source: Connects to nearly any OLE DB data source, such as SQL Server, Access, Oracle, or DB2, to name just a few.

-

Excel Source: Source that specializes in receiving data from Excel spreadsheets. This source also makes it easy to run SQL queries against your Excel spreadsheet to narrow the scope of the data that you wish to pass through the flow.

-

Flat File Source: Connects to a delimited or fixed-width file.

-

Raw File Source: A specialized file format that was produced by a Raw File Destination (discussed momentarily). The Raw File Source usually represents data that is in transit and is especially quick to read.

-

XML Source: Can retrieve data from an XML document.

-

Data Reader Source: The DataReader source is an ADO.NET connection much like the one you see in the .NET Framework when you use the DataReader interface in your application code to connect to a database.

Destinations

Inside the data flow, destinations accept the data from the data sources and from the transformations. The flexible architecture can send the data to nearly any OLE DB-compliant data source or to a flat file. Like sources, destinations are managed through the Connection Manager. The following destinations are available to you in SSIS:

-

Data Mining Model Training: This destination trains an Analysis Services mining model by passing in data from the data flow to the destination.

-

DataReader Destination: Allows you to expose data to other external processes, such as Reporting Services or your own .NET application. It uses the ADO.NET DataReader interface to do this.

-

Dimension Processing: Loads and processes an Analysis Services dimension. It can perform a full, update, or incremental refresh of the dimension.

-

Excel Destination: Outputs data from the data flow to an Excel spreadsheet.

-

Flat File Destination: Enables you to write data to a comma-delimited or fixed-width file.

-

OLE DB Destination: Outputs data to an OLE DB data connection like SQL Server, Oracle, or Access.

-

Partition Processing: Enables you to perform incremental, full, or update processing of an Analysis Services partition.

-

Raw File Destination: This destination outputs data that can later be used in the Raw File Source. It is a very specialized format that is very quick to output to.

-

Recordset Destination: Writes the records to an ADO record set.

-

SQL Server Destination: The destination that you use to write data to SQL Server most efficiently.

-

SQL Server Mobile Destination: Inserts data into a SQL Server running on a Pocket PC.

Transformations

Transformations are key components to the data flow that change the data to a desired format. For example, you may want your data to be sorted and aggregated. Two transformations can accomplish this task for you. The nicest thing about transformations in SSIS is that it's all done in-memory and it no longer requires elaborate scripting as in SQL Server 2000 DTS. The transformation is covered in Chapters 4 and 6. Here's a complete list of transforms:

-

Aggregate: Aggregates data from transform or source.

-

Audit: The transformation that exposes auditing information to the package, such as when the package was run and by whom.

-

Character Map: This transformation makes string data changes for you, such as changing data from lowercase to uppercase.

-

Conditional Split: Splits the data based on certain conditions being met. For example, this transformation could be instructed to send data down a different path if the State column is equal to Florida.

-

Copy Column: Adds a copy of a column to the transformation output. You can later transform the copy, keeping the original for auditing purposes.

-

Data Conversion: Converts a column's data type to another data type.

-

Data Mining Query: Performs a data-mining query against Analysis Services.

-

Derived Column: Creates a new derived column calculated from an expression.

-

Export Column: This transformation allows you to export a column from the data flow to a file. For example, you can use this transformation to write a column that contains an image to a file.

-

Fuzzy Grouping: Performs data cleansing by finding rows that are likely duplicates.

-

Fuzzy Lookup: Matches and standardizes data based on fuzzy logic. For example, this can transform the name Jon to John.

-

Import Column: Reads data from a file and adds it into a data flow.

-

Lookup: Performs a lookup on data to be used later in a transformation. For example, you can use this transformation to look up a city based on the zip code.

-

Merge: Merges two sorted data sets into a single data set in a data flow.

-

Merge Join: Merges two data sets into a single data set using a join function.

-

Multicast: Sends a copy of the data to an additional path in the workflow.

-

OLE DB Command: Executes an OLE DB command for each row in the data flow.

-

Percentage Sampling: Captures a sampling of the data from the data flow by using a percentage of the total rows in the data flow.

-

Pivot: Pivots the data on a column into a more non-relational form. Pivoting a table means that you can slice the data in multiple ways, much like in OLAP and Excel.

-

Row Count: Stores the row count from the data flow into a variable.

-

Row Sampling: Captures a sampling of the data from the data flow by using a row count of the total rows in the data flow.

-

Script Component: Uses a script to transform the data. For example, you can use this to apply specialized business logic to your data flow.

-

Slowly Changing Dimension: Coordinates the conditional insert or update of data in a slowly changing dimension. You'll learn the definition of this term and study the process in Chapter 6.

-

Sort: Sorts the data in the data flow by a given column.

-

Term Extraction: Looks up a noun or adjective in text data.

-

Term Lookup: Looks up terms extracted from text and references the value from a reference table.

-

Union All: Merges multiple data sets into a single data set.

-

Unpivot: Unpivots the data from a non-normalized format to a relational format.

EAN: 2147483647

Pages: 182