Chapter 6: Processing Analysis Services Objects with SSIS

This chapter targets the integration points between SSIS and SQL Server 2005 Analysis Services (SSAS). Business Intelligence (BI) solutions that involve SSAS cubes typically include a method for processing the objects from the data warehouse or data mart database sources into the OLAP cube structures. With the focus of Chapters 4 and 5 on handling dimension and fact table ETL, the final step in the process is to load the related SSAS objects, which, in most cases, will be the responsibility of the ETL process.

Since a big portion of SSAS integration in SSIS involves processing data and managing measure group partitions, the focus of this chapter is on processing and partition management techniques and best practices, starting with the out-of-the-box basics and followed by handling more complicated situations. The chapter concludes with a consideration of dimension change type implications with SSAS attribute relationships.

SSAS ETL Processing and Management Overview

Enterprise BI systems often have recurring ETL schedules that are predicated on the availability of source data. Often, these schedules are set to run nightly when either a source system is idle or has downtime, or when some prior batch process or precedence is complete. However, there are times when scheduling is required more or less frequently, such as when an inventory system sends data updates weekly or monthly, or on the flip-side, when data is made available several times a day. You may even have a situation that contains a diversity of processing schedule requirements, from monthly to near real-time.

The common thread in most of the enterprise ETL processes is that the load times are known. The ETL schedules kick off at either pre-defined times, or by scheduling tools that work in conjunction with other environment precedence. This can be for ETL jobs that run every five minutes to once a month. And, when a job finishes, the scheduling tool or package that coordinates execution can spawn off another process.

ETL scheduling is important in a discussion on SSAS processing because, in the majority of cases, when an Enterprise BI system involves SSAS, the processing of the SSAS objects is the responsibility of the ETL subsystem. Therefore, you must take into consideration the aspects and methods involved in it.

To be sure, a handful of BI solutions are real-time or very near real-time, meaning the transactions that are happening from the source systems are trickled through to the BI solution throughout the day as they happen. This scenario requires leveraging some of the real-time and near real-time features of SSAS, including Relational Online Analytical Process (ROLAP) storage for objects and proactive caching. These are both out of the scope of this chapter, but important in describing the overall picture of SSAS processing. You can refer to SQL Server’s online documentation on Proactive Caching for more information on near real-time processing.

SSAS Objects and Processing Basics

Before jumping into the details of SSAS processing, here’s a quick refresher on the concepts and components involved in SSAS processing.

To begin, the main types of objects in SSAS that must be processed are dimensions and partitions. If you are using the data mining features of SSAS, you will also be processing data mining objects. You may ask, “What about processing the SSAS database, cubes, and measure groups?” True, these objects can be processed, but they are just containers for subordinate objects, and when you choose to process them, you are really identifying the set of dimensions, partitions, and mining objects to process.

| Tip | Note that even though a cube doesn’t contain data itself (only its related dimensions and measure group partitions), when a cube is processed, that cube does have a script cache that is refreshed. In other words, you should process the cube object itself if you made any changes to calculations or to the MDX script. |

Dimensions

An SSAS dimension, as you would guess, maps very closely to a dimension table in the database. In fact, when you have a dimensionally structured modeled data warehouse or data mart (with an ETL process, as described in Chapters 4 and 5), then the SSAS dimensions are loaded directly from their underlying dimension tables. However, it’s not always the case that the mapping is exactly one-to-one, because at times you may need to combine multiple tables or use a single source dimension table for more than one SSAS dimension. Furthermore, an SSAS solution can be built on top of a transactional system where several source tables are involved. However, a transactionally sourced SSAS solution is limited in size and the tracking of history, and this is mostly reserved for proof-of-concept, specialized, or smaller-scale solutions.

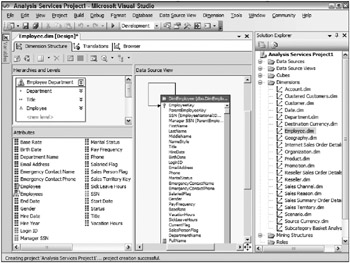

Figure 6-1 shows the Employee dimension in the AdventureWorks example SSAS solution.

Figure 6-1: Employee dimension in the AdventureWorks example SSAS solution

The main Business Intelligence Development Studio (BIDS) designer window shows the dimension editor. The Solution Explorer (on the right) shows the list of dimensions created in the SSAS solution. Dimensions contain attributes and defined hierarchies, which come into play when loading the underlying table data into the SSAS objects.

Several processing types exist for SSAS dimensions, including the following:

-

Full process- A full process involves a rebuild of the data structure underlying the SSAS dimension, which requires full rescans of the dimension table to populate the attribute’s structures, hierarchies, and indexes created for optimization. A full process is required when you make any structural change to the dimension (such as adding attributes and hierarchies, and moving or adding attribute relationships).

| Tip | When you perform a full process, the data is dropped in any associated measure group partitions because the structures are rebuilt. |

-

Process update- A process update is similar to a full process, in that the underlying dimension table is rescanned. However, the attribute, hierarchy, and index structures in the SSAS dimension are merely updated with changes and additions. This is the best choice when processing an SSAS database because it will preserve the related structures.

| Tip | Some dimension changes, including deletes, may not be allowed based on the design, which has implications in dimension ETL change types. This is discussed later in this chapter when reviewing the implications of rigid and flexible attribute relationships. |

-

Unprocess- Occasionally, you may want to unprocess your dimension to perhaps free space, or perhaps to force a full rebuild of a partition with the full processing.

-

Process data- Process data is similar to the process update, but only involves processing the data structures for the attributes and the hierarchies, excluding the attribute bitmap indexes.

-

Process index- The complement to process data is process index, which only processes the indexes. The requirement for this is that the SSAS dimension must first be processed, because process index does not require re-scanning the dimension table.

-

Process add- Process add enables you to add dimension members to the SSAS dimension without requiring the entire dimension table to be rescanned. This option is not available in the UI processing features found in SQL Server Management Studio (SSMS) or SSIS, but is a method that can be used programmatically or through XML for Analysis (XMLA), which is the industry specification message for interacting with OLAP structures.

Partitions

The second SSIS object involved in processing is the measure group partition. A measure group partition is most often sourced from a fact table when loading data from a data warehouse or mart. If loading data from an active transactional database system, your partitions would map to your primary transaction tables. But again, enterprise scale solutions are best designed with a dimensionally normalized underlying database.

When associating SSAS objects with fact tables to SSAS objects, the mapping is at the measure group level. The partitions are a subordinate object to the measure group, and provide the data storage for the measure group. Therefore, when a measure group contains more than one partition, the partition only contains a subset of the measure group’s entire data set.

As already mentioned, SSAS has the capability to drive the scheduling and processing times of the partitions; this feature is called proactive caching. Furthermore, you may have some partitions that act like database views where the structure allows queries but the queries are passed through to the database that contains the cube data. This is called relational OLAP (ROLAP), which is useful for a rapidly changing subset of data. For most of your partitions (if not all) you will be using Multidimensional Online Analytical Process (MOLAP) storage, where the underlying data is completely pulled into the partition structure and aggregates can be added.

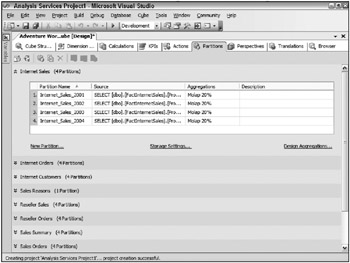

Figure 6-2 shows the cube designer in BIDS, with the Partitions tab selected, which highlights the partition management UI for SSAS.

Figure 6-2: Cube designer in BIDS

As you can see, the partitions are associated with measure groups, and, furthermore, each partition has a different name and, although not shown, also a different underlying query to keep the partition data separate from one another.

When processing partitions, the following options are available:

-

Process full- A process full for partitions is equivalent to both the process update and process full for dimensions. The underlying partition’s data is rescanned and the partition is rebuilt with any changes and updates. When process full is complete (or the transaction that it is contained in), the new data will be made available, and during processing, the old data is still online.

-

Incremental process- An incremental process is the equivalent of process add, where data can be added to the partition without the need to rebuild it. However, process add does not update any data currently in the partitions; it only brings in new underlying fact rows. In other words, if you have a fact record that is updated, you will need to perform a process full. Alternatively, you could use a change-record approach to offset the original value in the partition.

-

Unprocess- Unprocess drops the data, aggregates, and indexes from the partition.

-

Process data- Process data, just like the equivalent on the dimension side, only loads the detailed data into the partition’s MOLAP structure without processing the indexes or aggregates. Since ROLAP and Hybrid Online Analytical Processing (HOLAP) keep data in the underlying database, this only applies to MOLAP structures.

-

Process index- Process index processes both the bitmap indexes and the aggregation, provided that at least the partition’s core data is already loaded. In other words, the process index does not need to access the underlying table data.

Mining Structures

When processing data mining objects in SSAS, you are really dealing with processing mining structures and mining models, which are very different in regard to what the processing is doing.

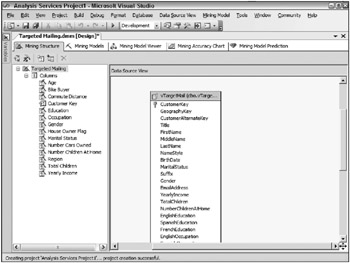

The mining structure is the base organization of data that the mining models are built upon. Mining structures define the organization of data that will be mined (hence, structure), as well as the training data that give the mining models the ability to perform predictions and associations. Figure 6-3 highlights the Targeted Mailing mining structure in SSAS, which contains a series of columns that will be used in the mining.

Figure 6-3: Targeted Mailing mining structure in SSAS

When processing the mining structures in SSAS, what you are doing is loading the training data into the structure so that the mining models can perform their analysis. When you process the mining models, you are applying the chosen mining model algorithms to the content of the mining structures.

Both mining structures and mining models have only two general processing types: process full (called process structure for mining structures) and unprocess (called process clear for mining structures). As you would expect, a process full rebuilds the structures or models. However, be aware that a process full on a mining structure does not automatically perform a process full on the associated mining models; therefore, if you process a mining structure, you must then process the mining models created on top of that structure.

Methods to Process SSAS Objects Through SSIS

Here’s where it starts to come together. When you have an SSAS solution involved in your BI solution, just what are the different methods that you can use to process these related objects in your SSIS-based ETL process?

There are several, which involve leveraging different aspects of SSIS in the data flow and control flow:

-

Using the control flow object Analysis Services Processing Task is the most straightforward approach, and is discussed in the next section. This allows any SSAS objects (that involve processing) and their processing method to be selected. A few drawbacks are listed in the next section.

-

Although the Analysis Services Execute DDL Task may sound as if it only allows objects to be modified, created, or deleted, its functionality goes way beyond Data Definition Language (DDL). This task will run an SSAS XMLA script. XMLA includes the ability not just to run DDL, but also query and process. Therefore, this task will become very useful for SSAS processing because the XMLA can be modified in an SSIS package before it is executed.

-

The data flow in SSIS includes two destinations: the Dimension Processing destination and the Partition Processing destination. These allow data directly from the pipeline to be pushed into either an SSAS dimension or SSAS partition, respectively. Unlike all the other SSIS approaches discussed here, this is the only approach where data is pushed directly to SSAS. The other approaches essentially tell SSAS to start pulling the data from a data source. Like the other SSIS approaches, this approach is described later in this chapter.

-

The programming API for SSAS, called Analysis Management Objects (AMO), allows the interface into the SSAS object model, and can leverage the processing methods to process any of the SSAS objects described. To use AMO for processing, you must leverage either the Script Task in the control flow, or the Script Component in the data flow.

-

A final mainline approach involves using the Execute Command Task to call the ASCMD executable that comes with SSAS. This executable can run Multidimensional Expressions (MDX), Data Mining Expressions (DMX), and XMLA, but also contains some command line switches to make your processing more dynamic.

When it comes down to it, your situation may use a combination of approaches as the needs arrive, or even a one-off derivative of these choices.

Creating and Modifying Partitions

When discussing SSIS integration with SSAS, just reviewing partition processing is not the complete story. Any enterprise BI solution involving SSAS almost always requires more than one partition per measure group for data management, processing, and performance reasons.

In other words, in your ETL, you will often have to create new partitions before processing them. The easy way out may be to create several partitions a couple years out into the future. Just don’t give them your phone number if you leave the project or organization, because you might get a call in two years asking why the processing is breaking!

To create and manage SSAS partitions within an SSIS process, the methods available are merely a subset of the processing options:

-

The Execute Analysis Services DDL Task is the most natural choice for managing and creating partitions because these are DDL-type operations, and XMLA provides all the needed operations to handle partition operations.

-

Since AMO allows objects to be created, modified, and deleted, it can be used with the scripting features in SSIS, the Script Task and Script Component.

-

Finally, the ASCMD.exe executable also provides partition-management functionality since it can run XMLA code and handle parameterization within the XMLA, therefore allowing partitions to be managed. Within SSIS, the ASCMD is run from an Execute Process Task.

EAN: 2147483647

Pages: 111