Chapter 18: Scyld Beowulf

Walt Ligon and Dan Stanzione

The first Beowulf, developed at NASA Goddard Space Flight Center [107], was billed as a "Giga-ops workstation." The first and most important part of that name was the performance (giga-ops), but the second part was that the machine was a workstation. In the minds of its creators, a Beowulf was to be a single computer used to solve large problems quickly. The implementation, of course, was quite different. Each node in a Beowulf was, in reality, a distinct computer system running its own copy of the operating system independently of the other nodes. The Beowulf architecture supported the notion of a single computer in that one node was connected to the external network, there was often a network file system to provide a common storage area, and there was software for running programs across the nodes. This software, typically PVM [43] or an implementation of MPI [48], creates a virtual parallel computer that allows the programmer to create and manage processes on the various nodes. It is not, however, integrated with the operating system; it does little or nothing to assist in system configuration and management; and it is not well suited to managing processing resources on a system-wide basis. This chapter describes Scyld, a system designed to provide a system-wide view of a Beowulf cluster.

18.1 Introduction

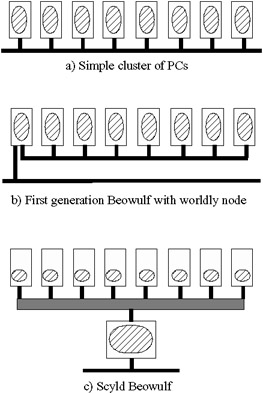

Early on, the ideal was to have a single system image: cooperation between the nodes of a Beowulf at the operating system level that eases not only programming across the nodes but all aspects of interacting with the machine, including configuration and management tasks. The first attempts at this developed a global process ID space, so that a process running anywhere on the Beowulf could be uniquely identified and the node on which it executes could be determined. This mechanism was implemented for the Linux kernel but proved to be of limited value. A more complete implementation later emerged that extended the process ID space of the master node of the machine, including all aspects of process control and management. The bproc process management system included fast creation and migration of processes across nodes, maintained information on remote processes for local reporting, included signal delivery services, and continued the abstraction of the single-image process space to processes subsequently created on the remote nodes. Later versions included the ability to control access rights for creating processes on remote nodes.

With bproc process management in place, the next logical step is to strip the nodes down to a bare minimum of processes and services and let the master node start and manage all other processes using bproc. This quickly reduces the processes run directly on each compute node to a bproc daemon and a few other key processes. Once the runtime image has been reduced in this way, the final step is to move the copy of the runtime image off the node completely so that each node boots from the master. Because the runtime image is very small, this is feasible even for a fairly large number of nodes. The resulting system provides a single system image that eases configuration (all of it is stored on the master node and loaded onto the compute nodes at boot time), management of the running system (almost everything is visible from the master node), and programming (the programming model is the same as on the original Beowulf).

Figure 18.1: Evolution of Beowulf System Image.

The resulting single system image approach has been developed and marketed by Scyld Computing, Inc. as Scyld Beowulf. Scyld Beowulf is a complete Linux distribution based on Red Hat Linux with modified installation scripts, a Scyld-enabled kernel, and the rest of the tools needed to implement the system. The Scyld CD is booted to install the master node. Installation scripts configure the private network and set up the services that boot the nodes. Once installed, the master node can make a CD or floppy that boots the nodes, or the nodes can boot using PXE. Nodes boot a minimal kernel, connect to the server, and then reboot from the server. Facilities are provided to install a boot area on the local hard disk of each node so that subsequent boots do not require the CD or a floppy. The boot kernel is used only to start the system and thus does not need to be changed even if the desired runtime kernel configuration changes. Instead, the node kernel is updated on the server and the nodes are rebooted, after which they are ready to go. Utilities are provided to manage the nodes, determine their status, start and monitor processes, and even control access rights to the nodes.

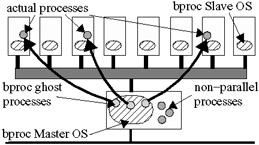

18.1.1 Process Management with Bproc

The heart of Scyld Beowulf is the bproc process management facility. On the surface, bproc is just another facility for starting remote processes, like rsh or ssh. In reality, bproc is a sophisticated tool for migrating processes to remote nodes while maintaining a centralized locus of control. The principal function in bproc is bproc_move(), which migrates a process from the master to a remote node. This function is built upon the VMADump facility, a library for copying and restoring the complete virtual address space of a process. Essentially, VMADump copies the virtual address space on the master node, the copy is sent to the remote node, a new process is started there, and VMADump then restores the address space in this new process. The original process does not go away but becomes a "ghost process." This ghost process stands in on the master node as a placeholder of sorts for the remote process. It is just like a regular process, except that it has no memory space and no open files. In implementation, ghost processes behave like Linux kernel threads: they can sleep or run as needed, and they can catch signals (including SIGKILL) and forward them to the running process. They differ from regular threads in that they inherit a number of process statistics, such as CPU time used, from the remote process. For the user on the master node, the ghost process is the remote process.

Figure 18.2: Migration of processes using bproc.
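The migration steps and the role of the ghost process can be illustrated with a small toy model. This is only a sketch and does not use the bproc library or VMADump: the `ToyCluster` and `Process` classes and their methods are hypothetical names invented for illustration, the address space is represented by a byte string, and the remote process is stored under the master's process ID purely to keep the model simple.

```python
import signal
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class Process:
    pid: int
    memory: Optional[bytes]                 # stand-in for the virtual address space
    open_files: List[str] = field(default_factory=list)
    cpu_seconds: float = 0.0
    received: List[int] = field(default_factory=list)  # signals delivered here
    remote: Optional["Process"] = None      # set only when this entry is a ghost


class ToyCluster:
    """Hypothetical model: one master process table plus per-node tables."""

    def __init__(self, node_count: int) -> None:
        self.master: Dict[int, Process] = {}
        self.nodes: List[Dict[int, Process]] = [{} for _ in range(node_count)]

    def spawn(self, pid: int, memory: bytes, open_files: List[str]) -> None:
        self.master[pid] = Process(pid, memory, list(open_files))

    def move(self, pid: int, node: int) -> None:
        proc = self.master[pid]
        image = proc.memory                 # 1. copy the address space on the master
        remote = Process(pid, image)        # 2-3. start a process on the node, restore into it
        self.nodes[node][pid] = remote      #      (toy simplification: files are not carried over)
        proc.memory = None                  # 4. the original stays behind as a ghost:
        proc.open_files = []                #    no memory space and no open files
        proc.remote = remote

    def send_signal(self, pid: int, signum: int) -> None:
        proc = self.master[pid]
        target = proc.remote if proc.remote is not None else proc
        target.received.append(signum)      # a ghost forwards signals to the running process

    def cpu_time(self, pid: int) -> float:
        proc = self.master[pid]
        source = proc.remote if proc.remote is not None else proc
        return source.cpu_seconds           # a ghost reports the remote process's statistics


cluster = ToyCluster(node_count=2)
cluster.spawn(100, b"program state", ["input.dat"])
cluster.move(100, node=1)
cluster.send_signal(100, signal.SIGTERM)
```

After the call to `move`, the entry for process 100 in the master's table has no memory and no open files, yet signals sent to it reach the copy running on node 1, which is the sense in which the ghost process "is" the remote process for a user on the master.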

18.1.2 Node Management with Beoboot

The key to managing nodes with Scyld Beowulf lies in the fact that the nodes have no permanent state other than a simple boot loader. All of the node configuration is maintained on the master node and downloaded to the compute nodes when they boot with beoboot. The beoboot utilities, along with the beoserv daemon, which runs on the master node, allow the compute node configuration to be tuned as needed. The Scyld Beowulf software allows the kernel to be configured, startup scripts to be adjusted, and, most importantly, shared libraries to be managed. Since all processes that run on the compute nodes of a Scyld Beowulf machine are transferred from the master node, any libraries used by the executables must be either statically linked or present on the compute node. Since this can be a rather extreme requirement, bproc will transfer any shared library linked to a migrated process along with the process itself if the shared library is not installed on the target compute node. This solves a potential problem with running applications but may not be as efficient as it could be. Thus, the beoboot system allows the compute nodes to be configured with the shared libraries that are most likely to be used. This increases the size of the boot image, and hence the boot time, but reduces the size of the typical process image and the time to start an application.
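The tradeoff between boot image size and process startup cost can be made concrete with a short calculation. This is a sketch under stated assumptions, not a description of how beoboot or bproc account for sizes: the function names are hypothetical, and every library name and byte count below is made up for illustration.

```python
from typing import Dict, Set


def missing_libraries(linked: Set[str], preinstalled: Set[str]) -> Set[str]:
    """Libraries that must travel with the process because the node lacks them."""
    return linked - preinstalled


def start_payload(process_bytes: int, lib_sizes: Dict[str, int],
                  preinstalled: Set[str]) -> int:
    """Bytes sent to a node when a process starts: its image plus missing libraries."""
    missing = missing_libraries(set(lib_sizes), preinstalled)
    return process_bytes + sum(lib_sizes[name] for name in missing)


def boot_image_size(base_bytes: int, lib_sizes: Dict[str, int],
                    preinstalled: Set[str]) -> int:
    """Bytes sent to a node at boot: the base image plus the preinstalled libraries."""
    return base_bytes + sum(lib_sizes[name] for name in preinstalled)


# Hypothetical sizes in bytes, chosen only to make the arithmetic easy to follow.
LIB_SIZES = {"libc.so.6": 1_200_000, "libm.so.6": 500_000, "libmpi.so": 800_000}
PROCESS_BYTES = 300_000
BASE_BOOT_BYTES = 4_000_000

for preinstalled in (set(), {"libc.so.6", "libm.so.6"}):
    print(sorted(preinstalled),
          boot_image_size(BASE_BOOT_BYTES, LIB_SIZES, preinstalled),
          start_payload(PROCESS_BYTES, LIB_SIZES, preinstalled))
```

With these made-up numbers, preinstalling two libraries grows the boot image from 4,000,000 to 5,700,000 bytes but shrinks each process start from 2,800,000 to 1,100,000 bytes. The boot cost is paid once per node boot, while the startup saving is collected every time a process is migrated.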
