2 Human-computer dialogue

2.1 Various approaches

There are various points of view concerning modelling and implementation of HCD systems [3] .

Structural approaches assert the existence of an interaction structure, built on the regularity of the exchanges that appear in a dialogue. According to these approaches, this structure can be determined a priori and is fixed once and for all (see [SAD 99] for a review of these approaches).

Differential approaches consider a dialogue as the realisation of one or more communicative acts. Based on the principle that to communicate is to act [AUSTIN, 1962], they start from the idea that communicative actions, like classical actions, are justified by goals and are planned accordingly. In particular, these goals relate to changing the mental states of the interlocutors, represented in terms of mental attitudes such as knowledge, intention and uncertainty. These approaches derive dialogue from more general models of action and of mental attitudes (see [SAD 99] for a range of these approaches).
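The idea that a communicative act is justified by a goal and changes the interlocutors' mental states can be illustrated with a minimal sketch. Everything in it is an assumption made for illustration: the `MentalState` and `Inform` classes, the `plan` function and the `hearer_knows(...)` goal notation are hypothetical and are not taken from the models cited above, which rely on far richer logics of mental attitudes.

```python
from dataclasses import dataclass, field


@dataclass
class MentalState:
    """Hypothetical, highly simplified mental state of one interlocutor."""
    knowledge: set = field(default_factory=set)   # propositions held as known
    intentions: set = field(default_factory=set)  # goals the agent wants to achieve


@dataclass(frozen=True)
class Inform:
    """Communicative act: the speaker tells the hearer that `proposition` holds."""
    proposition: str

    def goal(self) -> str:
        # Illustrative notation for "the hearer knows the proposition".
        return f"hearer_knows({self.proposition})"

    def is_justified(self, speaker: MentalState, hearer_model: MentalState) -> bool:
        # The act is justified when the speaker has the goal, knows the
        # proposition, and believes the hearer does not know it yet.
        return (
            self.goal() in speaker.intentions
            and self.proposition in speaker.knowledge
            and self.proposition not in hearer_model.knowledge
        )

    def perform(self, speaker: MentalState, hearer_model: MentalState) -> None:
        # Effect: the modelled mental state of the hearer changes and the
        # speaker's goal is discharged.
        hearer_model.knowledge.add(self.proposition)
        speaker.intentions.discard(self.goal())


def plan(speaker: MentalState, hearer_model: MentalState, candidate_acts: list) -> list:
    """Keep only the acts that the speaker's current goals justify."""
    return [act for act in candidate_acts if act.is_justified(speaker, hearer_model)]
```

In this sketch, an `Inform` act is selected only while the corresponding goal is pending; once performed, the hearer model is updated and the same act is no longer justified.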

Rational communicating agents fall under this approach, while insisting on the view that natural and user-friendly communication is an intrinsically emergent aspect of intelligent behaviour (see [COH 90], [SAD 91], [COH 94] for the primary works and [SAD 99] for a more exhaustive overview). This approach makes the problem of designing flexible and cooperative interfaces overlap with that of designing intelligent artificial agents. Flexibility takes the form of an unconstrained dialogue in which the user can move freely through the interaction and deviate from the conventional course of a conversation, for example to signal a problem to the system so that it can remedy it. Cooperation appears in many forms: reaction to requests, adoption of the user's intentions, sincerity of the system, relevance of the answers, etc.

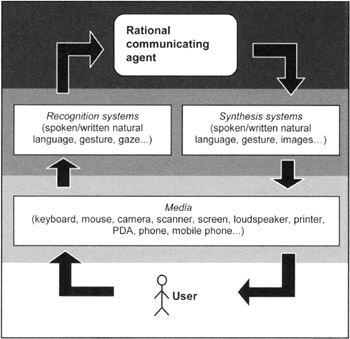

The rational communicating agent constitutes the core of the human-computer dialogue system. To establish the link with the user, the agent, which is nothing other than a program, uses a number of interfaces made up of more or less physical and tangible communicating objects.

Figure 3.1 illustrates this with several layers of communicating objects. The top layer, that of the agent, communicates with the transition layer, which is made up of recognition and synthesis systems. This layer is in turn connected to a lower layer that represents the physical media of interaction (such as peripherals and physical communicating objects). The last layer is embodied by the user, since he/she can also be seen as a communicating object.

Figure 3.1: Layered model of communicative objects involved in a multimodal human-computer dialogue
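The layered organisation described for Figure 3.1 can be sketched as a stack that every signal crosses in both directions. This is only an illustration under stated assumptions: the `Layer` class, the `agent` function and the string transformations are hypothetical placeholders standing in for real recognition, synthesis and device components.

```python
from typing import Callable, List


class Layer:
    """One layer of communicating objects (illustrative, not from the source)."""

    def __init__(self, name: str,
                 upward: Callable[[str], str],
                 downward: Callable[[str], str]) -> None:
        self.name = name
        self.upward = upward      # applied to signals travelling from user to agent
        self.downward = downward  # applied to signals travelling from agent to user


# Placeholder transformations: each layer simply wraps the signal it receives.
transition = Layer("recognition and synthesis",
                   upward=lambda s: f"recognised({s})",
                   downward=lambda s: f"synthesised({s})")
physical = Layer("physical media",
                 upward=lambda s: f"captured({s})",
                 downward=lambda s: f"rendered({s})")

# Intermediate layers listed from the agent side (top) to the user side (bottom).
STACK: List[Layer] = [transition, physical]


def agent(message: str) -> str:
    """Stand-in for the rational agent at the top of the stack."""
    return f"reply_to[{message}]"


def user_to_agent(signal: str) -> str:
    # Input from the user crosses the layers from bottom to top.
    for layer in reversed(STACK):
        signal = layer.upward(signal)
    return signal


def agent_to_user(signal: str) -> str:
    # Output from the agent crosses the layers from top to bottom.
    for layer in STACK:
        signal = layer.downward(signal)
    return signal


def exchange(user_signal: str) -> str:
    """One full turn: user input goes up, the agent's answer comes back down."""
    return agent_to_user(agent(user_to_agent(user_signal)))
```

The point of the sketch is that the agent never touches the physical media directly: it only sees what the transition layer delivers, and its answers reach the user only after being passed back down through the same layers.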

2.2 Multimodal human-computer dialogue

The first HCD systems made it possible to dialogue in written natural language only, for three major reasons.

When those systems appeared, interaction devices were limited, typically to a keyboard and an alphanumeric screen.

It was thought that natural language was the prevalent means of dialogue, and the most effective way to exchange information and to understand one another.

Since Turing ([TUR 50]), natural language had been seen as the ultimate demonstration of intelligence.

It is now well known that this view is mistaken. Many other dimensions come into play during interactions and dialogue, and natural language in fact constitutes only one component of dialogue. Gestures, postures, gaze, facial expressions, prosodic cues, affects, cultural dimensions, micro-social proxemics and others are just as important [COS 97].

For example, gestures are involved in thinking as much as language is [MAC NEIL, 1992]. They can illustrate mental images within the speech scene, which language cannot always do in a satisfying manner [4]. In other cases, they make it possible to replace word groups and/or word traits [5], thus distributing the message across two communication modalities (natural language and gestures).

New technologies bring new media of communication and open the way to other modalities of interaction with the computer. Thus, to the old (but still useful) keyboards, mice and screens are added voice recognition, gesture recognition, gaze tracking, haptic devices (touch screens, data gloves, etc.), natural language generation, graphic and image synthesis, voice synthesis, talking faces and virtual clones (see [BEN 98] and [LEM 01] for a broad range of these systems); it is already possible to convey fragrances on the WWW!

In order to build HCD systems that are more user-friendly and more natural to use, much work over the past ten years has tried to exploit these new technological possibilities to design multimodal HCD.

The stakes are numerous. The user should be able to converse naturally, using the modalities he or she wishes [6], and to switch from one modality to another (transmodality). The emotions expressed by the prosody of the user's voice or by his or her facial expressions should be understood and taken into account. Symmetrically, the system is expected to answer using the best modalities: the selected modalities should be the most effective for the type of information to convey and those preferred by the user. The emotional dimension should also be present (see Chapter 5), etc.
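The output-side requirement, choosing modalities that are both effective for the information to convey and preferred by the user, can be sketched as a simple weighted ranking. The table of effectiveness scores, the modality names, the `select_modalities` function and the weighting scheme are all assumptions made for illustration; the emotional dimension is deliberately left out of this sketch.

```python
from typing import Dict, List

# Hypothetical effectiveness scores (0 to 1) of each output modality
# for each type of information; the values are invented for illustration.
EFFECTIVENESS: Dict[str, Dict[str, float]] = {
    "route": {"graphics": 0.9, "speech": 0.4, "text": 0.5},
    "confirmation": {"graphics": 0.2, "speech": 0.9, "text": 0.7},
}


def select_modalities(info_type: str,
                      user_preferences: Dict[str, float],
                      max_modalities: int = 2,
                      preference_weight: float = 0.5) -> List[str]:
    """Rank output modalities by a weighted mix of effectiveness and user preference."""
    scores = EFFECTIVENESS[info_type]

    def combined(modality: str) -> float:
        # A modality the user did not mention gets a neutral preference of 0.
        preference = user_preferences.get(modality, 0.0)
        return (1 - preference_weight) * scores[modality] + preference_weight * preference

    ranked = sorted(scores, key=combined, reverse=True)
    return ranked[:max_modalities]
```

With no stated preference, the ranking follows effectiveness alone; a strong user preference for speech moves speech ahead of graphics even for route information, which reflects the trade-off between the two criteria named in the text.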

[3] Aware of how cumbersome the expression "a computer system whose human-computer interface is dialoguing" is, we will from now on use, even if it is a misuse of language, "dialogue system" or "human-computer dialogue system".

[4] These gestures are called illustrative gestures.

[5] These gestures can be called either illustrative gestures or emblematic gestures (see [COS 97] for a survey on communicative gestures).

[6] See [BEL 95] for an exhaustive definition of the various types of multimodalities.
