4.5 Multicast routing with PIM

Protocol-Independent Multicast (PIM) is a relatively new IP multicast protocol that works with IGMP and with existing unicast routing protocols, such as OSPF, RIP, IS-IS, BGP, and Cisco's IGRP. PIM was developed by the IETF IDMR Working Group and defined in two Internet drafts entitled "Protocol-Independent Multicast (PIM): Motivation and Architecture" and "Protocol-Independent Multicast (PIM): Protocol Specification." As with DVMRP, PIM is independent of any underlying unicast routing protocol (unlike MOSPF, which relies on OSPF). PIMv1 uses the IGMP all-routers multicast group destination address 224.0.0.2, whereas PIMv2 has its own dedicated multicast address, 224.0.0.13. Generally, PIM packet types use a TTL of one to prevent forwarding.

PIM provides design flexibility and resolves potential design scalability problems by offering two operational modes for multicast distribution: dense mode (PIM-DM) and sparse mode (PIM-SM), specified in [19].

The terms dense and sparse refer to the density and proximity of group members. For example, a dense population of group members could be situated on an enterprise LAN. Here, many users on different subnets may be interested in using the same multicast application, so there is a high probability that all subnets will require the same multicast feed. In contrast, a sparse population may comprise a relatively small number of group members, widely distributed over a disparate network of wide area links. In this case, only a small subset of the available wide area links will require multicast service for any particular group. Clearly, these two examples are extremes, and some networks will fall between them. PIM-DM and PIM-SM are described in the following text.

4.5.1 Dense-mode PIM

Dense-mode PIM (PIM-DM) is currently the subject of an Internet draft [20]. It uses reverse path forwarding (described in section 4.1.6) and in many ways is similar to its predecessor, DVMRP. PIM-DM is, however, simpler than DVMRP in that it does not build routing tables of its own; it relies on whatever unicast routing table is already present for its reverse path checks and is independent of the unicast routing protocol that produced that table. PIM-DM simply forwards multicast packets out of all interfaces (except the receive interface) until explicit pruning occurs. In effect, PIM-DM floods the network and then prunes back specific branches later, based on multicast group member information. DVMRP, by contrast, uses the parent-child relationship and split horizon to recognize downstream routers, as described earlier. Dense mode is most useful when the following conditions occur:

- Senders and receivers are in close proximity to one another.
- There are few senders and many receivers.
- The volume of multicast traffic is high.
- The stream of multicast traffic is constant.

PIM-DM is effective, for example, in a LAN TV multicast environment, because it is likely that there will be a group member on each subnet. Flooding the network is effective, because little pruning is necessary. Note that PIM-DM does not support tunnels.

Operations

When a multicast source starts transmitting, PIM-DM assumes that every downstream system is potentially a group member. Multicast datagrams are, therefore, flooded to all areas of the network. If some areas of the network do not have group members, PIM-DM prunes off the forwarding branch by setting up prune state, which contains source and group address information. The prune state has an associated timer; when the timer expires, the branch reverts to forward state and data again flows down the previously pruned branch. When a new member appears in a pruned area, a router can graft toward the source for the group, turning the pruned branch back into forward state without waiting for the timer. The forwarding branches form a tree rooted at the source leading to all members of the group. This tree is called a source-rooted tree.
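The prune, timer expiry, and graft behavior described above can be sketched as a small state model. This is a minimal illustration, not a protocol implementation: the class name `DenseModeEntry`, the interface names, and the `PRUNE_HOLD_TIME` value are all assumptions made for the example.

```python
from dataclasses import dataclass, field

# Illustrative prune lifetime in seconds (an assumption, not a protocol constant).
PRUNE_HOLD_TIME = 180.0


@dataclass
class DenseModeEntry:
    """Hypothetical per-(source, group) state held by one PIM-DM router."""
    source: str
    group: str
    downstream: set                      # downstream interface names
    pruned_until: dict = field(default_factory=dict)  # interface -> expiry time

    def prune(self, interface, now):
        # A prune installs prune state with an associated timer.
        self.pruned_until[interface] = now + PRUNE_HOLD_TIME

    def graft(self, interface):
        # A graft removes prune state at once, without waiting for the timer.
        self.pruned_until.pop(interface, None)

    def forwarding_interfaces(self, now):
        # Expired prune state reverts to forward state.
        for interface in [i for i, t in self.pruned_until.items() if t <= now]:
            del self.pruned_until[interface]
        return sorted(self.downstream - set(self.pruned_until))


entry = DenseModeEntry("S", "G1", {"eth1", "eth2"})
entry.prune("eth2", now=0.0)
print(entry.forwarding_interfaces(now=10.0))   # eth2 is pruned
print(entry.forwarding_interfaces(now=200.0))  # prune timer has expired
```

Because expired prune state silently returns to forwarding, a branch with no members is flooded again after each timeout and must be pruned again.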

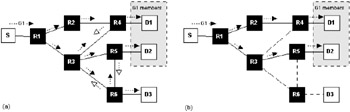

In the example network shown in Figure 4.9(a), host S transmits multicast packets destined for multicast group 1 (G1). Router R1 receives this traffic, duplicates each packet, and then forwards the copies out of all downstream interfaces to R2 and R3. In the same way, routers R2 and R3 duplicate these packets and forward them to routers R4, R5, and R6. Initially, multicasts are delivered to all hosts via flooding; pruning is then used to optimize delivery. Host D1 is a member of G1, so R5 does not send a prune message. Host D2 is also a member of G1, but notice that R4 receives multicast traffic from both R2 and R3, so R4 prunes back one of these branches. Since host D3 is not a member of G1, R6 also prunes back its feeds. The resulting pruned tree is shown in Figure 4.9(b). Note that this is a logical tree for a specific multicast group. With many multicast sources there are likely to be many overlapping logical trees.

Figure 4.9: PIM dense-mode operation. (a) Shows multicast flooding for multicast group G1 and reverse pruning (either because G1 multicast is not required or duplicate sources are detected). (b) The resulting pruned multicast tree for group G1.
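The pruning outcome in this example can be reproduced with a short sketch. The figure itself is not reproduced here, so the exact links are an assumption: R2 is taken to connect to R4 and R5, R3 to R4 and R6, and R4 is assumed to keep R2 as its upstream neighbor. The function and variable names are hypothetical.

```python
# Assumed downstream links for the Figure 4.9 example (not confirmed by the text).
LINKS = {
    "R1": ["R2", "R3"],
    "R2": ["R4", "R5"],
    "R3": ["R4", "R6"],
    "R4": [], "R5": [], "R6": [],
}
# Assumed upstream (reverse path) neighbor of each router toward source S.
RPF_PARENT = {"R1": None, "R2": "R1", "R3": "R1", "R4": "R2", "R5": "R2", "R6": "R3"}
# Routers with local G1 members: D2 behind R4 and D1 behind R5 (D3 is not a member).
MEMBERS = {"R4", "R5"}


def pruned_tree(links, rpf_parent, members):
    """Return the (upstream, downstream) links still forwarding after pruning."""

    def needs_traffic(router):
        # A router stays on the tree if it has local members or if any router
        # that uses it as its upstream neighbor still needs the traffic.
        if router in members:
            return True
        return any(
            rpf_parent[child] == router and needs_traffic(child)
            for child in links[router]
        )

    return sorted(
        (up, down)
        for up, downs in links.items()
        for down in downs
        # Links that are not on the reverse path (R3 to R4 here) carry
        # duplicates and are pruned; so are branches with no members below.
        if rpf_parent[down] == up and needs_traffic(down)
    )


print(pruned_tree(LINKS, RPF_PARENT, MEMBERS))
```

Under these assumptions R3 is left with no downstream router that needs the traffic, so it prunes itself from R1 as well, and only the branch through R2 remains.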

4.5.2 Sparse-mode PIM

Sparse-mode PIM is optimized for environments where there are many multipoint data streams, each of which goes to a relatively small number of the LANs in the internetwork. For these types of groups, flooding with reverse path forwarding, as used in dense mode, would make inefficient use of the network bandwidth. PIM-SM differs from PIM-DM in two essential ways: PIM-SM transmits periodic joins, whereas PIM-DM uses only explicit triggered grafts and prunes; and PIM-SM relies on a Rendezvous Point (RP), whereas PIM-DM has none. PIM-SM assumes that no hosts want the multicast traffic unless they specifically ask for it. The RP is used by senders to a multicast group to announce their existence and by receivers of multicast packets to learn about new senders. When a sender wants to send data, it first sends the data to the RP. When a receiver wants to receive data, it registers with the RP. Once the data stream begins to flow from sender to RP to receiver, the routers in the path automatically optimize the path to remove any unnecessary hops. PIM-SM is most useful when the following conditions occur:

- There are few receivers in a group.
- Senders and receivers are separated by WAN links.
- The stream of multicast traffic is intermittent.

Operations

PIM-SM is designed for sparse multicast regions. For a PIM-SM route to be established there must be a source, a receiver, and a rendezvous point. If any of these is missing, the data in the multicast routing table can be misleading. The basic concept is that multicast data are blocked unless a downstream router explicitly asks for them, which controls the amount of traffic that traverses the network. A router receives explicit join/prune messages from those neighboring routers that have downstream group members. The router then forwards data packets addressed to a multicast group, G1, only onto those interfaces on which explicit joins have been received.
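The explicit join model can be illustrated with a minimal sketch of a router's per-group outgoing interface list. The class `SparseModeRouter` and the interface names are hypothetical, and join timers are left out to keep the example short.

```python
from collections import defaultdict


class SparseModeRouter:
    """Hypothetical model: forward only where explicit joins were received."""

    def __init__(self):
        # group -> set of interfaces on which a join has been received
        self.joined = defaultdict(set)

    def receive_join(self, group, interface):
        self.joined[group].add(interface)

    def receive_prune(self, group, interface):
        self.joined[group].discard(interface)

    def outgoing_interfaces(self, group, incoming):
        # With no joins recorded for the group, nothing is forwarded at all.
        # The incoming interface is never used to send the packet back.
        return sorted(self.joined.get(group, set()) - {incoming})


router = SparseModeRouter()
print(router.outgoing_interfaces("G1", incoming="eth0"))  # no joins yet
router.receive_join("G1", "eth1")
router.receive_join("G1", "eth2")
print(router.outgoing_interfaces("G1", incoming="eth0"))
router.receive_prune("G1", "eth2")
print(router.outgoing_interfaces("G1", incoming="eth0"))
```

This is the opposite default from dense mode, where every downstream interface forwards until a prune arrives.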

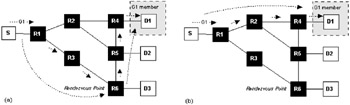

The basic PIM-SM operation is illustrated in Figure 4.10 and can be described as follows:

- A Designated Router (DR), in this case R1, sends periodic join/prune messages toward a group-specific Rendezvous Point (RP), in this case R6, for each group for which it has active members. The DR is responsible for sending triggered join/prune and register messages toward the RP.
- Each router along the path toward the RP builds and sends join/prune messages on toward the RP. Routers with local members join the group-specific multicast tree rooted at the RP (see Figure 4.10). The RP's route entry may include such fields as the source address, the group address, the incoming interface from which packets are accepted, the list of outgoing interfaces to which packets are sent, timers, flag bits, and so on.
- The sender's DR initially encapsulates multicast packets in register messages and unicasts them to the RP for the group (see Figure 4.10). The RP decapsulates each register message and forwards the data packet natively to downstream routers on the shared RP tree.
- A router with directly connected members, in this case R4, first joins the shared RP tree. The router can then initiate a join to the source's shortest-path tree (SPT) based on a threshold (see Figure 4.10).
- After the router receives multicast packets from both the source's SPT and the RP tree, PIM prune messages are sent upstream toward the RP to prune the RP tree. Multicast data from the source then flow only over the source's SPT toward group members (see Figure 4.10).

Figure 4.10: PIM sparse-mode operation. (a) Shows multicast delivery to the rendezvous point (router R6) for multicast group G1. (b) The resulting optimized multicast tree for group G1.
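The register step in this sequence can be sketched as follows. The types `DataPacket` and `RegisterMessage`, the `RendezvousPoint` class, and the addresses are hypothetical and model only the encapsulate, unicast, decapsulate, and forward sequence, not the PIM wire format.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DataPacket:
    source: str
    group: str
    payload: bytes


@dataclass(frozen=True)
class RegisterMessage:
    dr: str            # unicast sender: the source's designated router
    rp: str            # unicast destination: the rendezvous point
    inner: DataPacket  # the encapsulated multicast packet


def dr_encapsulate(packet, dr, rp):
    """The sender's DR wraps the multicast packet for unicast delivery to the RP."""
    return RegisterMessage(dr=dr, rp=rp, inner=packet)


class RendezvousPoint:
    def __init__(self, address):
        self.address = address
        self.shared_tree = {}  # group -> set of joined downstream interfaces

    def receive_join(self, group, interface):
        self.shared_tree.setdefault(group, set()).add(interface)

    def receive_register(self, message):
        """Decapsulate and return (interface, packet) pairs forwarded natively."""
        packet = message.inner
        return [(i, packet) for i in sorted(self.shared_tree.get(packet.group, ()))]


rp = RendezvousPoint("R6")
rp.receive_join("G1", "to-R4")
register = dr_encapsulate(DataPacket("S", "G1", b"hello"), dr="R1", rp="R6")
for interface, packet in rp.receive_register(register):
    print(interface, packet.group, packet.payload)
```

If no router has joined the shared tree for the group, the RP has nowhere to forward the decapsulated packet and the list is empty.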

The recommended policy is to initiate the switch to the source's SPT after receiving a significant number of data packets from a particular source during a specified time interval. To realize this policy, the router can monitor data packets from sources for which it has no source-specific multicast route entry and create such an entry when the data rate exceeds the configured threshold.
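A minimal sketch of this threshold policy is shown below, assuming a sliding time window per (source, group) pair. The class name `SptSwitchMonitor`, its parameters, and the packet sizes are illustrative assumptions rather than values taken from any PIM implementation.

```python
from collections import defaultdict, deque


class SptSwitchMonitor:
    """Hypothetical monitor that decides when to join a source's own tree."""

    def __init__(self, threshold_bps, window_s):
        self.threshold_bps = threshold_bps
        self.window_s = window_s
        self.samples = defaultdict(deque)  # (source, group) -> (time, bits)
        self.on_spt = set()                # pairs that already have an entry

    def packet_seen(self, source, group, size_bytes, now):
        """Record a packet; return True when the switch should be initiated."""
        key = (source, group)
        if key in self.on_spt:
            return False  # a source-specific entry already exists
        window = self.samples[key]
        window.append((now, size_bytes * 8))
        # Drop samples that have fallen out of the measurement interval.
        while window and window[0][0] <= now - self.window_s:
            window.popleft()
        rate_bps = sum(bits for _, bits in window) / self.window_s
        if rate_bps > self.threshold_bps:
            self.on_spt.add(key)
            del self.samples[key]
            return True
        return False


monitor = SptSwitchMonitor(threshold_bps=8000, window_s=1.0)
for t in (0.0, 0.5, 0.9):
    print(t, monitor.packet_seen("S", "G1", size_bytes=500, now=t))
```

Passing `float("inf")` as the threshold models a router that never leaves the shared tree, since no measured rate can exceed it.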

4.5.3 Design issues

The following guidelines are worth observing when using PIM:

- PIM-SM versus PIM-DM: PIM sparse mode is generally the preferred way to use PIM. PIM-SM is much more efficient, since it uses an explicit join model, so traffic flows only to where it is required and router state is created only along flow paths. PIM dense mode is simple to configure and useful for small multicast groups (perhaps for small pilot networks). For most applications the flood-and-prune behavior of PIM-DM is simply too inefficient. Unlike PIM-SM, PIM-DM does not support shared trees.
- Mixed-mode PIM: PIMv2 greatly simplifies the task of defining a group as sparse or dense. In PIMv1 the router determined the mode; with PIMv2 both sparse and dense groups can coexist on a router interface at the same time (sometimes referred to as PIM sparse-dense mode). With PIMv2 the mode is determined dynamically by testing for the presence of an RP (i.e., if an RP exists for the group, then the mode must be sparse; otherwise, the group operates in dense mode). In rare situations you may want to specifically configure an interface to be sparse or dense, for example, if you are an ISP or if you are connecting to another multicast cloud (i.e., two separate PIM clouds).
- Rendezvous point configuration: If available, use automated techniques to define RP(s), since this avoids configuring an RP on every router and enables simple RP assignment and reassignment. The location of RPs is not especially critical, since SPTs are normally used by default (traffic does not normally flow through the RP, so the RP should not be a bottleneck from the traffic perspective). The exception is where the traffic stays on the shared tree (SPT-Threshold = Infinity).
- Rendezvous point performance considerations: The RP has several resource-intensive tasks to perform and could become a bottleneck in this respect. The RP must process registers and shared-tree joins and prunes; it must also send periodic SPT joins toward the source. PIM routers typically perform the RPF recalculation every five seconds, and you should monitor CPU and memory utilization as the number of multicast routes expands. Overloaded RPs must either have system resources increased (CPU speed, RAM), or you may consider distributing the load across several RPs.
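The sparse-dense rule in the mixed-mode guideline (sparse if an RP exists for the group, dense otherwise) can be sketched as a lookup against a group-to-RP mapping. The function name `pim_mode`, the mapping format, and the addresses are assumptions for illustration; real routers learn or configure this mapping in implementation-specific ways.

```python
import ipaddress


def pim_mode(group, rp_map):
    """Return ("sparse", rp) if an RP covers the group, else ("dense", None).

    rp_map is a hypothetical dict of group-range prefix -> RP address.
    The first matching prefix wins; longest-match selection is not modeled.
    """
    address = ipaddress.ip_address(group)
    for prefix, rp in rp_map.items():
        if address in ipaddress.ip_network(prefix):
            return ("sparse", rp)
    return ("dense", None)


# Example mapping: one RP serving the 239.1.0.0/16 group range (assumed values).
rp_map = {"239.1.0.0/16": "10.0.0.6"}
print(pim_mode("239.1.2.3", rp_map))
print(pim_mode("239.2.0.1", rp_map))
```

One consequence of this rule is that losing the RP mapping for a group causes that group to fall back to flood-and-prune behavior.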

PIM-SM scales much better than PIM-DM because of its conservative approach to multicast delivery and its low demands on routing resources. PIM-SM is appropriate for wide-scale deployment for both densely and sparsely populated groups in the enterprise. PIM-DM is much more brutal, since it creates (S, G) state in every router, even when there are no receivers for the traffic, and can cause problems in some network topologies (traffic engineering is virtually impossible). In contrast, traffic engineering is possible with PIM-SM, since it uses shared trees rooted at different RPs. PIM-SM is also the basis for Multicast BGP (MBGP) and MSDP interdomain multicast routing [21].
