Interesting Applications

Let's now look at a few applications of Markov Chains and HMMs. The first example, speech recognition, is a practical method for determining which words were spoken. The following two examples are more thought-provoking than useful, but they give a better sense of what is possible with Markov Chains.

Speech Recognition

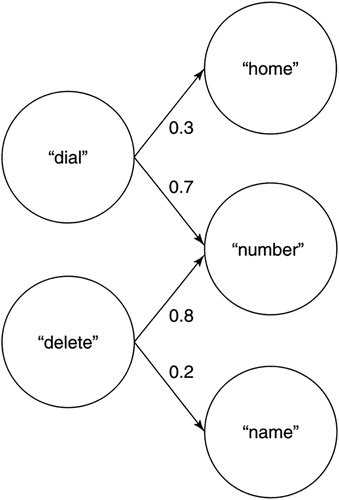

Recall from Figure 10.1 that the pronunciation of a word can take on one or more variations based upon the dialect or origin of the speaker. Building speech recognition systems then becomes very difficult, because the system must deal with a variety of pronunciations of a given word.

Hidden Markov Models provide the opportunity to simplify speech recognition systems through probabilistic parsing of the phonemes of speech. For example, let's say that a speech system is designed to understand a handful of words, two of which are "tomorrow" and "today." When the system first hears the "tah" phoneme, the spoken word can be either "tomorrow" or "today." The next phoneme parsed from the speech is "m"; the probability that the word being spoken is "today" is now 0. Given our HMM, the "m" phoneme is a legal state, so we transition and then process the next phoneme. Using the probabilities on the transitions, the speech recognizer can follow the most likely path through the chain and so identify the phoneme most likely to come next.
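The "tomorrow" versus "today" walk-through can be sketched in a few lines of Python. This is only a minimal illustration under stated assumptions: the phoneme spellings are invented for the example, each word has a single pronunciation, and every word is equally likely before any phoneme is heard. It is a prefix filter over a deterministic chain, not a full HMM decoder with emission probabilities.

```python
# Hypothetical phoneme spellings, invented for illustration only.
PRONUNCIATIONS = {
    "tomorrow": ["tah", "m", "ah", "r", "ow"],
    "today": ["tah", "d", "ey"],
}


def word_posteriors(heard, pronunciations=PRONUNCIATIONS):
    """Return P(word | phonemes heard so far).

    Assumes a uniform prior over words and exactly one pronunciation
    per word, so a word is either still consistent with the input or
    ruled out entirely.
    """
    heard = list(heard)
    # Words whose pronunciation starts with the phonemes heard so far.
    matches = [
        word
        for word, phones in pronunciations.items()
        if phones[: len(heard)] == heard
    ]
    return {
        word: (1.0 / len(matches) if word in matches else 0.0)
        for word in pronunciations
    }


after_first = word_posteriors(["tah"])        # both words still possible
after_second = word_posteriors(["tah", "m"])  # "today" is ruled out
```

After the first phoneme the two words share the probability evenly; after "m" the probability of "today" drops to 0, as described above. A real recognizer would replace the exact match with per-state emission and transition probabilities.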

This example can be extended from phonemes to words. Given a set of words that can be understood by a speech recognizer, a Markov Chain can be created that identifies the probabilities of a given word following another. This would allow the recognizer to better identify which words were spoken, based upon their context (see Figure 10.3).

Figure 10.3: Higher level speech recognizer Markov Chain.
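One way to picture the word-level chain is as a table of bigram probabilities that is combined with whatever score the acoustic front end assigns to each candidate word. The sketch below is hypothetical throughout: the vocabulary, the probabilities, and the `pick_word` helper are invented for illustration and are not taken from Figure 10.3.

```python
# Hypothetical bigram table P(next word | previous word); values are invented.
BIGRAM = {
    "see": {"you": 0.6, "ewe": 0.01},
    "you": {"tomorrow": 0.3, "today": 0.3},
}


def pick_word(previous, acoustic_scores, bigram=BIGRAM):
    """Choose the candidate that maximizes acoustic score * P(word | previous).

    acoustic_scores maps each candidate word to a score from the
    (assumed) phoneme-level recognizer.
    """
    context = bigram.get(previous, {})
    return max(
        acoustic_scores,
        key=lambda word: acoustic_scores[word] * context.get(word, 0.0),
    )


# "you" and "ewe" sound alike, so the acoustic scores tie;
# the preceding word "see" breaks the tie through the bigram table.
best = pick_word("see", {"you": 0.5, "ewe": 0.5})
```

Here the two candidates are indistinguishable by sound alone, and the context supplied by the previous word selects "you."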

It's clear from these examples that HMMs can greatly simplify tasks such as speech recognition and speech understanding. In the first example, phoneme inputs drove the state transitions within the model to define words. In the second, word inputs drove the transitions to provide a contextual understanding of a sentence. Next, we'll look at the use of HMMs to generate symbols based upon predefined or learned transition probabilities.

Modeling Text

In the previous examples, the Markov Chain was used to probabilistically identify the next state given the current state and an external stimulus. Let's now look at some examples where no external stimulus is provided: transitions between the states of the Markov Chain are based solely on the defined probabilities, as a random process.

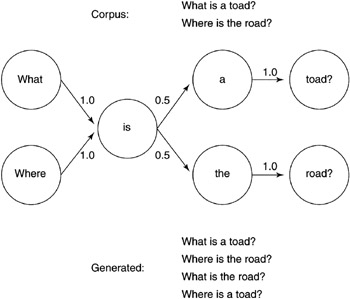

In Figure 10.4, an example Markov Chain is shown for two sample sentences. The Markov Chain is a product of these two sentences, using the bigram model.

Figure 10.4: Sample bigram from a corpus of seven unique words.

The only word that appears in both sentences of this corpus is "is." With equal probability, the word "is" can lead either to the word "a" or to the word "the." Note that this leads to four possible sentences that can be generated with the Markov Chain (shown at the bottom of Figure 10.4).
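To make the bigram idea concrete, here is a minimal Python sketch. The two sentences are hypothetical stand-ins chosen to match the description (seven unique words, with "is" the only word they share); they are not necessarily the sentences shown in Figure 10.4. The `<s>` and `</s>` markers are an added assumption used to mark where a sentence starts and ends.

```python
import random
from collections import defaultdict

# Hypothetical stand-ins for the two sample sentences.
SENTENCES = ["this is a test", "here is the example"]
START, END = "<s>", "</s>"


def build_bigram(sentences):
    """Count word pairs and convert the counts to transition probabilities."""
    counts = defaultdict(lambda: defaultdict(int))
    for sentence in sentences:
        tokens = [START] + sentence.split() + [END]
        for prev, nxt in zip(tokens, tokens[1:]):
            counts[prev][nxt] += 1
    return {
        prev: {word: c / sum(nxts.values()) for word, c in nxts.items()}
        for prev, nxts in counts.items()
    }


def generate(model, rng):
    """Walk the chain from START to END, sampling each transition."""
    word, out = START, []
    while True:
        choices = model[word]
        word = rng.choices(list(choices), weights=list(choices.values()))[0]
        if word == END:
            return " ".join(out)
        out.append(word)


model = build_bigram(SENTENCES)
rng = random.Random(0)  # fixed seed so runs are repeatable
samples = {generate(model, rng) for _ in range(200)}
```

With these sentences, `model["is"]` assigns probability 0.5 to each of "a" and "the," and repeated sampling produces the two original sentences plus the two hybrids formed by crossing over at "is."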

Modeling Music

In a similar fashion to words, consider training the HMM from a vocabulary of musical notes from a given composer [Zhang 2001]. The HMM could then be used to compose a symphony via the probabilistic generation of notes in the style of the given composer. Consider also training an HMM from a vocabulary drawn from two or more composers. With a large enough n-gram, symphonies could be arranged that combine the styles of great composers.
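The same approach extends to longer contexts. The sketch below builds an n-gram model over note names, where each state is the previous n-1 notes rather than a single note. The melody, the function names, and the choice of n = 3 are all assumptions made for illustration; the method described in [Zhang 2001] may differ.

```python
import random
from collections import defaultdict

# Hypothetical note sequence; not taken from any real composition.
MELODY = ["C", "D", "E", "C", "D", "G", "C", "D", "E", "F"]


def build_ngram(notes, n):
    """Map each (n-1)-note context to the notes observed after it."""
    model = defaultdict(list)
    for i in range(len(notes) - n + 1):
        context = tuple(notes[i : i + n - 1])
        model[context].append(notes[i + n - 1])
    return dict(model)


def compose(model, seed, length, rng):
    """Extend the seed one note at a time by sampling from the model.

    The seed must contain n-1 notes. Generation stops early if the
    current context was never seen in training.
    """
    out = list(seed)
    k = len(seed)
    while len(out) < length:
        followers = model.get(tuple(out[-k:]))
        if not followers:
            break
        # Repeated followers in the list make frequent continuations likelier.
        out.append(rng.choice(followers))
    return out


trigram = build_ngram(MELODY, 3)
tune = compose(trigram, ("C", "D"), 8, random.Random(1))
```

A larger n reproduces longer fragments of the training material, while a smaller n mixes sources more freely; training on notes from several composers would simply mean building the table from all of their sequences.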
