Chapter 10: The Bigram Model

In this chapter, we'll look at the Markov model (named after the Russian mathematician , Andrei Markov), and a specific variation called the bigram model. These models can be very useful in describing processes that encompass a number of states and the probabilities associated with paths through these states. A number of very interesting applications exist for these models. We'll investigate the use of the bigram model for the automatic generation of interesting text.

Introduction to Markov Models

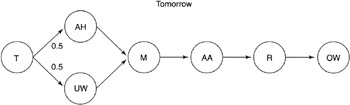

A Markov Chain is simply a process that consists of a number of states with probabilities attached to the transitions between the states. For example, consider Figure 10.1.

Figure 10.1: Example Markov Chain.

Figure 10.1 illustrates the pronunciation of the word "tomorrow." Two possible pronunciations are available within the diagram. The probability of the "t ah morrow" pronunciation is 0.5, while the probability of the alternate "t uw morrow" pronunciation is also 0.5.

This is a very simple case, involving a decision at a single point within the chain. Each state involves the production of a phoneme. At the end of the chain, a completed pronunciation is available. We'll look more at the purpose for this in the applications section.

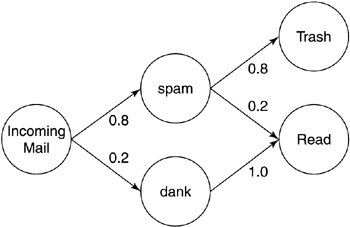

Next, let's look at a different application. Consider an email program that monitors the behavior of the user. When an email arrives, it observes what the user does with the email, and uses this information to learn how to automatically deal with subsequent emails. See the chain in Figure 10.2

Figure 10.2: Learning user interaction with an email program.

Our email agent has observed that 8 out of 10 emails are spam, 2 out of 10 are from user "dank." The email agent further observes that 80% of the time, we delete the spam email without reading it. The other 20%, we read the email. From these probabilities, the email agent can deduce that we're more likely to delete the email than to read it. Using these probabilities provides an opportunity for the email agent to simplify our task of managing email.

| Tip | In the examples shown here, a limited number of states and connections were defined. The Markov Chain can support a very large state space with very large numbers of connections to model very complex processes. |

An interesting property of both examples is that the current state is always a function of the previous state, given a connection probability. This is called the "Markov Property." Additionally, since in our examples, a state can be reached by more than one previous state (for example, the "Read" state from Figure 10.2), these models are known as Hidden Markov Models (HMMs) or Hidden Markov Chains.

EAN: 2147483647

Pages: 175