3.3 Documentation reviews

One of the two goals of the SQS is to facilitate the building of quality into the software products as they are produced. Documentation reviews provide a great opportunity to realize this goal. By maintaining a high standard for conducting and completing these reviews and establishing the respective baselines, the software quality practitioner can make significant contributions to the attainment of a quality software product.

Software quality practitioners play a central role in documentation reviews. They must make sure that the proper reviews are scheduled throughout the development life cycle. This includes determining the appropriate levels of formality, as well as the actual reviews to be conducted. The software quality practitioner also monitors the reviews to see that they are conducted and that defects in the documents are corrected before the next steps in publication or development are taken. In some cases, software quality itself is the reviewing agency, especially where there is no requirement for in-depth technical analysis. In all cases, the software quality practitioner will report the results of the reviews to management.

There are a number of types of documentation reviews, both formal and informal, that are applicable to each of the software documents. The appendixes present suggested outlines for most of the development documents. These outlines can be used as they are or adapted to the specific needs of the organization or project.

The most basic of the reviews is the peer walk-through. As discussed in Section 3.1, this is a review of the document by a group of the author's peers who look for defects and weaknesses in the document as it is being prepared. Finding defects as they are introduced avoids more expensive corrective action later in the SDLC, and the document is more correct when it is released.

Another basic document review is the format review or audit. This can be either formal or informal. When it is a part of a larger set of document reviews, the format audit is usually an informal examination of the overall format of the document to be sure that it adheres to the minimum standards for layout and content. In its informal style, little attention is paid to the actual technical content of the document. The major concern is that all required paragraphs are present and addressed. In some cases, this audit is held before or in conjunction with the document's technical peer walk-through.

A more formalized approach to the format audit is taken when there is no content review scheduled. In this case, the audit takes the form of a review and will also take the technical content into consideration. A formal format audit usually takes place after the peer walk-throughs and may be a part of the final review scheduled for shortly before delivery of the document. In that way, it serves as a quality-oriented audit and may lead to formal approval for publication.

When the format audit is informal in nature, a companion content review should evaluate the actual technical content of the document. There are a number of ways in which the content review can be conducted. First is a review by the author's supervisor, which generally is used when formal customer-oriented reviews, such as the PDR and CDR, are scheduled. This type of content review serves to give confidence to the producer that the document is a quality product prior to review by the customer.

A second type of content review is one conducted by a person or group outside the producer's group but still familiar enough with the subject matter to be able to critically evaluate the technical content. Also, there are the customer-conducted reviews of the document. Often, these are performed by the customer or an outside agency (such as an independent verification and validation contractor) in preparation for an upcoming formal, phase-end review.

Still another type of review is the algorithm analysis. This examines the specific approaches, called out in the document, that will be used in the actual solutions of the problems being addressed by the software system. Algorithm analyses are usually restricted to very large or critical systems because of their cost in time and resources. Such things as missile guidance, electronic funds transfer, and security algorithms are candidates for this type of review. Payroll and inventory systems rarely warrant such in-depth study.

3.3.1 Requirements reviews

Requirements reviews are intended to show that the problem to be solved is completely spelled out. Informal reviews are held during the preparation of the document. A formal review is appropriate prior to delivery of the document.

The requirements specification (see Appendix D) is the keystone of the entire software system. Without firm, clear requirements, there will be no way to determine if the software successfully performs its intended functions. For this reason, the informal requirements review looks not only at the problem to be solved, but also at the way in which the problem is stated. A requirement that says "compute the sine of x in real time" certainly states the problem to be solved: the computation of the sine of x. However, it leaves a great deal for the designer to determine, for instance, the range of x, the accuracy to which the value of sine x is to be computed, the dimension of x (radians or degrees), and the definition of real time.

Requirements statements must meet a series of criteria if they are to be considered adequate to be used as the basis of the design of the system. Included in these criteria are the following:

- Necessity;
- Feasibility;
- Traceability;
- Absence of ambiguity;
- Correctness;
- Completeness;
- Clarity;
- Measurability;
- Testability.

A requirement is sometimes included simply because it seems like a good idea; it may add nothing useful to the overall system. The requirements review will assess the necessity of each requirement. Feasibility goes hand in hand with necessity. A requirement may be thought to be necessary, but if it is not achievable, some other approach will have to be taken or some other method found to address the requirement. The necessity of a requirement is most often demonstrated by its traceability back to the business problem or business need that initiated it.

Every requirement must be unambiguous. That is, every requirement should be written in such a way that the designer or tester need not try to interpret or guess what the writer meant. Terms like usually, sometimes, and under normal circumstances leave the door open to interpretation of what to do under unusual or abnormal circumstances. Failing to describe behavior in all possible cases leads to guessing on the part of readers, and Murphy's Law suggests that the guess will be wrong a good portion of the time.
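A reviewer can screen requirement statements for such terms before the detailed reading begins. The following is a minimal sketch, not a prescribed tool: the function name `find_ambiguous_terms` and the watch list are illustrative assumptions, and only the three phrases named above are taken from the text. A real watch list would be defined by the reviewing organization.

```python
import re
from typing import List

# Assumed watch list: these three phrases are the ones named in the text.
# An organization would extend the list to suit its own standards.
AMBIGUOUS_TERMS = ["usually", "sometimes", "under normal circumstances"]


def find_ambiguous_terms(requirement: str) -> List[str]:
    """Return the watch-list phrases that occur in a requirement statement."""
    found = []
    for term in AMBIGUOUS_TERMS:
        # Word boundaries keep "usually" from matching inside "unusually".
        pattern = r"\b" + re.escape(term) + r"\b"
        if re.search(pattern, requirement, re.IGNORECASE):
            found.append(term)
    return found


if __name__ == "__main__":
    statement = "The system usually responds within 2 seconds."
    print(find_ambiguous_terms(statement))
```

A screen of this kind only flags candidate wording. Deciding whether the behavior in unusual or abnormal cases is actually specified still requires a human reviewer.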

Completeness, correctness, and clarity are all criteria that address the way a given requirement is stated. A good requirement statement will present the requirement completely; that is, it will present all aspects of the requirement. The sine of x example was shown to be lacking several necessary parts of the requirement. The statement also must be correct. If, in fact, the requirement should call for the cosine of x, a perfectly stated requirement for the sine of x is not useful. And, finally, the requirement must be stated clearly. A statement that correctly and completely states the requirement but cannot be understood by the designer is as useless as no statement at all. The language of the requirements should be simple, straightforward, and use no jargon. That also means that somewhere in the requirements document the terms and acronyms used are clearly defined.

Measurability and testability also go together. Every requirement will ultimately have to be demonstrated before the software can be considered complete. Requirements that have no definite measure or attribute that can be shown as present or absent cannot be specifically tested. The sine of x example uses the term real time. This is hardly a measurable or testable quality. A more acceptable statement would be "every 30 milliseconds, starting at the receipt of the start pulse from the radar." In this way, the time interval for real time is defined, as is the starting point for that interval. When the test procedures are written, this interval can be measured, and the compliance or noncompliance of the software with this requirement can be shown exactly.
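Once the interval and its starting point are stated, a test procedure can check recorded timestamps against them. The sketch below is one possible reading of the 30-millisecond statement: it assumes that the first output is due 30 ms after the start pulse and that each later output is due 30 ms after the previous one. The tolerance value and the function name `interval_violations` are assumptions; the example requirement does not state an allowed deviation, which is itself something a requirements review should catch.

```python
from typing import List, Tuple

PERIOD_MS = 30.0     # interval taken from the example requirement
TOLERANCE_MS = 1.0   # assumption: the requirement states no allowed jitter


def interval_violations(start_pulse_ms: float,
                        output_times_ms: List[float]) -> List[Tuple[int, float]]:
    """Return (index, measured interval) for each output that misses the period.

    The first interval is measured from the start pulse; each later interval
    is measured from the previous output.
    """
    violations = []
    previous = start_pulse_ms
    for index, timestamp in enumerate(output_times_ms):
        interval = timestamp - previous
        if abs(interval - PERIOD_MS) > TOLERANCE_MS:
            violations.append((index, interval))
        previous = timestamp
    return violations


if __name__ == "__main__":
    # Start pulse at t = 100 ms; the second output arrives 45 ms after the first.
    print(interval_violations(100.0, [130.0, 175.0, 205.0]))
```

An empty result shows compliance for the recorded run; any entry identifies the output at which the requirement was not met and by how much.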

The formal SRR is held at the end of the requirements phase. It is a demonstration that the requirements document is complete and meets the criteria previously stated. It also creates the first baseline for the software system. This is the approved basis for the commencement of the design efforts. All design components will be tracked back to this baseline for assurance that all requirements are addressed and that nothing not in the requirements appears in the design.

The purpose of the requirements review, then, is to examine the statements of the requirements and determine if they adhere to the criteria for requirements. For the software quality practitioner, it may not be possible to determine the technical accuracy or correctness of the requirements, and this task will be delegated to those who have the specific technical expertise needed for this assessment. Software quality or its agent (perhaps an outside contractor or another group within the organization) will review the documents for the balance of the criteria.

Each nonconformance to a criterion will be recorded along with a suggested correction. These will be returned to the authors of the documents, and the correction of the nonconformances tracked. The software quality practitioner also reports the results of the review and the status of the corrective actions to management.
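The recording and tracking just described can be kept in something as simple as a list of records. The following sketch assumes a hypothetical record layout (`Nonconformance`) and summary function (`status_summary`); the field names are illustrative and are not drawn from any particular defect-tracking tool.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Nonconformance:
    """One nonconformance found in a document review (hypothetical layout)."""
    document: str
    criterion: str              # the criterion violated, e.g. "Measurability"
    description: str
    suggested_correction: str
    closed: bool = False        # set to True once the correction is verified


def status_summary(records: List[Nonconformance]) -> Dict[str, int]:
    """Count total, open, and closed nonconformances for a management report."""
    open_count = sum(1 for record in records if not record.closed)
    return {
        "total": len(records),
        "open": open_count,
        "closed": len(records) - open_count,
    }


if __name__ == "__main__":
    log = [
        Nonconformance("Requirements specification", "Measurability",
                       "'Real time' is not defined.",
                       "State the interval and its starting point."),
        Nonconformance("Requirements specification", "Completeness",
                       "Range of x is not given.",
                       "State the permitted range of x.", closed=True),
    ]
    print(status_summary(log))
```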

3.3.2 Design reviews

Design reviews verify that the evolving design is both correct and traceable back to the approved requirements. Appendix E suggests an outline for the design documentation.

3.3.2.1 Informal reviews

Informal design reviews closely follow the style and execution of informal requirements reviews. Like the requirements, all aspects of the design must adhere to the criteria for good requirements statements. The design reviews go further, though, since there is more detail to be considered, as the requirements are broken down into smaller and smaller pieces in preparation for coding.

The topic of walk-throughs and inspections has already been addressed. These are in-process reviews that occur during the preparation of the design. They look at design components as they are completed.

Design documents describe how the requirements are apportioned to each subsystem and module of the software. As the apportionment proceeds, there is a tracing of the elements of the design back to the requirements. The reviews that are held determine if the design documentation describes each module according to the same criteria used for requirements.

3.3.2.2 Formal reviews

There are at least two formal design reviews, the PDR and the CDR. In addition, for larger or more complex systems, the organization standards may call for reviews with concentrations on interfaces or database concerns. Finally, there may be multiple occurrences of these reviews if the system is very large, critical, or complex.

The number and degree of each review are governed by the standards and needs of the specific organization.

The first formal design review is the PDR, which takes place at the end of the initial design phase and presents the functional or architectural breakdown of the requirements into executable modules. The PDR presents the design philosophy and approach to the solution of the problem as stated in the requirements. It is very important that the customer or user take an active role in this review. Defects in the requirements, misunderstandings of the problem to be solved, and needed redirections of effort can be resolved in the course of a properly conducted PDR.

Defects found in the PDR are assigned for solution to the appropriate people or groups, and upon closure of the action items, the second baseline of the software is established. Changes made to the preliminary design are also reflected as appropriate in the requirements document, so that the requirements are kept up to date as the basis for acceptance of the software later on. The new baseline is used as the foundation for the detailed design efforts that follow.

At the end of the detailed design, the CDR is held. This, too, is a time for significant customer or user involvement. The result of the CDR is the code-to design that is the blueprint for the coding of the software. Much attention is given in the CDR to the adherence of the detailed design to the baseline established at PDR. The customer or user, too, must approve the final design as being acceptable for the solution of the problem presented in the requirements. As before, the criteria for requirements statements must be met in the statements of the detailed design.

So that there is assurance that nothing has been left out, each element of the detailed design is mapped back to the approved preliminary design and the requirements. The requirements are traced forward to the detailed design, as well, to show that no additions have been made along the way that do not address the requirements as stated. As before, all defects found during CDR are assigned for solution and closure. Once the detailed design is approved, it becomes the baseline for the coding effort. A requirements traceability matrix is an important tool to monitor the flow of requirements into the preliminary and detailed design and on into the code. The matrix can also help show that the testing activities address all the requirements.
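A requirements traceability matrix can be represented in many ways. The sketch below assumes one simple form: a mapping from each requirement identifier to the elements that address it at each stage. The stage names, identifiers, and function names are hypothetical. The first function looks for requirements that are not carried forward into a stage; the second looks for elements at a stage that no requirement accounts for.

```python
from typing import Dict, List

# Assumed stage names; an organization would use its own.
STAGES = ["preliminary_design", "detailed_design", "code", "test"]

Matrix = Dict[str, Dict[str, List[str]]]


def untraced_requirements(matrix: Matrix) -> Dict[str, List[str]]:
    """Map each requirement ID to the stages where nothing traces to it."""
    gaps = {}
    for requirement_id, row in matrix.items():
        missing = [stage for stage in STAGES if not row.get(stage)]
        if missing:
            gaps[requirement_id] = missing
    return gaps


def unrequired_elements(matrix: Matrix, stage: str,
                        all_elements: List[str]) -> List[str]:
    """Return the elements of one stage that no requirement row references."""
    traced = set()
    for row in matrix.values():
        traced.update(row.get(stage, []))
    return sorted(set(all_elements) - traced)


if __name__ == "__main__":
    matrix = {
        "REQ-1": {"preliminary_design": ["PD-1"],
                  "detailed_design": ["DD-1", "DD-2"],
                  "code": ["mod_a"], "test": ["T-1"]},
        "REQ-2": {"preliminary_design": ["PD-2"],
                  "detailed_design": [], "code": [], "test": []},
    }
    print(untraced_requirements(matrix))
    print(unrequired_elements(matrix, "detailed_design",
                              ["DD-1", "DD-2", "DD-9"]))
```

In this illustration, REQ-2 has not yet reached the detailed design, and DD-9 is a detailed design element that traces to no requirement. Both are the kinds of findings the CDR is meant to surface.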

3.3.2.3 Additional reviews

Another review that is sometimes held is the interface design review. The purpose of this review is to assess the interface specification that will have been prepared if there are significant interface concerns on a particular project. The format and conduct of this review are similar to the PDR and CDR, but there is no formal baseline established as a result of the review. The interface design review will contribute to the design baseline.

The database design review also may be conducted on large or complex projects. Its intent is to ascertain that all data considerations have been addressed as the database for the software system has been prepared. This review will establish a baseline for the database, but it is an informal baseline, subordinate to the baseline from the CDR.

3.3.3 Test documentation reviews

Test documentation is reviewed to ensure that the test program will find defects and will test the software against its requirements.

The objective of the test program as a whole is to find defects in the software products as they are developed and to demonstrate that the software complies with its requirements. Test documentation is begun during the requirements phase with the preparation of the initial test plans. Test documentation reviews also begin at this time, as the test plans are examined for their comprehensiveness in addressing the requirements. See Appendix G for a suggested test plan outline.

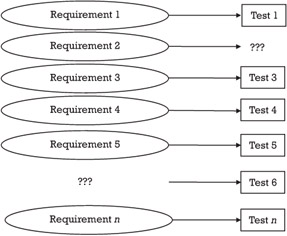

The initial test plans are prepared with the final acceptance test in mind, as well as the intermediate tests that will examine the software during development. It is important, therefore, that each requirement be addressed in the overall test plan. By the same token, each portion of the test plan must specifically address some portion of the requirements. It is understood that the requirements, as they exist in the requirements phase, will certainly undergo some evolution as the software development process progresses. This does not negate the necessity for the test plans to track the requirements as the basis for the testing program. At each step further through the SDLC, the growing set of test documentation must be traceable back to the requirements. The test program documentation must also reflect the evolutionary changes in the requirements as they occur. Figure 3.4 shows how requirements may or may not be properly addressed by the tests. Some requirements may get lost, some tests may just appear. Proper review of the test plans will help identify these mismatches.

Figure 3.4: Matching tests to requirements.
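The mismatches the figure illustrates can be checked mechanically if each test in the plan lists the requirements it addresses. The following sketch works from the test plan side and assumes hypothetical identifiers and a hypothetical function name, `match_tests_to_requirements`. It reports requirements that no test addresses (the "lost" requirements) and tests that cite no known requirement (the tests that "just appear").

```python
from typing import Dict, List, Tuple


def match_tests_to_requirements(
        requirement_ids: List[str],
        tests: Dict[str, List[str]]) -> Tuple[List[str], List[str]]:
    """Compare a test plan with the requirements it is meant to cover.

    `tests` maps each test ID to the requirement IDs the test cites.
    Returns (requirements with no test, tests citing no known requirement).
    """
    known = set(requirement_ids)
    covered = set()
    orphan_tests = []
    for test_id, cited in tests.items():
        valid = known.intersection(cited)
        if not valid:
            # The test cites nothing, or only IDs that are not requirements.
            orphan_tests.append(test_id)
        covered.update(valid)
    lost_requirements = sorted(known - covered)
    return lost_requirements, sorted(orphan_tests)


if __name__ == "__main__":
    requirements = ["R1", "R2", "R3"]
    plan = {"T1": ["R1"], "T2": ["R1", "R2"], "T3": [], "T4": ["R9"]}
    lost, orphans = match_tests_to_requirements(requirements, plan)
    print("Requirements with no test:", lost)
    print("Tests with no requirement:", orphans)
```

Because the requirements evolve during development, a check like this would need to be rerun against the current requirements baseline at each review.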

As the SDLC progresses, more of the test documentation is prepared. During each phase of the SDLC, additional parts of the test program are developed. Test cases (see Appendix H) with their accompanying test data are prepared, followed by test scenarios and specific test procedures to be executed. For each test, pass/fail criteria are determined, based on the expected results from each test case or scenario. Test reports (see Appendix I) are prepared to record the results of the testing effort.

In each instance, the test documentation is reviewed to ascertain that the test plans, cases, scenarios, data, procedures, reports, and so on are complete, necessary, correct, measurable, consistent, traceable, and unambiguous. In all, the most important criterion for the test documentation is that it specifies a test program that will find defects and demonstrate that the software requirements have been satisfied.

Test documentation reviews take the same forms as the reviews of the software documentation itself. Walk-throughs of the test plans are conducted during their preparation, and they are formally reviewed as part of the SRR. Test cases, scenarios, and test data specifications are also subject to walk-throughs and sometimes inspections. At the PDR and CDR, these documents are formally reviewed.

During the development of test procedures, there is a heavy emphasis on walk-throughs, inspections, and even dry runs to show that the procedures are comprehensive and actually executable. By the end of the coding phase, the acceptance test should be ready to be performed, with all documentation in readiness.

The acceptance test is not the only test with which the test documentation is concerned, of course. All through the coding and testing phases, there have been unit, module, integration, and subsystem tests going on. Each of these tests has also been planned and documented, and that documentation has been reviewed. These tests have been a part of the overall test planning and development process and the plans, cases, scenarios, data, and so on have been reviewed right along with the acceptance test documentation. Again, the objective of all of these tests is to find the defects that prevent the software from complying with its requirements.

3.3.4 User documentation reviews

User documentation must not only present information about the system, it must be meaningful to the reader.

The reviews of the user documentation are meant to determine that the documentation meets the criteria already discussed. Just as important, however, is the requirement that the documentation be meaningful to the user. The initial reviews will concentrate on completeness, correctness, and readability. The primary concern will be the needs of the user to understand how to make the system perform its function. Attention must be paid to starting the system, inputting data and interpreting or using output, and the meaning of error messages that tell the user something has been done incorrectly or is malfunctioning and what the user can do about it.

The layout of the user document (see Appendix K for an example) and the comprehensiveness of the table of contents and the index can enhance or impede the user in the use of the document. Clarity of terminology and avoidance of system-peculiar jargon are important to understanding the document's content. Reviews of the document during its preparation help to uncover and eliminate errors and defects of this type before they are firmly embedded in the text.

A critical step in the review of the user documentation is the actual trial use of the documentation, by one or more typical users, before the document is released. In this way, omissions, confusing terminology, inadequate index entries, unclear error messages, and so on can be found. Most of these defects are the result of the authors' close association with the system rather than outright mistakes. By having representatives of the actual using community try out the documentation, such defects are more easily identified and recommended corrections obtained.

The new software system may also change user work flow and tasks. To the extent that these are minor changes to input, control, or output actions using the system, they may be summarized in the user documentation. Major changes to behavior or responsibilities may require training or retraining.

Software products are often beta-tested. User documents should also be tested. Hands-on trial use of the user documentation can point out the differences from old to new processes and highlight those that require more complete coverage than will be available in the documentation itself.

3.3.5 Other documentation reviews

Other documents are often produced during the SDLC and must be reviewed as they are prepared.

In addition to the normally required documentation, other documents are produced during the software system development. These include the software development plan, the software quality system plan, the CM plan, and various others that may be contractually invoked or called for by the organization's standards. Many of these other documents are of an administrative nature and are prepared prior to the start of software development.

The software development plan (see Appendix A), which has many other names, lays out the plan for the overall software development effort. It will discuss schedules, resources, perhaps work breakdown and task assignment rules, and other details of the development process as they are to be followed for the particular system development.

The software quality system plan and the CM plan (see Appendixes B and C, respectively) address the specifics of implementing these two disciplines for the project at hand. They, too, should include schedule and resource requirements, as well as the actual procedures and practices to be applied to the project. There may be additional documents called out by the contract or the organization's standards as well.

If present, the safety and risk management plans (see Appendixes L and M) must undergo the same rigor of review as all the others. The software maintenance plan (see Appendix N), if prepared, is also to be reviewed.

Since these are the project management documents, it is important that they be reviewed at each of the formal reviews during the SLC, with modifications made as necessary to the documents or overall development process to keep the project within its schedule and resource limitations.

Reviews of all these documents concentrate on the basic criteria and completeness of the discussions of the specific areas covered. Attention must be paid to complying with the format and content standards imposed for each document.

Finally, the software quality practitioner must ascertain that all documents required by standards or the contract are prepared on the required schedule and are kept up to date as the SLC progresses. Too often, documentation that was appropriate at the time of delivery is not maintained as the software is maintained in operation. This leads to increased difficulty and cost of later modification. It is very important to include resources for continued maintenance of the software documentation, especially the maintenance documentation discussed in Chapter 8. To ignore the maintenance of the documentation will result in time being spent reinventing or reengineering the documentation each time maintenance of the software is required.
