Streams at a Glance

|

| < Day Day Up > |

|

The Streams technology lies on top of a queuing architecture. At its most basic, Streams is a methodology for gathering events, storing them in a queue, and then publishing those events to different subscribers. The power of this, from our perspective, is that Streams captures events from the redo log being produced by an Oracle database. As such, Streams has access to every database change that occurs, and can pass that information to another database-propagating, or replicating, the database changes, across the network to multiple databases.

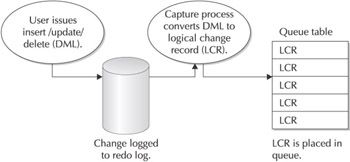

When Streams is configured, an Oracle background process, known as a capture process, will read through the redo stream and gather the DML and DDL statements that it finds. Streams converts these statements into a specific format called a logical change record (LCR). When it comes from the redo log, an LCR is an atomic row change, and it's important to note that a transaction can be made up of one or more LCRs, depending on how many rows are affected by the transaction. Once converted into an LCR, Streams stores the DML or DDL event in a queue table. From this queue table, the LCRs can be propagated to another queue table in the same database or a different database. Finally, an apply process translates the LCR and applies it to the corresponding database object in the new database. Figure 10-1 illustrates this model.

Figure 10-1: Streams translates the redo stream into LCRs in a queue table

What's important to note here is that Streams is turning DML and DDL operations from your database redo log into logical records that can be passed to another database. In the process of making the pass, you (as the DBA) are provided the opportunity to filter, manipulate, or change the logical record to meet your business demands. The power of this lies in your ability to very closely control how data is propagated from one database to another.

Hey, Isn't This What Advanced Replication Is For?

Yes. advanced replication does almost exactly what Streams does (although without all the control). Replication, however, is trigger-based; this means that if you want to replicate a particular object from one database to another, you must set up a trigger on the table to fire on each DML action. The trigger then adds the same row to a stage table. This stage table gets its contents pushed periodically to another database. This process can be very expensive.

Streams, on the other hand, utilizes the records about DML that are already being recorded in the redo log, so no further activity at the source database is required. You can turn on Streams replication for one object or the entire database. The workload falls almost entirely to the destination database, which must translate the redo logs from the source into LCRs and then queue/dequeue and apply them. So, Streams can replicate data in a much more efficient manner than advanced replication, in addition to providing a better level of control over the process.

Streams divides the workload into three separate operations (more or less): capture, propagation, and apply.

Streams Capture Process

The capture process is responsible for executing the first stage of a Streams configuration. The capture process must check the DML and DDL records in the redo log and evaluate them for possible capture. The evaluation process checks against the rule set that has been set up for the capture process. Rules are database objects that you set up to determine what type of change events you want to extract from the redo logs. Based on the rule set, the capture process extracts the appropriate changes and converts them into LCRs, and queues the LCR into the queue table.

Local vs. Downstream Capture

New to Oracle Database 10g, you can now move the capture process away from the source database. Up to this point, the capture had to take place at the source database. But Oracle Database 10g now gives you the ability to push the archivelogs from the source database to another database (in the same fashion as you would with a Data Guard configuration), and then initiate the capture process at the downstream location. In this way, you push the entire Streams environment away from the source database completely.

Streams Propagation

The capture process is responsible for placing the LCRs that it captures into a queue table. From the queue table, the LCRs can be consumed by an apply process, or they can be propagated to another queue. The other queue can be local or remote-remote being the key to using Streams for availability.

Propagation takes place via propagation jobs that you configure. Propagation jobs are just database jobs, detailed using DBMS_JOB, but they have certain characteristics that make them unique. The most important characteristic is that a propagation job can evaluate a rule set, in much the same way as the capture process, so that only certain LCRs are propagated to the next queue.

Streams Apply Process

After the capture process defines and enqueues the LCRs from the redo stream, and after the propagation job has moved the LCRs from the source database to the queue table at the destination database, it is time to do something. Ultimately, the LCR that represents a change to a table needs to be applied to the copy of the table at the destination. For this, Oracle has an apply process. The apply process reads the queue table, and based on its rule set, determines which of the LCRs it needs to consume. By consume, we mean take the LCR and apply the change to an object.

There is a considerable amount of flexibility and configurability built into the apply process of Streams. You can configure a rule set for apply (as you can for the capture process and propagation jobs) so that you have further filtering options. The apply process can use rules to determine if an LCR is applied directly to its associated object, or if you want to pass the LCR to an intermediary procedure. If you pass the LCR to a procedure, you can encode changes to the data, such as modifying a datatype or adding a value to a new column, before the LCR gets applied. As you might imagine, this provides an unparalleled way of morphing data from an OLTP database to a data warehouse, or to modify data to test a new application.

Rules, Rules, Rules

As you may have noticed, there's a lot of talk about rules in Streams. Everything obeys rules of operation, but for Streams, a rule is actually a specific thing. Literally, rules are objects that reside in the Oracle database. You use DBMS_RULES to define a rule, and to modify it. Rules are organized into rule sets. A rule set can be referenced by a capture process, a propagation job, or an apply process in order to determine which records will be used by the Streams configuration at each step of the process. The capture process uses a rule set to determine which records in the redo log are converted to LCRs and placed in the queue table. The propagation job uses a rule set to determine which LCRs in a queue are moved to the remote queue table. The apply process will reference a rule set to determine who will consume the LCRs in the queue table.

The same rule set can be referenced by the capture, propagation, and apply agent. Conversely, each capture, propagation, or apply agent can have more than one rule set. Rule sets are typically defined as positive rule sets, or negative rule sets. Positive rule sets indicate a set that informs the process of what to include when reviewing records. For example, a positive rule set will inform the capture process to capture all rows for the table woodscrew_orders. A negative rule set will inform the process of what should be excluded during a review of records. For instance, a negative rule set may tell the capture process to exclude row changes for woodscrew_orders table that have an order date prior to January 2002. In this example, then, the capture process is informed by its positive rule set to capture DML for woodscrew_orders, but the negative rule set then discards records that have an ORD_DATE < 01-01-2002.

Through rule creation, you can very closely control the exact nature of all data that gets propagated and applied via Streams. We will not delve too deep into all the possibilities, but understand that rules are the foundation of successful Streams replication.

|

| < Day Day Up > |

|

EAN: N/A

Pages: 134