Backing Up the Available Database

It's time to get into the meat of things, and that means taking backups of our database that emphasize availability. What does that mean, exactly? Well, backing up for availability requires that you envision your recovery requirements prior to establishing the backup strategy. It means looking at the different types of failures that your media recovery strategy must account for, and then preparing in advance for as fast a recovery as possible.

The High-Availability Backup Strategy

The HA backup strategy is based on a few guiding principles:

- A complete restore of the database must be avoided at all costs.
- Datafile recovery should take minutes, not hours.
- User errors should be dealt with using Flashback Technology, and not media recovery.
- Backup operations should have minimal performance drain on normal database operations.
- Backup maintenance should be automated to fit business rules.

When you consider these guiding principles, they lead us toward a specific configuration for our backups in the HA environment.

Whole Datafile Backups

The centerpiece of our backups must be, of course, backups of the Oracle datafiles. You can ignore your temporary tablespace temp files; RMAN does not consider them when backing up, and neither should you. They are built from scratch every time you open the DB anyway. Every other file needs a complete, whole backup taken.

RMAN Backupsets Using the backup Command

RMAN's specialty, up to Oracle Database 10g, was taking a backup in a format called the backupset. The backupset is an RMAN-specific output file that serves as a multiplexed catchall for all the datafiles of the database, streamed into a single backup piece (think of a backupset as a logical unit, like a tablespace; think of a backup piece as a physical unit, like the datafile). This is an extremely efficient method for getting a backup taken, and it minimizes the load by eliminating unused blocks. It is also the only way RMAN can back up to tape.

The only problem with backupsets is that, on restore, you have to stream the blocks from the backupset through the Oracle memory area, through input/output buffers, and then back to the datafile locations, and then rebuild any empty blocks from scratch. So the restore takes a little time. This is no big deal if you are restoring from tape; the tape restore is the bottleneck anyway.

We've spoken briefly about allocating multiple channels to run an RMAN backup. When two or more channels are allocated for a single backup operation, this is referred to as parallelization. RMAN breaks up the entire workload of the backup job into one relatively equal workload per channel, based on datafile size, disk affinity, and other factors. With two channels, for example, the two jobs run in parallel and create two separate backupsets. Datafiles cannot span backupsets, so if you allocate two channels to back up a single datafile, one channel will sit idle.

Parallelization increases the resource load used by RMAN during the backup, from disk I/O to memory to CPU, so the backup will run faster but also eat up more resources on your target database server. It also makes little sense to parallelize to a high degree if your backup location is serialized: say, you are backing up to a single disk, or to a single tape device. Particularly for tape backups, parallelizing channels to a single tape simply increases the bottleneck at the access point to the tape.
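To make the idea of "relatively equal workloads" concrete, here is a minimal Python sketch. It is not RMAN's actual algorithm, which is internal and also weighs disk affinity and other factors; it assumes size is the only criterion and uses a simple largest-first assignment. The function name, datafile names, and sizes are all hypothetical.

```python
# Illustrative only: RMAN's real distribution logic is internal and also
# considers disk affinity and other factors. This sketch balances on size alone.

def split_across_channels(datafile_sizes_mb, channel_count):
    """Assign each datafile to the least-loaded channel, largest file first.

    A datafile is never split, mirroring the rule that a datafile
    cannot span backupsets.
    """
    channels = [{"files": [], "total_mb": 0} for _ in range(channel_count)]
    largest_first = sorted(datafile_sizes_mb.items(), key=lambda kv: (-kv[1], kv[0]))
    for name, size in largest_first:
        target = min(channels, key=lambda ch: ch["total_mb"])
        target["files"].append(name)
        target["total_mb"] += size
    return channels


# Hypothetical datafile names and sizes (in MB).
sizes = {
    "system01.dbf": 500,
    "sysaux01.dbf": 400,
    "users01.dbf": 300,
    "undo01.dbf": 200,
}
two_channels = split_across_channels(sizes, 2)

# One datafile, two channels: the second channel has nothing to do.
single_file = split_across_channels({"big01.dbf": 4000}, 2)
```

In the second call the lone datafile lands on the first channel and the second stays empty, which is the "idle channel" case described above.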

Multiplexing, on the other hand, refers to the number of files that are included in a single backupset. During a backup of type backupset, RMAN will stream data from all the files included in the backup job into a single backupset. This leads to efficiencies at the time of the backup, as all files can be accessed at the same time. However, be cautious of multiplexing, as having a high degree of multiplexing can lead to problems during restore. Say you have a backupset with 10 files multiplexed together in the backupset. During restore of a single datafile, RMAN must read the entire backupset from tape into a memory structure to gather information about the single datafile. This means the network activity is extremely high, and the restore is very slow for a single file. Multiplexing does not apply to backups of type copy, as the backup product is a full-fledged copy of each datafile.

The moral of this story:

- Parallelize based on the number of backup locations available. If you have four tapes, allocate four channels.
- If you multiplex datafiles into the same backupset, attempt to multiplex files that would be restored together.

RMAN Datafile Copies to Disk

RMAN can also take copies of the Oracle datafiles. It still uses the memory buffers during the backup, to take advantage of corruption checking, but empty blocks are not eliminated, and the end result is a complete, block-for-block copy of the datafile. You can only do this to disk; it doesn't make sense to do it to tape because backupsets are far more efficient.

The benefit of datafile copies is that, given a restore operation, the copy can quickly be switched to by the Oracle database, instead of physically restoring the file to the datafile location. The restore goes from minutes (or hours) to mere seconds. Talk about a desirable feature for availability: we can begin to apply archivelogs immediately to get the file back up to date.

The problem, of course, is that you need the disk space to house a complete copy of the datafiles in your database. Of course, this is becoming less and less of a problem as Storage Area Networks (SANs) become commonplace, and disk prices continue to drop. But, given a multiterabyte database, using disk-based copies might prove impossible.

We recommend that in Oracle Database 10g, you take advantage of disk-based copies whenever possible. Using local datafile copies is the best way to decrease the mean time to recovery (MTTR), which is the goal of any HADBA. And creating them using RMAN means you have all the benefits: corruption checking, backup file management, block media recovery, and so forth. If you don't have space for copies of all your files, prioritize your files based on those that would be most affected by an extended outage, and get copies of those files. Send the rest directly to tape.

Incremental Backups

After you figure out how to get your full datafile copies taken, it's time to consider the use of incremental backups. RMAN provides the means to take a backup of only those data blocks that have changed since the last full backup, or the last incremental. An incremental strategy generates less backup output, which helps if you have limited disk or tape space. Using incremental backups during datafile recovery is typically more efficient than using archivelogs, so you usually improve your mean time to recovery as well.

If you have the ability to do so, taking full database backups every night is preferable to taking a full backup once a week, and then taking incrementals. The first step of any recovery will be to restore the last full datafile backup, and then begin the process of replacing blocks from each subsequent incremental backup. So if you have a recent full backup, recovery will be faster. Incremental strategies are for storage-minded DBAs who feel significant pressure to keep backups small due to the size of the database.

RMAN divides incremental backups into two levels: 0 and 1 (if you used incremental backups in RMAN9i and lower, levels 2-4 have been removed because they really never added much functionality). A level 0 incremental backup is actually a full backup of every block in the database; a level 0 is the prerequisite for any incremental strategy. Specifying a level 0 incremental, versus taking a plain old backup, means that RMAN records the incremental SCN: the marker by which all blocks will be judged for inclusion in an incremental backup. The incremental SCN is not recorded when level 0 is not specified when the backup is taken.

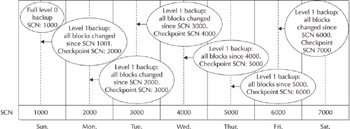

After you take your level 0 backup, you are free to take as many level 1 backups as you require. During a level 1 backup, RMAN determines if a block has been modified since the most recent incremental backup, whether that was the level 0 or an earlier level 1. If so, the block makes the pass from the input buffer to the output buffer, and ends up in the backup piece. When complete, the level 1 backup is a pile of blocks that, by itself, is only a subset of the actual datafile it represents (how large a subset depends on your database volatility, of course). Because the default is to check back to the last incremental backup, if you take a level 1, and then another level 1, the second will only back up blocks changed since the first level 1, instead of checking back to the level 0 (full). This is depicted in Figure 8-6.

Figure 8-6: Incremental backups for a week
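The selection rule can be sketched in a few lines of Python. This is a simplified model, not RMAN internals: each block is reduced to the SCN of its last change, and a block qualifies for the level 1 when that SCN is newer than the reference backup. The function name and all SCN values are hypothetical.

```python
def blocks_for_level1(block_scns, reference_scn):
    """Return the block numbers changed after the reference backup's SCN."""
    return sorted(blk for blk, scn in block_scns.items() if scn > reference_scn)


# Hypothetical SCNs for illustration.
level0_scn = 1000        # incremental SCN recorded by the level 0 backup
first_level1_scn = 1500  # SCN at which the first level 1 was taken

# Block number -> SCN of the block's most recent change.
block_scns = {1: 900, 2: 1200, 3: 1600, 4: 1700}

# Default behavior: the second level 1 checks back to the first level 1.
second_level1 = blocks_for_level1(block_scns, first_level1_scn)

# Checking back to the level 0 instead would pick up block 2 again.
since_level0 = blocks_for_level1(block_scns, level0_scn)
```

Block 2 changed before the first level 1 was taken, so it was already captured there and is left out of the second one; it only reappears when the comparison goes all the way back to the level 0.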

Incrementally Updated Backups

In Oracle Database 10g, Oracle introduces the ability to leverage incremental backups for fast recovery via incrementally updated backups. In a nutshell, RMAN now allows you to apply an incremental backup to a copy of the datafiles on an ongoing basis, so that the copy is always kept as recent as the last incremental backup. Theoretically, then, you could switch to the datafile copy, apply archivelogs since the last incremental backup, and be back in business.

The benefits are enormous: now you can mimic taking a new, full copy of the datafiles every night without the overhead of such an operation. You also extend the lifespan of your full datafile copy, as you can theoretically apply incremental backups to the copy forever without taking a new full copy of the file.

The requirements are minimal for incrementally updated backups:

- You need to tag your full, level 0 backup so that it can be specified for the incremental apply.
- You can only apply an incremental to a copy, and not a backupset.
- The incremental backup must be taken with the keywords 'for recover of copy.'

HA Workshop: Using Incrementally Updated Backups

Workshop Notes

This workshop assumes you configured your permanent parameters as they were outlined in the previous workshop. This will mean you have your default device type set to disk, and the default backup type is copy (instead of backupset). We also assume the presence of a flash recovery area to handle file naming, organization, and so forth.

Step 1. Take the full, level 0 backup. Be sure to tag it, as this is how we refer to it during the application of the incremental backup. Be sure there is enough space in the recovery area to house full copies of all your datafiles.

backup incremental level 0 tag 'INC_4_APPLY' database;

| Note | Because we set our default backup type to copy, and we set parallelism to 2, we will only be creating two file copies at a time in the preceding command. If you want your backup to access more datafiles at the same time, increase disk parallelism to a higher value. |

Step 2. Take the level 1 incremental backup. If this is production, you should schedule your level 1 to meet business needs. Every night is a very common approach. If you are merely testing incremental application at this time, be sure to run some DML changes against your database after making the level 0 backup, and before running the level 1. Switch the logfiles a few times, as well, to give some distance between the two backups.

backup incremental level 1 for recover of copy tag 'INC_4_APPLY' database;

Step 3. Apply the incremental backup to the datafile copies of your database.

recover copy of database with tag 'INC_4_APPLY';

After applying the first incremental level 1, you can script the incremental backup and the incremental application to the backup copy so that you apply the latest incremental backup immediately after taking it.
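As a conceptual illustration of why the copy stays current, the following Python sketch models a datafile as a mapping of block number to contents. It is a toy model under that assumption, not a description of how RMAN stores or merges blocks; the function names and block values are hypothetical.

```python
def take_level1(datafile, changed_blocks):
    """Model a level 1 backup: capture only the blocks that changed."""
    return {blk: datafile[blk] for blk in changed_blocks}


def recover_copy(copy, incremental):
    """Model the incremental apply: overlay changed blocks onto the copy."""
    rolled_forward = dict(copy)
    rolled_forward.update(incremental)
    return rolled_forward


# Step 1: the level 0 copy is a block-for-block image of the datafile.
datafile = {1: "a0", 2: "b0", 3: "c0"}
copy = dict(datafile)

# Night 1: block 2 changes; take the level 1 and apply it to the copy.
datafile[2] = "b1"
copy = recover_copy(copy, take_level1(datafile, {2}))

# Night 2: blocks 1 and 3 change; repeat the same two steps.
datafile[1] = "a1"
datafile[3] = "c1"
copy = recover_copy(copy, take_level1(datafile, {1, 3}))

copy_is_current = copy == datafile
```

After each cycle the copy matches the datafile as of the latest incremental, so only the archivelogs generated since then remain to be applied after a switch.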

Using Block Change Tracking for Better Incremental Performance

If you do decide to use incremental backups in your recovery strategy, you should take note of a new performance feature for incrementals that has been introduced in Oracle Database 10g. This new feature is called block change tracking, and it refers to an internal Oracle mechanism that, when switched on, will keep track of all blocks being modified since the last level 0 backup. When the incremental level 1 backup is taken, RMAN looks at the block change tracking file to determine which blocks to back up.

Prior to Oracle Database 10g, the only way that RMAN could determine which data blocks to include in a level 1 incremental backup was to read every block in the database into an input buffer in memory and check it. This led to a common misconception about incremental backups in RMAN: that they would go significantly faster than full backups. This simply didn't hold up to scrutiny, as RMAN still had to read every block in the database to determine its eligibility, so it was reading as many blocks as the full backup.

With block change tracking, the additional I/O overhead is eliminated almost entirely, as RMAN can now read only those blocks required for backup. Now, there is a little bit of robbing Peter to pay Paul, in the sense that block change tracking incurs a slight performance degradation for the database. But if you plan on using incremental backups, chances are you can spare the CPU cycles for the increased backup performance and backup I/O decrease.

To enable block change tracking, you simply do the following:

ALTER DATABASE ENABLE BLOCK CHANGE TRACKING;

That form relies on Oracle Managed Files to name and place the tracking file, so DB_CREATE_FILE_DEST must be set. Alternatively, you can specify a file for change tracking yourself:

ALTER DATABASE ENABLE BLOCK CHANGE TRACKING USING FILE '/dir/blck_change_file';

You turn off change tracking like so:

ALTER DATABASE DISABLE BLOCK CHANGE TRACKING;

It should be noted that after you turn change tracking on, it does not have any information about block changes until the next level 0 backup is taken.

Backing Up the Flash Recovery Area

With datafile copies, incremental backups, and archivelogs piling up in the flash recovery area, it's time to start thinking about housekeeping operations. First, though, it's important to recognize the powerful staging functionality that the FRA provides. With all backups first appearing in the FRA, the process of staging those backups to tape has been greatly simplified.

RMAN now comes equipped with a command to back up the recovery area:

backup recovery area;

This backs up all recovery files in the recovery area: full backups, copies, incrementals, controlfile autobackups, and archivelogs. Block change tracking files, current controlfiles, and online redo logs are not backed up. This command can only back up to channel type SBT (tape), so it will fail if you do not have tape channels configured. This is why we recommended them in the HA Workshop 'Setting Permanent Configuration Parameters for High-Availability Backup Strategy.' For more on tape backups, see the section 'Media Management Considerations.'

Alternatively, if you want the same functionality but you do not use a flash recovery area, you can use

backup recovery files;

This has the same effect: full backups, copies, incrementals, controlfile autobackups, and archivelogs are backed up. This can only go to tape, so have SBT channels configured.

Backup Housekeeping

More often than not, backups are never used. This is a good thing. However, we leave quite a trail of useless files and metadata behind us as we move forward with our backup strategy. So we must consider housekeeping chores as part of any serious HA backup strategy. Outdated backups clog up our flash recovery area and take up needed space on our tapes, and the metadata inexorably builds up in our RMAN catalog, making reports less and less useful with every passing day. Something must be done.

Determine, Configure, and Use Your Retention Policy

Lucky for us, that something became much simpler in Oracle Database 10g. Backup maintenance had already become simple in Oracle9i when a retention policy was properly configured. The retention policy refers to the rules by which you dictate which backups are obsolete, and therefore eligible for deletion. RMAN distinguishes between two mutually exclusive types of retention policies: redundancy and recovery windows.

Redundancy policies determine if a backup is obsolete based on the number of times the file has been backed up since. Therefore, if you set your policy to REDUNDANCY = 1 and then back up the database, you have met your retention policy. The next time you back up your database, all previous backups are obsolete, because the newest backup alone satisfies the policy. Setting redundancy to 2, then, would mean that you keep the last two backups, and all backups prior to that are obsolete.

The recovery window refers to a time frame in which all backups will be kept, regardless of how many backups are taken. The recovery window is anchored to sysdate, and therefore is a constantly sliding window of time. So, if you set your policy to a 'Recovery Window of 7 Days,' then all backups less than seven days old would be retained. RMAN also retains the most recent backup taken before the window began, because that backup is the starting point for recovering to the earliest days in the window; anything older than that one is marked for deletion. As time rolls forward, new backups are taken and older backups become obsolete.

You cannot have both retention policies, so choose one that best fits your backup practices and business needs. There is a time and a place for both types, and it always boils down to how you view your backup strategy: is it something that needs redundancy to account for possible problems with the most recent backup, or do you view a backup strategy as defining how far back in time you can recover?
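The difference between the two policies is easy to see in a small Python sketch. It is a simplified model under stated assumptions: only full backups are considered, each backup is reduced to its completion date, and archivelogs and incrementals are ignored. The recovery window function keeps the newest backup that predates the window, since that backup is needed to recover to the start of the window. Function names and dates are hypothetical.

```python
from datetime import date, timedelta


def obsolete_by_redundancy(backup_dates, redundancy):
    """Everything beyond the newest `redundancy` backups is obsolete."""
    newest_first = sorted(backup_dates, reverse=True)
    return sorted(newest_first[redundancy:])


def obsolete_by_recovery_window(backup_dates, today, window_days):
    """Keep backups inside the window plus the newest one that predates it."""
    window_start = today - timedelta(days=window_days)
    at_or_before_start = [d for d in backup_dates if d <= window_start]
    if not at_or_before_start:
        return []  # no backup is old enough to anchor the window yet
    anchor = max(at_or_before_start)
    return sorted(d for d in backup_dates if d < anchor)


# Hypothetical backup history: full backups on these days of January 2004.
today = date(2004, 1, 20)
backups = [date(2004, 1, day) for day in (5, 10, 12, 15, 19)]

redundancy_2 = obsolete_by_redundancy(backups, 2)
window_7_days = obsolete_by_recovery_window(backups, today, 7)
```

With redundancy 2, only the January 15 and 19 backups survive. With a seven-day window starting January 13, the January 12 backup is also kept, because recovering to January 13 or 14 has to start from it.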

After you determine what policy you need, configuration is easy: use the configure command from the RMAN prompt and set it in the target database controlfile. By default, the retention policy is set to redundancy 1.

CONFIGURE RETENTION POLICY TO REDUNDANCY 1; # default

You change it with simple verbiage:

RMAN> CONFIGURE RETENTION POLICY TO RECOVERY WINDOW OF 7 DAYS;
new RMAN configuration parameters:
CONFIGURE RETENTION POLICY TO RECOVERY WINDOW OF 7 DAYS;
new RMAN configuration parameters are successfully stored

After you configure the policy, it is simple to use. You can list obsolete backups and copies using the report command, and then when you are ready, use the delete obsolete command to remove all obsolete backups. Note that RMAN will always ask you to confirm the deletion of files:

RMAN> report obsolete;
RMAN> delete obsolete;
RMAN retention policy will be applied to the command
RMAN retention policy is set to redundancy 1
using channel ORA_DISK_1
Deleting the following obsolete backups and copies:
Type                 Key    Completion Time    Filename/Handle
-------------------- ------ ------------------ --------------------
Backup Set           11     12-JAN-04
Backup Piece         9      12-JAN-04          /U02/BACKUP/0DFBCJ5H_1_1
Do you really want to delete the above objects (enter YES or NO)?

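You can automate the delete obsolete command, so that a job can perform the action at regular intervals and save you the effort. To do so, include the noprompt keyword to suppress the confirmation prompt. The force keyword, also used here, tells RMAN to go ahead and remove the repository records even if a file cannot be found on the media: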

delete force noprompt obsolete;

Retention Policies and the Flash Recovery Area

The flash recovery area is self-cleaning. (You know, like a cat, only without those disturbing leg-over-the-head in-front-of-the-television moments.) What this means is that as files build up in the FRA and storage pressure begins to appear, the FRA will age out old files that have passed the boundaries of the retention policy you implemented. Take an example where you have a retention policy of redundancy 1. You take a full database backup on Monday. On Tuesday, you take a second full database backup. On Wednesday, you take a third full database backup. However, there is not room for three full backups in the FRA. So the FRA checks the policy, sees that it only requires one backup, and deletes the backup taken on Monday, and the Wednesday backup completes.

An important note about this example: with redundancy set to 1, you still must have enough space in your FRA for two whole database backups. This is because RMAN will not delete the only backup of the database before it writes the new one, because the possibility exists for a backup failure and then you have no backups. So before the Monday backup is truly obsolete, the Tuesday backup has to exist in its entirety, meaning you have two backups.
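The Monday-to-Wednesday example, and the two-backup space requirement, can be modeled with a short Python sketch. It is a deliberately simplified model, not the FRA's actual space management: backups are (name, size) pairs, only completed backups count toward redundancy, and the oldest reclaimable backup is aged out first. The function name, sizes, and limits are hypothetical.

```python
def add_backup(fra, name, size_mb, limit_mb, redundancy=1):
    """Return the FRA contents after writing a backup, or raise if it cannot fit.

    `fra` is a list of (name, size_mb) completed backups, oldest first.
    """
    kept = list(fra)
    # The newest `redundancy` completed backups are protected; only the
    # ones older than that may be aged out to make room.
    reclaimable = len(kept) - redundancy
    while sum(size for _, size in kept) + size_mb > limit_mb:
        if reclaimable <= 0:
            raise RuntimeError("cannot reclaim enough space for " + name)
        kept.pop(0)  # age out the oldest obsolete backup
        reclaimable -= 1
    return kept + [(name, size_mb)]


# Room for two 400 MB backups but not three: Monday is aged out on Wednesday.
fra = []
for day in ("monday", "tuesday", "wednesday"):
    fra = add_backup(fra, day, 400, limit_mb=1000)
names = [name for name, _ in fra]

# Room for only one backup: Tuesday fails, because Monday is still the
# only completed backup and cannot be deleted first.
small_fra = add_backup([], "monday", 400, limit_mb=700)
try:
    add_backup(small_fra, "tuesday", 400, limit_mb=700)
    tuesday_failed = False
except RuntimeError:
    tuesday_failed = True
```

The second scenario is the reason an FRA sized for exactly one backup is not enough under redundancy 1.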

If the FRA fills up with files, but no files qualify for deletion based on your retention policy, the backup that is currently underway will fail with an error:

RMAN-03009: failure of backup command on ORA_DISK_2 channel at 11/04/2003 15:44:56
ORA-19809: limit exceeded for recovery files
ORA-19804: cannot reclaim 471859200 bytes disk space from 1167912960 limit
continuing other job steps, job failed will not be re-run

A full FRA can be a far more serious problem than just backup failures. If you set up your FRA as a mandatory site for archivelog creation, then the ARCH process can potentially hang your database waiting to archive a redo log to a full location. By all estimations, it behooves the HADBA to keep the FRA open for business.

Stage the Flash Recovery Area to Tape

So our goal is to keep a copy of our database in the FRA, as well as maintain our retention policy that requires more than a single backup of the database be available for restore. The way to accomplish this, of course, is to back up the FRA to tape. The retention policy that you configure applies to all backups, whether they be on disk or tape. However, if you use the FRA as a staging area that is frequently moved to tape, you will never be required to clean up the FRA. Your maintenance commands will always be run against the backups on tape. When you back up the recovery area, you free up the transient files in the FRA to be aged out when space pressure occurs, so the errors noted above don't impair future backups or archivelog operations.

Take our script from the HA Workshop 'Using Incrementally Updated Backups,' and modify it to include an FRA backup and a tape maintenance command to delete obsolete backups from tapes (remember, our retention policy is set to a recovery window of seven days):

backup incremental level 1 for recover of copy tag 'INC_4_APPLY' database;
recover copy of database with tag 'INC_4_APPLY';
backup recovery area;
delete obsolete device type sbt;
